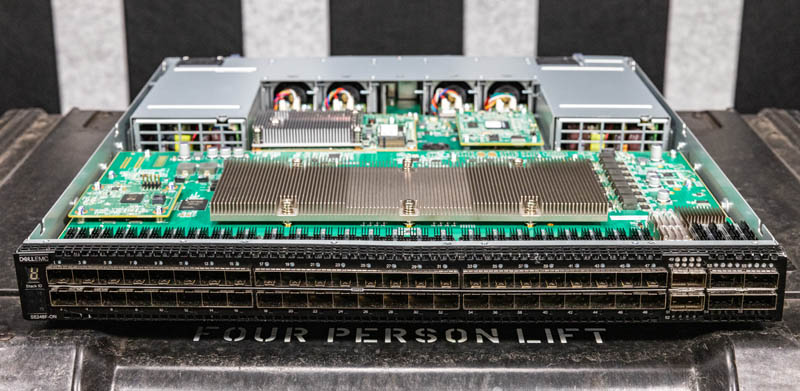

It is time to take a look at another switch, and this time we are moving to the more mainstream, lower-end part of the Dell EMC S5200 series with the Dell EMC S5248F-ON. This switch provides 48x 25GbE ports, four 100GbE ports, and two ports that offer 200GbE speeds. As a result, it is designed for in-rack 25GbE connectivity. Let us get into the switch.

Dell EMC Networking S5248F-ON Hardware Overview

As we have been doing with server reviews, we are going to split this into an external and then an internal hardware overview. We also have a video review of this switch on YouTube.

Dell S5248F-ON External Hardware Overview

First, the Dell EMC Networking S5248F-ON is a 1U platform, making it the same form factor as the previous-generation Dell S5148F-ON we reviewed and half the height of the 2U Dell S5296F-ON, which doubles the 25GbE port count of the 48-port switches to 96.

The 48x SFP28 25GbE ports take up most of the front of the unit.

A quick note: the unit in our photos and B-roll is a damaged switch. We have one of these switches running in our lab, but this damaged unit was the one we had in the studio for photography.

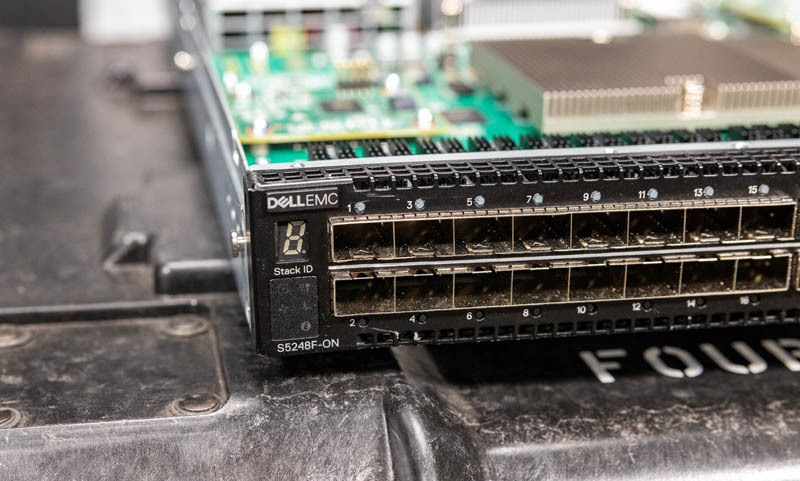

Starting at the front of the switch, on the left, we have the stack ID LCD as well as status lights. These are common features of Dell switches. The one difference is that the S5296F-ON also puts ports such as the serial console and out-of-band management port here on the front. This switch is more like the S5232F-ON, with those ports on the rear.

In the middle of the switch, we get 48x SFP28 25GbE ports. These are commonly used to connect to servers within a rack.

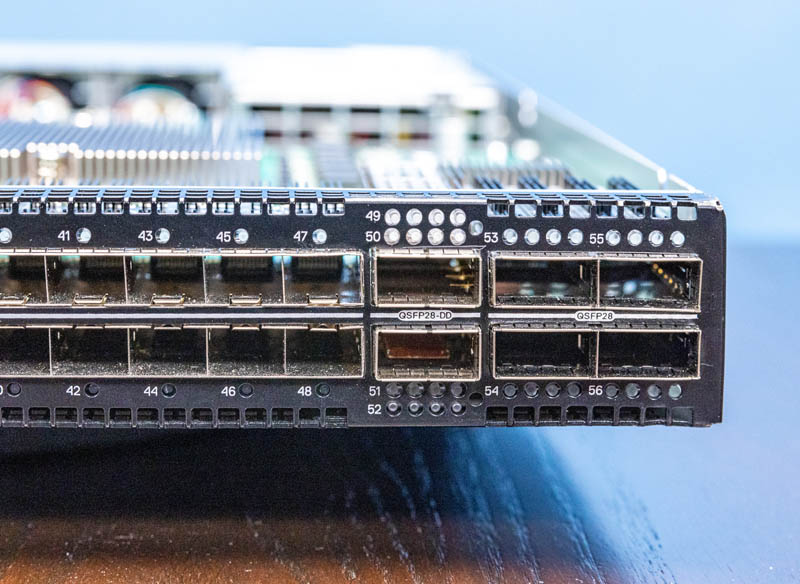

Unlike the S5232F-ON, the S5248F-ON does not have the two 10GbE SFP+ ports that would typically be used for higher-speed management duties. Instead, we get six high-speed ports. Two are QSFP28-DD ports that provide 200Gbps of bandwidth each. Each of these ports can break out into two 100GbE ports, which is why they are labeled 49/50 and 51/52 and have LED status lights for four 100GbE connections.
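
The 49/50 and 51/52 labels follow a simple pattern: numbering continues from the last SFP28 port (48), and each 200Gbps cage exposes two 100GbE interfaces. The minimal sketch below models that pattern, assuming strictly sequential numbering; the function `dd_breakout_labels` and its parameters are hypothetical illustrations, not part of any Dell or Cumulus tooling.

```python
# Minimal sketch of the QSFP28-DD breakout numbering seen on the front panel.
# Assumption: interface numbers continue sequentially after the 48 SFP28 ports,
# and each QSFP28-DD cage breaks out into two 100GbE interfaces.

SFP28_PORTS = 48        # 25GbE server-facing ports, numbered 1-48
DD_CAGES = 2            # QSFP28-DD cages, 200Gbps each
LANES_PER_DD_CAGE = 2   # each cage breaks out into two 100GbE interfaces


def dd_breakout_labels(first_port: int = SFP28_PORTS + 1,
                       cages: int = DD_CAGES,
                       per_cage: int = LANES_PER_DD_CAGE) -> list[list[int]]:
    """Return the interface numbers printed above each QSFP28-DD cage."""
    labels = []
    for cage in range(cages):
        start = first_port + cage * per_cage
        labels.append(list(range(start, start + per_cage)))
    return labels


if __name__ == "__main__":
    for cage_labels in dd_breakout_labels():
        # Prints one label per cage, e.g. "49/50"
        print("/".join(str(n) for n in cage_labels))
```

Summing the interfaces across both cages gives the four 100GbE connections that the status LEDs reflect.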

Next to the QSFP28-DD ports, we have four QSFP28 100GbE ports. That gives us 800Gbps of uplink bandwidth and 1.2Tbps of 25GbE server connectivity. One item to note: the last time we checked, the QSFP28-DD ports were only supported in 100GbE mode with Cumulus.
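
The bandwidth figures follow from simple port arithmetic, sketched below. Port counts and speeds come from the front-panel description above; the dictionary layout and the `total_gbps` helper are illustrative assumptions. The 1.5:1 server-to-uplink ratio it also prints (often called oversubscription) is just the server-facing total divided by the uplink total, a derived number rather than a Dell specification.

```python
# Reproduce the uplink and server-facing bandwidth totals for the S5248F-ON.
# Port counts and speeds are taken from the front-panel description;
# the server-to-uplink ratio is derived from them.

PORTS = {
    # name: (count, speed in Gbps)
    "SFP28 (server-facing)": (48, 25),
    "QSFP28-DD (uplink)": (2, 200),
    "QSFP28 (uplink)": (4, 100),
}


def total_gbps(*names: str) -> int:
    """Sum count * speed for the named port groups."""
    return sum(PORTS[n][0] * PORTS[n][1] for n in names)


uplink = total_gbps("QSFP28-DD (uplink)", "QSFP28 (uplink)")  # 2*200 + 4*100
server = total_gbps("SFP28 (server-facing)")                   # 48*25
ratio = server / uplink                                         # 1200 / 800

print(f"Uplink: {uplink} Gbps")
print(f"Server-facing: {server / 1000} Tbps")
print(f"Server-to-uplink ratio: {ratio}:1")
```

Running it prints 800 Gbps of uplink, 1.2 Tbps of server-facing bandwidth, and a 1.5:1 ratio.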

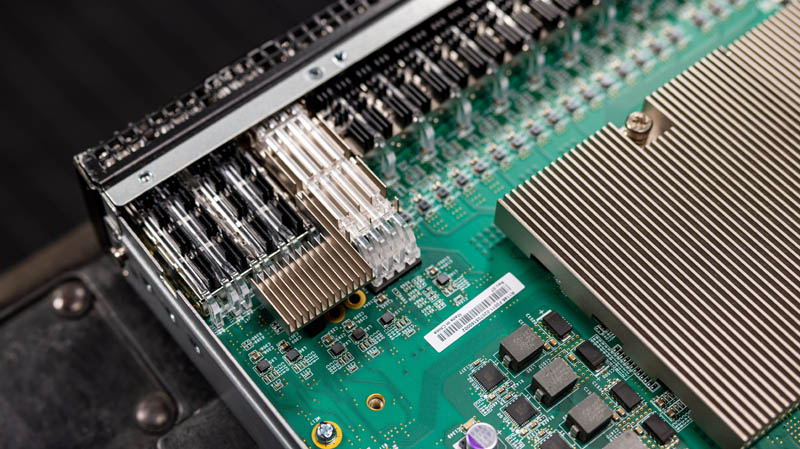

One other small feature: while the SFP28 and QSFP28 cages have the same heatsinks we have seen on other Dell switches, the QSFP28-DD ports get an extra small heatsink for additional cooling.

Here is another look at that heatsink, which is needed to cool the higher-power optics that the 200Gbps ports can use.

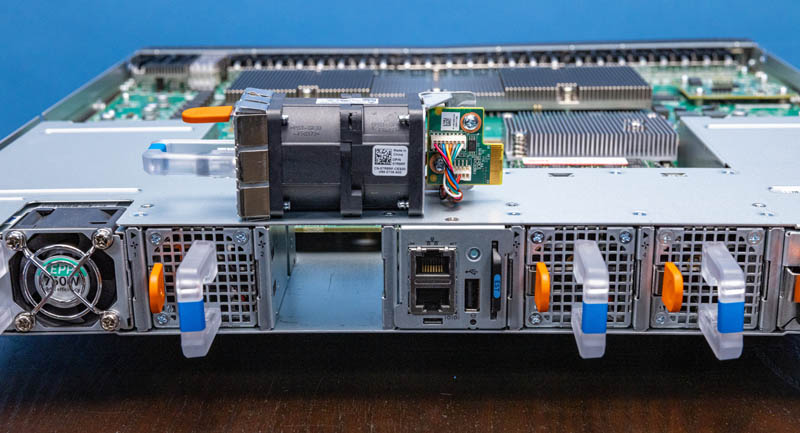

Moving to the rear of the switch, we get a layout similar to the S5232F-ON, and different from the S5296F-ON.

In the Dell S5232F-ON and previous generations such as the Dell S5148F-ON, the service ports are located at the rear, while the S5296F-ON has them on the front.

The four dual-fan modules are hot-swappable. Dell offers the switch in both port-to-PSU and PSU-to-port airflow versions. This unit uses PSU-to-port airflow.

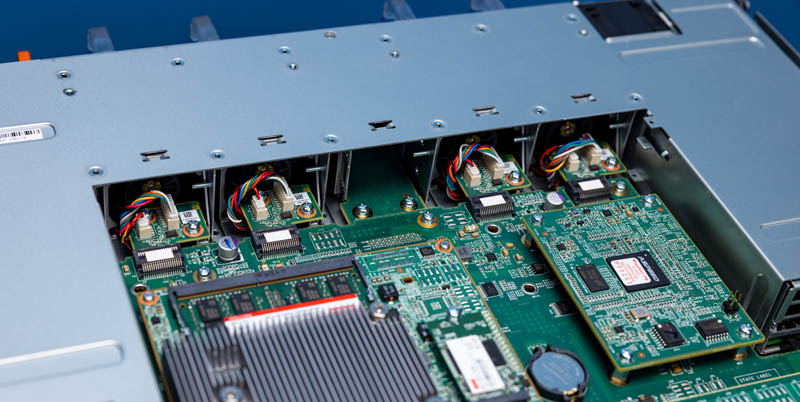

Here is a view of how the fans plug into the switch from the other side.

As for power supplies, we get two 750W 80 PLUS Platinum PSUs.

Again, these are similar to what we saw on the S5232F-ON.

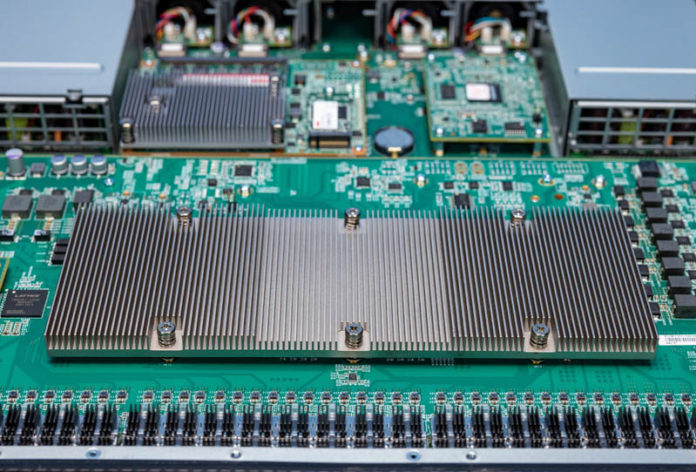

Next, let us get to our internal overview of the switch.
