STH is now embarking on year 7 of its journey. On June 8, 2009, ServeTheHome was launched with a simple 322-word post. Frankly, back then it was an extremely simple WordPress site on $9/mo Dreamhost shared hosting versus today’s multiple datacenter plus AWS setup. It was my second attempt at a WordPress site and was really focused on the fact that I wanted to build a storage server. I purchased a few RAID controllers off of eBay along with some 15k rpm Seagate SAS drives pushing over 200MB/s and was off and running. It was at least a year later before I realized STH would be anything more than a simple hobby site. It took three years until I could even think of making a stretch goal about STH becoming a large enterprise technology site. The last year was an eventful one on the journey, as evidenced by the sheer magnitude of change and complexity we have added. Here are some of the changes over the past year and some of the future focus areas we have.

Big Changes in the last Year

2014 saw many major changes to STH. Here is a quick recap of some of the high points.

- Growth: Growth of the STH forum over the past year has been explosive. Today it is about 4x as active as it was just one year ago. The main site has continued to grow at a very steady pace as well.

- Additional Contributors: We have seen more than a half dozen STH community members contribute to new content for the STH main site over the past 12 months. Beyond this the number of experts sharing insights in the forums has increased steadily over the last year.

- Architecture: we have moved from a single site 1/4 cabinet to 1.5 cabinets in two sites. This has allowed us to start developing new tools for STH and have more flexibility with hosting the site. Recently we transitioned the site from CentOS to Ubuntu and from Hyper-V to KVM and Docker.

- Speed: Many of the changes have cut load times by 40% year over year. Multiply that by millions of visitors and we have given back over 300 days to our visitors this year simply by making the site faster.

- Breadth: We have increased the number of vendors whose products we have reviewed by 50% over the past year. With the new datacenter lab, that growth is continuing.

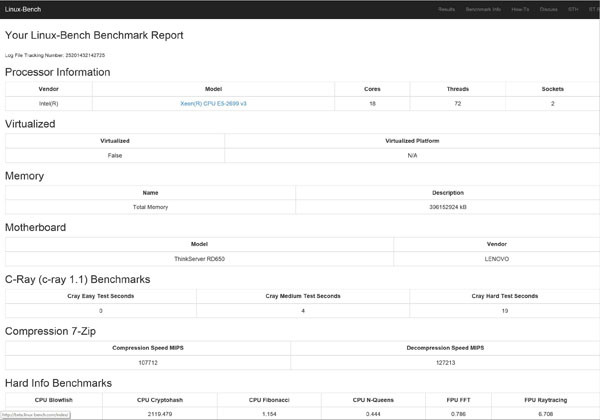

- Tools: We hired a developer to build the original Linux-Bench web interface and yet another to fix it and make it easy to use. This was certainly neither a quick nor an inexpensive undertaking. Compared to this time last year, Linux-Bench has about twelve times the usage, and we hope continued work on the platform will help that grow further.
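For anyone curious how a 40% cut in load times can add up to hundreds of days, here is a rough sketch of the arithmetic. The page-load count and the 5-second baseline load time are hypothetical values picked only for illustration; they are not STH's actual traffic or timing numbers.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def days_saved(page_loads: int, old_load_s: float, reduction: float) -> float:
    """Total visitor time saved, in days, when every page load gets
    `reduction` (a fraction, e.g. 0.40) faster than `old_load_s` seconds."""
    saved_per_load_s = old_load_s * reduction
    return page_loads * saved_per_load_s / SECONDS_PER_DAY


# Hypothetical inputs: 13 million page loads and a 5-second baseline.
estimate = days_saved(page_loads=13_000_000, old_load_s=5.0, reduction=0.40)
print(f"{estimate:.0f} days saved")
```

With those assumed inputs each page load saves two seconds, and the total lands in the neighborhood of 300 days. A different baseline or traffic level scales the result linearly.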

Of course, not everything this year was shamrocks and rainbows.

Lowest Point of the Journey: The Great Crash of 2014

I will vividly remember Thursday June 5, 2014 for many years to come. Exactly one month before my wedding, I was leaving a potential client in Sunnyvale, California when I noticed that monitoring services were sending many errors. At first I suspected the pfSense firewall was having issues, but after a successful VPN attempt, that was clearly not the case. I headed home only to find the site acting erratically. The main site, forums, and backups were all housed on three machines within a single Dell PowerEdge C6100 chassis while I had been setting up machines after the May 2014 colocation upgrade. A few frantic tickets to the datacenter and Steven @ Rack911 (who is awesome) and the culprit arose: dead nodes.

Within that Dell C6100 chassis, the main Kingston E100 SSDs (400GB drives with 800GB NAND onboard) and a few OCZ drives all died. Intel boot SSDs were fine in each node but did not have data on them. We tried FedEx’ing the drives to an SSD recovery depot to no avail. The drives were toast and with them STH.

Just as I was getting ready to start writing the STH 5th birthday post, I probably hit the lowest point in the past six years. Everything had to be re-built from scratch including the VMs. We were able to find some older versions around as bases but this was a very long process of manually re-creating over 150 articles from share drives.

Lesson learned. STH has moved into a second datacenter in Fremont, California just to help prevent something like this ever happening again, even if an entire datacenter goes down.

STH 2015: A Preview

There have been a number of changes happening recently. There are a few examples that should be highlighted.

First, we have been building improved tools such as Linux-Bench, which has become a standard Linux server benchmarking suite used at STH, Tom’s Hardware, AnandTech and many other sites focused on computer hardware performance. The next revision will be leaving beta very soon. It is significantly easier to use and most usage has already switched to the beta. We also have started working on an official Docker version of Linux-Bench, which is simple to run and can take advantage of the new containerization technology. We have a new and much bigger tool that will be launched in the (hopefully) not too distant future, which is perhaps even more exciting. For anyone wondering what we are planning to do with all of the extra CPU cycles, there is a big MongoDB / Node.js application under development that will be extremely useful.

Second, we added William Harmon, previously the main enterprise reviewer at TweakTown. Having another experienced writer is helping the site quite a bit. We are actively looking to add additional writers to the roster including some guest spots.

Third, we are now doing in-datacenter reviews of enterprise products. While other hardware review sites have racks set up in a home or office space, we are using colocation racks now to test servers in real-world conditions. An example of this can be found in our recent RackStuds review, where we found a need for two sizes between our lab and the colocation facility.

Fourth, the STH forums have been a major focus area in the past six months and have grown substantially. The community has become significantly more active and has grown in size consistently, to the point where most metrics are sitting at around 4x year-over-year activity growth. That is all driven by an awesome community of experts passionate about servers.

As we have spoken to vendors, the concept of racking equipment in a real datacenter has been very popular. We have had several vendors already tour the facility and have several other visits scheduled.

Wrapping for Now

Since I started doing an OKR for STH, goals have been quite aggressive. At some point we needed a new challenge. Perhaps a bit too aggressively, we have started executing on the plan to triple the size of STH over the next few quarters. Thank you to all of our loyal readers for being part of the journey thus far, and get excited for what we are building here.

I love this site. Keep up the great work for years to come.

Patrick, keep up the great work. Love your site. One negative comment would be when reviewing a product, as you know, price is what breaks or makes a product. So I would prefer all reviews to have transparent pricing information.

RE: The crash you experienced. You wrote:

Within that Dell C6100 chassis the main Kingston E100 SSDs (400GB drives with 800GB NAND onboard) and a few OCZ drives all died. Intel boot SSDs were fine in each node but did not have data on them

Have you done a post mortem to determine why both the Intel and Kingston SSDs failed? Both are supposed to have ‘protect in flight data’ protection. Is there any mechanism that could have allowed these drives to have retained data?

Hope you can and will respond here, or to plewin@optonline.net

Thanks!

Paul – the best we have figured was that Intel drives also have some power protection from a power surge. That is why those drives survived while others failed. They did not have data we needed on them because they were OS only.

Patrick, then I think you are saying that if the data had been on the Intel SSD rather than the Kingston, your life would have been a lot happier :-). Would it be possible to provide a list of SSDs that provide the ‘power protection’ mechanism that the Intel drive had? I assume that the Intel SSD had some kind of fast MOSFET-like surge protection circuitry. Is this a single chip we all could look for in our SSD purchases? It would seem that the so-called ‘in flight data protection’ that many SSDs claim is problematic if a voltage surge can zap the SSD. Actually, this kind of voltage surge protection might better be the responsibility of motherboard/power supply circuitry so it could protect all devices using PCI-e lanes (M.2, mSATA, et al.). You would do us all a service to identify the exact circuitry that allowed the Intel SSD to survive and make this part of the SSD tests/evaluations STH provides. I found this now-old information at the URLs listed below:

http://hardware.slashdot.org/story/13/03/01/224257/how-power-failures-corrupt-flash-ssd-data

https://www.usenix.org/conference/fast13/technical-sessions/presentation/zheng

Years ago Tandem’s non-stop architecture NEVER failed. I guess co-location is the modern way to guarantee no loss of data and system availability. BUT what do you use to keep the two systems synched? Do you really have real-time synchronization? And theory says this is IMPOSSIBLE.

https://en.wikipedia.org/wiki/CAP_theorem