Dell EMC PowerEdge XE7100 and 100 Drive Bays

The big feature of the chassis is clearly the hundred drive bays. These are laid out across the system with only one major section carved out. That section is for the two power supplies as well as the power distribution board. We have four rows of 15x 3.5″ hard drives, then the last three rows lose five drives in total to fit the power infrastructure.
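
As a quick check of the bay count, here is a minimal Python sketch of the row arithmetic. It only uses what the text states (seven rows of 15 slots, with five slots given up across the last three rows); how those five slots are split between the three rows is not specified, so the sketch works with totals only.

```python
# Bay-count arithmetic for the XE7100 layout as described in the text.
SLOTS_PER_FULL_ROW = 15
FULL_ROWS = 4            # four uninterrupted rows of 15x 3.5" bays
PARTIAL_ROWS = 3         # last three rows share the power-supply cutout
SLOTS_LOST_TO_POWER = 5  # total across those three rows (split not stated)


def total_bays() -> int:
    """Return the number of 3.5-inch bays implied by the row layout."""
    full = FULL_ROWS * SLOTS_PER_FULL_ROW                                # 60
    partial = PARTIAL_ROWS * SLOTS_PER_FULL_ROW - SLOTS_LOST_TO_POWER    # 40
    return full + partial


if __name__ == "__main__":
    print(total_bays())  # prints 100
```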

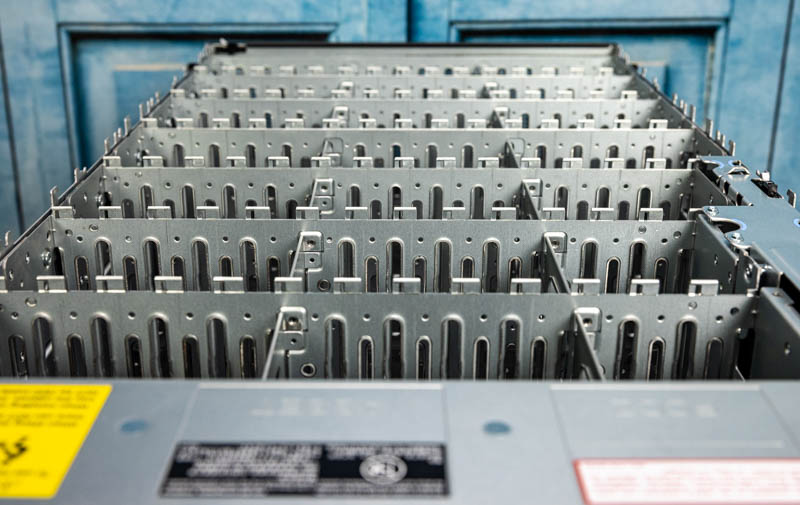

Inside the system, we see power and data connectors. These can handle SAS or SATA drives. We would generally expect to see 3.5″ hard drives, with an option for up to 20x SSDs. There is not a lot of visible vibration dampening in the chassis, something we see other vendors such as WD market on their systems. We did not take the chassis apart because this review already took around 100 hours to complete, and the procedure to open it up would have added quite a lot to that. The plastic handles are there so that once the drive cage and some other parts are removed, this large backplane can be lifted out of the chassis. Suffice it to say, if anything on this backplane fails, replacing it is a significant effort.

Each drive uses a tool-less drive tray, and one of the interesting aspects is that the trays have metal latches and hooks. These hooks mount into holes, which one can see at the top of the drive bays here.

It is a fairly long process to install all of the drives. Assuming all are in drive trays and ready to place in the system, just loading the system with drives takes 12-15 minutes. We have a time-lapse of this process in the accompanying video. Overall, the installation works well, but every so often a drive needs to be re-seated to engage the latch properly. On large storage servers like this, that is a common concern.
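
For a sense of the pace, the 12-15 minute figure works out to well under ten seconds per drive. A small Python sketch of that division, assuming the drives are already in trays as the text says and ignoring any re-seating:

```python
# Rough per-drive loading time from the 12-15 minute figure for 100 drives.
DRIVES = 100


def seconds_per_drive(total_minutes: float, drives: int = DRIVES) -> float:
    """Average time spent seating each pre-trayed drive, in seconds."""
    return total_minutes * 60 / drives


if __name__ == "__main__":
    for minutes in (12, 15):
        print(f"{minutes} min -> {seconds_per_drive(minutes):.1f} s per drive")
```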

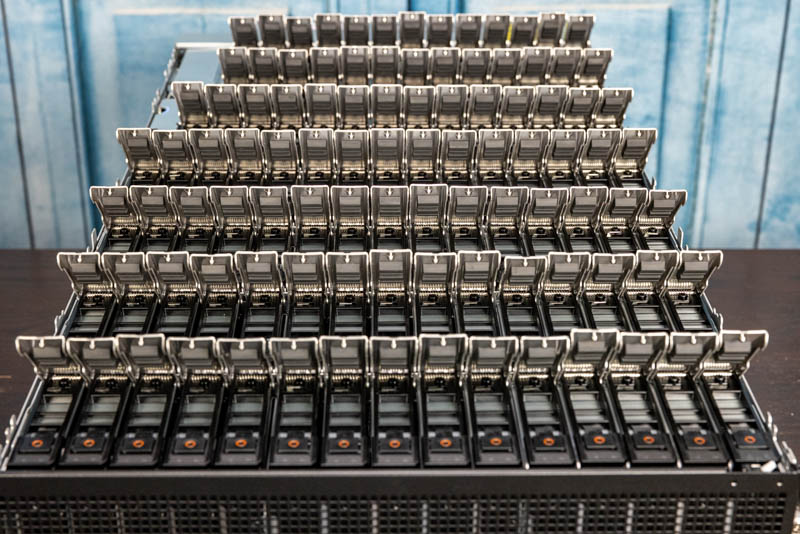

Here are the drive tray latches from 100x Seagate Exos X16 12TB hard drives. This is a 1.2PB salute. One can see the latching mechanism; it is a metal/plastic design that works well.
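
The 1.2PB figure is raw capacity in the decimal units drive vendors use. Many tools report binary units instead, so a minimal Python sketch of the conversion (before any filesystem or redundancy overhead, which this sketch does not model) shows why the same pool can appear as roughly 1.07 PiB:

```python
# Raw capacity of 100x 12TB drives, in decimal (PB) and binary (PiB) units.
DRIVES = 100
TB_PER_DRIVE = 12  # drive vendors quote decimal terabytes (10**12 bytes)


def raw_capacity_bytes(drives: int = DRIVES, tb_each: int = TB_PER_DRIVE) -> int:
    """Total raw bytes across all drives."""
    return drives * tb_each * 10**12


def to_pb(n_bytes: int) -> float:
    return n_bytes / 10**15  # decimal petabytes


def to_pib(n_bytes: int) -> float:
    return n_bytes / 2**50   # binary pebibytes


if __name__ == "__main__":
    raw = raw_capacity_bytes()
    print(f"{to_pb(raw):.2f} PB = {to_pib(raw):.2f} PiB")
```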

One important point is that this system is so large that one needs a large, flat surface to work on it. We had the system on a turntable for photos/video, and one can see that this caused significant flex across the chassis. Here is a photo where we simply pushed all 100 latches. A number of them failed to disengage because of the chassis flex on our uneven surface.

It is awesome to see a 1.2PB system, but Dell is offering more than just a JBOD here: Dell has a flexible compute solution to pair with its large scale-out storage node.

Next, we are going to take a look at the compute options for the XE7100.

Just out of curiosity, when a drive fails, what sort of information are you given regarding the location of said drive? Does it have LEDs on the drive trays, or are you told “row x, column y” or ???

On the PCB backplane and on the drive trays you can see the infrastructure for two status LEDs per drive. The system is also laid out to have all of the drive slots numbered and documented.

Unless you are into Ceph or the like and are okay with huge failure domains (the only way to make Ceph economical for capacity), I just don’t understand this product. The lack of dual-path (HA) connectivity makes it a no-go. Perhaps there are places where a PB+ outage is no biggie.

Not sure what software you would run on this. Most clustered file systems or object storage systems that I know of recommend smaller nodes, primarily because of rebuild times; one vendor I know recommends 10-15 drives per node, with drives of no more than 8TB.
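
To see why drive size matters for rebuild times, here is a rough Python estimate of the best case: the time just to rewrite one full drive at a constant sequential rate. The 140 MB/s rate is borrowed from a later comment and is an assumption, as is the idea that nothing else competes for the replacement drive; distributed systems that spread recovery across many peers behave differently, which this single-target model ignores.

```python
# Best-case time to rewrite one full drive at a constant sequential rate.
# Assumption: the rebuild is limited only by the replacement drive's write
# speed; real rebuilds are usually slower because of concurrent client I/O.


def rebuild_hours(capacity_tb: float, mb_per_s: float) -> float:
    """Hours to write capacity_tb (decimal TB) at mb_per_s (decimal MB/s)."""
    seconds = capacity_tb * 10**12 / (mb_per_s * 10**6)
    return seconds / 3600


if __name__ == "__main__":
    rate = 140.0  # MB/s, the sustained figure quoted in a later comment
    for tb in (8, 12):
        print(f"{tb}TB at {rate:.0f} MB/s: {rebuild_hours(tb, rate):.1f} h")
```

Under these assumptions a 12TB drive needs roughly 24 hours and an 8TB drive roughly 16 hours, which is one reason vendors cap drive sizes.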

I enjoyed your review of this system. Definitely cool features; I love the flexibility of the GPU/SSD options, and as you mentioned, this would not be a standalone setup but rather integrated into a clustered one. I’d also imagine it has the typical iDRAC functionality and all the Dell basics for monitoring.

After all the fun putting it together, do you get to keep it and play with it for a while, or once you have reviewed it, do you just pack it up and ship it out?

Hans Henrik Hape, even with Ceph this is too big: losing 1.2PB because one server fails means rebalancing, plus network and CPU load on the remaining nodes. It’s just a bad idea, not from a data-loss point of view but from the point of view of maintaining operations during a failure.
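
The operational concern here can be put in rough numbers. A minimal Python sketch, assuming the whole 1.2PB is full and has to be moved through a single node-sized network link at line rate (25 Gbps and 100 Gbps are illustrative speeds taken from a later comment), shows how long even the best case takes:

```python
# Best-case time to re-replicate a failed node's data over the network.
# Assumptions: the full 1.2PB raw capacity is in use, the link runs at line
# rate with no protocol overhead, and the network is the only bottleneck.


def transfer_days(data_pb: float, link_gbps: float) -> float:
    """Days to move data_pb (decimal PB) over a link_gbps (Gbit/s) link."""
    bits = data_pb * 10**15 * 8
    seconds = bits / (link_gbps * 10**9)
    return seconds / 86400


if __name__ == "__main__":
    for gbps in (25, 100):
        print(f"1.2PB over {gbps} Gbps: {transfer_days(1.2, gbps):.2f} days")
```

That is on the order of four days at 25 Gbps and about a day at 100 Gbps, during which the rest of the cluster carries the extra load.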

Great job STH, Patrick, you never stop surprising us with these kinds of reviews. Since you configured the system as a raw 1.2PB, can you check the internal bandwidth available using something like

```shell
iozone -t 1 -i 0 -i 1 -r 1M -s 1024G -+n
```

I would love to know: since there is no parity HDD, is it possible the bandwidth will be 250MBps x 100 HDDs, i.e. 25GBps? I’m sure there will be bottlenecks, maybe the PCIe bus speed for the HBA controller or a CPU limitation. But since you have it, it would be nice to know.
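
One way to gauge the PCIe bottleneck mentioned here is a Python sketch of the per-direction bandwidth of common PCIe 3.0/4.0 links against the hypothetical 25GB/s aggregate. The HBA and its link width in the XE7100 are not stated in this review, so the generations and x8/x16 widths below are assumptions for illustration only.

```python
# Usable PCIe bandwidth per direction after 128b/130b line encoding
# (PCIe 3.0 and 4.0). Packet/protocol overhead is ignored, so real
# throughput is somewhat lower.
GT_PER_S = {3: 8.0, 4: 16.0}  # transfer rate per lane, by PCIe generation


def pcie_gb_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in decimal GB/s."""
    per_lane = GT_PER_S[gen] * (128 / 130) / 8  # GT/s -> GB/s per lane
    return per_lane * lanes


if __name__ == "__main__":
    target = 100 * 250 / 1000  # 25 GB/s from 100 drives at 250 MB/s
    for gen in (3, 4):
        for lanes in (8, 16):
            bw = pcie_gb_per_s(gen, lanes)
            print(f"PCIe {gen}.0 x{lanes}: {bw:.2f} GB/s "
                  f"({bw / target:.0%} of {target:.0f} GB/s)")
```

Under these assumptions, a single x8 HBA link (about 7.9 GB/s at PCIe 3.0, about 15.8 GB/s at PCIe 4.0) would cap throughput well below 25GB/s, before any SAS expander or CPU limits are considered.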

Again, thanks for the great reviews.

“250MBpsx100HDD i.e. 25GBps”: any 7200 RPM drive is capable of ~140 MB/sec sustained, so 100 of them would be ~14 GigaBYTES per second… Ergo, a 100 Gbps connection would then propel this server to a hypothetical ~10 GB/sec of transfers… As it is, 25 Gbps would limit it quite quickly.
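
Both comments are doing the same arithmetic with different per-drive rates. A short Python sketch that puts the two disk figures (140 and 250 MB/s, both taken from the comments rather than measured) next to 25 Gbps and 100 Gbps line rates makes the comparison explicit; protocol overhead is ignored, so real network throughput would be lower than shown.

```python
# Compare aggregate sequential disk throughput with network line rate.
# Assumptions: every drive streams at the same sustained rate at once, and
# nothing between the disks and the NIC (HBA, PCIe, CPU) is a bottleneck.


def disks_gb_per_s(drives: int, mb_per_s: float) -> float:
    return drives * mb_per_s / 1000  # decimal GB/s


def link_gb_per_s(gbps: float) -> float:
    return gbps / 8                  # bits -> bytes, no overhead


if __name__ == "__main__":
    for rate in (140, 250):          # per-drive MB/s from the comments
        disks = disks_gb_per_s(100, rate)
        for gbps in (25, 100):
            net = link_gb_per_s(gbps)
            limit = "network" if net < disks else "disks"
            print(f"{rate} MB/s x 100 = {disks:.1f} GB/s vs "
                  f"{gbps} Gbps = {net:.2f} GB/s -> {limit}-bound")
```

In every combination here, the network link rather than the drives is the limit.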

A great review of this awesome server! I wish I had a few hundred K$ to spare and needed a couple of them!

Happy wise (wo)men who’ve got to play with such grown-up toys.

There is a typo in the conclusion section compeition -> competition.

Otherwise, a really cool review of a nice “toy” I sadly will never get to play with.

>AMD CPUs tend to have more I/O and core count which also means more power consumption

Oh, really?

Perfect for my budget-conscious Plex.

James, if you look at Milan as an example, AMD does not even offer a sub-155W TDP CPU in the EPYC 7003 series. We discussed why in that launch review; it comes down to the I/O die using so much power.

While nothing was mentioned about pricing, Dell typically charges at least a 100% premium for hard drives with its logo (none of which are made by Dell), despite a warranty much shorter than the normal 5 years. So a combination of a 1U dual-CPU (AMD EPYC) server and a 4U 102-106 bay SAS JBOD from WD or Seagate will be MUCH cheaper, faster (in the right configuration), and much easier to upgrade if needed (the server part improves faster than SAS HDDs do).

Color me unimpressed by this product despite impressive engineering.

Definitely getting one for my homelab

I just managed to purchase the 20x M.2 DSS FE1 card for my Dell R730xd. I have not done much testing, but it works (in both ESXi and TrueNAS). And the cost of this card is an absolute bargain. Feel free to contact me about the card.