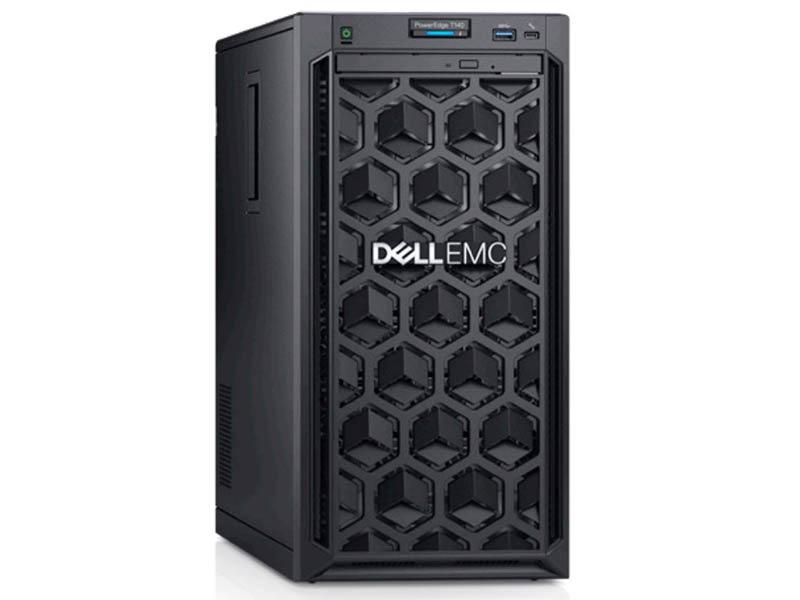

If you are looking for the lowest-cost tower PowerEdge, the Dell EMC PowerEdge T140 may be your answer. In this review, we are going to look at the PowerEdge T140 from a number of different angles, starting with the hardware that makes the system tick. Many administrators will like the fact that the Dell EMC PowerEdge T140 uses the familiar iDRAC 9 for management. We will also test the PowerEdge T140 to see how it performs with a number of the processor options you can configure. In the end, we are going to see how the Dell EMC PowerEdge T140 stacks up against its competition.

Dell EMC PowerEdge T140 Hardware Overview

When it comes to stature, the Dell EMC PowerEdge T140 is a relatively petite mini tower measuring 360mm x 175mm x 454mm. The front I/O does not include traditional hot-swap bays. Instead, there is USB and service connectivity along with a slim optical drive bay.

Some servers in this class are built completely bare bones: sheet metal wrapped around a basic motherboard, sometimes held together with screws and leaving sharp edges. That is not the case with the PowerEdge T140. The T140 gives its first clue that low cost does not necessarily mean abandoning the company's principles of quality as soon as one goes to open the chassis. There is a nice latching mechanism that is easy to operate. In contrast, many competitive systems, even from large vendors, use a simple sheet metal side panel held by screws. Although this may seem like an inconsequential feature at first, it is clearly a cost adder that the Dell EMC product team rightly designed in to give users a quality experience.

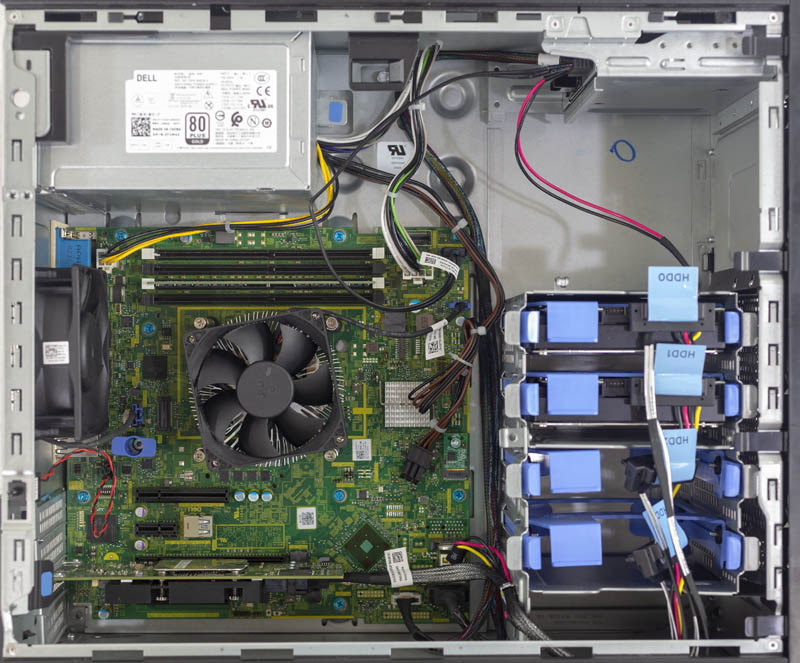

Inside the chassis, we can see the basic layout of the system. There is an mATX-size motherboard, an ATX-size power supply, an optical bay and four 3.5″ drive bays.

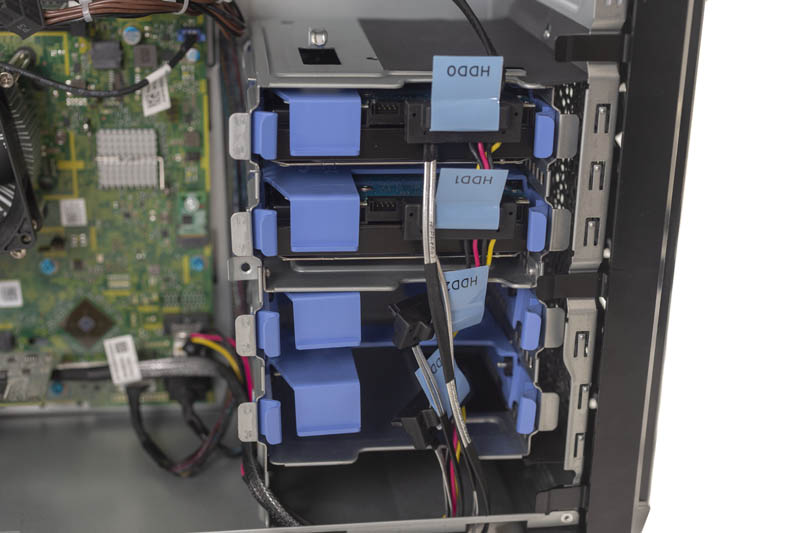

The 3.5″ bays are cabled and use tool-less trays. These are not traditional hot-swap bays, since swapping a drive involves more work. At the same time, this type of solution is common in this class of machine, and it is usually easy enough for a novice IT admin or a reasonably capable on-site employee to swap drives. Another quality touch is that the drive connections are labeled with large lettering so they are easy to read. For installers with limited eyesight, this small step makes the process easier.

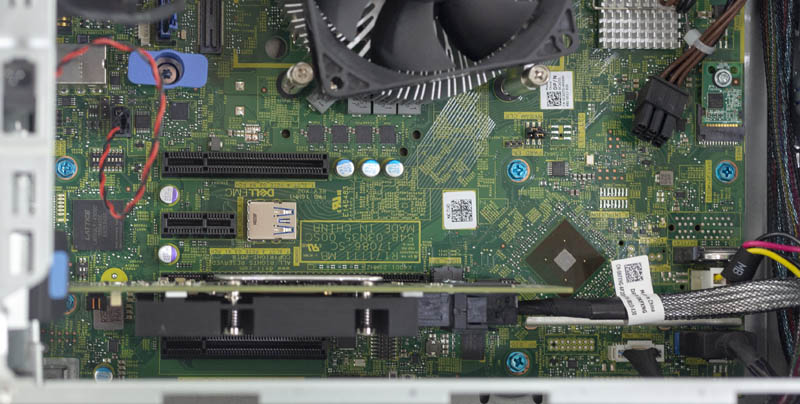

Typically, one would use the motherboard's onboard SATA controller for storage in the ultra-low-cost segment. One can see an SFF-8087 header that can provide up to four SATA III 6.0Gbps lanes. The SFF-8087 connector is often associated with SAS, but this port is SATA only. One can also see a traditional 7-pin SATA III 6.0Gbps port that is labeled for either the optical drive or another hard drive.

PCIe connectivity is provided through four slots, and a PERC card is pictured here. One note is that there is no onboard M.2 PCIe slot, even though M.2 has become a popular NVMe SSD form factor in servers. Dell EMC allows the use of a BOSS card with one or two SATA M.2 drives for boot, but NVMe drives serve a different use case and offer more performance.

The four slots are a fairly basic set:

- PCIe 3.0 x8 (x16 physical connector)

- PCIe 3.0 x1

- PCIe 3.0 x8

- PCIe 3.0 x4 (x8 physical connector)
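To put the slot list in perspective, here is a rough Python sketch of per-slot bandwidth. It assumes the standard PCIe 3.0 signaling rate of 8 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so real-world throughput will be somewhat lower. The slot names and electrical widths are taken from the list above.

```python
# Rough PCIe 3.0 bandwidth per slot (a sketch, not a measured result).
# Assumption: 8 GT/s per lane, 128b/130b line encoding, one direction,
# ignoring packet/protocol overhead, so real throughput is lower.
PCIE3_GT_PER_S = 8.0
PCIE3_ENCODING = 128 / 130


def pcie3_gbytes_per_s(lanes: int) -> float:
    """Approximate one-direction payload bandwidth in GB/s for a PCIe 3.0 link."""
    return PCIE3_GT_PER_S * PCIE3_ENCODING * lanes / 8  # 8 bits per byte


# Electrical widths of the four T140 slots, as listed in the review.
# The physical connector size does not change the usable bandwidth.
T140_SLOTS = [
    ("PCIe 3.0 x8 (x16 physical)", 8),
    ("PCIe 3.0 x1", 1),
    ("PCIe 3.0 x8", 8),
    ("PCIe 3.0 x4 (x8 physical)", 4),
]

if __name__ == "__main__":
    for name, lanes in T140_SLOTS:
        print(f"{name:<28} ~{pcie3_gbytes_per_s(lanes):.2f} GB/s")
    total_lanes = sum(lanes for _, lanes in T140_SLOTS)
    print(f"Total electrical lanes: {total_lanes}")
```

The main takeaway is that the x16 physical slot delivers x8 bandwidth, so a card designed for a full x16 link will negotiate down to x8 there.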

With this class of server, I/O connectivity is limited by Intel's underlying platform. If you want more PCIe connectivity, you will need to move to higher-end products like the Intel Xeon Scalable-based Dell EMC PowerEdge T440.

For the power supply, Dell EMC uses a 365W unit that is 80 PLUS Gold rated. For sub-500W PSUs, 80 PLUS Gold is still considered very good, and there are competitors in this class using Bronze or unrated PSUs. We commend Dell EMC for choosing a PSU with an industry-standard efficiency rating here, which assures buyers of lower operational costs.
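Efficiency translates directly into wall draw: AC input equals DC output divided by efficiency. The Python sketch below assumes the 365W unit just meets the 80 PLUS Gold minimums for 115 V internal non-redundant supplies (87% at 20% load, 90% at 50%, 87% at 100%). Real units usually beat those minimums, and the curve differs at 230 V input, so treat the numbers as illustrative.

```python
# Sketch: wall (AC) draw and conversion loss for a 365 W PSU, assuming it
# just meets the 80 PLUS Gold minimums for 115 V internal non-redundant units.
# Actual efficiency curves vary by unit and input voltage.
PSU_RATED_W = 365
GOLD_MIN_EFFICIENCY = {0.20: 0.87, 0.50: 0.90, 1.00: 0.87}


def wall_draw_w(dc_load_w: float, efficiency: float) -> float:
    """AC input power needed to deliver dc_load_w at the given efficiency."""
    return dc_load_w / efficiency


if __name__ == "__main__":
    for fraction, eff in GOLD_MIN_EFFICIENCY.items():
        dc = PSU_RATED_W * fraction
        ac = wall_draw_w(dc, eff)
        print(f"{fraction:>4.0%} load: {dc:6.1f} W DC -> {ac:6.1f} W AC "
              f"({ac - dc:4.1f} W lost as heat)")
```

Plugging a lower efficiency figure into `wall_draw_w` for the same DC load shows how much extra input power a less efficient supply would need. Note that the Gold program sets no requirement below 20% load, which is where a lightly loaded T140 often sits.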

The rear I/O panel has a traditional serial port, a VGA port and four USB 2.0 ports for legacy I/O. There are also two USB 3.0 ports, each sitting below one of the two 1GbE ports. Dell EMC again went the extra step and included a dedicated iDRAC port for out-of-band management, which we will focus on in a later section.

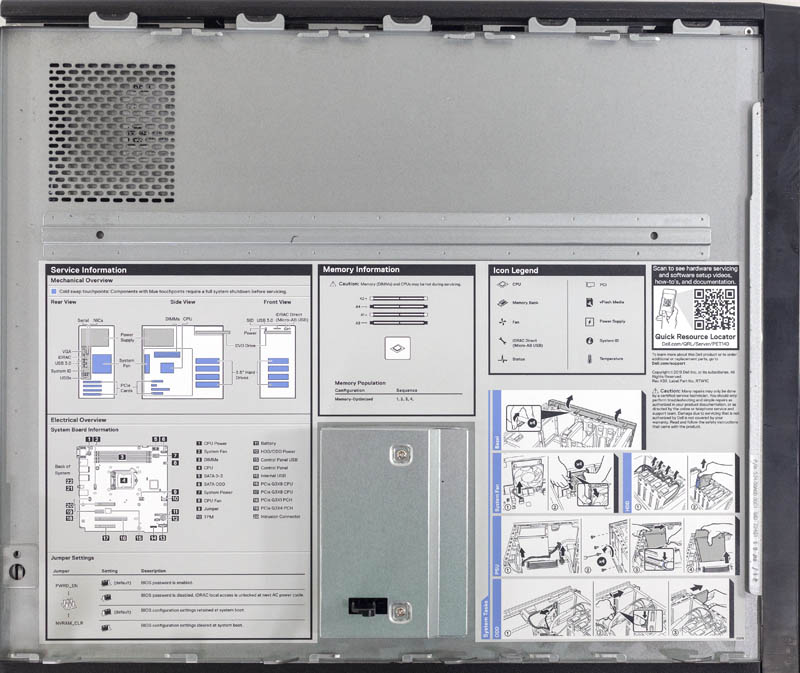

Before we end the hardware overview section, one other area worth mentioning is documentation quality. Dell EMC has solid online documentation for IT admins. Because it targets edge deployments, the Dell EMC PowerEdge T140 is also designed to be serviced by non-IT on-site staff. Here, simple features like stickers with pictures and pertinent service details make a big difference.

If you review a lot of servers as we do, it is fairly easy to see that the Dell EMC PowerEdge T140 is designed to be budget friendly. At the same time, the PowerEdge team did a great job balancing costs while still making the lower-cost system feel well built. There are small features we highlighted such as the latching mechanism and labels that contribute to the overall quality of the PowerEdge solution over other alternatives.

Hello,

What about HDD cooling fans? I had temperature issues with four HDDs in my T20 and had to switch to another case.

How many SATA connectors are there? The case/motherboard looks like the PowerEdge T110 II, which we really like at our university. Unlike this one, in the T110 II the front 5.25″ bays could be replaced by three or four HDDs for ZFS mirrors (pool) by adding a small PCIe SATA card. I do hope the PCIe lanes support a basic graphics card (at least an Nvidia GeForce GT 1030) to get remote “ssh -X” for some virtualisation software. (The PE T110 II has tons of issues with any graphics card, as there is a kind of < 20W limit.)

When the third image in a server review is a latch, you know you’re reading one of STH’s crazy in depth reviews. Nothing says hands on like featuring a part meant to put a hand on.

Any word on whether an inexpensive StarTech M.2 adapter can be used to accommodate a Samsung 983 DCT (MZ-1LB960NE) M.2 NVMe drive and have the T140 boot from it? I think Dell removed such NVMe boot ability from the T30.

Recently built a T140 for a client needing a bare metal SQL box with some solid per-core grunt, and the Xeon E's are top of the class in that department. Basically, the T140 is a single-socket Xeon workstation with iDRAC bolted on. That's fine, because there's always been a server line in that class and they've always been good value. I went with Intel SSDs in RAID 1, but with the BOSS card the server can do Intel VROC, which is native NVMe RAID… at least according to Dell.

Is there any advantage in having a Xeon E-xxxxG processor on that machine? Will the graphics capabilities of the chip be used for anything, or is there any wiring to make it available?

Hi Paulo, not for display output; however, you can use features like Quick Sync.

I bought myself one, it’s really nice.

One thing that annoys me, though, is that the E-2136 I bought it with is capped for some reason at 4.18 GHz instead of going all the way up to 4.5 GHz as it should. It also doesn't hit 80W while testing single-threaded, so it isn't throttling. I'm testing all this with Intel Extreme Tuning Utility to get the whole picture.

Dell capped it for some reason.

Actually, the limit is exactly 4.2 GHz under Linux; under Windows I only got to 4.18 GHz. Under Linux, running cooler (70°C) with undervolting, during a half-hour compile it stays at 4.199 or 4.2 GHz and never exceeds that, even by 1 MHz. That is annoying, as nowhere is it written that Dell limits you.

I need to add more SATA drives. I have already used an HDD/DVD caddy and put an SSD in it, but I have one more 1TB SATA disk to add besides the existing four.

What do you suggest?

Hello

I just got the T140 with an Intel Xeon E-2126G (80 W), an H330 controller and one 1 TB HDD.

My config should consume less power than the one you tested, since yours includes the Intel 2128G rated for 95 W.

My power meter says:

- 220 V / 50 Hz

- 51 W

- Power factor 0.87

- 0.26 A

Why do you see just 35 W at idle?
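The meter readings in this comment are internally consistent: for a single-phase AC load, real power is volts × amps × power factor. A small Python sketch, assuming the meter reports RMS voltage and current, gives about 49.8 W, which is within the display rounding of the 51 W reading (the current and power factor are shown to only two decimal places).

```python
# Sketch: cross-check the power meter readings from the comment above.
# Assumption: single-phase load, meter reports RMS voltage and current.
def real_power_w(volts: float, amps: float, power_factor: float) -> float:
    """Real (active) power in watts: P = V * I * PF."""
    return volts * amps * power_factor


def apparent_power_va(volts: float, amps: float) -> float:
    """Apparent power in volt-amperes: S = V * I."""
    return volts * amps


if __name__ == "__main__":
    v, i, pf = 220.0, 0.26, 0.87  # readings as reported by the commenter
    print(f"Apparent: {apparent_power_va(v, i):.1f} VA")
    print(f"Real:     {real_power_w(v, i, pf):.1f} W")
```

The gap between apparent and real power is why the power factor matters when sizing a UPS, which is typically rated in both VA and W.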

Hello, we just got a T140 with a 2126G, a 1TB HDD and 8GB of DDR4 ECC for a client. Working upgrade:

- 3x8GB Samsung DDR4-2666 CL19 ECC (M391A1K43BB2-CTD -> 32GB)

- Gigabyte Nvidia GT 1030 Low Profile 2G (GV-N1030D5-2GL, integrated video disabled)

- Samsung 860 Pro 1TB SATA SSD (HDD for backup)

It will be used as an entry-level server/workstation with dual displays.

With iDRAC9, is an additional license required to get remote desktop capabilities, like with iLO?

A license is needed for iDRAC9 on a T140 to show the console display. I had to buy a VGA-to-HDMI adapter to view the console for installation, which is a little disappointing for a server. The noise level is low, but still too much for a living room.

Hello,

I am choosing between this one and the HPE ML30 Gen10. Which one would you choose and why?

Regarding the CPU Turbo issue:

I'd guess it's a deliberate BIOS limit on this board. Every E-series Xeon I've seen runs at most at its all-core Turbo frequency.

A comment above says that iDRAC9 as included with the T140 does not include a remote console feature. Is there an upgrade or license that adds that? And if so, does it ruin the economics of the server as is the case with the ProLiant servers that are a notch above the MicroServer Gen10 Plus?

I configured one of these for various test loads and have a few comments. First of all, it indeed does not support NVMe boot, which is a bit odd for a 2019 machine. That is not a real problem, since I can always use a boot loader on USB to continue booting from NVMe.

The option to use the Dell BOSS card also means you are limited to the two older SSD models BOSS supports, and the card itself is just a few bulk components at a hefty price. Obviously, if you already have a fleet of Dells and can acquire these things cheaply, it's a different situation, and it also enables things in iDRAC and so on.

Also, while the machine has multiple PCIe slots, it considers most cards “third party devices” and automatically ramps the fans up to painful levels. There is a switch to ignore “3rd party device fan control” via racadm, but for some reason my NVMe drive was not considered a third-party PCIe card but a PCIe SSD, so the switch did nothing. I found out that by downgrading iDRAC to 3.30 you could manually control the fans via undocumented IPMI codes (disgusting), so I tried downgrading. In the end, just installing 3.30 fixed the issue without any manual tuning: the fans are set to auto and not running at 100%, even with the unholy non-Dell third-party PCIe device installed.

The decision to set fans at 100% when encountering “unknown devices” is just stupid. What's the point of PCIe slots if you are not going to allow standards-compliant devices to run easily? Is the case so badly designed that a typical PCIe device's power draw could cause issues?

Does it make sense to buy a Xeon-based processor in 2021, when ARM servers may soon arrive with lower cost and better performance? What do you think? Thanks.

I really think this review missed a critical limitation in this series of Dell PowerEdge servers. Even though it was/is offered with the G-series E-2100/2200 processors, the graphics engine is disabled, so Quick Sync cannot be used for hardware transcoding. I found this out the hard way after building a TrueNAS Scale system on this server and upgrading the CPU from a 2124 to a 2126G, only to find that it doesn't work.

Digging back through Dell's documentation, I found this in the Tech Guide:

“NOTE: We do not support graphics with E-2100 and E-2200 processors, Graphics cannot be enabled on Dell EMC servers using this processor due to technical restrictions.”

What technical restrictions? The chipset supports it, but the BIOS doesn’t have it enabled?