Power Consumption

The server's redundant power supplies are 1.4kW 80Plus Platinum rated units.

- Idle: 0.46kW

- STH 70% CPU Load: 0.71kW

- 100% Load: 0.97kW

- Maximum Recorded: 1.1kW

There is room to expand the configuration further, which would push these numbers higher than what we measured, but 1.4kW PSUs seem reasonable. We are also starting to use kW instead of W as our base for reporting power consumption. This is a forward-looking feature since we are planning for higher TDP processors in the near future.

Note that these results were taken using a 208V Schneider Electric / APC PDU at 17.5C and 71% RH. Our testing window had a variance of +/- 0.3C and +/- 2% RH.
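As a rough sanity check on whether 1.4kW is enough, the sketch below expresses each measurement as a share of a single PSU's rating. It assumes a 1+1 redundant setup in which one unit must be able to carry the whole load. Because our readings were taken at the PDU (AC input) while a PSU is typically rated on its output, this comparison somewhat overstates PSU utilization, which errs on the safe side.

```python
# Compare measured wall power against the rating of ONE PSU.
# Assumption: 1+1 redundancy, so a single 1.4kW unit must carry the full load.
PSU_RATING_KW = 1.4

# Readings from the list above, in kW (measured at the PDU, AC input side).
measurements_kw = {
    "Idle": 0.46,
    "STH 70% CPU Load": 0.71,
    "100% Load": 0.97,
    "Maximum Recorded": 1.1,
}


def psu_utilization(load_kw, rating_kw=PSU_RATING_KW):
    """Fraction of a single PSU's rating consumed by a given load."""
    return load_kw / rating_kw


for name, kw in measurements_kw.items():
    util = psu_utilization(kw)
    headroom = PSU_RATING_KW - kw
    print(f"{name:<18} {kw:.2f} kW  {util:5.1%} of one PSU  {headroom:.2f} kW headroom")
```

Even the maximum recorded figure stays under about 79% of one unit, which is why expanding the configuration still leaves some margin.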

Next, we have the STH Server Spider, followed by our final thoughts.

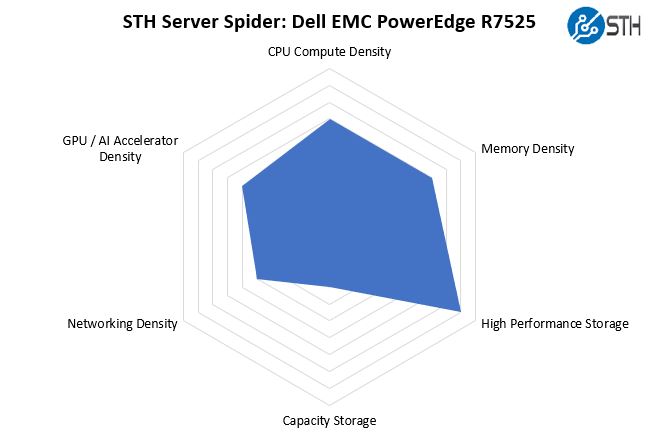

STH Server Spider: Dell EMC PowerEdge R7525

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

The compute density may surprise some of our readers since this is an ultra-fast system. Although we get two fast CPUs, that is 128 cores in 2U of space. By contrast, the Dell EMC PowerEdge C6525 we reviewed is a 2U 4-node (2U4N) system that can take AMD EPYC 7H12 CPUs at four times the density. That configuration is likely to require liquid cooling, but there are form factors out there if you want higher density. What the PowerEdge R7525 showcases is not just compute density, but also higher-end storage and networking density. For many, this is going to be a more balanced platform to pair with the ultra-fast CPUs.

Final Words

Dell has certainly made headway since the initial Dell EMC PowerEdge R7415 we reviewed in the AMD EPYC 7001 “Naples” generation. The build quality on this machine is significantly better, putting it more on par with the Intel Xeon competition. What the PowerEdge R7525 offers is the ability to consolidate same-generation Intel Xeon nodes at better than a 2:1 ratio. One could argue that it uses more power than a dual Intel Xeon Gold 6258R or Platinum 8280 node, but it is also providing a lot more performance.

When you get to 2:1 consolidation ratios, the economics are transformative. Not only does one need less rack space in the data center, but one also requires fewer switch ports, PDU ports, and even software such as iDRAC licensed on a per-node basis. A smart buyer who has read our 2021 Intel Ice Pickle: How 2021 Will be Crunch Time will know that Intel’s next competitive answer will be available in a few months with Ice Lake Xeons, and we are likely seeing performance from the EPYC 7H12 that goes beyond what Ice Lake will offer. Platform features such as PCIe Gen4 are already here with EPYC but are several quarters away on the Xeon side. AMD will launch Milan later this quarter, with the Zen 3 architecture unifying more of the cache design, so we are getting late in the AMD EPYC 7002 “Rome” generation. Still, the business case for switching to AMD today is very strong.
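The consolidation math above is simple enough to sketch. Purely for illustration, the example assumes that both the legacy Xeon nodes and the consolidated nodes are 2U servers with two switch ports, two PDU ports, and one per-node iDRAC license each. These per-node figures are hypothetical placeholders, not numbers from our testing.

```python
import math

# Hypothetical per-node footprint (illustration only; substitute your own values).
PER_NODE = {"rack_units": 2, "switch_ports": 2, "pdu_ports": 2, "idrac_licenses": 1}


def consolidate(legacy_nodes, ratio):
    """Return (new node count, footprint before, footprint after) at a given ratio.

    Simplifying assumption: old and new nodes have the same per-node footprint,
    so every per-node resource shrinks in step with the node count.
    """
    new_nodes = math.ceil(legacy_nodes / ratio)  # partial nodes round up
    before = {k: v * legacy_nodes for k, v in PER_NODE.items()}
    after = {k: v * new_nodes for k, v in PER_NODE.items()}
    return new_nodes, before, after


new_nodes, before, after = consolidate(20, 2.0)
print(f"nodes           {20:>3} -> {new_nodes:>3}")
for key in PER_NODE:
    print(f"{key:<15} {before[key]:>3} -> {after[key]:>3}")
```

Every per-node line item (ports, licenses, rack units) halves at 2:1, which is where the economics come from; a real comparison would also weigh per-node power and acquisition cost.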

The Dell EMC PowerEdge R7525 sends a strong message to the Dell Technologies ecosystem. This is a full-featured platform that allows AMD-curious or AMD-ready customers to adopt AMD without having to sacrifice platform features. Indeed, with the PowerEdge R7525, Dell has one of the most forward-looking platforms on the market on either the AMD EPYC or Intel Xeon side. For a customer who saw Dell's first-generation AMD EPYC 7001 offerings and felt that they were heavily cost-optimized versions of a PowerEdge platform, the PowerEdge R7525 elevates AMD’s platforms within the Dell ecosystem beyond where Dell's Intel Xeon platforms are at the time of writing this review. Make no mistake, that is a bold statement, but it is the state of the market today.

Speaking, of course, as an analytic database nut, I wonder this: has anyone done Iometer-style IO saturation testing of the AMD EPYC CPUs? I really wonder how many PCIe Gen4 NVMe drives a pair of EPYC CPUs can push to their full read throughput.

I should have first said this: I want a few of these!!!

Patrick, I’m curious when you think we’ll start seeing common availability of U.3 across systems and storage manufacturers? Are we wasting money buying NVMe backplanes if U.3 is just around the corner? Perhaps it’s farther off than I think? Or will U.3 be more niche and geared towards tiered storage?

I see all these fantastic NVMe systems and wonder if two years from now I’ll wish I waited for U.3 backplanes.

The only thing I don’t like about the R7525s (and we have about 20 in our lab) is the riser configuration with 24x NVMe drives. The only x16 slots are the two half-height slots on the bottom. I’d prefer to get two full height x16 slots, especially now that we’re seeing more full height cards like the NVIDIA Bluefield adapters.

We’re looking at these for work. Thanks for the review. This is very helpful. I’ll send it to our procurement team.

This is the prelude to the next generation ultra-high density platforms with E1.S and E1.L and their PCIe Gen4 successors. AMD will really shine in this sphere as well.

We would set up two pNICs in this configuration:

- Mellanox/NVIDIA ConnectX-6 100GbE Dual-Port OCPv3

- Mellanox/NVIDIA ConnectX-6 100GbE Dual-Port PCIe Gen4 x16

- Dual Mellanox/NVIDIA 100GbE switches (two data planes) configured for RoCEv2

With 400GbE of aggregate bandwidth in an HCI platform, we’d see huge IOPS and throughput with ultra-low latency across the board.

The 160 peripheral-facing PCIe Gen4 lanes are one of the smartest and most innovative moves AMD made. 24 switchless NVMe drives with room for 400GbE of redundant, ultra-low latency network bandwidth is nothing short of awesome.
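The lane budget behind that setup can be checked with a little arithmetic. This sketch takes the 160-lane figure from the comment and assumes, since the comment does not say, that each NVMe drive gets a direct x4 link and that both the OCPv3 NIC and the PCIe NIC sit on x16 Gen4 links.

```python
# PCIe lane and bandwidth budget for 24x NVMe + 2x dual-port 100GbE NICs.
LANES_AVAILABLE = 160   # peripheral-facing lanes cited in the comment
NVME_DRIVES = 24
LANES_PER_NVME = 4      # assumption: each NVMe drive on a direct x4 link
NIC_LANES = [16, 16]    # assumption: OCPv3 NIC and PCIe NIC both on x16
PORTS_PER_NIC = 2
PORT_GBPS = 100

# PCIe Gen4 signals at 16 GT/s per lane with 128b/130b encoding: about
# 15.75 Gb/s per lane, per direction, before packet/protocol overhead.
GEN4_GBPS_PER_LANE = 16 * 128 / 130


def lane_budget():
    """Return (NVMe lanes, NIC lanes, spare lanes)."""
    nvme = NVME_DRIVES * LANES_PER_NVME
    nic = sum(NIC_LANES)
    return nvme, nic, LANES_AVAILABLE - nvme - nic


def nic_link_headroom_gbps(lanes):
    """Usable Gen4 link bandwidth minus the NIC's total port bandwidth."""
    return lanes * GEN4_GBPS_PER_LANE - PORTS_PER_NIC * PORT_GBPS


nvme, nic, spare = lane_budget()
aggregate_gbps = len(NIC_LANES) * PORTS_PER_NIC * PORT_GBPS

print(f"NVMe lanes: {nvme}, NIC lanes: {nic}, spare: {spare}")
print(f"Aggregate network bandwidth: {aggregate_gbps} GbE")
for lanes in NIC_LANES:
    print(f"x{lanes} Gen4 headroom per NIC: {nic_link_headroom_gbps(lanes):.1f} Gb/s")
```

Under these assumptions the drives take 96 lanes, the NICs take 32, and 32 lanes remain, while each x16 Gen4 link (roughly 252 Gb/s raw after encoding) can carry both 100GbE ports of a NIC.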

Excellent article Patrick!

Happy New Year to everyone at Serve The Home! :)

> This is a forward-looking feature since we are planning for higher TDP processors in the near future.

*cough* Them thar’s a hint. *cough*

Dear Wes,

This is focused on data IO for NAS, databases, and websites. If you want full height x16 slots for AI, GPGPU, or VMs with vGPU, then you’ll have to look elsewhere for larger cases. You could still use the R7525 to host the storage and non-GPU apps.

@tygrus

There are full height storage cards (think computational storage devices) and full height SmartNICs (like the NVIDIA BlueField-2 dual-port 100Gb NIC) that are extremely useful in systems with 24x NVMe devices. These are still single-slot cards, not dual-slot like GPUs and accelerators. I’m also only discussing half-length cards, not full-length ones like GPUs and some other accelerators.

The 7525 today supports 2x HHHL x16 slots and 4x FHHL x8 slots. I think the lanes should have been shuffled on the risers a bit so that the x16 slots are FHHL and the HHHL slots are x8.

@Wes

Actually, the R7525 can also be configured with a riser config that allows full-length cards (in my configurator I see that Riser Config 3 allows it). I see that option both with SAS/SATA/NVMe and with NVMe-only configurations. However, on the Dell US configurator, the NVMe-only configuration does not allow Riser Config 3. The technical manual says such a configuration is not allowed, while the Installation and Service Manual lists it in several places. Take a look.

I configured mine with the 16x NVMe backplane for my lab: 3 x Optane and 12 x NVMe drives, 1 cache + 4 capacity, 3 disk groups per server. I then have 5 x16 full-length slots. There are 2 x Mellanox 100GbE adapters, plus the OCP NIC, and 2 x Quadro RTX8000 across 4 servers. Can’t wait to put them into service.

Hey there, what’s the brand of the chipset?

Hi, can I ask what the operational wattage of the Dell R7525 is?

I can’t seem to find any way to make the dell configurator allow me to build an R7525 with 24x NVMe on their web site. Does anyone happen to know how/where I would go about building a machine with the configurations mentioned in this article?