NVIDIA A100 MIG

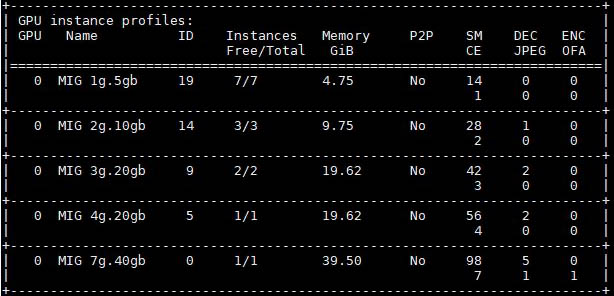

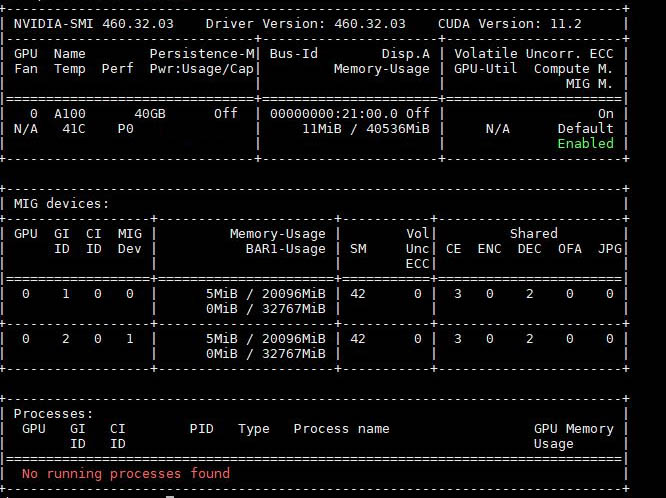

One important feature of this system is support for NVIDIA Multi-Instance GPU (MIG). With MIG, one can split a physical A100 GPU into multiple smaller logical GPUs, up to seven per card.

That means one can install four NVIDIA A100 GPUs and then use MIG to present them to the system as 8-28 logical GPUs. For many AI inferencing tasks, it is more efficient to split the A100 into several smaller GPUs than to underutilize the larger GPU. Each MIG instance can be assigned to an individual VM, and configuration can happen on the fly.
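The 8-28 range follows from simple arithmetic over the MIG profiles. As a minimal sketch, assuming the per-GPU instance limits that NVIDIA documents for the A100 40GB (the table and the `logical_gpus` helper below are ours, for illustration only, and should be verified against your own GPU and driver), one can compute how many logical GPUs a four-GPU system exposes when every card uses the same profile:

```python
# Maximum MIG instances per physical A100 40GB for each profile.
# Assumption: values taken from NVIDIA's MIG documentation for the A100 40GB;
# other GPUs (and other memory sizes) have different profiles and limits.
MAX_INSTANCES_PER_A100_40GB = {
    "1g.5gb": 7,
    "2g.10gb": 3,
    "3g.20gb": 2,
    "4g.20gb": 1,
    "7g.40gb": 1,
}


def logical_gpus(physical_gpus: int, profile: str) -> int:
    """Logical GPUs exposed when every physical GPU uses the same profile."""
    return physical_gpus * MAX_INSTANCES_PER_A100_40GB[profile]


if __name__ == "__main__":
    # Hypothetical configuration: four A100 40GB cards, as in this system.
    for profile in MAX_INSTANCES_PER_A100_40GB:
        print(f"{profile}: {logical_gpus(4, profile)} logical GPUs")
```

With four cards, the coarsest actual split (two `3g.20gb` instances per GPU) gives 8 logical GPUs, and the finest split (seven `1g.5gb` instances per GPU) gives 28. Mixed layouts on a single card are also possible but are not modeled in this sketch.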

MIG is only supported on some NVIDIA GPUs, and the instance limits differ between models, but it is a feature many of our readers are using today.

iDRAC 9 Management

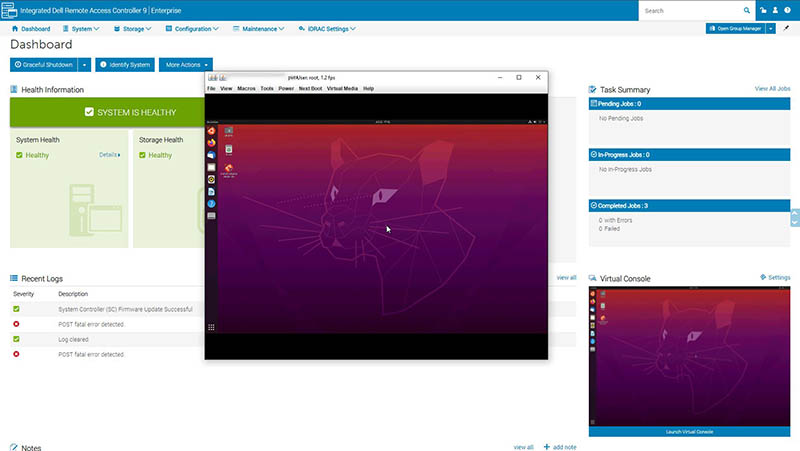

One of the biggest features of the PowerEdge R750xa is iDRAC support. For those unaware, iDRAC is Dell’s server-level management solution, and the BMC is called the iDRAC controller. Those in the Dell ecosystem likely already know what iDRAC is.

We are not going to spend much time on the iDRAC solution here. It is effectively similar to what we saw in our Dell EMC PowerEdge R7525 review, just with monitoring for the GPUs and the different fan/drive configurations. One notable difference between the R750xa and competitive systems is that many GPU systems are designed to maximize GPU/$ and performance/$. As such, features such as iKVM support usually tend to be included on GPU systems from vendors other than HPE and Lenovo. With Dell, one needs to upgrade a license to get that.

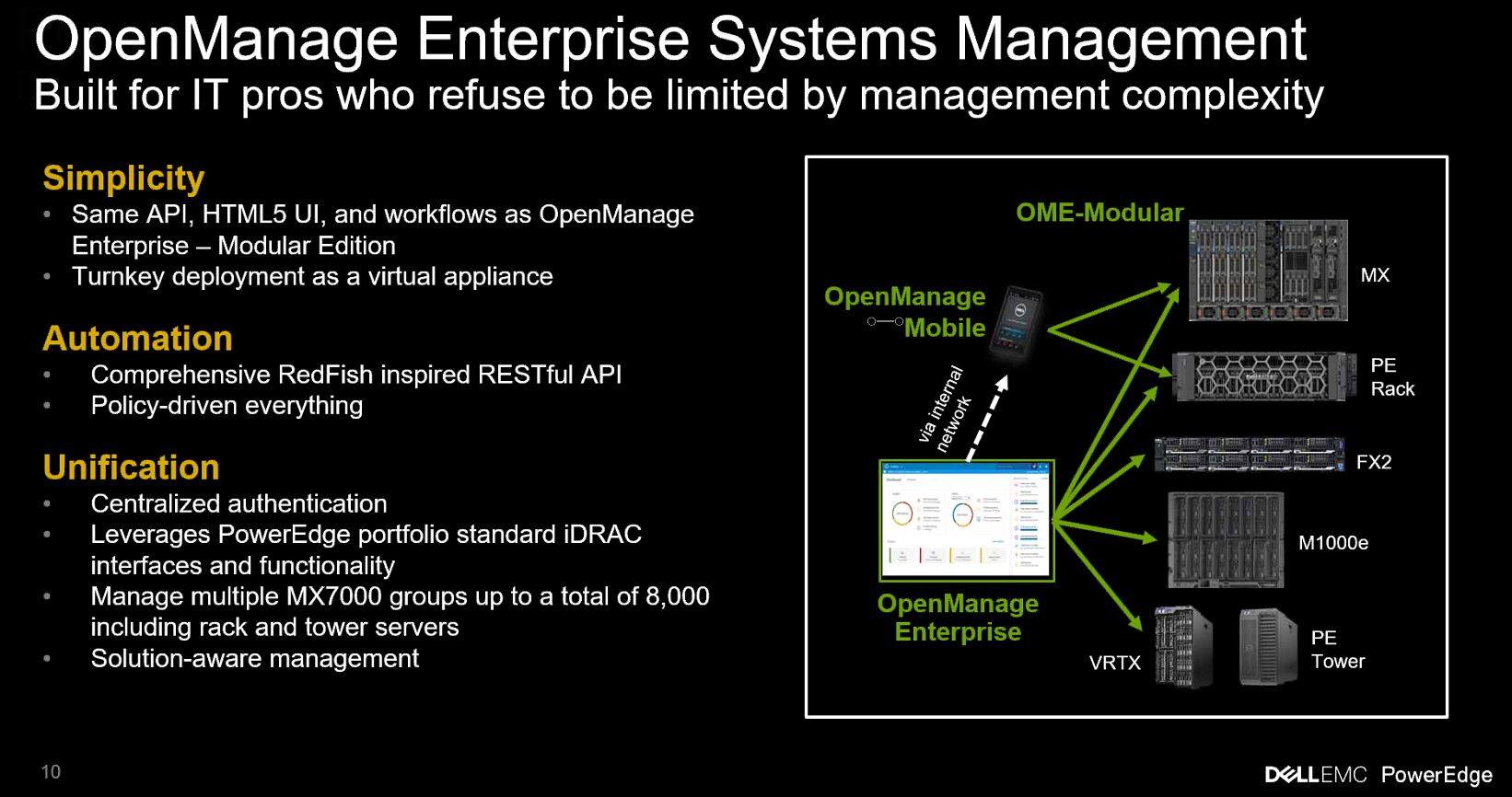

For most Dell shops, the big benefit is being able to manage the R750xa with OpenManage. That is the same management platform that can manage everything from the Dell EMC PowerEdge XE7100 storage platform to the Dell EMC PowerEdge MX and the Dell EMC PowerEdge C6525.

A major benefit for organizations with the R750xa is the ability to manage the GPU-based solution alongside their other servers.

Next, let us look at performance.

Dell EMC PowerEdge R750xa Performance

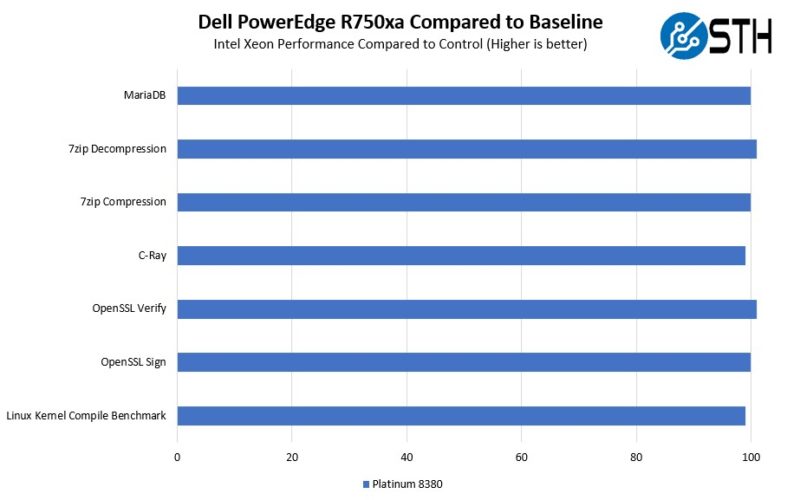

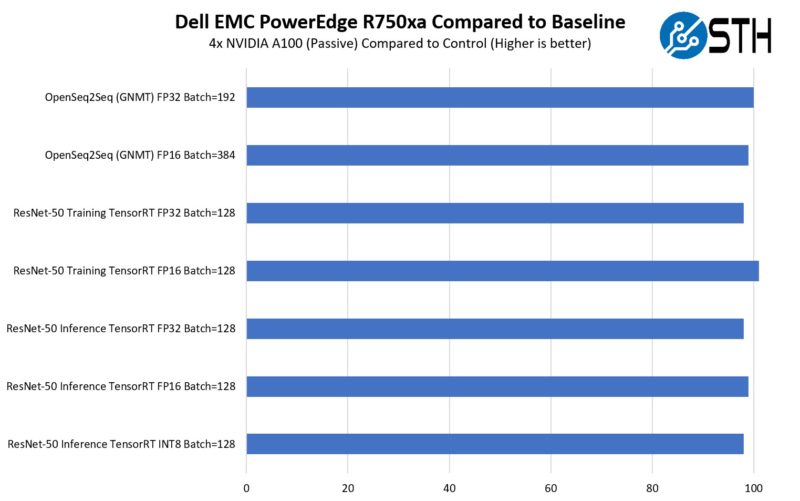

In GPU-accelerated systems, the biggest performance deltas tend to come from power and cooling. As a result, we loaded the system with 4x NVIDIA A100 40GB cards and two Intel Xeon Platinum 8380 CPUs to see how well the system could cool the components and therefore perform. We are using a 27.1C ambient temperature in the data center’s cold aisle.

In terms of CPU performance, the CPUs seem to be cooled about as well as in a standard 2U server without GPUs, which is the ideal result.

GPU performance was good. This is likely due to the GPUs being at the front of the system.

While this performance is good, it is not so earth-shattering that one would choose this over a whitebox system for performance alone. The performance is good enough to keep Dell shops with Dell, but it is not a machine that will cause a company to switch vendors.

Next, we are going to get to power consumption, the STH Server Spider, and our final words.

It’s nice to see a real review of Dell, not canned junk.