Dell EMC PowerEdge R7415 Hardware Overview

The Dell EMC PowerEdge R7415 is a 2U single-socket AMD EPYC server. In this section, we are going to show you around the server: how the R7415 uses much of the technology that makes PowerEdge a class-leading server line, how the hardware compares to the company’s Intel Xeon offerings, and where the R7415 design team made decisions for cost optimization and time to market.

Racking the Dell EMC PowerEdge R7415 is simple. It uses Ready Rails, so installation takes a minute or so. The front of the chassis looks like any other current generation 2U 24x 2.5″ bay Dell EMC server. You can configure the server with features like the LCD bezel and vary the number of NVMe and SAS3 drives that the chassis supports. From the front, this is signature Dell EMC functionality, down to the USB and monitor headers for cold aisle access.

Dell EMC PowerEdge R7415 Rear Features

The rear of the chassis is where we start to notice differences. For example, the rear I/O suite has two PCIe Gen3 full height riser slots. These are the first two of four PCIe expansion slots, which together expose 3x PCIe x16 and 1x PCIe x8 slots. That feature alone is more than an Intel Xeon Scalable CPU can do in a single socket server.

There are legacy serial and VGA ports along with two USB 3 ports for KVM carts in the data center. There is a dedicated iDRAC port. We are going to cover iDRAC on the server in more detail later in this review. The pair of 1GbE NIC ports (Broadcom) is a departure from the Intel Xeon offerings like the PowerEdge R740xd. These days most data centers are running 10GbE or faster networking, and Dell EMC uses a LOM for high-speed networking.

You can also see two low profile PCIe slots, giving us a total of two low profile and two full height slots via risers. Opposite those PCIe slots, you can see the first spring loaded screw in the chassis. We are going to call this “screw number one” and discuss it in more detail when we get to “screw number two.”

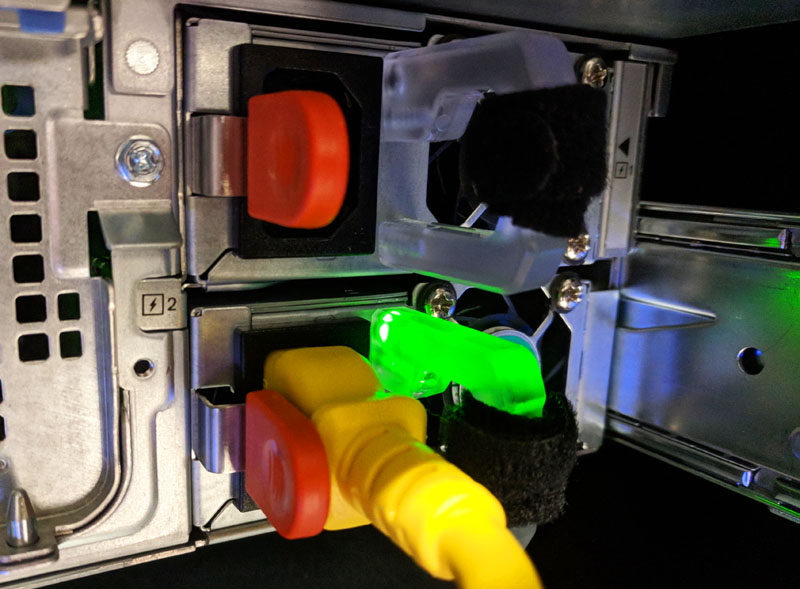

Our unit included 1.6kW power supplies. Like other PowerEdge servers, these are hot swap, and the LED lighting tells us which PSUs are receiving power for easy diagnostics.

Dell EMC PowerEdge R7415 Internals

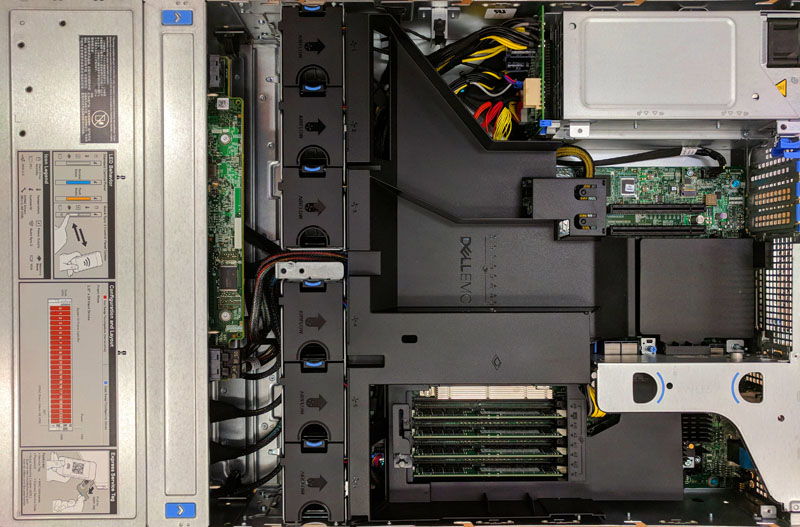

Moving inside the chassis, we find a well laid out 2U server. If you look at the power supplies, you will notice something immediately. The PSUs utilize a power distribution board instead of being plugged directly into the motherboard like on many other higher-end Dell EMC PowerEdge servers. We are going to discuss this in the next subsection, but it sets the tone for the PowerEdge R7415 being distinctly Dell while also being adapted to its target market.

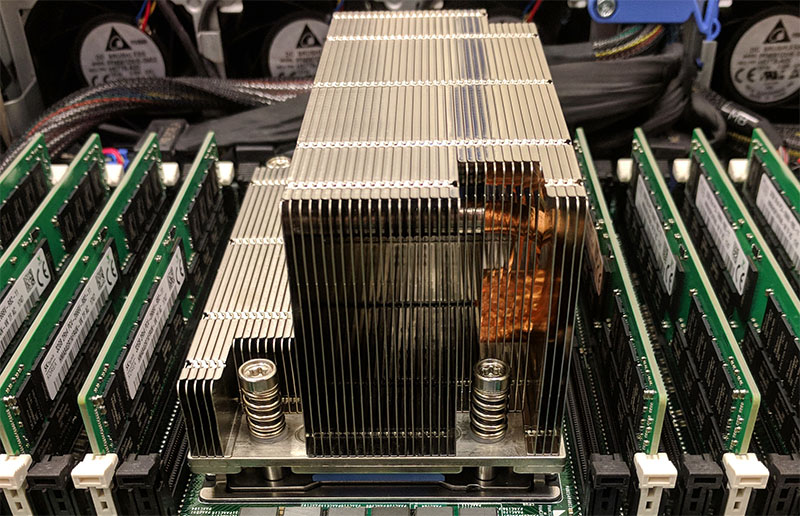

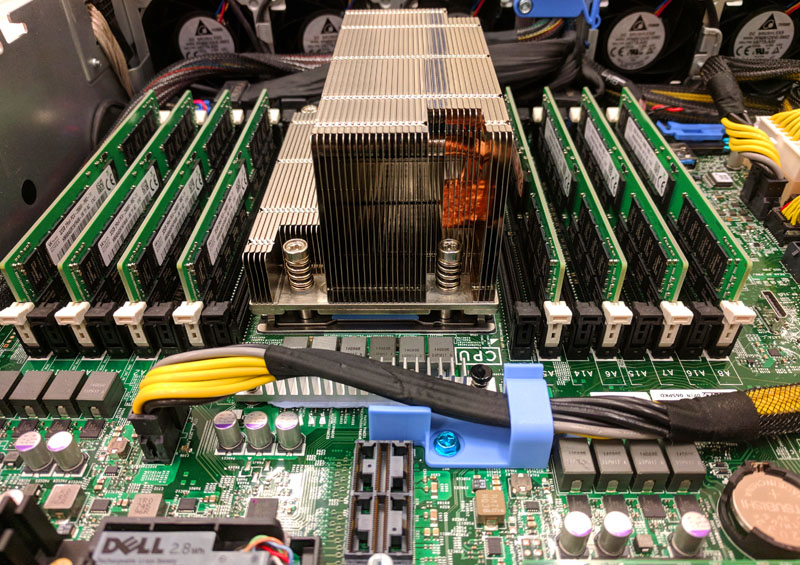

Air shrouds. These pieces are generally the least exciting part of our reviews. They direct airflow to critical components. Then, the PowerEdge team gave us this surprise. There is a large center channel that directs air from fans four and five over the CPU heatsink. You can see that this only covers part of the heatsink while a small portion is exposed. On either side, there are eight DIMM slots, all labeled. The difference is that eight (four populated in our test server) are exposed when the air shroud is present, and the other eight are completely covered.

Despite this asymmetry, underneath, the CPU is flanked by 16 symmetric DDR4-2666 DIMM slots with eight on either side. AMD EPYC 7001 series CPUs have 8-channel DDR4 memory controllers, which means they have about 33% more memory bandwidth than 6-channel Intel Xeon Scalable chips and more single socket memory bandwidth than dual Intel Xeon E5-2600 V4 systems (when the EPYC chips have DDR4-2666 RAM). Capacity can also reach up to 2TB using 16x 128GB DIMM configurations, which is significantly more than the 0.75TB per CPU capacity of most Intel Xeon Scalable CPUs. The actual AMD EPYC socket is covered by a wonderfully asymmetric heatsink to fit in that air shroud.
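For readers who want to check the memory figures above, here is a minimal sketch of the arithmetic. It assumes theoretical peak bandwidth (channels x transfer rate x 8 bytes per 64-bit transfer), one DIMM speed per platform, and DDR4-2400 for the dual Xeon E5-2600 V4 comparison. The function and variable names are ours for illustration, and real-world measured bandwidth will be lower than these peaks.

```python
# Theoretical peak DRAM bandwidth: channels x MT/s x 8 bytes per transfer.
# These are paper numbers, not measured results.
BYTES_PER_TRANSFER = 8  # one 64-bit DDR4 channel moves 8 bytes per transfer


def peak_bandwidth_gbs(channels, mt_per_s, sockets=1):
    """Return theoretical peak memory bandwidth in GB/s (decimal)."""
    return sockets * channels * mt_per_s * BYTES_PER_TRANSFER / 1000


epyc_7001 = peak_bandwidth_gbs(channels=8, mt_per_s=2666)
xeon_scalable = peak_bandwidth_gbs(channels=6, mt_per_s=2666)
# Assumption: E5-2600 V4 at its top supported speed of DDR4-2400, 4 channels per socket.
dual_e5_v4 = peak_bandwidth_gbs(channels=4, mt_per_s=2400, sockets=2)

# Capacity: 16 DIMM slots populated with 128GB modules.
max_capacity_gb = 16 * 128

print(f"EPYC 7001 (1P):      {epyc_7001:.1f} GB/s")
print(f"Xeon Scalable (1P):  {xeon_scalable:.1f} GB/s")
print(f"Dual E5-2600 V4:     {dual_e5_v4:.1f} GB/s")
print(f"EPYC advantage over Xeon Scalable: {epyc_7001 / xeon_scalable - 1:.0%}")
print(f"Max capacity: {max_capacity_gb} GB ({max_capacity_gb / 1024:.0f} TB)")
```

Under these assumptions, the single EPYC socket works out to roughly 170.6 GB/s against about 128.0 GB/s for a single Xeon Scalable socket and 153.6 GB/s for the dual E5-2600 V4 system, which is where the "about 33%" figure comes from.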

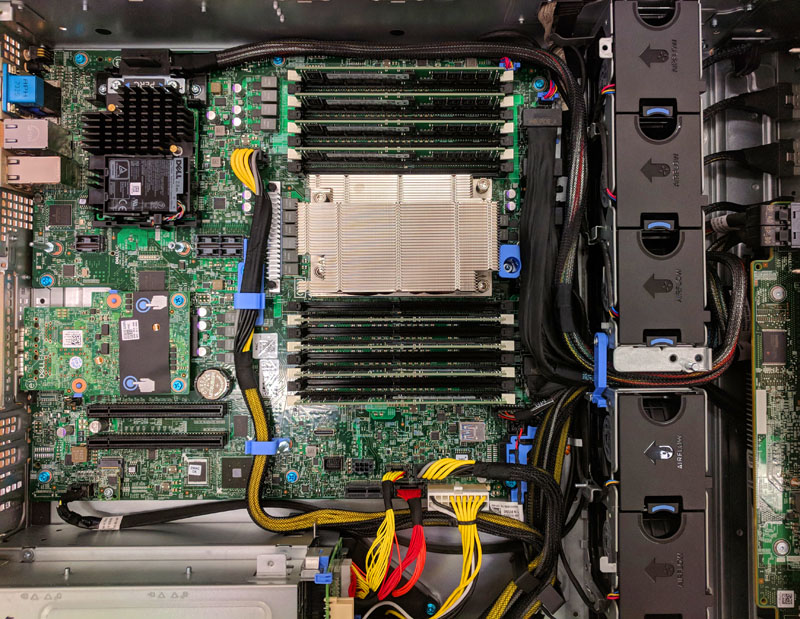

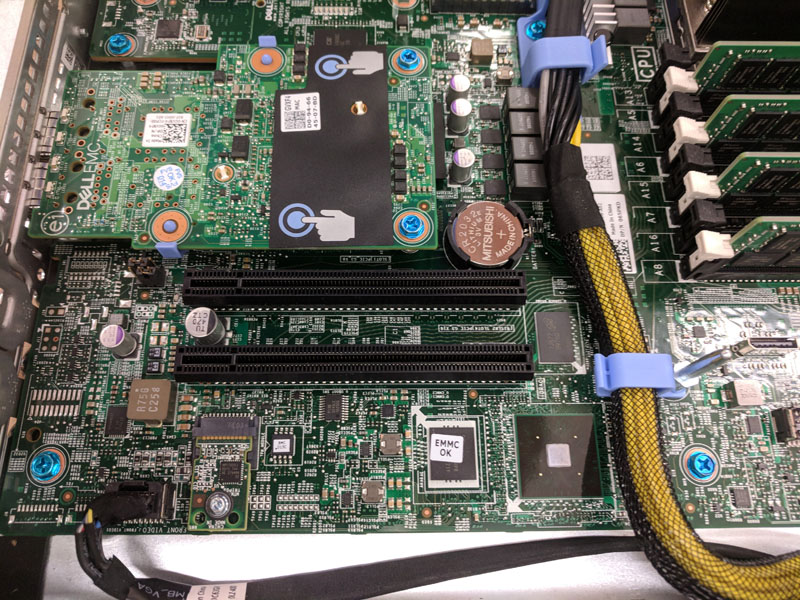

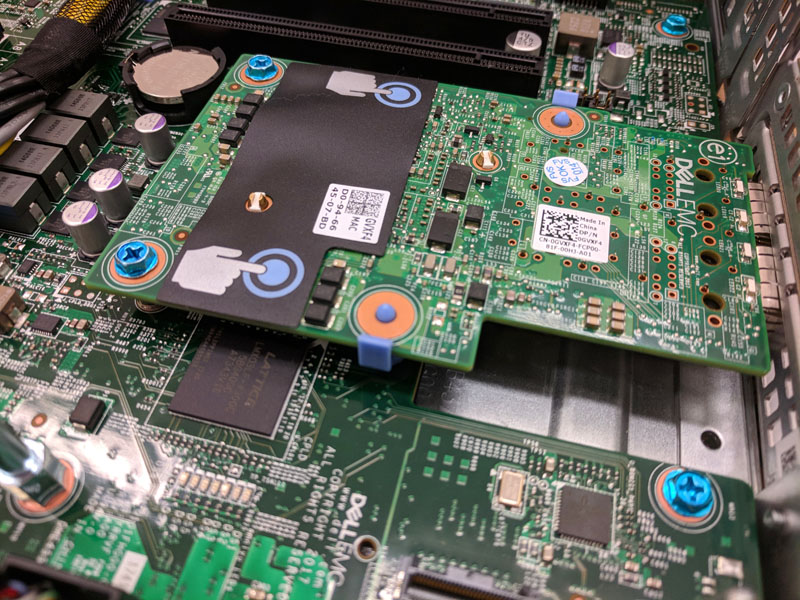

Looking at the system without the air shroud and PCIe riser shows us the basic layout of the system. One can see that a lot is going on here.

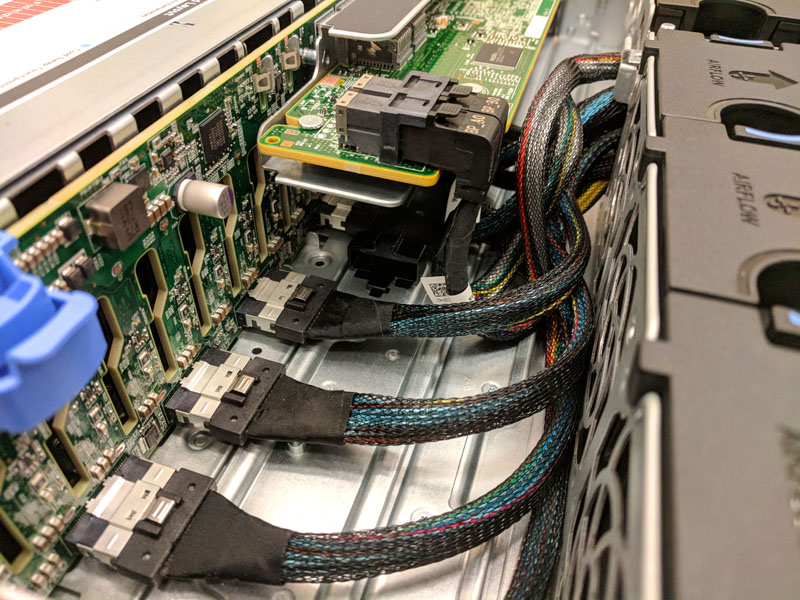

PCIe connectivity is a strong point of the AMD EPYC 7001 series (and future AMD EPYC platforms as well). A single AMD EPYC has access to 128 high speed I/O lanes which can be used for PCIe and SATA. That means that the PowerEdge R7415 can handle an array of NVMe drives while still leaving a large number of PCIe lanes for expansion. These are the x16 slots that we saw feed the low profile expansion slots at the rear of the chassis.
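To put the 128-lane figure in perspective, here is a rough lane budget sketch. It is not Dell EMC's actual lane map, which this review does not document. We assume the four rear slots described earlier (3x x16 and 1x x8), a hypothetical 12 direct-connect NVMe drives at x4 each, and we lump everything else (LOM, PERC, SATA, and so on) into the remainder.

```python
# Rough PCIe lane budget for a single-socket EPYC 7001 system.
# Illustrative only: the drive count and the idea that every device
# is direct-attached are assumptions, not a documented R7415 lane map.
TOTAL_LANES = 128  # high speed I/O lanes on one EPYC 7001 CPU

# Rear expansion slots as described in the review: 3x x16 and 1x x8.
slot_widths = [16, 16, 16, 8]
slot_lanes = sum(slot_widths)

# Assumption: 12 direct-connect NVMe drives, each using a x4 link.
nvme_drives = 12
lanes_per_nvme = 4
nvme_lanes = nvme_drives * lanes_per_nvme

used = slot_lanes + nvme_lanes
remaining = TOTAL_LANES - used

print(f"Expansion slots: {slot_lanes} lanes")
print(f"NVMe drives:     {nvme_lanes} lanes")
print(f"Remaining:       {remaining} lanes for LOM, RAID controller, SATA, etc.")
```

With these assumptions, slots and drives consume 104 lanes and 24 remain for onboard devices. The point of the sketch is simply that a single socket can feed both a large NVMe array and several x16 slots at once.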

Networking is provided by a fairly standard Dell EMC LOM with a Broadcom chipset. Our test server had dual SFP+ 10GbE networking via this card.

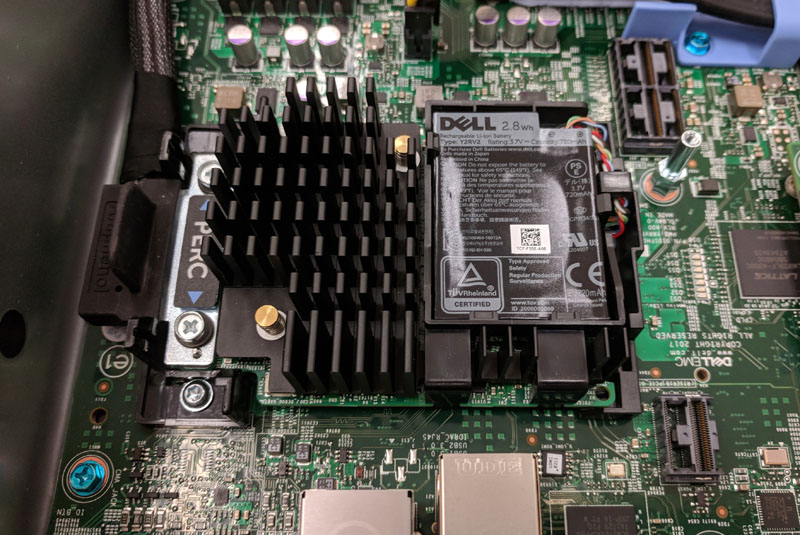

SAS3 12Gbps connectivity is provided by a PERC H740P Mini RAID controller. This controller even has space for a battery and does not take up a valuable PCIe slot.

Dell EMC utilizes one of their awesome backplanes with SAS3 and NVMe capabilities mixed along the 24x 2.5″ hot swap bay array.

The system itself is well laid out and thoroughly PowerEdge. We wanted to focus on a few of the Dell EMC PowerEdge R7415 features that you may not be accustomed to seeing on the company’s Intel Xeon servers, but make sense in this target market.

Dell EMC PowerEdge R7415 Cost Saving Features

Like many new products on the market, the Dell EMC PowerEdge R7415 is seeking to gain an edge in the price/performance category. Make no mistake; the PowerEdge R7415 still uses high-quality components everywhere, down to the wall of Delta fans. At the same time, the designers optimized for cost and time to market. One example is visible when we zoom out from the CPU and DIMM area: one will notice a power cable crossing the motherboard. This cable is something absent in platforms like the PowerEdge R640 and PowerEdge R740xd.

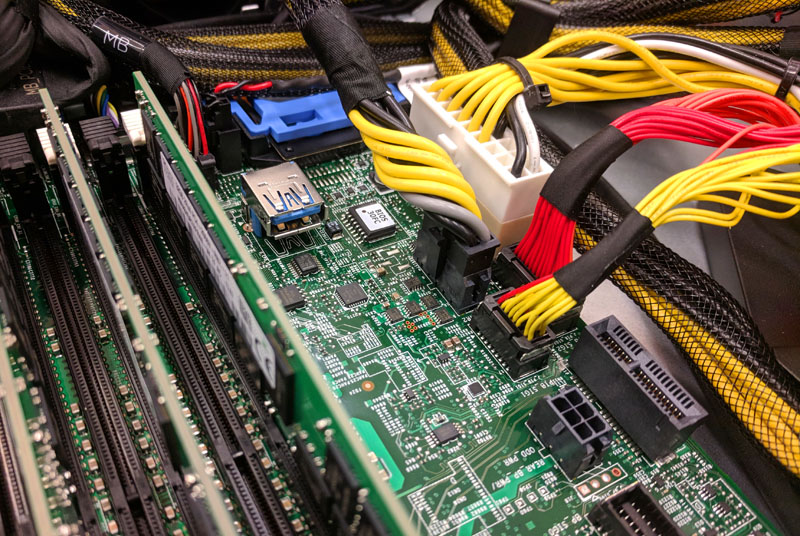

Now we get to “screw number two” which secures the PCIe riser card in place. If you are accustomed to tool-less servicing, the PowerEdge R7415 has a few areas where you will need to pull out the screwdriver. It is not a big deal, but it is there.

Near screw number two, you can see well-fitted air shrouds that direct air over the network module. These are the types of touches that show how, even when optimizing for cost, the PowerEdge team still produces a higher quality product than some of the white box implementations we have seen. The Dell EMC attention to detail is still there.

Like other PowerEdge servers, there is an internal USB 3.0 Type A header. This header is a required feature for those who use embedded OSes or license key USB dongles. Near that header is an array of power cables. We mentioned earlier that the PSUs do not plug directly into the motherboard. This is one of the impacts of that design. Frankly, it is a reasonable tradeoff to make in order to speed time to market. Designs like this can also lower the number of PCB layers and the size of the PCB, which can have a meaningful impact on cost.

While it is fair to say that the Dell EMC PowerEdge R7415 is a first-generation product that has a handful of cost optimization design choices, it is not fair to say it is inferior. We would have zero qualms about taking the PowerEdge R7415 out of the lab and putting it into our production cluster. In fact, as we are going to show later, we think that these cost optimization steps make sense and Dell EMC came to market with a product that has features its competitors cannot match. These combine to make the PowerEdge R7415 the ultimate 2U single socket AMD EPYC platform at this time.

Next, we are going to discuss a must-cover topic with AMD EPYC 7001 servers: topology. This is one of the most important topics to understand if you want to know the difference between an Intel Xeon and AMD EPYC server. After the topology discussion, we are going to continue with performance testing, power consumption, and our final thoughts.

Patrick,

Does the unit support SAS3/NVMe in any drive slot/bay, or is the chassis preconfigured?

You can configure for all SAS/SATA, all NVMe, or a mix. Check the configurator for the up-to-date options.

@BinkyTO

It depends on how you have the chassis configured. It sounds like they have the 24 drive with max 8 NVMe disk configuration. With that the NVMe drives need to be in the last 8 slots.

How come nobody else mentioned that hot swap feature in their reviews? Great review as always STH. This will help convince others at work that we can start trying EPYC.

The system tested has a 12 + 12 backplane. 12 drives are SAS/SATA and 12 are universal supporting either direct connect NVMe or SAS/SATA. Thanks Patrick and Serve the Home for this very thorough review… Dell EMC PowerEdge product management team

These reviews are gems on the Internet. They’re so comprehensive. Better than many of the paid reports we buy.

I take it the single 8GB DIMM and epyc 7251 config that’s on sale for $2.1k right now isn’t what you’d get.

You’ve raved about the 7551p but I’d say the 7401p is the best perf/$

Great review! Any chance of a cooling analysis (with thermal imaging)?

Impressive review

Koen – we do thermal imaging on some motherboard reviews. On the systems side, we have largely stopped thermal imaging since getting inside the system while it is racked in a data center is difficult. Also, removing the top to do imaging impacts airflow through the chassis which changes cooling.

The PowerEdge team generally has a good handle on how to cool servers and we did not see anything in our testing, including 100GbE NIC cooling, to suggest thermals were anything other than acceptable.

Great review.

Thanks for the reply Patrick. Understand the practical difficulties and appreciate you sharing your thoughts on the cooling. Fill it up with hot swap nvme 2.5″ + add-in cards and you got a serious amount of heat being generated!

Great Review! Many thanks, Patrick!