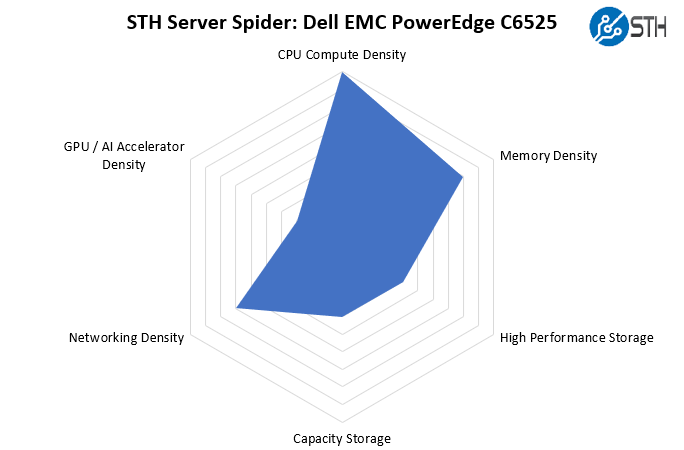

STH Server Spider: Dell EMC PowerEdge C6525

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the parameters a server is targeted at.

As a one kilo-thread server, the PowerEdge C6525 is just about the densest 2U of compute you can purchase today. It far outpaces the Intel Xeon alternatives given the raw core counts of the AMD EPYC 7002 series (and, soon, the 7003 series). With the OCP NIC 3.0 slot and PCIe Gen4 x16 slots, there is also a solid amount of expansion. Our test system came with a single PCIe x16 riser, but Dell advertises up to two, and those slots alone add capacity for more I/O. Because PCIe Gen4 doubles the per-lane bandwidth of PCIe Gen3, pairing AMD EPYC Rome processors with Dell’s platform effectively gives each slot double the bandwidth found on current-generation Intel Xeon (Cascade Lake) servers, which are limited to PCIe Gen3.

Final Words

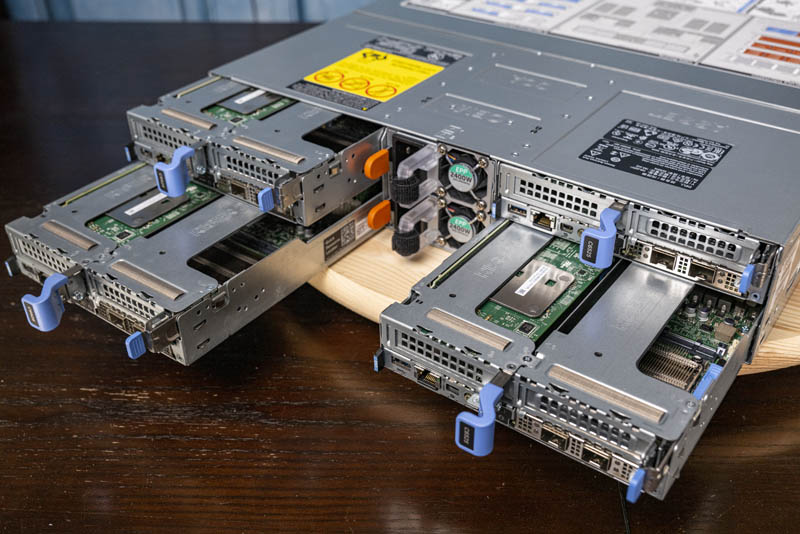

The Dell EMC PowerEdge C6525 pushes a lot of boundaries. It is one of the first 2U systems that we are calling a kilo-thread server because it scales up to 1024 threads using AMD EPYC 7002 “Rome” CPUs. Presumably, the EPYC 7003 “Milan” CPUs coming later in 2020 should also work in this system if Dell enables them.
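For reference, the 1024-thread figure works out as follows, assuming four nodes with two sockets each, the 64-core EPYC 7002 SKUs at the top of the stack, and SMT enabled (two threads per core):

$$
\underbrace{4}_{\text{nodes}} \times \underbrace{2}_{\text{sockets/node}} \times \underbrace{64}_{\text{cores/socket}} \times \underbrace{2}_{\text{threads/core}} = 1024 \text{ threads}
$$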

Beyond raw core counts, it is one of only a handful of 2U4N AMD EPYC servers on the market that also offer liquid-cooling options, which means it can support AMD EPYC 7H12 CPUs even in these ultra-dense configurations. For high-performance computing centers, this offers lots of cores, cache, and memory bandwidth along with the density.

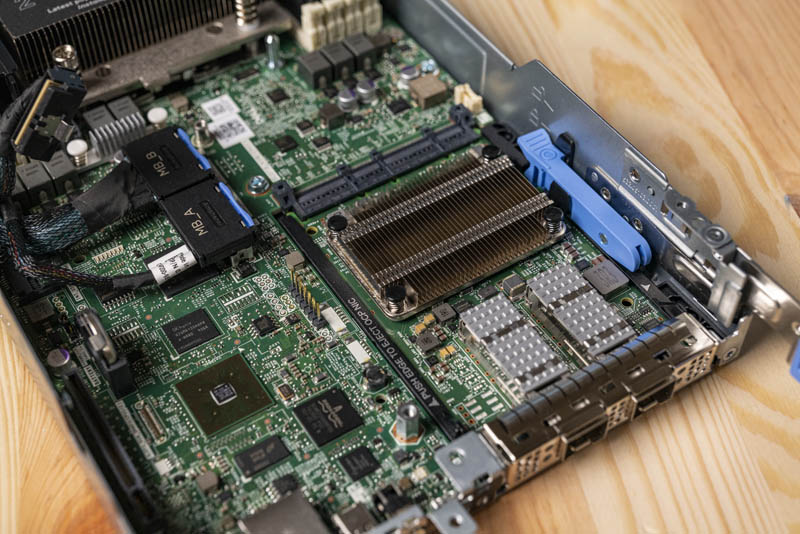

For enterprise buyers, a big feature may be the PCIe Gen4 slots, which effectively double bandwidth over PCIe Gen3. In a space-constrained 2U4N environment, one can, for example, attach external DAS storage using PCIe Gen4 controllers and get twice the bandwidth to the node. Likewise, a single PCIe Gen4 x16 slot can feed two 100GbE ports at line rate, or even one 200GbE port. That is not possible with PCIe Gen3 x16. For those disaggregating their environments, adding massive networking bandwidth is often a priority, as is achieving higher compute density.
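A quick back-of-the-envelope check shows why the Gen3 x16 slot falls short. This is a minimal sketch that uses only the per-lane transfer rates (8 GT/s for Gen3, 16 GT/s for Gen4) and the 128b/130b line encoding both generations use; it ignores TLP header and flow-control overhead, so real throughput is somewhat lower. The function names are illustrative, not from any tool.

```python
# Approximate one-direction PCIe bandwidth from transfer rate and encoding.
# Protocol overhead (TLP headers, flow control) is deliberately ignored.

GT_PER_S = {3: 8.0, 4: 16.0}  # gigatransfers per second, per lane
ENCODING = 128 / 130          # 128b/130b encoding efficiency (Gen3 and Gen4)


def pcie_gbps(gen: int, lanes: int) -> float:
    """Approximate usable bandwidth in Gbit/s, one direction."""
    return GT_PER_S[gen] * ENCODING * lanes


def fits(gen: int, lanes: int, demand_gbps: float) -> bool:
    """True if the link's raw encoded bandwidth covers the demand."""
    return pcie_gbps(gen, lanes) >= demand_gbps


if __name__ == "__main__":
    dual_100gbe = 2 * 100.0  # two 100GbE ports at line rate
    for gen in (3, 4):
        bw = pcie_gbps(gen, 16)
        print(f"Gen{gen} x16: ~{bw:.0f} Gb/s, 2x100GbE fits: {fits(gen, 16, dual_100gbe)}")
```

Two 100GbE ports need about 200 Gb/s of payload before Ethernet and PCIe protocol overhead, which exceeds the roughly 126 Gb/s a Gen3 x16 link can carry but fits within the roughly 252 Gb/s of Gen4 x16.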

One of the biggest results from our testing is that performance stayed within testing variance of our baseline while power consumption was around 2-3% lower in the “STH Sandwich” test configuration we use to simulate dense racks of these servers. That is a very impressive result because it adds another facet to TCO calculations when consolidating from 1U single-node to 2U 4-node platforms. Not only does one need a quarter of the power cables, but there are power savings to be had as well.
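The TCO point can be made concrete with a small calculation. This is a hypothetical sketch: it assumes each 1U server and the 2U4N chassis both use two redundant power supplies, and the 500 W per-node draw is an illustrative figure, not an STH measurement. Only the 2% savings fraction comes from the low end of the range above.

```python
# Hypothetical TCO sketch: four 1U single-node servers vs. one 2U 4-node chassis.
# PSU counts and per-node wattage are illustrative assumptions, not test data.

HOURS_PER_YEAR = 24 * 365


def power_cables(chassis: int, psus_per_chassis: int) -> int:
    """One power cable per PSU."""
    return chassis * psus_per_chassis


def annual_energy_savings_kwh(watts_per_node: float, nodes: int,
                              savings_fraction: float) -> float:
    """kWh saved per year if the nodes draw savings_fraction less power."""
    baseline_kwh = watts_per_node * nodes * HOURS_PER_YEAR / 1000
    return baseline_kwh * savings_fraction


if __name__ == "__main__":
    cables_1u = power_cables(chassis=4, psus_per_chassis=2)    # 8 cables
    cables_2u4n = power_cables(chassis=1, psus_per_chassis=2)  # 2 cables
    print(f"Power cables: {cables_1u} (4x 1U) vs {cables_2u4n} (2U4N)")
    # Assumed 500 W per node, low end (2%) of the observed savings range.
    kwh = annual_energy_savings_kwh(watts_per_node=500, nodes=4, savings_fraction=0.02)
    print(f"~{kwh:.0f} kWh/year saved per chassis at an assumed 500 W/node")
```

Plugging in a site’s own measured wattage and electricity price turns the second figure into a per-rack dollar estimate.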

While we love the transition to OCP NIC 3.0, there are still some areas that can be improved in future iterations. Needing to both release a latch and remove the risers to service the NIC seems like a painful, redundant exercise. That brings us to Dell’s riser design, which certainly feels older than what modern competitors offer. Not having a chassis-level management controller also feels like a more traditional design than what we are seeing in newer systems. We would also prefer it if Dell found a way to balance platform security with the circular economy and the environmental impact of its systems when using AMD EPYC processors.

Overall, the Dell EMC PowerEdge C6525 is a very well-designed system. STH’s first 2U4N servers were Dell C6100s, and the STH website ran on C6100s years ago, so there is a nostalgic connection to this line. For those who want high compute density and operational efficiency, the PowerEdge C6525 offers management and hardware tempered by over a decade of experience making 2U 4-node servers.

“We did not get to test higher-end CPUs since Dell’s iDRAC can pop field-programmable fuses in AMD EPYC CPUs that locks them to Dell systems. Normally we would test with different CPUs, but we cannot do that with our lab CPUs given Dell’s firmware behavior.”

I am astonished by just how much of a gargantuan dick move this is from Dell.

Could you elaborate, here or in a future article, on how blowing some OTP fuses in the EPYC CPU so that it will only work on Dell motherboards improves security? As far as I can tell, anyone stealing the CPUs out of a server simply has to steal some Dell motherboards to plug them into as well. Maybe there will also be third-party motherboards, not sold in the US, that take these CPUs.

I’m just curious to know how this improves security.

This is an UNREAL review compared to the Principled Technologies junk Dell pushes all over. I’m loving the depth on the competitive landscape and even on just using the system. That’s insane.

Cool system too!

“Dell’s iDRAC can pop field-programmable fuses in AMD EPYC CPUs that locks them to Dell systems”?? I’m not quickly finding any information on that. Please point to it, or even better, do an article on it; it sounds horrible.

Y’all are crazy on the depth of these reviews.

Patrick,

It won’t be long before the lab could be augmented with those Dell bound AMD EPYC Rome CPUs that come at a bargain eh? ;)

I’m digging this system. I’ll also agree with the earlier commenters that STH is on another level of depth and insights. Praise Jesus that Dell still does this kind of marketing. Every time my Dell rep sends me a Principled Technologies paper, I delete it and check whether STH has done the system yet. It’s good you guys are great at this, because you’re the only ones doing it.

You’ve got great insights in this review.

To whom it may concern, Dell’s explanation:

Layer 1: AMD EPYC-based System Security for Processor, Memory and VMs on PowerEdge

The first generation of the AMD EPYC processors have the AMD Secure Processor – an independent processor core integrated in the CPU package alongside the main CPU cores. On system power-on or reset, the AMD Secure Processor executes its firmware while the main CPU cores are held in reset. One of the AMD Secure Processor’s tasks is to provide a secure hardware root-of-trust by authenticating the initial PowerEdge BIOS firmware. If the initial PowerEdge BIOS is corrupted or compromised, the AMD Secure Processor will halt the system and prevent OS boot. If no corruption, the AMD Secure Processor starts the main CPU cores, and initial BIOS execution begins.

The very first time a CPU is powered on (typically in the Dell EMC factory) the AMD Secure Processor permanently stores a unique Dell EMC ID inside the CPU. This is also the case when a new off-the-shelf CPU is installed in a Dell EMC server. The unique Dell EMC ID inside the CPU binds the CPU to the Dell EMC server. Consequently, the AMD Secure Processor may not allow a PowerEdge server to boot if a CPU is transferred from a non-Dell EMC server (and CPU transferred from a Dell EMC server to a non-Dell EMC server may not boot).

Source: “Defense in-depth: Comprehensive Security on PowerEdge AMD EPYC Generation 2 (Rome) Servers” – security_poweredge_amd_epyc_gen2.pdf

PS: I don’t work for Dell, and also don’t purchase their hardware – they have some great features, and some unwanted gotchas from time to time.
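To make the flow in Dell’s description easier to follow, here is a toy Python model: authenticate the BIOS before releasing the main cores, then bind the CPU to the first vendor platform it boots in. It is a hypothetical sketch, not AMD’s or Dell’s implementation. The class and method names are invented for illustration, a SHA-256 digest comparison stands in for the real public-key signature check, and the one-time-programmable fuse is simply an attribute that can be written once.

```python
import hashlib
from typing import Optional


class SecureProcessorModel:
    """Toy model of the boot flow described in Dell's whitepaper.

    Assumptions (not the real hardware):
    - BIOS authentication is a SHA-256 digest comparison, standing in
      for a public-key signature check.
    - The OTP fuse is an attribute that may only be written once.
    """

    def __init__(self) -> None:
        self.vendor_fuse: Optional[str] = None  # unprogrammed until first boot

    def _burn_fuse(self, vendor_id: str) -> None:
        if self.vendor_fuse is not None:
            raise RuntimeError("fuse already programmed")
        self.vendor_fuse = vendor_id

    def boot(self, platform_vendor_id: str, bios_image: bytes,
             trusted_digest: str) -> str:
        # Main cores stay in reset until every check below passes.
        if hashlib.sha256(bios_image).hexdigest() != trusted_digest:
            return "halt: BIOS failed authentication"
        if self.vendor_fuse is None:
            # First power-on: permanently bind this CPU to the platform vendor.
            self._burn_fuse(platform_vendor_id)
        elif self.vendor_fuse != platform_vendor_id:
            return "halt: CPU bound to another vendor"
        return "release main cores"


if __name__ == "__main__":
    bios = b"vendor-signed BIOS image"
    digest = hashlib.sha256(bios).hexdigest()
    cpu = SecureProcessorModel()
    print(cpu.boot("dell", bios, digest))   # release main cores (fuse now "dell")
    print(cpu.boot("other", bios, digest))  # halt: CPU bound to another vendor
```

The model also shows the commenter’s point above: the binding blocks reuse of the CPU in another vendor’s board, but it does not stop someone who moves the CPU into another board from the same vendor.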

What would be nice is some pricing on this.

I wish the 1GbE management NIC would just go away. There is no need to have one per blade. It would be simple for Dell to route the connections to a dumb unmanaged switch chip in that center compartment and then run a single port for the chassis. Wiring up lots of cables to each blade is messy. Better yet, place two ports to allow daisy-chaining every chassis in a rack and eliminate the management switch entirely.

Holy mother of deer… Dell has once again pushed vendor lock and DRM way too far! Unbelievable!

It’s part of AMD’s Secure Processor, and it allows the CPU to verify that the BIOS isn’t modified or corrupted; if it is, the CPU refuses to POST. It’s not exactly an eFuse thing, more of a cryptographic signing scheme where the Secure Processor validates that the computer is running a trusted BIOS image. The iDRAC 9 can even validate the BIOS image while the system is running, and it can reflash the BIOS back to a non-corrupt, trusted image. On the first boot of an EPYC processor in a Dell EMC system, the CPU gets a key to verify with; this is what can stop the processor from working in other systems as well.

Honestly, there is no reason Dell can’t have it both ways with iDRAC. iDRAC is mostly software and could be virtualized, with each VM having access to one set of the hardware interfaces. This could cut their costs by three, roughly, while giving them their current management solution. After all, how often do you access all four at once?

How much does this PowerEdge C6525 server cost?