Perhaps the question we are asked most often at STH is: “Does WordPress hosting really require server hardware?” Strictly speaking, you can run WordPress just fine on modern desktop PCs, and even on laptops. Just because something works does not make it a good idea. We often find that those who buy consumer hardware to save a few dollars up front end up migrating their installations to server hardware later. Here is why you want server hardware for WordPress hosting.

Server v. Workstation Hardware for WordPress Hosting

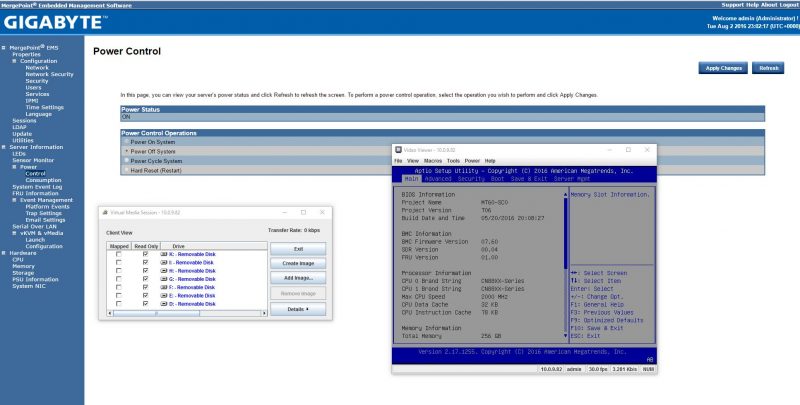

The number one reason to get server rather than workstation hardware is IPMI and iKVM functionality. When something goes terribly wrong, you can easily diagnose what is happening remotely. Here is an example of a Gigabyte server where we are using iKVM to troubleshoot a BIOS setting:

This capability to access a server, before booting to the OS, is a life-saver for remote management.

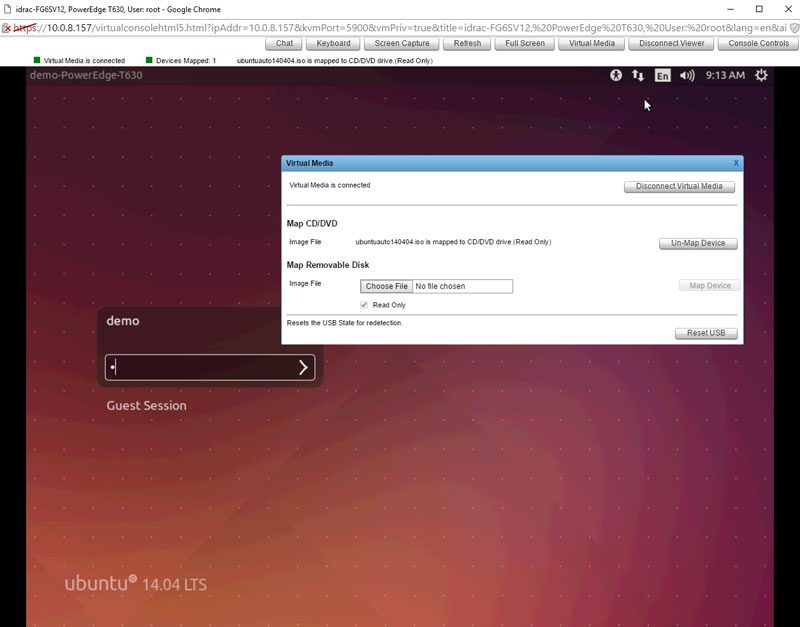

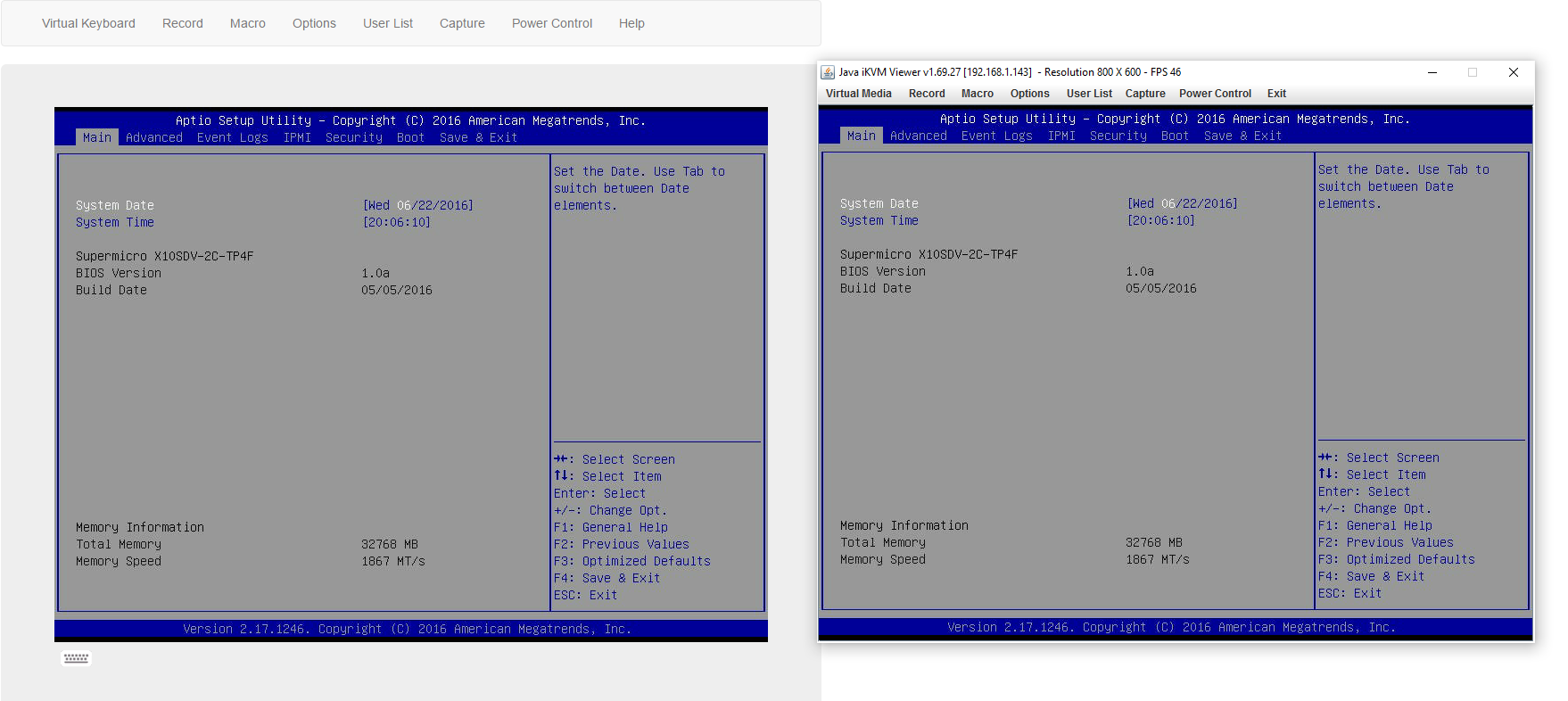

Newer platforms from companies like Dell and Supermicro have HTML5 iKVM clients, which remove the need for Java.

These features allow you to install OSes remotely, which is handy if you ever need to rebuild a machine. IPMI features also let you power machines on and off remotely and get a remote serial console. You can even monitor sensors to see whether a component is overheating, which can lead to failure.
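As a minimal sketch of that kind of sensor monitoring, the Python below builds `ipmitool` commands for a remote BMC and flags temperature sensors at or above their upper critical threshold. It assumes `ipmitool` is installed, the BMC is reachable over the `lanplus` interface, the password is in the `IPMI_PASSWORD` environment variable (read via `-E`), and that `ipmitool sensor` prints pipe-delimited columns in the order name, reading, units, status, then the six thresholds LNR, LCR, LNC, UNC, UCR, UNR. The helper names are hypothetical; check the output format on your own BMC before relying on it.

```python
import subprocess
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Sensor:
    name: str
    reading: Optional[float]
    units: str
    status: str
    upper_critical: Optional[float]


def ipmitool_cmd(host: str, user: str, *args: str) -> List[str]:
    """Build an ipmitool argument list for a remote BMC (lanplus interface).

    -E makes ipmitool read the password from IPMI_PASSWORD, so it does
    not show up on the command line.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *args]


def _num(field: str) -> Optional[float]:
    # Thresholds may be "na"; discrete sensors report hex such as "0x00".
    try:
        return float(field)
    except ValueError:
        return None


def parse_sensors(text: str) -> List[Sensor]:
    """Parse `ipmitool sensor` output (assumed 10 pipe-delimited columns)."""
    sensors = []
    for line in text.splitlines():
        cols = [c.strip() for c in line.split("|")]
        if len(cols) < 10:
            continue  # skip blank or unexpected lines
        # cols[8] is the assumed upper critical (UCR) threshold column.
        sensors.append(Sensor(cols[0], _num(cols[1]), cols[2], cols[3], _num(cols[8])))
    return sensors


def overheating(sensors: List[Sensor]) -> List[str]:
    """Names of temperature sensors at or above their upper critical threshold."""
    return [
        s.name
        for s in sensors
        if s.units == "degrees C"
        and s.reading is not None
        and s.upper_critical is not None
        and s.reading >= s.upper_critical
    ]


def read_sensors(host: str, user: str) -> List[Sensor]:
    """Query a BMC over the network; requires ipmitool and network access."""
    result = subprocess.run(
        ipmitool_cmd(host, user, "sensor"), capture_output=True, text=True, check=True
    )
    return parse_sensors(result.stdout)
```

Run from cron, something like `overheating(read_sensors(bmc_host, bmc_user))` could feed an alert. The same `ipmitool_cmd` helper can build `chassis power on`, `chassis power off`, or `sol activate` (the serial-over-LAN console) invocations.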

To give you an idea of the impact: if a problem occurs, remote management can save hundreds of dollars in remote hands fees.

Server Platform Memory Support

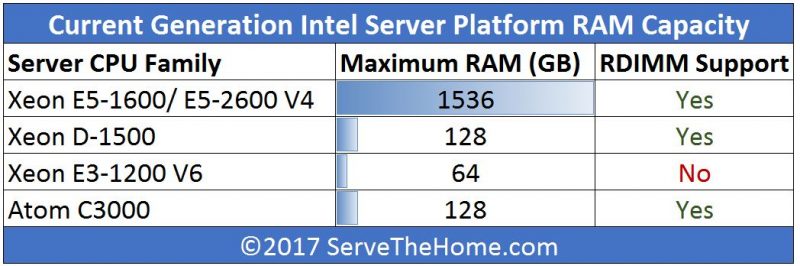

While remote management is the top feature, the other side of this equation is ECC memory. ECC memory detects memory errors and, in some cases, corrects them. That means systems are less likely to crash and cause downtime. Aside from ECC, server platforms have been heavily influenced by applications like virtualization and in-memory analytics, so they also support more memory than the ~16GB – 64GB maximum we see on consumer-grade platforms.
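To make “detect and, in some cases, correct” concrete, here is a toy single-error-correcting, double-error-detecting (SECDED) code in Python: an extended Hamming(8,4) code that protects 4 data bits with 4 check bits. It illustrates the principle only. Server memory controllers apply wider SECDED-style codes (commonly 8 check bits per 64 data bits), and exact vendor schemes vary.

```python
from typing import List, Tuple


def encode(data: List[int]) -> List[int]:
    """Encode 4 data bits as an 8-bit extended Hamming (SECDED) codeword.

    Positions 1-7 follow classic Hamming(7,4) with parity bits at 1, 2 and 4.
    Index 0 holds an overall parity bit used to tell 1-bit from 2-bit errors.
    """
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4  # covers positions 3, 5, 7
    p2 = d1 ^ d3 ^ d4  # covers positions 3, 6, 7
    p4 = d2 ^ d3 ^ d4  # covers positions 5, 6, 7
    word = [0, p1, p2, d1, p4, d2, d3, d4]
    word[0] = sum(word) % 2  # make the total parity of all 8 bits even
    return word


def decode(word: List[int]) -> Tuple[str, List[int]]:
    """Return (status, data bits); status is ok, corrected, or uncorrectable."""
    w = list(word)
    syndrome = 0
    for pos in range(1, 8):
        if w[pos]:
            syndrome ^= pos  # XOR of set positions is 0 for a valid codeword
    parity_odd = sum(w) % 2 == 1
    if syndrome == 0 and not parity_odd:
        status = "ok"
    elif parity_odd:
        # Odd parity: assume a single flipped bit at position `syndrome`.
        # A syndrome of 0 means the overall parity bit (index 0) flipped.
        w[syndrome] ^= 1
        status = "corrected"
    else:
        # Even parity but non-zero syndrome: two bits flipped. This is
        # detectable but not correctable.
        return "uncorrectable", []
    return status, [w[3], w[5], w[6], w[7]]
```

For example, flipping `cw[5]` in `cw = encode([1, 0, 1, 1])` decodes as `("corrected", [1, 0, 1, 1])`, while flipping any two bits returns `("uncorrectable", [])`. Three or more flips can be mis-corrected, which is why ECC lowers the risk of memory errors rather than eliminating it.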

Here is what you need to know for current Intel server CPUs in single-socket configurations:

You can read a bit about UDIMM v. RDIMM ECC memory in our guide, but here is the summary: you always want RDIMM support if possible. While there are some niche cases where RDIMMs may noticeably impact performance, WordPress is not one of them. What WordPress benefits from is more memory, and RDIMM platforms let you install more of it.
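As a rough, hypothetical illustration of why capacity matters, the Python sketch below estimates how many PHP-FPM workers fit in RAM once fixed reservations for the OS, the database buffer pool, and an object cache are set aside. Every figure is an assumption chosen for the example, not an STH measurement; measure per-worker memory on your own sites before sizing anything.

```python
def max_php_workers(total_mib: int, os_mib: int, db_mib: int,
                    cache_mib: int, per_worker_mib: int) -> int:
    """Estimate how many PHP-FPM workers fit in RAM (all inputs in MiB).

    Rule of thumb: (RAM left after fixed reservations) / (memory per worker).
    """
    if per_worker_mib <= 0:
        raise ValueError("per_worker_mib must be positive")
    available = total_mib - os_mib - db_mib - cache_mib
    return max(available, 0) // per_worker_mib


# Hypothetical reservations: 2 GiB OS, 4 GiB database buffer pool,
# 1 GiB object cache, and 128 MiB per PHP worker.
for ram_gib in (16, 64, 256):
    workers = max_php_workers(ram_gib * 1024, 2048, 4096, 1024, 128)
    print(f"{ram_gib:>3} GiB RAM -> ~{workers} PHP workers")
```

With these assumed inputs, the loop reports 72, 456, and 1992 workers; a number like this is the kind of value one might feed into PHP-FPM's `pm.max_children`. In practice, much of the extra RAM would also go to a larger database buffer pool and cache rather than only to more workers.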

Honorable mentions: other chips you may consider buying, but that we do not recommend:

- Cavium ThunderX (ARM64): RDIMM

- AMD Ryzen: UDIMM (limited ECC)

- Intel Core i7 Series: UDIMM (non-ECC)

AMD Naples is the big upcoming launch and will have RDIMM support, but at the time of this writing, you cannot buy one yet. The Cavium ThunderX platform uses ARM64 instead of the traditional x86 Intel/AMD architecture and can run WordPress well. The caveat is that tuning a hosting stack on ARM requires a significantly larger up-front investment. If you are just getting started with your own servers, we suggest an Intel or AMD based machine.

There are other nice features, such as higher-quality onboard network interfaces and more rigorous testing with enterprise NVMe drives, but the items above are probably the largest. Once you have been around server hardware long enough, the platform advantages become almost insurmountable.

Bottom Line: Get server hardware for dedicated WordPress hosting.

This article in NO way explains why dedicated hardware is beneficial for running WordPress. Yes, WordPress can run on a server, and yes, a server has things, but why are those things helpful for WordPress? The title of this article might as well have been “IPMI, iKVM, CPU, RAM, WordPress”. It strays dangerously close to a content-farm article written for search engine optimisation.

This is far below the standard of most articles on this website. Please don’t keep this up.

Ok, so my original comment was perhaps overly harsh, and made without the full context. According to the forums, though it is not apparent in the article above, the article is meant to be part of a series of posts. Apologies.

Might be handy from an ops perspective, but I agree: I don’t see the connection here.

You’d be better off arguing along the lines of more memory bandwidth (effects on cache in Redis), a dedicated uplink to a second (database) server, cores and the number of ECDHE-ECDSA connections/s (though you persistently refuse to benchmark with an OpenSSL with Intel’s patches), and TPM and security (integrity checking from start to runtime of the OS). Even remote hardware monitoring is standard in mid-sized businesses today, and there is no word about that in the article…

But then, a forum and some WordPress aren’t the most complicated or demanding software.

Sorry, Patrick, but you clearly don’t have the background for this.

WordPress needs a proper Varnish config, that’s all.

@edwald van geffen do people still use Varnish after their lead has been anti HTTPS and HTTP/2? You’re right that it’s all about caching and proxying. I don’t see where I can like your comment, but spot on.

We run a hosting business with tens of thousands of WP sites hosted. We sometimes see colo boxes come in that look like someone’s gaming PC. We now reject them, and maybe we’ll start sending them this link. The one use case where we’ve seen customers want consumer hardware is game servers, where they want higher single-core performance cheaply. It’s also different when you’ve got the provisioning systems of larger hosting companies, where you can manage consumer hardware at scale.

@Mark – sounds like you’re coming from a performance engineering background. Of the people building WP hosting servers, only the top 0.01% care about any of that stuff in this market. All of our customers who would find this useful are the ones that have 1 to 4 servers, and they’ve got no idea what Intel’s OpenSSL patches are. I haven’t seen one that even cares. They’re getting more performance than AWS and saving wads of cash. We’ve had customers doing this and seeing payback in 1-2Q.

Usually when I see Varnish deployed, it’s sandwiched behind nginx or another TLS-enabled web server/load balancer, although one could probably do a lot of the caching directly in nginx.

Otherwise, gotta agree with Sam H: most people don’t care much about performance or even know which knobs to turn. I see it all the time when working with clients; they scale up to the next size AWS node or buy better hardware instead of using proper caching or, sometimes, doing trivial things like creating indices. AWS really only makes sense cost-wise once you also consume their service offerings (ALB, RDS, CloudFront and the like), which saves you a bit on the operations side, especially if you don’t know how to set up that stuff on your own.

@Sam, you’re right regarding ROI and performance. (Though, it’s security engineering for me.) But then, the premise of the article is why and how server-grade hardware excels, yet it says very little about that. It reads more like why someone new to ops finds IPMI nice and how much memory can be stuffed into the machine.

By the way, there is no word about vPro as a cheap alternative to IPMI’s remote access feature, especially for home and office.

For starters, what’s left out is that in the event of uncorrectable ECC errors, the system’s default behaviour will be to shut down or reboot. So you’d want to explain why this (and the resulting data loss) is better than corruption (a flipped character in a forum post or article?), why it is less likely, or how to configure just an alarm or increase resiliency. – But again, consumer hardware would suffice here. Worst case, a checksum mismatch forces someone to change his/her password, or a typo appears. – After all, the most important difference between an i7 and a Xeon E3 is the memory controller.

Bottom line: Server hardware is a nice toy, but you can get overwhelmed by all the knobs to turn and places to monitor. This creates a false impression of reliability and illusion of control.

That’s a novel use. If only I could find the 32GB ones in stock. I know a few of our clients who’d be intrigued.

If we could make a wish list: this + an E5 V4 UP w/ 10×4 bifurcation. That’d be a stellar shared WP platform.

I tried the 16GB modules with a client who’s got a vB site with >3k posts/day. We put the DB here and images on NVMe. DB write latency is really good.