This is one that we have been talking about at STH for many quarters, but it is finally memorialized. As we have been discussing, CXL and Gen-Z are set to co-exist largely in different realms. Starting with the PCIe Gen5 generation, we expect to see CXL enter server nodes as the cache coherent fabric that binds components together. As we discussed even in our Gen-Z in Dell EMC PowerEdge MX and CXL Implications piece, we expect Gen-Z to be the node-to-node interconnect. That is effectively what the CXL and Gen-Z MOU covers. We had the opportunity to talk to the joint representatives from both organizations about this.

CXL and Gen-Z MOU Impact

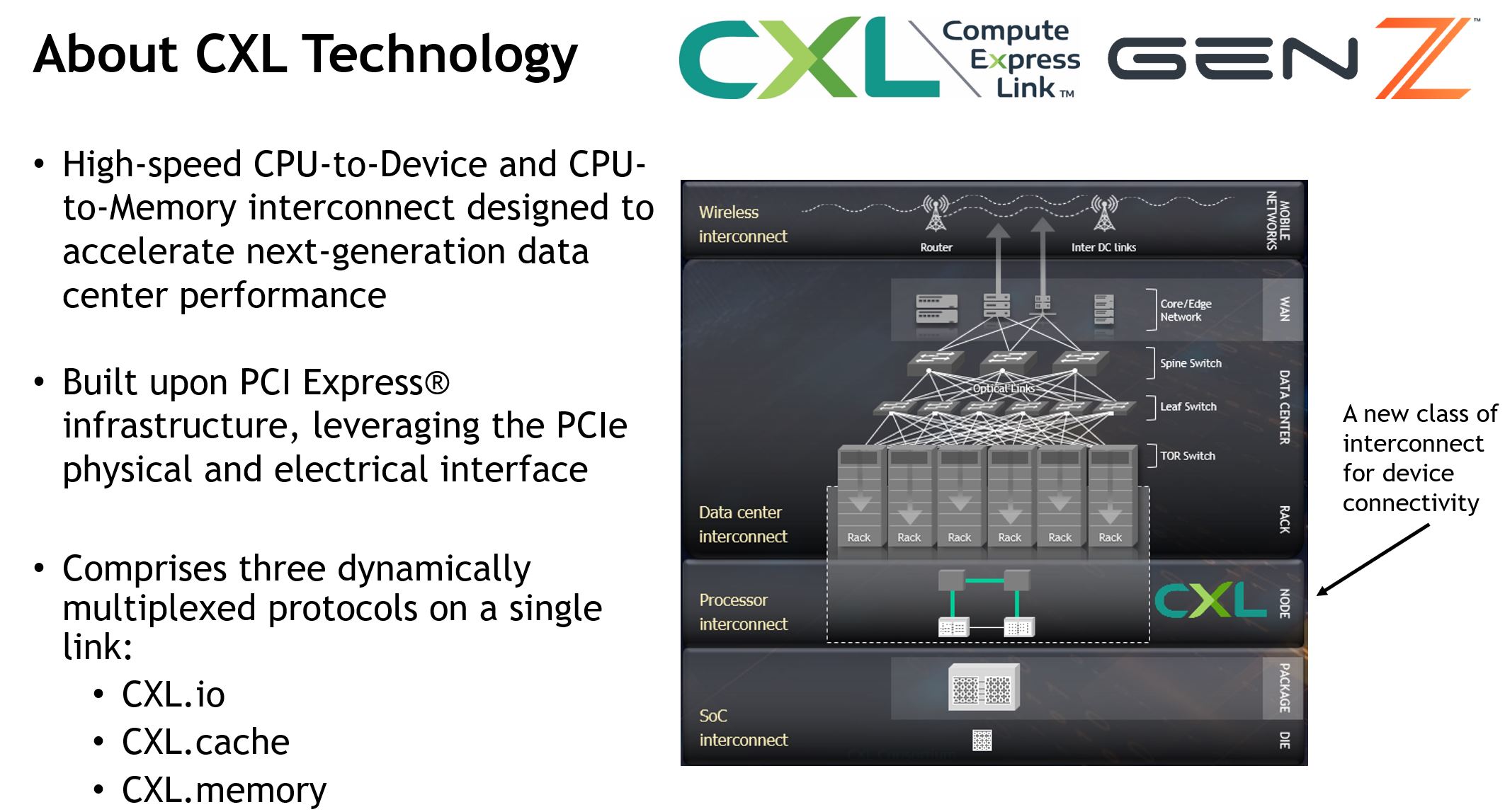

The MOU between CXL and Gen-Z lays out the borders in the industry fairly well. CXL is essentially going to be a layer atop PCIe, starting with Gen5, and will focus more on applications inside the node. If you think about PCIe, there are solutions with external GPUs, accelerators, and storage. Those may also make their way to the CXL ecosystem, but the node level is CXL's general direction. With Intel, AMD, Arm, and IBM (Power) now supporting CXL, this is simply going to be the local fabric that the industry transitions to.

Moving outside of a single node and into a rack or row level architecture is where Gen-Z plays. A big part of the MOU and working group is to enable a CXL to Gen-Z bridge. This is similar to how we have external SAS HBAs that convert PCIe to SAS3 for disk shelves, as well as adapters for interconnects such as InfiniBand and Ethernet. Scaling to further distances and larger topologies is the domain of Gen-Z.

This will profoundly impact the industry. Let us take two cases: a cloud service provider (CSP) and an enterprise VMware data center.

For the CSP, this has enormous implications, and notably, Microsoft is a promoter of both Gen-Z and CXL. If memory can be placed in a Gen-Z or CXL shelf and disaggregated from the compute node, it can be better provisioned to a number of nodes and devices. For example, what if we had persistent memory in a disk shelf and it could be provisioned to an AMD EPYC, Intel Xeon, Arm, or Power node? One could swap one CPU architecture for another and still use a common interconnect. Likewise, one could potentially use that persistent memory for an accelerator or a future device that needs fast write logging. Rumor has it Azure looks at memory as an enormous chunk of its infrastructure spend, and therefore getting higher utilization has a huge ROI.
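To make the utilization argument concrete, here is a minimal back-of-the-envelope sketch in Python. Every node name, capacity, and demand figure below is a hypothetical number chosen for illustration, not data from Microsoft or any vendor. It compares memory utilization when every node is provisioned for the worst case against a setup where each node keeps a small local allotment and overflow comes from a shared shelf.

```python
from dataclasses import dataclass


@dataclass
class Node:
    name: str          # hypothetical node label
    installed_gb: int  # DRAM physically installed in the node (assumed)
    demand_gb: int     # peak memory the workload actually uses (assumed)


def per_node_utilization(nodes):
    """Fraction of installed DRAM in use when memory is locked inside each node."""
    installed = sum(n.installed_gb for n in nodes)
    used = sum(min(n.demand_gb, n.installed_gb) for n in nodes)
    return used / installed


def pooled_capacity_needed(nodes, local_gb):
    """Total GB to buy if each node keeps local_gb and the rest comes from a shelf."""
    shelf_gb = sum(max(0, n.demand_gb - local_gb) for n in nodes)
    return local_gb * len(nodes) + shelf_gb


# Illustrative fleet: four architectures sharing one interconnect.
nodes = [
    Node("epyc-1", 512, 128),
    Node("xeon-1", 512, 480),
    Node("arm-1", 512, 96),
    Node("power-1", 512, 256),
]

fixed_util = per_node_utilization(nodes)
pooled_gb = pooled_capacity_needed(nodes, local_gb=128)
total_demand = sum(n.demand_gb for n in nodes)
pooled_util = total_demand / pooled_gb

print(f"fixed provisioning utilization: {fixed_util:.1%}")
print(f"pooled capacity needed: {pooled_gb} GB, utilization: {pooled_util:.1%}")
```

With these made-up numbers, the fixed fleet buys 2048 GB to serve 960 GB of demand, while the pooled layout needs 992 GB. Real deployments would also have to account for latency, failure domains, and headroom, which this sketch ignores.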

For the corporate data center running VMware, or other virtualization solutions, imagine the possibilities if disk and memory can sit on a coherent interconnect and be accessed by another device. Your RAM often does not need a reboot, so a reliable shelf or two of memory could hold a VM in-memory. It could then be “vMotioned” not by copying the contents of memory over the network, but instead by having a new node start accessing that memory location.
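The difference between copying memory and handing over access can be sketched with a toy model. The `MemoryShelf` class and its methods below are hypothetical names invented for this illustration. They are not a VMware, CXL, or Gen-Z API, and a real fabric would involve coherency, access control, and fencing that are omitted here.

```python
class MemoryShelf:
    """Toy model of a fabric-attached memory shelf (hypothetical, for illustration)."""

    def __init__(self):
        self.regions = {}  # region id -> backing buffer
        self.owner = {}    # region id -> node currently allowed to access it

    def allocate(self, region_id, size, node):
        """Carve out a region on the shelf and assign it to a node."""
        self.regions[region_id] = bytearray(size)
        self.owner[region_id] = node

    def handoff(self, region_id, src, dst):
        """Move access from src to dst without copying the region's contents."""
        if self.owner[region_id] != src:
            raise PermissionError(f"{src} does not own {region_id}")
        self.owner[region_id] = dst
        return self.regions[region_id]  # same buffer object, not a copy


shelf = MemoryShelf()
shelf.allocate("vm1", 1024, "node-a")

vm_memory = shelf.regions["vm1"]
vm_memory[0] = 42  # node-a writes some VM state

# "Migrate" the VM: node-b starts using the same region on the shelf.
migrated = shelf.handoff("vm1", "node-a", "node-b")

print(migrated is vm_memory)  # no bytes were copied
print(shelf.owner["vm1"])
```

In this model, migration cost is independent of VM memory size because only the ownership record changes, which is the property the shelf-based approach is after.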

For edge deployments and even hyper-scale data centers, this is the basic technical foundation that will bring practical disaggregation on a grander scale than we have seen to date.

When Will We See CXL and Gen-Z?

We asked when the first products that support this future architecture will be out. From what we have heard, companies like Intel should be lighting up the first CXL silicon in the next quarter or so. Given silicon cycle times, and several quarters for customer readiness, the end of 2021 is probably the absolute earliest. By 2023, there is a good chance we will see CXL in most shipping processors.

Our sense is that a CXL to Gen-Z bridge chip starts to show up in the market 9-12 months after CXL chips start shipping, but that may be impacted by chip timelines.

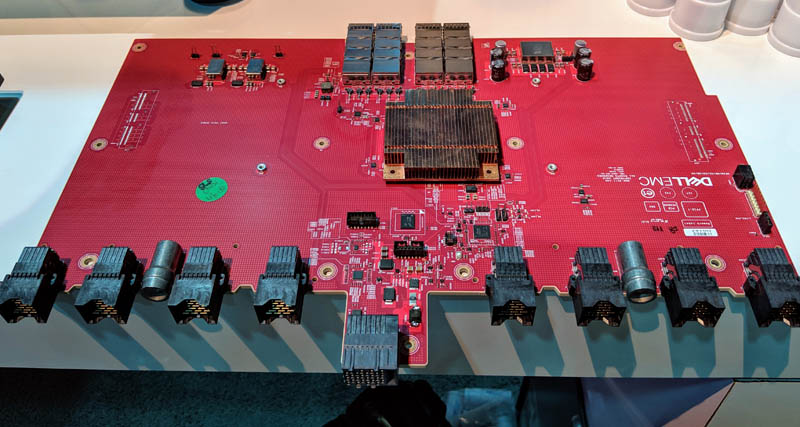

We have already seen solutions such as the Dell EMC Gen-Z fabric module. This is still a prototype but we expect the idea will get refined rapidly by Dell EMC and others as the market moves to this being production viable.

For our readers, this is not necessarily something we are going to say is important in the next 12-18 months for hardware purchases, but it is something to start thinking about and planning for on longer-term roadmaps.

Final Words

In the space of about a year since CXL’s introduction, the industry has largely agreed on where we are going. This is an excellent development in terms of getting clarity, but it also means that we essentially have winners (CXL and Gen-Z) as well as those on the outside.

This sets up a new world as well. We have heard Dell EMC and HPE heavily pushing Gen-Z. As the CPU vendors start to get closer to commercial solutions, we expect that other server vendors will start discussing their solutions.

A transition to the cache coherent interconnect future is not something that will happen overnight. Still, we are already seeing systems designed with these interconnects in mind and expect to see more in the near future. The potential for a truly disaggregated architecture with these new technologies is absolutely tantalizing given the changes it will bring. What is certain is that the server architectures we have today will not be the same as those we see in five years.
