FreeNAS Initial Configuration

This is where things get a bit more interesting. Our main objectives here are to:

- Create a storage pool

- Share that storage pool over the network

- Set up network interfaces to accept direct connections

Let us get started. These steps are fairly easy to follow, and once you go through them, you will understand how a large portion of the storage industry operates.

Creating the FreeNAS Storage Pool

When you have a lot of drives, like in the Jellyfish Fryer, you need to tell the NAS which drives you want to assign to a set. For some perspective, large storage systems will have thousands of drives, so decomposing drives into unique sets is a way to manage very large arrays. In ZFS, which both FreeNAS and the Lumaforge Jellyfish use, these sets are called pools. Think of it like a pool of disks you are going to store data on.

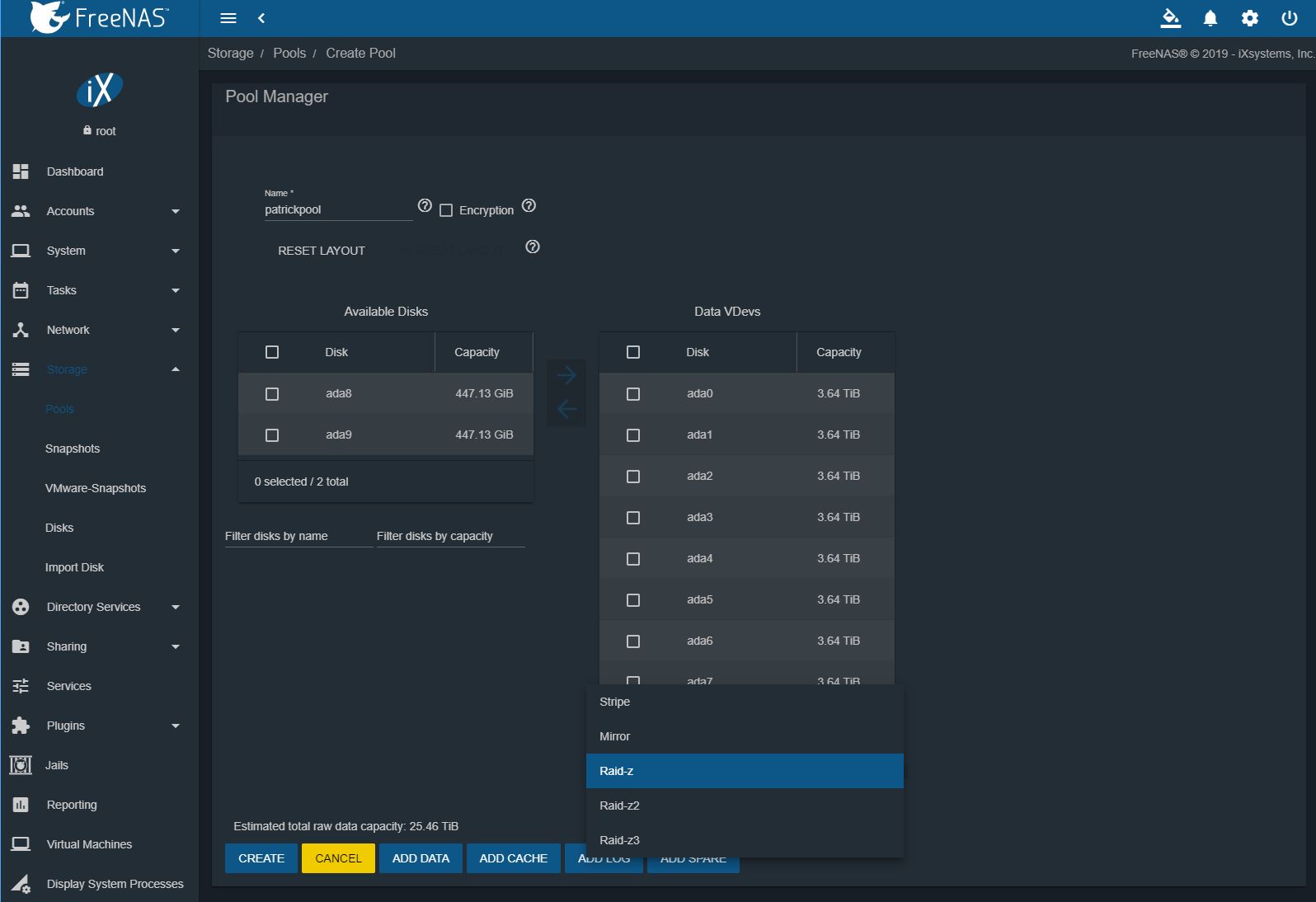

In the FreeNAS UI go to Storage -> Pools -> Create Pool. Then enter a pool name (here “patrickpool” because I lack creativity). Then select drives from “Available Disks” and hit the arrow towards Data VDevs. A “vdev,” or virtual device, is the ZFS term for a group of drives within a pool. You will then want to select a RAID level (for this guide, we are skipping Stripe and Mirror). When selecting a “Raid-z” level, think of the number after the z as how many drives can fail, with plain Raid-z counting as 1. So that gives us the model of:

- Raid-z = z*1 = 1 drive can fail and you will retain data

- Raid-z2 = z*2 = 2 drives can fail and you will retain data

- Raid-z3 = z*3 = 3 drives can fail and you will retain data
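As a rough illustration of the model above, here is a minimal Python sketch. The function name `survives` and the lookup table are made up for this example, and it only reasons about a single Raid-z group, not a pool built from several groups.

```python
# Parity drives per Raid-z level, following the "number after the z" rule.
PARITY_DRIVES = {"raidz": 1, "raidz2": 2, "raidz3": 3}


def survives(level: str, failed_drives: int) -> bool:
    """Return True if one Raid-z group at this level still retains its data."""
    return failed_drives <= PARITY_DRIVES[level]


for level in PARITY_DRIVES:
    # Show the outcome for 0 through 4 failed drives in the same group.
    print(level, [survives(level, n) for n in range(5)])
```

The takeaway is simply that one more failure than the parity count loses the group, which is why a hot spare and backups still matter.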

In a 24-32 drive array, especially using hard drives, you probably will set up something like 3 groups of RAID-z and put them together. For 26 or so SATA SSDs, you can use RAID-z2 and still push 40GbE speeds. This keeps complexity low and you should be able to have multiple editors online at the same time with this. Again, this is a benefit of using SSDs.
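To see why a wide SSD array has headroom against a 40GbE link, here is a back-of-the-envelope sketch in Python. The per-drive figure of 0.5 GB/s is an assumed round number for a SATA SSD, not a measurement from this build, and real ZFS throughput does not scale perfectly with drive count, so treat the result as an upper bound.

```python
LINK_GBIT_PER_S = 40            # 40GbE line rate
ASSUMED_SSD_GBYTE_PER_S = 0.5   # assumption: rough sequential speed of one SATA SSD


def link_gbytes_per_s(gbit_per_s: float) -> float:
    """Convert a link speed in gigabits per second to gigabytes per second."""
    return gbit_per_s / 8


def ideal_array_gbytes_per_s(total_drives: int, parity_drives: int,
                             per_drive: float) -> float:
    """Idealized streaming rate: data drives times per-drive speed."""
    return (total_drives - parity_drives) * per_drive


link = link_gbytes_per_s(LINK_GBIT_PER_S)
array = ideal_array_gbytes_per_s(26, 2, ASSUMED_SSD_GBYTE_PER_S)
print(f"link: {link:.1f} GB/s, idealized array: {array:.1f} GB/s")
```

Even with generous losses, the drives are unlikely to be the first bottleneck on a single 40GbE link under these assumptions.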

You can see we have cache SSDs here, but with ZFS, usually we do not need to cache SATA SSDs so we are going to skip that. In the Jellyfish Fryer video, we had a 26x 3.84TB Raid-z2 array. We had a 27th drive that we used as a hot spare.
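For a sense of scale, the data capacity of that 26-drive Raid-z2 array can be estimated with a few lines of Python. This is a simplified sketch: it ignores ZFS metadata, padding, and reserved space, so the usable figure FreeNAS reports will be lower. The function name is made up for this example.

```python
def raidz_data_capacity_tb(total_drives: int, parity_drives: int,
                           drive_tb: float) -> float:
    """Capacity of the non-parity drives in TB, before any ZFS overhead."""
    return (total_drives - parity_drives) * drive_tb


capacity_tb = raidz_data_capacity_tb(26, 2, 3.84)
# Drive vendors quote decimal TB (10**12 bytes); many tools show binary TiB (2**40 bytes).
capacity_tib = capacity_tb * 1e12 / 2**40
print(f"{capacity_tb:.2f} TB before overhead, about {capacity_tib:.1f} TiB")
```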

You can add a hot spare by clicking “ADD SPARE” on this page and selecting the extra drive. The hot spare greatly increases array reliability, especially with SSDs and Raid-z2. If a drive fails, this spare drive will quickly start taking over for the failed drive without manual intervention. High-end arrays usually have hot spares, which tends to turn a disk failure into a non-event. That is something you want if your livelihood depends on it.
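For readers curious what this layout looks like underneath, the sketch below uses Python to assemble the roughly equivalent `zpool create` command line without running it. The device names `da0` through `da26` are hypothetical placeholders, and the FreeNAS web UI handles disk preparation and its own configuration tracking, so this is only meant to illustrate the pool structure, not to replace the steps above.

```python
import shlex


def zpool_create_args(pool, level, data_disks, spares=()):
    """Build (but do not run) a zpool create command for one Raid-z group."""
    args = ["zpool", "create", pool, level, *data_disks]
    if spares:
        # Hot spares are listed after the "spare" keyword.
        args += ["spare", *spares]
    return args


# Hypothetical device names: 26 data drives plus a 27th drive as the hot spare.
data_disks = [f"da{i}" for i in range(26)]
args = zpool_create_args("patrickpool", "raidz2", data_disks, ["da26"])
print(shlex.join(args))
```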

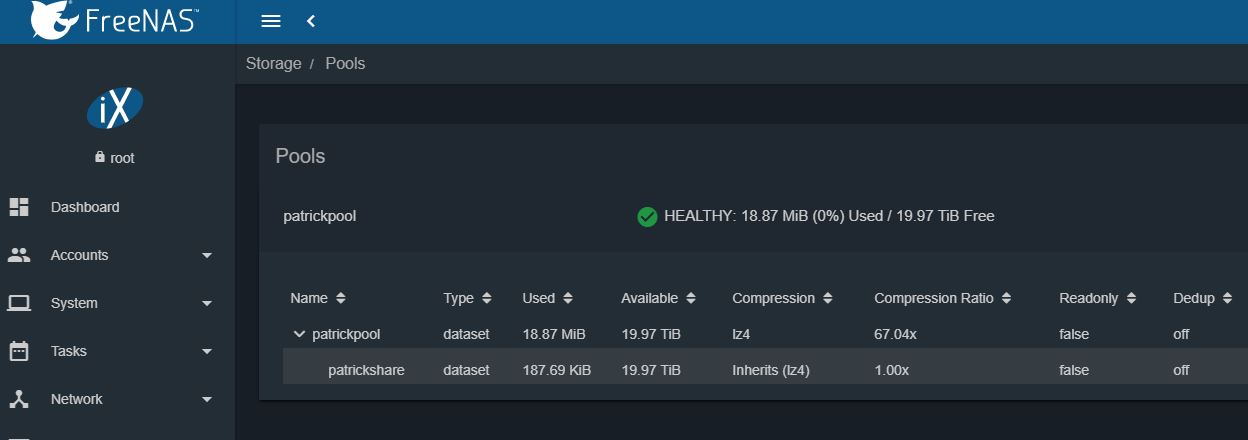

Once the pool is created, there are still more steps to do. Being frank, these are steps we do not have to do with some of the consumer NAS options out there. The iXsystems/ FreeNAS team is going to have Wizards to make this easier in their upcoming update. For now, there are a few more steps.

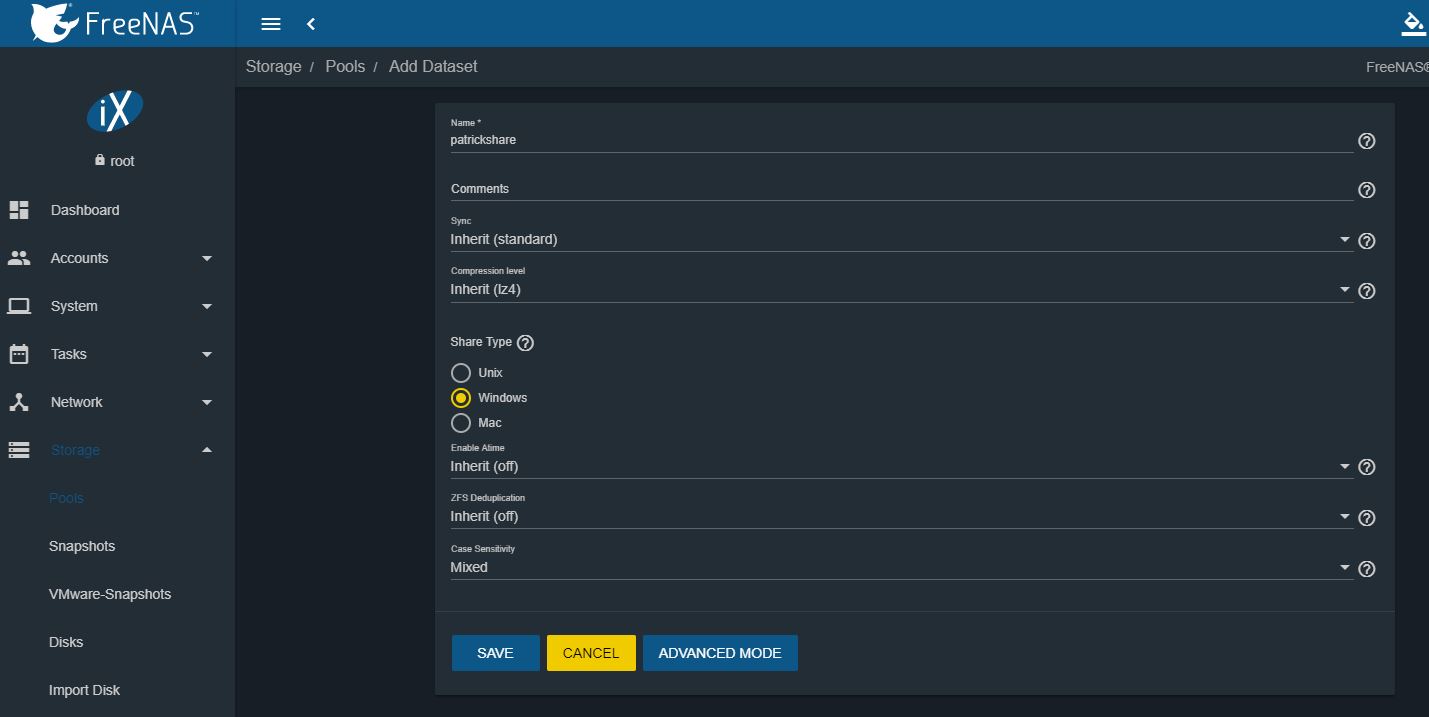

Next, we need to make a dataset. Think of the dataset as a folder within the pool. Here, we are going to set up a Windows dataset. You will want to go to Storage -> Pools -> Add Dataset. This option is a bit hard to find, but in our example, it is in the “patrickpool” dropdown context menu on the right.

Here select the “Windows” type then use Case Sensitivity “Insensitive” (it is Mixed in the screenshot.) Once you are done, you can click SAVE and the dataset will be ready to go.

The hardcore ZFS folks will have a long explanation of why to do this. Here is the short conceptual model. You are creating a specific shared “folder” that can have its own settings, such as snapshots. That helps with organization, and you can also manage that folder/dataset separately, assigning permissions or backups as needed.
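To make the “folder with its own settings” idea concrete, this Python sketch assembles the underlying `zfs` commands for creating a case-insensitive dataset and snapshotting it on its own, again without running anything. The snapshot name `before-edit` is a made-up example, and the “Windows” share type in the web UI may set additional properties that are not shown here.

```python
import shlex


def zfs_create_args(pool, dataset, case_sensitivity="insensitive"):
    """Build a zfs create command that sets case sensitivity at creation time."""
    allowed = {"sensitive", "insensitive", "mixed"}
    if case_sensitivity not in allowed:
        raise ValueError(f"case_sensitivity must be one of {sorted(allowed)}")
    return ["zfs", "create", "-o", f"casesensitivity={case_sensitivity}",
            f"{pool}/{dataset}"]


def zfs_snapshot_args(pool, dataset, snapshot_name):
    """Build a zfs snapshot command that targets only this dataset."""
    return ["zfs", "snapshot", f"{pool}/{dataset}@{snapshot_name}"]


create_cmd = zfs_create_args("patrickpool", "patrickshare")
snapshot_cmd = zfs_snapshot_args("patrickpool", "patrickshare", "before-edit")
print(shlex.join(create_cmd))
print(shlex.join(snapshot_cmd))
```

Case sensitivity is passed at creation because it is one of the ZFS properties that cannot be changed afterwards, which is why it is worth getting right in the dialog above.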

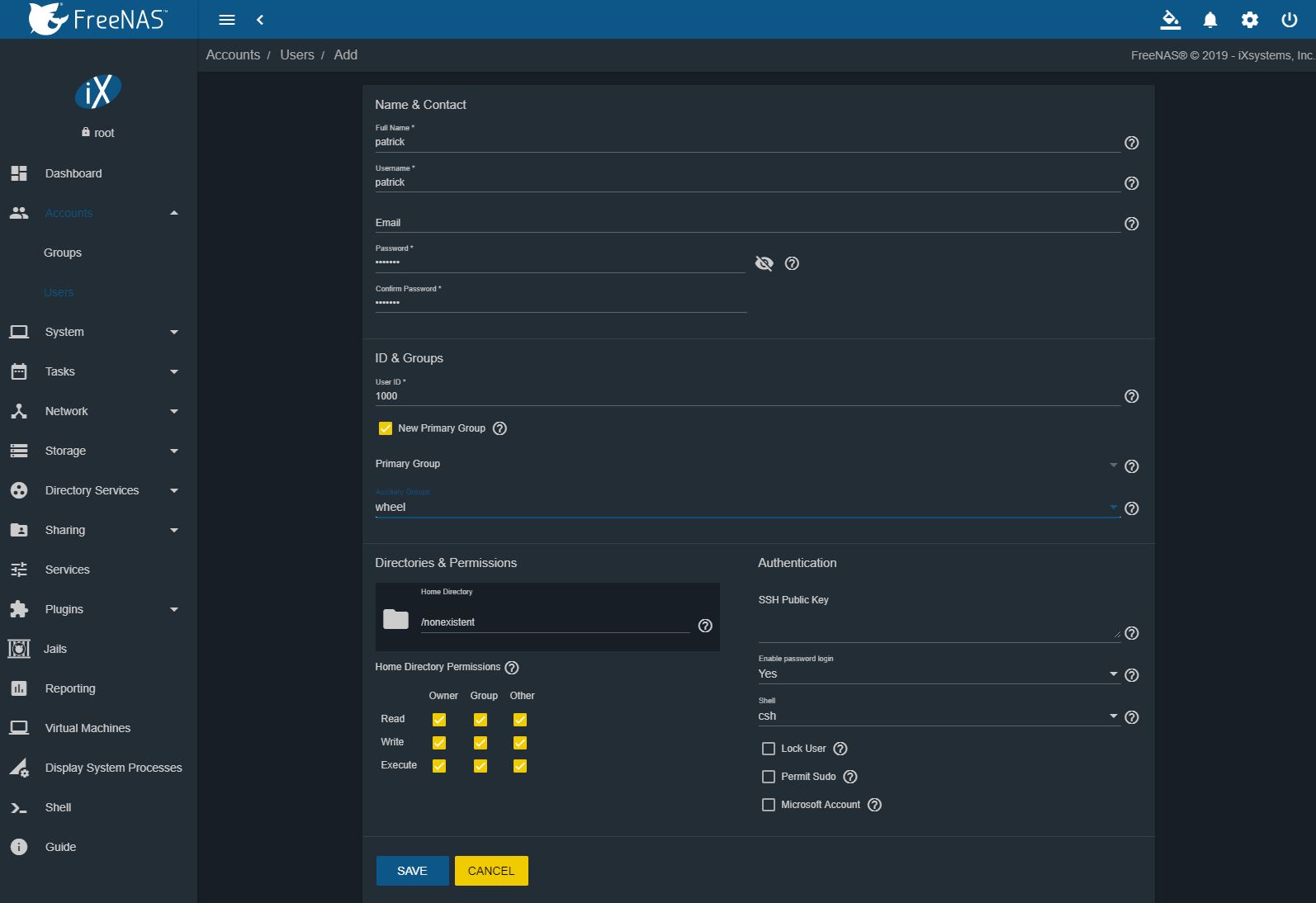

Add User(s)

At this point, you can just use the “root” login and access data, but this is a bad idea. You should make a new user under Accounts-> Users. We are going to let you read the documentation on all of those boxes.

Here we have a user I am making called “patrick”, again due to a lack of creativity.

Creating the Share

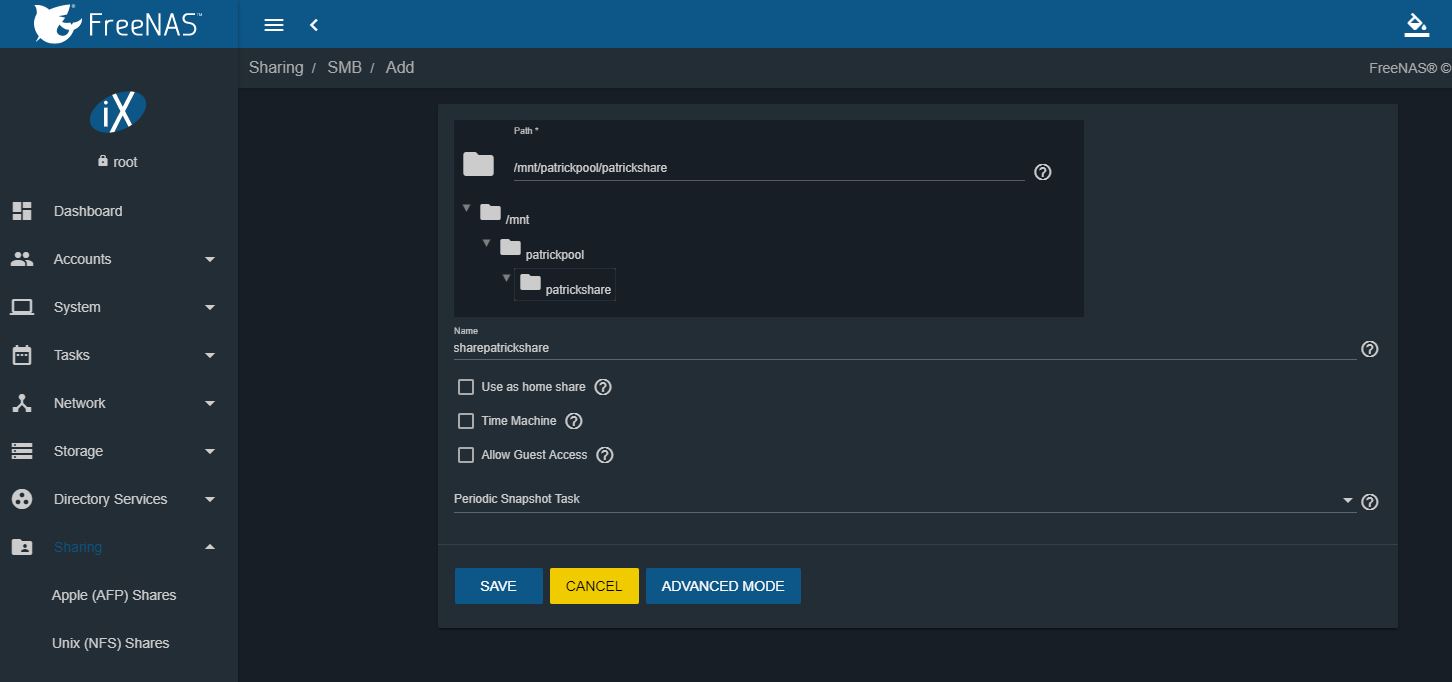

Next, you want to create a Windows / SMB / Samba share. Go to Sharing -> Windows (SMB) Shares -> Add. Then click through the folders to the dataset you want to share, “patrickshare” for us.

As you may have gleaned from the naming conventions we are using, this all seems very repetitive. Needing this many steps is frankly one of the biggest complaints we hear about FreeNAS. On consumer NAS systems, this is easy. FreeNAS is a tinkerer’s solution that grew up focusing on exposing the power of all of this complexity.

This workflow we are using is perhaps the most common workflow for FreeNAS, and yet it takes an enormous number of screens and a decent amount of knowledge. At this point, our guide is 2000 words long. In the future, FreeNAS 11.3 wizards are supposed to finally make this easier.

Next, we are going to cover how to connect to the Jellyfish Fryer.

Is FreeNAS any different from a zfs on linux setup?

>Is FreeNAS any different from a zfs on linux setup?

A little more appliancy. Personally ZFSOL has legs. Have a look at what Jim Salter is doing. Next level ZFS.

Tony, they use the same OpenZFS base. At an OS-level, FreeNAS is built on FreeBSD which is different than Linux (some good, some bad.) FreeNAS also has a Web GUI and an active team developing it.

With that said, I personally use a lot of ZoL. More recently our lab has been using a lot of Ceph storage which I am contemplating using at home as well.

Speaking of Ceph, I would really like it if iX implemented Ceph into FreeNAS/TrueNAS, that way FreeNAS could act as a controller for Ceph and at the same time act as a gateway for Samba.

45drives uses Ceph a lot, couldn’t they develop a Ceph plugin for FreeNAS, or work together with iX to implement it?

Currently there are very few options to scale a NAS other than UP, would be nice to have an option with horizontal scaling too. Now, Ceph isn’t really meant for NASes but hey, at least it would be an option.

Will probably never happen but there is a huge gap in the market between these single enclosure NAS systems and for example a multi-deployed Isilon “cluster”

But what about SSD TRIM under ZFS?

It’s worth pointing out that the Stornado can also come with FreeNAS pre-installed. It also comes with support and a warranty packaged into the price.

Interesting video showing the two sides (DIY vs paying an OEM to do it all for you). The SSD storage in the DIY solution sure seems like a no brainer decision though, especially compared to HDDs in the jelly. But MKBHD gave good argument for those who choose the jelly.

Also was nice seeing Patrick on LTT, quite a different audience for STH.

Krzysiek,

Trim is supported in ZFS 0.8.1.

If only SAMBA supported SMBDIRECT…

The performance of SMBdirect with 2 direct connections over 40Gb Mellanox CX3 is incredible.

Benchmarking a ramdisk exposed as an SMB share, nearly 6 GB/s on an E5 2640 v0 server, IOPS through the roof…

So putting a large Optane or NVMe cache of any kind wouldn’t help with performance?

Is it possible to set this up on a mac? How different is the process?

Jellyfish fryer…

somehow, this brought to my memory that Video Toaster thing for the good old Commodore Amiga

LOVE this series! I am in the middle of planning a DIY all-flash server for video editing, VFX, and color-grading myself, so am totally the target audience for this. This series couldn’t have come at a better time for me, personally. A few questions I hope to get some input on:

1. I was planning to go with 8 x Micron 9300 Max 12.8TB U.2 NVMe SSDs attached to 2 x HighPoint SSD7120 X16 PCIe HBAs. These are capable of 3.5GBps individually, so massive performance. I would probably do Z+1 and have a good backup strategy. Any comments on this as a good idea / pitfalls / tweaks?

2. Would there be a good Windows Server-based solution to doing this sort of build, or is there no equivalent/good file system + software RAID solution there compared to ZFS? It’s just that I’m much more familiar with Windows as a platform and opens up some additional services / features to me on the host (i.e. run some transcodes on it, etc.).

3. Is there any way to make a Thunderbolt port behave as a direct-connect network so as to negate the need for a Thunderbolt 10G Ethernet adapter if wanting to connect a laptop?

4. Anyone know a good way to determine what level of CPU is needed to run these high-performance NAS systems? I mean, is the CPU a bottleneck so it pays to have dual high-spec Xeons, or when do we hit the point of diminishing returns? I want max performance, and haven’t figured out a good way to avoid over/under speccing the CPU(s).

How can the FreeNAS Centurion be cheaper than the Stornado?

It has 20TB less and 6 cores less but the FreeNAS has more RAM, more 10GbE ports and a warranty.

This might be the most commented STH article of all time on here. Looks like a bunch of people just found out what we’ve known – Patrick’s the Server Jesus these days. It was great seeing him on LTT. I hope they’re planning to do more.

G the Centurion uses a standard off the shelf server so that’s cheaper. They’re probably getting a better deal because all the storage is included while Linus and Patrick used retail pricing. You can get lots of 3.84tb drives under $500 now. They didn’t cover Centurion noise but if it’s saving you $15k then that buys a lot of sound deadening. Maybe they’ve tuned fans like on the mini xl+?

This sentence from the article seems…odd. (I read it five times, and am still unsure of the original intent, even if a single word was perhaps skipped, etc…)

“Many of the frustrations we hear from STH readers around FreeNAS the team is working on. ”

Seems like it needs an extra word or phrase to clarify meaning…

I’ve been hearing that using USB flash drives has fallen from favor, and that most flash drives will fail within a month or three…

Yet, the installer still seems to condone/recommend it…or does it?

Is there an official verdict from the FreeNAS/TrueNAS folks?

>This sentence from the article seems…odd. (I read it five times, and am still unsure of the original intent, even if a single word was perhaps skipped, etc…)

>“Many of the frustrations we hear from STH readers around FreeNAS the team is working on. ”

>Seems like it needs an extra word or phrase to clarify meaning…

Insert comma after FreeNAS. ‘Tis a poor sentence though.

Good to see Patrick on LTT :)

I think I’ve gotten more anxiety than fun from watching Linus play like a child with networking. He really needs to read STH more often and learn a thing or two.

Glad you could represent Patrick! Also glad you could do a ‘back-to-roots’ STH post like this. Love me some tutorials and thrifty data management advice.

Ouahh! Great idea this Jelly fryer!!

Funny, I had an online demo session with the Lumaforge team 3 or 4 years ago… And at the end of the demo I was like…. wait…. but hey!!! That’s FreeNAS behind your system and you are not even mentioning them. I didn’t find that very “Open source friendly”… I get that a turnkey system with warranty and after sales services comes at a price… but I don’t want to overpay for the service.

So I decided I would build my own… although I never really found enough time to do as I also wanted to go down the 100% refurbished lane. More eco friendly ;)

Anyway, I am now ready to proceed and this tutorial from both STH and LTT couldn’t be better.

I have ONE question if I may. The biggest hurdle for me is to find the right chassis with Direct Attached Backplanes. But do I really need a Direct Attached Backplane if I’m not using SSDs?

I understand Supermicro offers that option with TQ backplanes.

I will be using 2 x 10 refurbished SAS 3 Drives ( HGST Ultrastar 7K6000 HUS726040AL4210 4TB ) in a Raid z2 in a 24 bay SuperChassis 846TQ-R900B.

HBA: LSI 3008

NIC: Mellanox ConnectX-3 VPI Single FDR

I will also be using 256 GB of RAM and 4TB of PCIe flash storage for L2ARC.

Any help / tip regarding backplanes / HBA and NIC would be awesome.

Direct Attached or Expander? This really changes the number of HBA ports needed. And the overall price. :)

Cheers and thanks for any help. Maybe I can hire your help? I’m not sure how STH actually works. :)

Leigh (@ Ring Ring Ring production and post production shop, Paris, France)

Generally with FreeNAS we prefer direct attach. At the same time, and especially if you are using SAS drives, an expander will likely be fine. Much of the early issues with SAS expanders and SATA drives were in the SAS 1 era.

Thank you very much @Patrick Kennedy for your help.

I think I will go forward with the TQ backplane (as I can’t find a refurbished A). It’ll be future proof if I wish to upgrade the NAS to ALL SSDs. :)

Cheers

Leigh

Hello @Patrick

I have a new puzzling question…

What kind of 40GbE NIC should (could) I use on a Mac Pro Classic (or Hackintosh)? If I have an Intel NIC on the NAS server, can I have ATTO on the client end?

Thanks for any of your wisdom.

Leigh

Hi Leigh – 40GbE is standardized so you can use NICs from different vendors on each end. What I do not know offhand is which 40GbE cards work with OS X.

Love the idea but the implementation kind of sucks. This could be a lot more compact and not cost 12k dollars.