I do not often write purely editorial pieces, but with the latest colo build-out I have become more than enamored with the Dell C6100’s compute model. The same architecture is found in products like the Supermicro FatTwin and 2U Twin2 server lines. The experience has made me an advocate of that type of server architecture and of the need for a common compute node slot. This is doubly true now that more devices are being integrated in a System on Chip (SoC) style model. Older architectures needed common footprints to accommodate many expansion slots, a north bridge, a south bridge and other components. Today that is becoming more and more irrelevant. What we need is a common, small form factor design for servers. For those wondering, mITX is too big.

The Dell C6100 has four hot-swap nodes and was, for a while, Dell’s best-selling cloud server series. The key to the Dell C6100 is that it packs four dual-processor nodes into a 2U chassis. The motherboards have their power and SATA/SAS ports routed to a slot-type connector, which makes for easy hot-swap insertion. The format is a common compute node size for twin architectures. This is something we should see in most if not all compute nodes today so that they can be quickly swapped.

This twin form factor is a common compute node size but still allows for only two systems per 1U. That said, it still provides a lot of power in a relatively compact platform. The compute nodes are also easy to swap out since they use backplane connections for power and drive connectivity.

In the world of Atom and ARM servers, this is an important development. Today, Atom mITX motherboards cost on the order of $200 each when loaded with multiple NICs and IPMI. My sense is that this will fall drastically in the next 24 months. SoC designs are going to force this transition. Simply put, basic nodes no longer need as many components. In my non-existent spare time I have been putting together a proof of concept around an Intel Atom and ARM (Raspberry Pi) cluster in a small box (the AtomPi project):

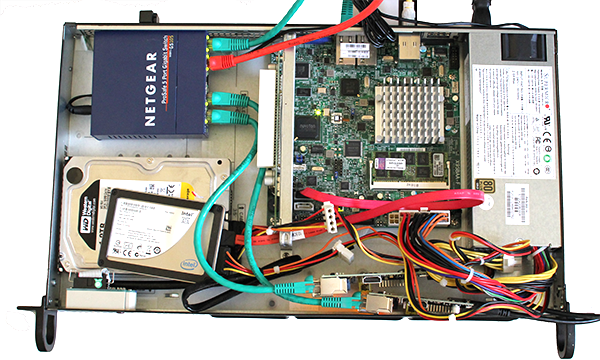

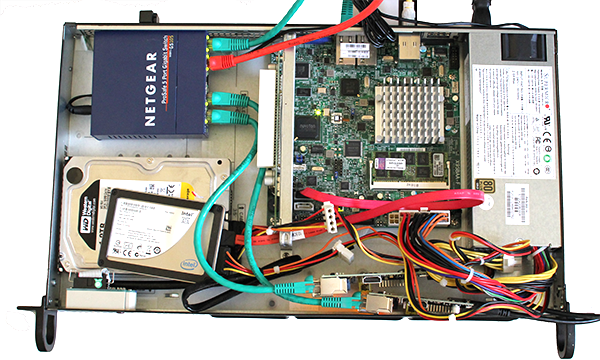

The AtomPi cluster proof of concept (pictured above as it was being assembled) included a Netgear switch, an SSD, a large 3.5″ storage drive, a Centerton Atom based Supermicro X9SBAA-F and two Raspberry Pi nodes, all in a short-depth 1U and under 30W. One thing this proof of concept has taught me is that mini ITX is no longer a small enough form factor (although it certainly has merit). If I look at Intel’s Next Unit of Computing (NUC), I see a great place to start for scaling down even the heftier Intel architectures to smaller form factors. Another observation from the AtomPi PoC cluster is that Raspberry Pi sized cards need a PCB backplane-based power and network delivery system.

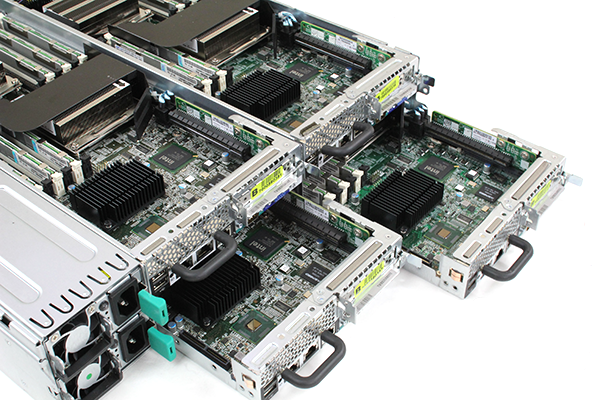

Granted, the ARM CPU on a Raspberry Pi is completely outclassed by the Intel Atom Centerton, but as we approach sub-6W TDPs, smaller heatsinks and lower power density mean that 1U is no longer a fine enough unit of measure for density. Adding or mixing Intel NUC and Raspberry Pi sized nodes in a normal-depth 1U chassis, even while staying under 1A @ 120V, is not an issue in terms of density. Further, with SSD capacities rising, and given their performance and power consumption characteristics, one can even build NAS/SAN storage into these clusters that rivals the performance of many rack units from only three years ago. Vendors are already heading down this path using proprietary formats:

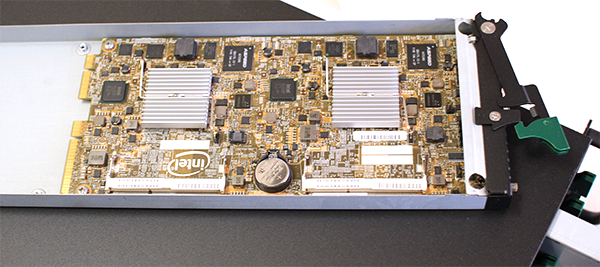

The microcloud above houses multiple Intel Atom Centerton nodes but uses a proprietary motherboard format rather than a common compute node format. Below is a dual Atom Centerton system board from one of those systems. It is much larger than a Raspberry Pi and is likewise a proprietary design.

Of course, there is going to be a place for the “big iron” Intel Xeon E5 series and AMD Opteron 6000 series CPUs, but with Haswell, there is a good chance NUC form factors can be achieved with the new Intel Xeon E3-1200 V3 CPUs. These new lower power processors, as well as the incoming rush of ARM based server CPUs, are going to reshape the datacenter and require something akin to a system on an index card or credit card.

While density per rack may be an important metric today, we are quickly moving toward a figure of density per 1U. If form factors allowed it, we are at the point where applications such as low-cost web hosting (dedicated servers the size of an Amazon EC2 micro instance, for example) could be moved to this type of platform. It is time for the industry to adopt smaller common compute node system board sizes.
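As a rough illustration of the per-1U argument, the power-budget arithmetic behind the "under 1A @ 120V" remark can be sketched as follows. The 6W per-node figure is taken from the sub-6W TDP mentioned above and is only a stand-in for real wall draw; the overhead value for a switch and storage is a made-up number for illustration, and the function name is hypothetical.

```python
def max_nodes(amps: float, volts: float, node_watts: float,
              overhead_watts: float = 0.0) -> int:
    """Estimate how many nodes fit inside a power budget.

    Assumes node_watts approximates real per-node draw (TDP is only a
    proxy) and ignores power supply conversion losses.
    """
    if node_watts <= 0:
        raise ValueError("node_watts must be positive")
    budget_watts = amps * volts           # 1A @ 120V -> 120W
    usable_watts = budget_watts - overhead_watts
    if usable_watts <= 0:
        return 0
    return int(usable_watts // node_watts)


if __name__ == "__main__":
    # Nodes only, 6W each, inside 1A @ 120V
    print(max_nodes(1.0, 120.0, 6.0))
    # Same budget with a hypothetical 20W set aside for a switch and drives
    print(max_nodes(1.0, 120.0, 6.0, overhead_watts=20.0))
```

Under these assumptions the budget covers 20 nodes with no overhead and 16 with the hypothetical 20W reserved, which is far more than two systems per 1U.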

Great idea. Maybe the Xi3 mobo size is what is needed.

Fantastic idea. And re ITX being too big, I think it’s more the wrong shape than too large. If there was a board with the same surface area, but twice as deep and half the height, it would work better in a blade server type scenario. I.e., a motherboard 8.5cm (~3.4″) high and 34cm (~13.4″) deep.

A great article, though looking at those bare pi boards against the chassis makes me feel nervous :-)

Great article. I am looking forward to the ARM platform. I am actually screaming for a Mini-ITX ARM board with 6x SATA, 2x good quality NICs, and room for a lot of RAM; it would be very useful as a NAS. Of course it won’t be as high performance as an Atom, but it would use less power.

I currently have a Supermicro X7SPA-H and it draws <30 watts.

But why are blade servers important?

I hope that someone can answer that question. It seems that either the software isn’t scalable enough to cope with several users on one processor, or it’s a useful technology for getting more money from the customers…

I think that I need to read more on this topic… :)