Compute Performance and Power Baselines

One of the biggest areas where manufacturers can differentiate their 2U4N offerings is cooling capacity. As modern processors heat up, they lower clock speeds, which decreases performance. Fans spin faster to cool them, which increases power consumption and affects power supply efficiency.

STH goes to extraordinary lengths to test 2U4N servers in a real-world scenario. You can see our methodology here: How We Test 2U 4-Node System Power Consumption.

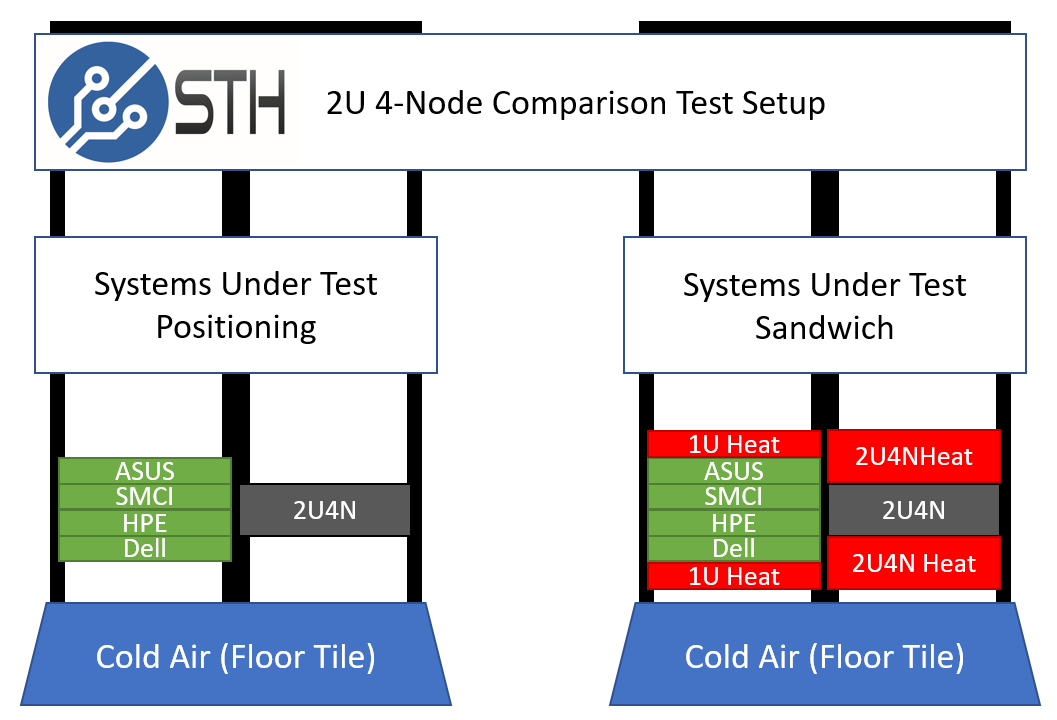

Since this was our third AMD EPYC test, we used four 1U servers from different vendors to compare power consumption and performance. The STH “sandwich” ensures that each system is heated on the top and bottom, as it would be in a dense deployment.

This type of configuration has an enormous impact on some systems. All 2U4N systems must be tested in a similar manner or else performance and power consumption results are borderline useless.

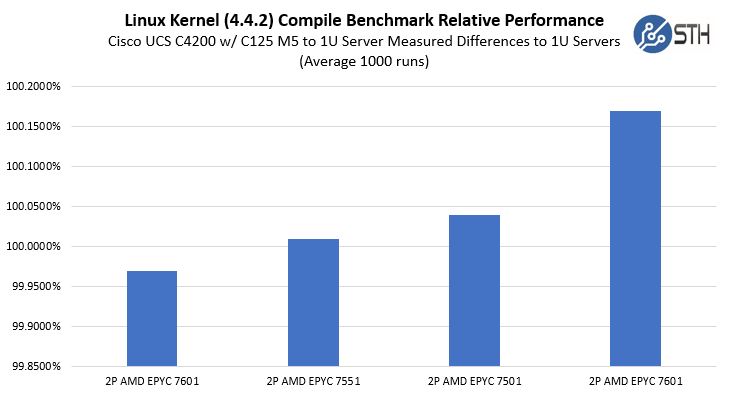

Compute Performance to Baseline

We loaded the Cisco UCS C4200 nodes with 256 cores and 512 threads worth of AMD EPYC CPUs. Each node also had a 10GbE OCP NIC and a 100GbE PCIe x16 NIC. We then ran one of our favorite workloads on all four nodes simultaneously for 1400 runs. We threw out the first 100 runs' worth of data and considered the 101st run to be sufficiently heat soaked. The other runs are used to keep the machine warm until all systems have completed their runs. We also used the same CPUs in both sets of test systems to remove silicon differences from the comparison.

Note: The Y-axis does not start at 0. If we used 0-101%, you would not be able to see the deltas as they are too small.
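The warm-up handling described above can be sketched in a few lines of Python. This is only an illustration, not STH's actual tooling: the function names are hypothetical, the run times are made up, and it assumes the comparison uses the mean of all runs after the 100-run warm-up window, which the text does not spell out.

```python
from statistics import mean

WARMUP_RUNS = 100  # from the text: the first 100 runs are thrown out


def heat_soaked(run_times, warmup=WARMUP_RUNS):
    """Return only the runs recorded after the warm-up window."""
    if len(run_times) <= warmup:
        raise ValueError("need more runs than the warm-up window")
    return run_times[warmup:]


def relative_performance(system_times, baseline_times, warmup=WARMUP_RUNS):
    """Performance as a percentage of baseline (assumption: lower time is better)."""
    system_mean = mean(heat_soaked(system_times, warmup))
    baseline_mean = mean(heat_soaked(baseline_times, warmup))
    return 100.0 * baseline_mean / system_mean


if __name__ == "__main__":
    # Hypothetical run times in seconds, for illustration only.
    baseline = [11.0] * 100 + [10.0] * 50
    system = [12.0] * 100 + [10.0] * 50
    print(f"{relative_performance(system, baseline):.1f}% of baseline")
```

Dropping the early runs means a system that starts cool and later throttles is judged on its steady-state behavior rather than on its first few minutes.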

The Cisco UCS C4200 chassis did a great job cooling the C125 M5 compute nodes, even with higher-wattage AMD EPYC 7001 32-core parts. Those 32-core CPUs can be difficult for some 2U4N designs to cool. If you want to see individual AMD EPYC CPU reviews, STH has benchmarked almost every model including the AMD EPYC 7501, AMD EPYC 7551, and AMD EPYC 7601 models noted above.

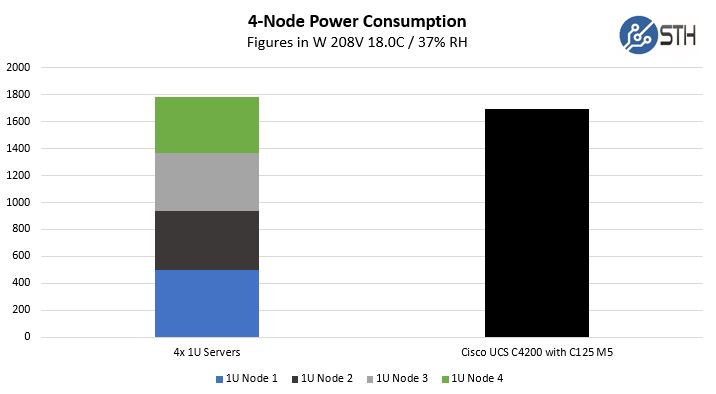

Cisco UCS C4200 Power Consumption to Baseline

One of the other, sometimes overlooked, benefits of the 2U4N form factor is power consumption savings. We ran our standard STH 80% CPU utilization workload, a common figure for a well-utilized virtualization server, in the sandwich on both the 1U servers and the Cisco UCS C4200.

Here we saw almost a 5% decrease in power consumption over the four 1U servers once we equipped them with two NVMe SSDs and four SATA SSDs. That is a big deal. Saving 5% is hard to do within a generation. Here, running efficient power supplies in their peak efficiency range along with excellent design means that operating costs are lower.

A hyperscale company we work with uses a figure of $6 per watt saved over the course of a server’s lifetime. This test was done under load, not at idle, but we can see a $250-550 TCO savings compared with four 1U servers just from the reduced power consumption.
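To make the arithmetic behind that range concrete, here is a small Python sketch. The $6 per watt figure and the $250-550 range come from the text; the measured wattage is not stated, so the watt values below are simply back-calculated from the quoted range, and the function names are hypothetical.

```python
DOLLARS_PER_WATT = 6.0  # lifetime value of one watt saved, per the text


def lifetime_savings(watts_saved, dollars_per_watt=DOLLARS_PER_WATT):
    """Lifetime savings in dollars for a given steady-state power reduction."""
    return watts_saved * dollars_per_watt


def implied_watts(savings_dollars, dollars_per_watt=DOLLARS_PER_WATT):
    """Power reduction in watts implied by a given lifetime savings figure."""
    return savings_dollars / dollars_per_watt


if __name__ == "__main__":
    for dollars in (250, 550):
        print(f"${dollars} implies about {implied_watts(dollars):.0f} W saved")
```

At $6 per watt, the quoted range corresponds to a reduction of very roughly 40 to 90 watts across the four nodes.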

Putting this in context, the Cisco UCS C4200 with C125 M5 compute nodes delivers virtually identical performance to four 1U servers while reducing rack space by 50%, power consumption by 5%, the number of power supplies by 75%, and the number of base power / 1GbE / management cables by 56% (7 vs. 16). That is exactly why 2U4N systems are so popular.
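The percentages above follow from simple before-and-after counts, sketched here in Python. The cable counts (16 and 7) are from the text and the rack units follow from four 1U servers versus one 2U chassis. The power supply counts (8 and 2) are an assumption: two redundant supplies per 1U server and two shared by the chassis, which matches the quoted 75% but is not stated explicitly.

```python
def percent_reduction(before, after):
    """Percentage reduction going from `before` to `after`."""
    if before <= 0:
        raise ValueError("before must be positive")
    return 100.0 * (before - after) / before


if __name__ == "__main__":
    comparisons = {
        "rack units": (4, 2),      # four 1U servers vs. one 2U chassis
        "power supplies": (8, 2),  # assumed counts, see the note above
        "base cables": (16, 7),    # cable counts quoted in the text
    }
    for name, (before, after) in comparisons.items():
        print(f"{name}: {percent_reduction(before, after):.2f}% fewer")
```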

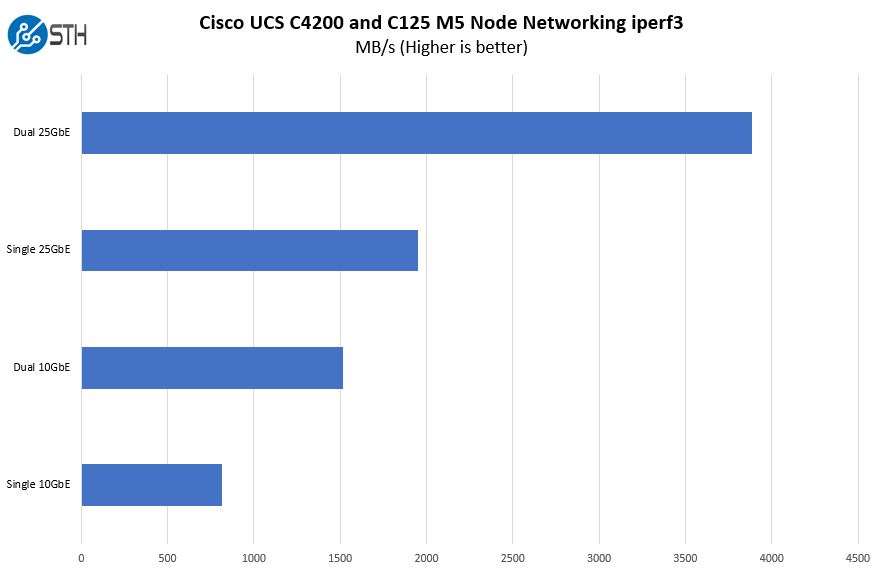

Cisco UCS C4200 Network Performance

We simply added the Cisco UCS C4200 to our 25GbE and 10GbE networks to show performance.

Cisco has higher-speed networking options and the VIC as a headline networking feature. We had 25GbE OCP NICs so we simply wanted to show a key message: get 25GbE with this machine. These days, 25GbE costs have come down to where the massive performance improvement comes at a minimal cost. Further, using dual 25GbE is a better use of PCIe expansion in a dense chassis like the Cisco UCS C4200 than 10GbE networking.

Next, we will have the STH Server Spider for the system followed by our final words.

I’m sure you’re right. Cisco has been doing this so long they’ve got to have a dedicated usability team.

I got one of these to test about 4 months ago and loved it. Used Cisco Intersight for deployment. It was a little rough with the C125s, but updates were literally coming out weekly and it handled my M4 and M5 servers perfectly. I plan on replacing my whole UCS B-Series with C4200 and Intersight once Rome is released. Really happy to see this review, it was the final nail in the coffin for the B-Series for me.

I knew Supermicro and Gigabyte had 2u4n but never knew Cisco did until I read this.