Our review of the Cisco UCS C4200 has been in the works for over a year. The units were in such demand that it took us this long to get one packed with C125 M5 nodes. The Cisco UCS C4200 we have for review includes eight AMD EPYC 7001 processors across four nodes. That gives the system up to 256 cores and 512 threads in a 2U 4-node (2U4N) form factor. Later in Q3, we will see the AMD EPYC 7002 series launch, which will double those totals to 512 cores and 1024 threads in 2U. Cisco built the machine with this in mind, and in our review, we will show you how Cisco is leveraging this generation’s most innovative CPUs to deliver dense compute.
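For readers who want to check the density math, here is a minimal sketch of how those totals fall out. It assumes two sockets per node (eight CPUs across four nodes), two threads per core, and 32 cores per socket for the top EPYC 7001 parts. The 64 cores per socket for EPYC 7002 is inferred from the doubled totals above; the function name is ours, purely for illustration.

```python
# Core and thread totals for a fully populated 2U4N chassis.
NODES = 4
SOCKETS_PER_NODE = 2    # eight CPUs across four nodes
THREADS_PER_CORE = 2    # assumes SMT is enabled


def chassis_totals(cores_per_socket):
    """Return (cores, threads) for the whole chassis."""
    cores = NODES * SOCKETS_PER_NODE * cores_per_socket
    return cores, cores * THREADS_PER_CORE


epyc_7001 = chassis_totals(32)  # top-bin EPYC 7001: 32 cores per socket
epyc_7002 = chassis_totals(64)  # assumed from the doubled totals in the text

print("EPYC 7001:", epyc_7001)
print("EPYC 7002:", epyc_7002)
```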

Cisco UCS C4200 Hardware Overview

We are going to break our Cisco UCS C4200 hardware overview into two sections. First, we will check out some of the features of the main chassis. We will then focus on the Cisco UCS C125 M5 compute node.

Cisco UCS C4200 Chassis Overview

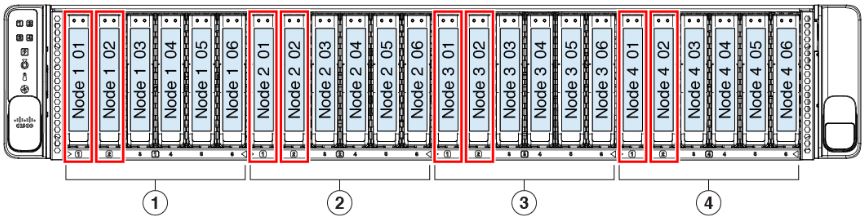

The Cisco UCS C4200 chassis is a 2U design that has a layout common in these types of systems. Our test system has 24x 2.5″ front drive bays.

Each of the four nodes gets six drive bays that can attach different types of storage. Two for each node (eight total) can handle NVMe SSDs. The other four are either SAS3 or SATA drive bays. The Cisco C125 M5 nodes can drive up to six SATA bays on their own, and those who want SAS can add an optional SAS controller. This is a very common design with two NVMe/SAS/SATA and four SAS/SATA bays per node. Indeed, the two other 2U4N chassis in the above picture have a similar drive layout.
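The bay allocation described above can be tallied with a short sketch. The per-node counts come from the text; the constant and function names are hypothetical and only there to make the arithmetic explicit.

```python
# Front drive bay allocation in a 2U4N chassis with 24x 2.5" bays.
NODES = 4
NVME_CAPABLE_PER_NODE = 2    # bays that accept NVMe, SAS, or SATA
SAS_SATA_ONLY_PER_NODE = 4   # bays that accept SAS3 or SATA only


def bay_summary(nodes=NODES):
    """Return per-node and chassis-wide bay counts as a dict."""
    per_node = NVME_CAPABLE_PER_NODE + SAS_SATA_ONLY_PER_NODE
    return {
        "bays_per_node": per_node,
        "total_bays": nodes * per_node,
        "nvme_capable": nodes * NVME_CAPABLE_PER_NODE,
        "sas_sata_only": nodes * SAS_SATA_ONLY_PER_NODE,
    }


summary = bay_summary()
for name, count in summary.items():
    print(f"{name}: {count}")
```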

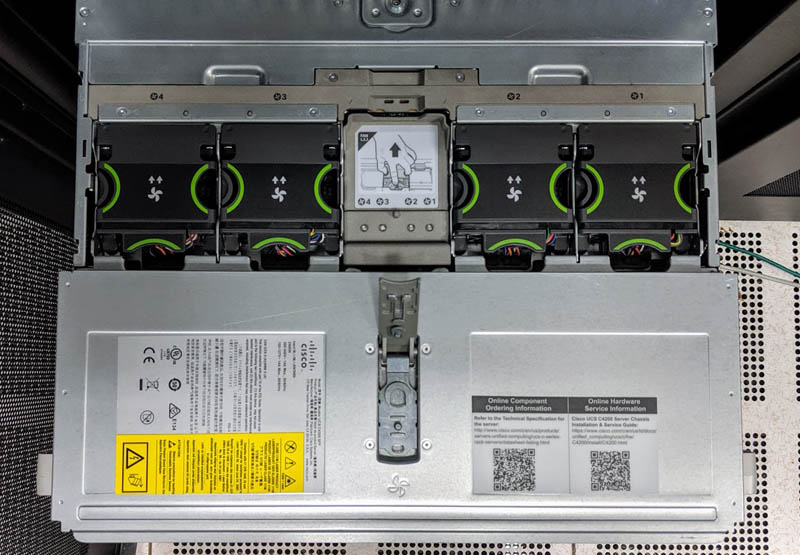

Servicing chassis fans is easy. One pulls the chassis partly out of the rack and opens a small latched cover that leads to the fan assembly area. The cover also has QR codes that link to service instructions. That is important for field service technicians and remote hands.

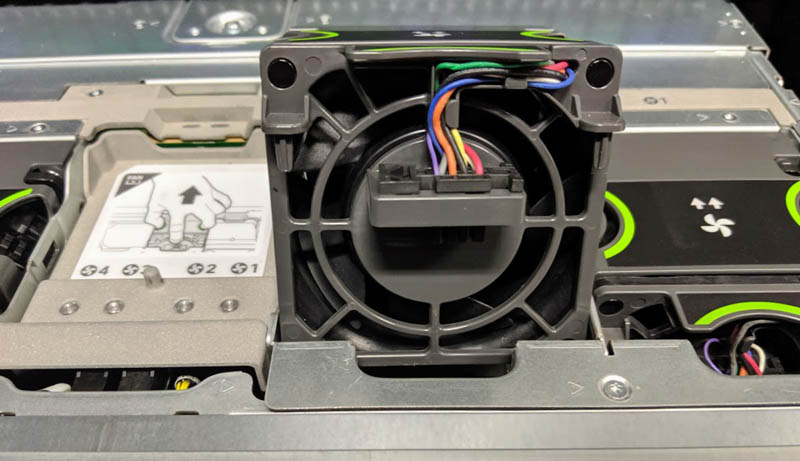

Four nodes, four fans. That is the layout of the Cisco UCS C4200. Each of the fans is hot-swappable, even though fans are very reliable these days. Pulling a fan to take this photo, one can easily see how robust the mechanical design of the UCS C4200 is. We test servers from virtually every major manufacturer, and these are probably the most solidly placed fans in any 2U4N chassis we have tested.

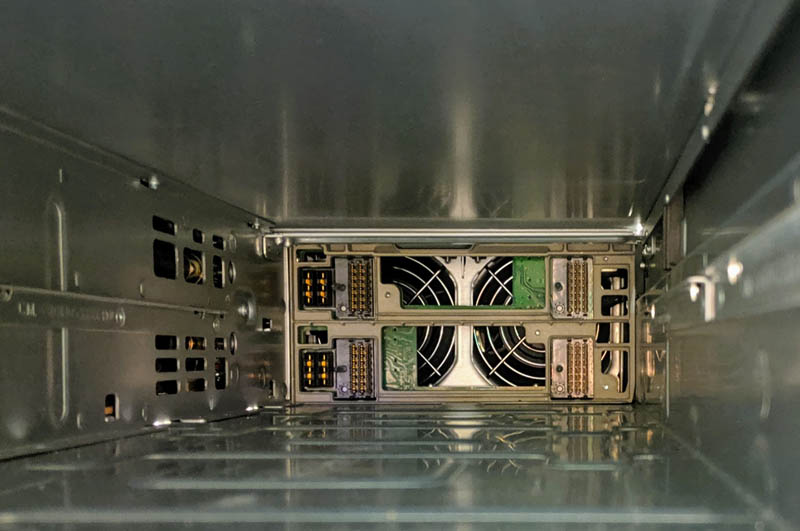

Looking from the rear towards the fans through the node chamber, one can see each side of the chassis has large fans and connectors mounted on PCBs. Cisco uses hot-plug connectors on the nodes, unlike the gold finger designs we typically see in 2U4N chassis. When you are Cisco and have been making high-speed hot-plug connectors for years, such a task is second nature. The impact is that the chassis can be shorter due to the space savings these connectors provide.

Power supplies in our test unit are 2.4kW 80Plus Platinum units from Delta.

Again, there are no PCB gold fingers on the PSU like those Dell EMC, HPE, Inspur, Lenovo, Supermicro, and others use. Cisco’s mechanical design heritage certainly hails from a different lineage than its competition and is operating at a different level.

Officially, the Cisco UCS C4200 is listed as a 32-inch chassis. Compared to some of today’s GPU servers and high-density storage servers, it is not deep at all.

One item we wanted to note is that the rails for the Cisco UCS C4200 protrude further than the chassis. That means they can interfere with zero U PDUs in some racks even if the chassis fits fine. If you have a rack less than 38 inches deep, we suggest checking PDU placement for fit.

Next, we are going to look at the Cisco UCS C125 M5 compute nodes that populate the UCS C4200 chassis.

I’m sure you’re right. Cisco has been doing this so long they’ve got to have a dedicated usability team.

I got one of these to test about 4 months ago and loved it. Used Cisco Intersight for deployment. It was a little rough with the C125s, but updates were literally coming out weekly and it handled my M4 and M5 servers perfectly. I plan on replacing my whole UCS B-Series with C4200 and Intersight once Rome is released. Really happy to see this review; it was the final nail in the coffin for the B-Series for me.

I knew Supermicro and Gigabyte had 2U4N but never knew Cisco did until I read this.