Cinebench has been a popular rendering benchmark for some time. Much of its popularity is due to the fact that it can run on even the lowliest Atom machines and, until today, scaled fairly well to dual and quad socket systems. Today, we are seeing evidence of Cinebench R15 simply breaking.

Last week we published a video called Crushing Cinebench R15 V4. We recorded that video before the official Intel Xeon Scalable Processor (Skylake-SP) launch, so our installation was running older drivers.

While testing another Xeon system in the lab, we noticed that new chipset/BMC drivers were available, so we loaded them onto the quad Intel Xeon Platinum 8180 system. Not only did the new drivers improve performance, but we also saw something very strange: Cinebench appears to be breaking.

A New Cinebench R15 World Record: 11584 cb CPU Score

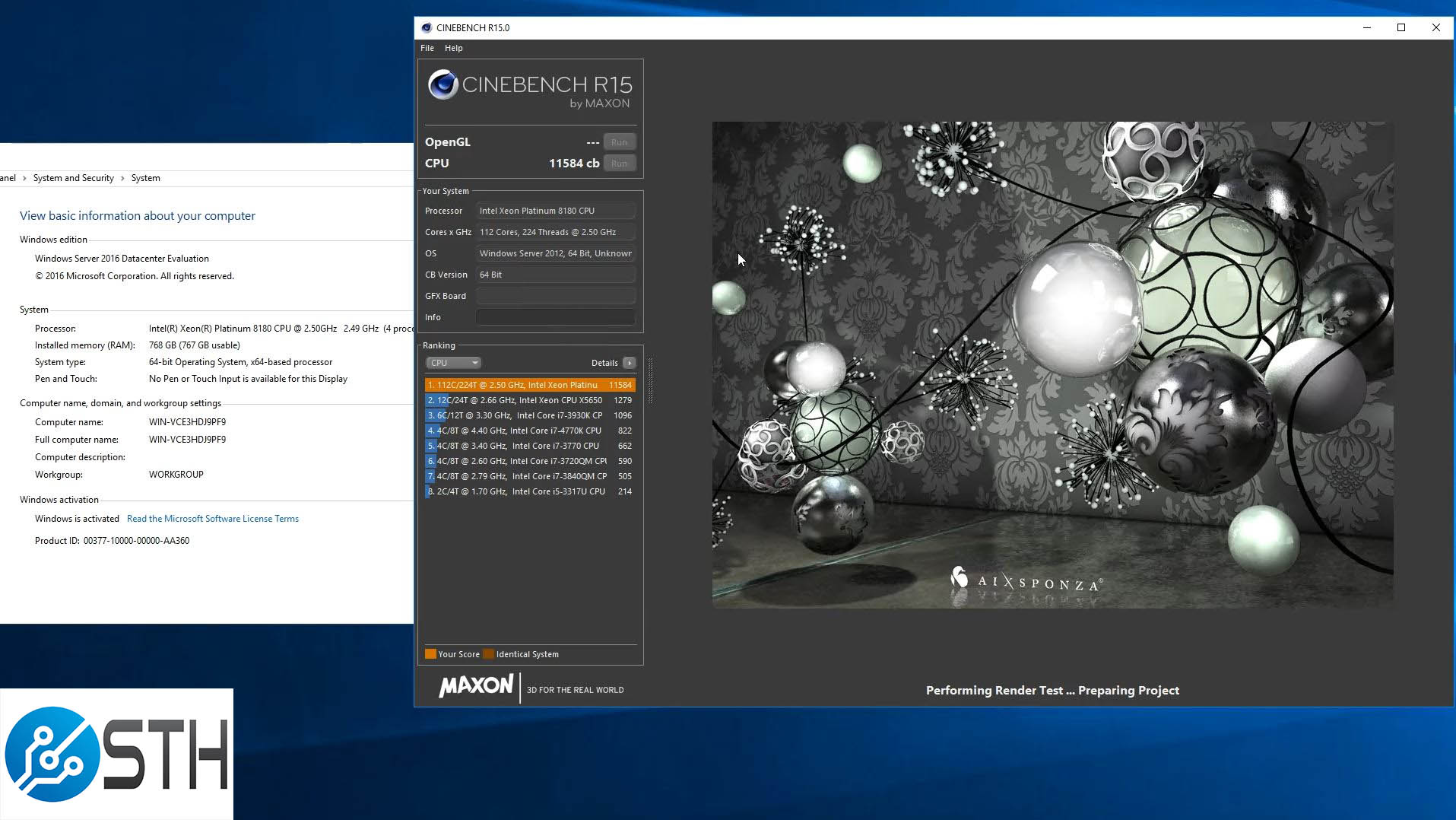

First off, the result: here is a screenshot from the video of the system reaching 11584 cb.

That is surely a huge result, although these are expensive CPUs targeted at software that can cost thousands of dollars (or more) per core. Still, the score represents an improvement of over 20% versus our previous result. As a world record, that may be exciting for some, but there is more to the story. Specifically, the system may be able to go higher, as we are seeing a telltale sign that the hardware has outscaled the software.

Is Cinebench R15 Breaking as a Benchmark?

One of the difficulties in scaling benchmarks is that, on one hand, there are now 4 socket systems like this one with 28 core CPUs. On the other hand, there are small dual core Pentium chips and previous generation (slow) Atom chips that the benchmark must also run on. STH is a data center focused site, so all of our test systems are tucked away in a secure facility well out of earshot, and longer test runs are not an issue for us.

We deal with this on a regular basis: we were the first site to benchmark the Intel Atom C3338 dual core “Denverton” server CPU, but we also test large systems like last generation’s quad E7 PowerEdge R930 and this generation’s quad Intel Xeon Platinum 8180 and 8176. Covering that wide a range with benchmarks that keep working across multiple generations is quite a feat, and over time Cinebench R15 has held up well.

Now there seems to be a clear need, at the high end, for a larger benchmark test scene. That would make the benchmark more valuable for higher-end systems but more time consuming on desktop parts, so we understand why Maxon chose a smaller scene.

Here is a video of the results we obtained from only about 6 minutes spent repeatedly launching runs. We took a 100-second clip from those 6 minutes, around the world record run, to show the variance at play:

One thing you will note is that every run falls in the 5-7 second range. That is important because modern CPUs will typically hit an all-core turbo mode. Along those lines, we are seeing fairly massive differences, at times greater than 20% peak to valley. Generally, when we see that level of variance, and consistent variance (i.e. not one outlier in 100 runs but every run moving significantly), we know it is time to take a closer look at the benchmark.
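The article does not define exactly how “peak to valley” is measured. A minimal Python sketch, assuming it means the gap between the best and worst score in a batch of runs relative to the worst one, is below; the scores are hypothetical placeholders, not measured data.

```python
def peak_to_valley_pct(scores):
    """Gap between the best and worst run, as a percentage of the worst run.

    Assumption: measured relative to the lowest score. Measuring relative to
    the highest score or the mean would give a somewhat smaller number.
    """
    if not scores:
        raise ValueError("need at least one score")
    low, high = min(scores), max(scores)
    if low <= 0:
        raise ValueError("scores must be positive")
    return (high - low) / low * 100.0


# Hypothetical CPU scores from repeated runs (illustrative, not measured).
runs = [1000.0, 1100.0, 1210.0, 1050.0]
print(f"peak to valley: {peak_to_valley_pct(runs):.1f}%")
```

A single outlier can inflate this metric, which is why the paragraph above stresses consistent variance across every run rather than one bad result.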

As an example, even though the c-ray “hard” setting we developed in 2012 is starting to run into the same 5-7 second window, it still produces repeatable runs with well under 5% variance. We will nonetheless be introducing our 8K version soon, simply to get longer run times on large machines.

5-7 seconds is an extremely short time to run a “benchmark” on a modern CPU. We have run significantly longer workloads on this machine, the types that take days to run, and they are extremely consistent. Even large compile jobs in Linux are predictable, with under 1% variance over 100 runs. By comparison, 20% is enormous.
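Run-to-run consistency like the sub 1% compile figure is often expressed as a coefficient of variation (sample standard deviation divided by the mean). The article does not say which statistic was used, so treat this Python sketch as one plausible way to compute it; the compile times are hypothetical.

```python
import statistics


def coefficient_of_variation_pct(samples):
    """Sample standard deviation divided by the mean, as a percentage.

    One common way to express run-to-run variance; assumed here, since the
    article does not name the statistic behind its percentages.
    """
    if len(samples) < 2:
        raise ValueError("need at least two samples")
    mean = statistics.mean(samples)
    return statistics.stdev(samples) / mean * 100.0


# Hypothetical compile-job wall times in seconds (illustrative, not measured).
compile_times = [600.0, 603.0, 597.0, 601.0, 599.0]
print(f"run-to-run variation: {coefficient_of_variation_pct(compile_times):.2f}%")
```

Unlike a peak-to-valley spread, this uses every run, so one outlier moves it less.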

What Can be Done?

We suggest that Maxon increase the size of the test render scene. From what we can see, the benchmark is pushing work to all 224 threads. However, with such a short runtime and such inconsistent results, the workload needs to run longer so that any initialization becomes negligible. In the professional rendering industry, people do not optimize for 6-7 second renders; creative professionals are trying to shorten renders that take hours or days (sometimes longer).
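Why a longer run makes initialization negligible comes down to simple arithmetic. This Python sketch assumes a hypothetical fixed 1-second setup cost (the real figure is not known) and shows what share of wall time it represents as rendering time grows. It also spells out the 224 thread count: 4 sockets x 28 cores x 2 threads per core with Hyper-Threading.

```python
def overhead_fraction(init_seconds, work_seconds):
    """Share of a run's wall time spent on fixed, non-rendering setup.

    Assumption: setup is a constant cost, while rendering time grows with
    the amount of work in the scene.
    """
    return init_seconds / (init_seconds + work_seconds)


def hardware_threads(sockets, cores_per_socket, threads_per_core=2):
    """Total hardware threads, assuming 2-way SMT (Hyper-Threading) by default."""
    return sockets * cores_per_socket * threads_per_core


# Hypothetical 1 s setup cost; the real Cinebench setup time is not known here.
for work in (5.0, 60.0, 3600.0):
    print(f"{work:>6.0f} s of rendering -> {overhead_fraction(1.0, work):.2%} setup")

print(hardware_threads(4, 28))  # quad Xeon Platinum 8180: 224
```

Under that assumption, 1 second of setup is about 17% of a 6-second run but roughly 0.03% of an hour-long render, so any jitter in setup time is diluted accordingly.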

While this is the top-end four socket machine today, it is also a harbinger of the not too distant future. In 2012, the top of the line dual Intel Xeon E5 platform had 16 cores / 32 threads, a feat that AMD is about to match five years later at $1000 with Threadripper.

Your suggestions at the end are quite valid, but another important feature would be for Maxon to actually use AVX (even AVX1; I’m not even asking for AVX-512 support) in a rendering benchmark, to show something a little more modern than a synthetic test of SSE instructions that were in the Pentium IV.

On a different note, would you comment on why companies are making 6+2 DIMM motherboards in this generation? When fully populated, 6+2 seems to be an unbalanced configuration, which can result in lower performance than 6 DIMMs on something like Linpack (with AVX-512).

Hi Patrick,

The CB benchmark renders a C4D file located at “C:\Program Files\CINEBENCH_R15\plugins\bench\cpu\cpu.c4d”. You can open this scene in C4D and increase the output resolution. Default resolution is 896x640px.

Here is the same scene with output resolution increased to 3584x2560px.

https://we.tl/X6mGX0wq4p

If you replace the cpu.c4d file, it will benchmark the larger scene.

The resulting CB score will be unique and not comparable with other scores.
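Going from 896x640 to 3584x2560 quadruples each dimension, so the enlarged scene renders 16 times as many pixels. A rough Python sketch of what that could mean for run length, assuming (purely for illustration) that render time grows linearly with pixel count, which ignores fixed setup costs and scene-dependent effects:

```python
def pixel_ratio(old_res, new_res):
    """How many times more pixels the new output resolution renders."""
    (old_w, old_h), (new_w, new_h) = old_res, new_res
    return (new_w * new_h) / (old_w * old_h)


default = (896, 640)     # default CB R15 output resolution, per the comment above
enlarged = (3584, 2560)  # enlarged scene resolution, per the comment above
ratio = pixel_ratio(default, enlarged)
print(ratio)  # 16.0

# Rough estimate ONLY: assumes render time scales linearly with pixel count.
for seconds in (5.0, 7.0):
    print(f"{seconds:.0f} s run -> roughly {seconds * ratio:.0f} s")
```

If that linear assumption held, the 5-7 second runs would stretch to roughly 80-112 seconds.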

F – We covered this as part of our launch coverage: https://www.servethehome.com/intel-xeon-scalable-processor-family-platform-level-overview/

Sen – thank you for this. We use Cinebench R15 because its results can be compared with general purpose PCs. If Cinebench adds a new standard, we will look into it, but we have little desire to create a custom data set just for Cinebench (we do our benchmarking in Ubuntu / CentOS).

“it is also is a harbinger for the not too distant future.”

A little strange here.

What they need to do is make the rendering process NOT time limited.

That is, it should just keep rendering for as long as you want.

The actual “test” would then take a “sample” over a certain period of the rendering.

That period should be long enough to collect enough data to produce a trustworthy result.

Let's say the test sample size is 5 minutes.

The benchmark software would determine how long it needs to load up whatever CPUs are running the software, and then wait until the loadup is finished before running the sample.

And there you go, totally scalable benchmark :D
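The commenter's idea can be sketched in Python as a warm-up phase whose results are discarded, followed by a fixed measurement window in which completed work units are counted. Everything here is hypothetical: `sampled_throughput` and `toy_render_tile` are illustrative names, not part of Cinebench, and a real multi-socket benchmark would run one such loop per hardware thread rather than a single Python loop.

```python
import time


def sampled_throughput(work_unit, warmup_s, sample_s, clock=time.perf_counter):
    """Hypothetical sketch of a time-sampled benchmark (not how Cinebench works).

    Runs `work_unit` repeatedly: first for `warmup_s` seconds, discarding the
    results, then counts how many units finish inside a `sample_s` window and
    returns units per second. Single-threaded for simplicity.
    """
    # Warm-up phase: let clocks, caches, and any thread pools settle.
    end_warmup = clock() + warmup_s
    while clock() < end_warmup:
        work_unit()

    # Measurement phase: count only units that finish before the deadline.
    completed = 0
    deadline = clock() + sample_s
    while True:
        work_unit()
        if clock() > deadline:
            break
        completed += 1
    return completed / sample_s


def toy_render_tile():
    # Hypothetical stand-in for rendering one tile of a scene.
    sum(i * i for i in range(10_000))


rate = sampled_throughput(toy_render_tile, warmup_s=0.2, sample_s=1.0)
print(f"{rate:.1f} tiles/s")
```

Setting `sample_s=300` would match the 5-minute sample suggested above. The injectable `clock` parameter lets the timing logic be tested with a fake clock instead of waiting in real time.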