Cerebras is certainly making waves in the AI industry with a fresh approach. While companies like Habana Labs and Graphcore are building chips in PCIe and OAM form factors to combat NVIDIA’s AI leadership, Cerebras is doing something different. It is using a giant piece of silicon to consolidate a cluster of AI systems into a single box. You can read more about the wafer-scale product in our piece Cerebras Wafer Scale Engine AI chip is Largest Ever. At SC19, the company showed off the Cerebras CS-1, an integrated system that turns the wafer-scale chip into a deployable product.

Cerebras CS-1 Wafer-Scale AI System at SC19

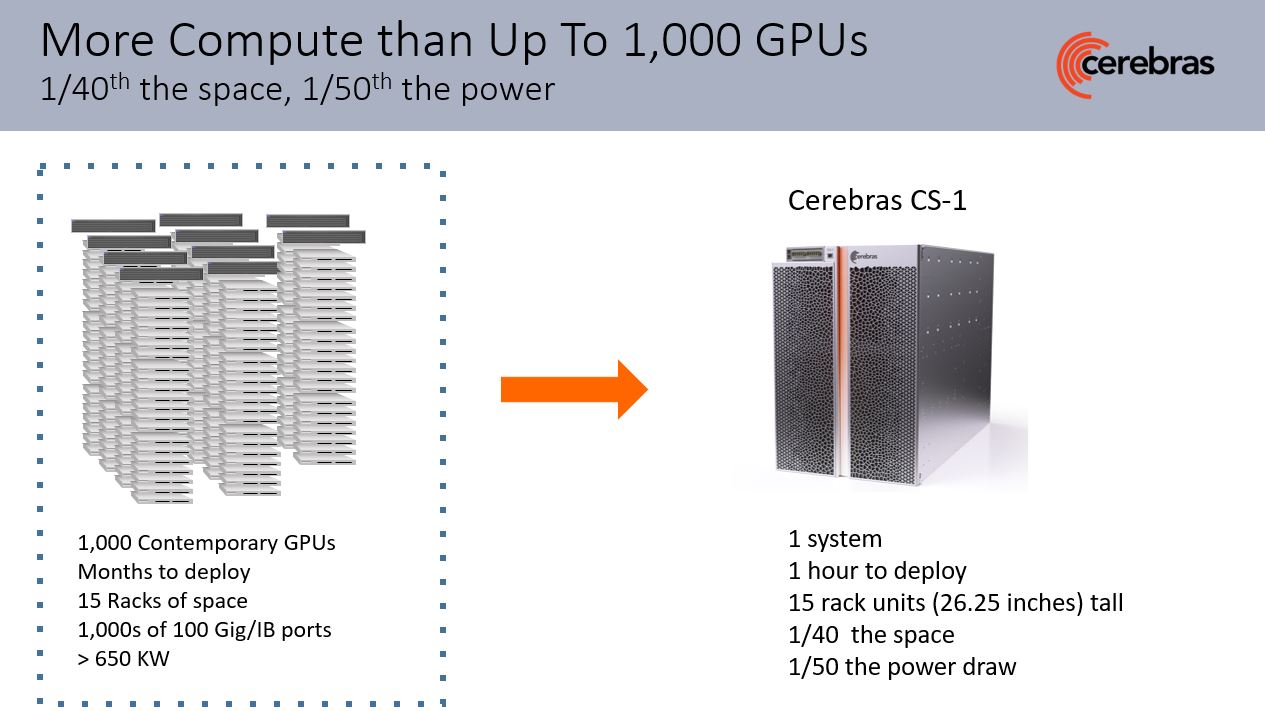

On the SC19 show floor, the company put to rest the idea that its wafer-scale AI chip would be hard to deploy. It showed up with Cerebras CS-1 systems, the same model that has been delivered to Argonne National Laboratory.

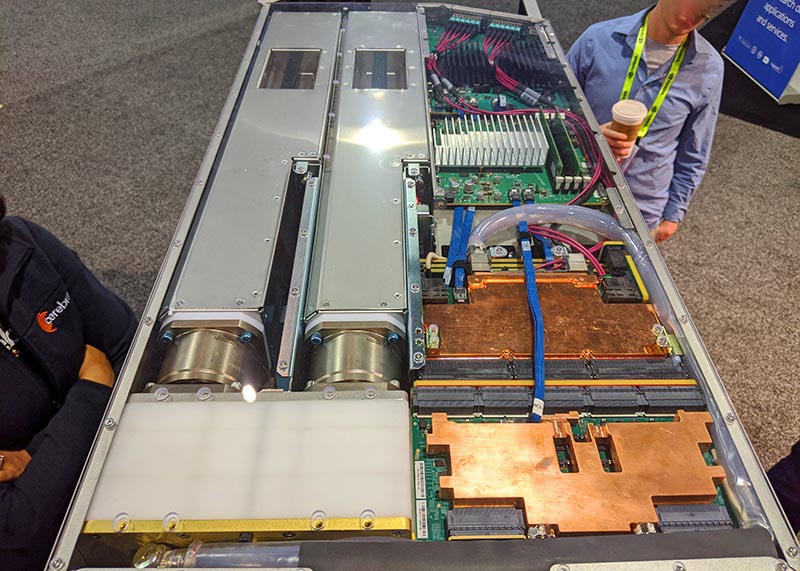

You can see the I/O module at the top left, with 12x power supplies below that. To the right are the pump modules, with fan modules below them. As an interesting aside, those big doors at the front of the CS-1 are each made from a single piece of aluminum.

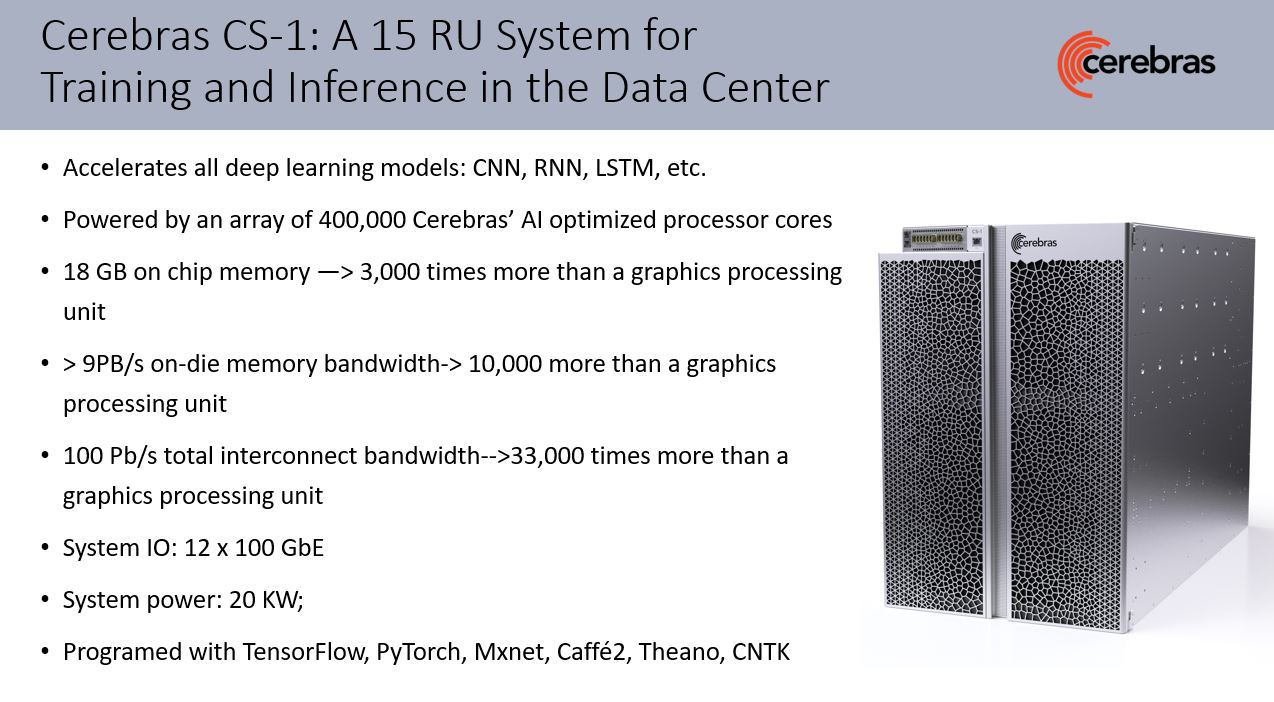

Inside the system is one of the company’s chips along with all of the power delivery and liquid cooling bits necessary to use a chip that large. The 400,000 AI core chip has 18GB of on-chip memory, 9PB/s of memory bandwidth, and over 100Pb/s in interconnect bandwidth.
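To put those headline numbers in perspective, here is a rough back-of-the-envelope sketch of what they imply per core. It assumes the memory and bandwidth are spread evenly across all 400,000 cores and that the figures use decimal units; neither assumption is confirmed above, and the variable names are purely illustrative.

```python
# Figures quoted in the article for the wafer-scale chip.
AI_CORES = 400_000
ON_CHIP_MEMORY_BYTES = 18e9        # 18GB, assuming decimal gigabytes
MEMORY_BANDWIDTH_BYTES_S = 9e15    # 9PB/s, assuming decimal petabytes

# Assumption: resources are divided evenly across every core.
memory_per_core_kb = ON_CHIP_MEMORY_BYTES / AI_CORES / 1e3
bandwidth_per_core_gb_s = MEMORY_BANDWIDTH_BYTES_S / AI_CORES / 1e9

print(f"Memory per core: {memory_per_core_kb:.1f} KB")
print(f"Memory bandwidth per core: {bandwidth_per_core_gb_s:.1f} GB/s")
```

Under those assumptions, each core ends up with tens of kilobytes of local memory and tens of gigabytes per second of bandwidth to it, which is a very different balance than a GPU with a few large off-chip memory stacks.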

Each Cerebras CS-1 is designed to use up to 20kW. In a 15U chassis, that is only about 1.3kW per U, or about what we would see from a 2U 4-node server or a GPU compute server.
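The power density figure is simple division, sketched below using the 20kW and 15U values from above. The function and constant names are ours and only for illustration.

```python
# Figures quoted in the article for the CS-1.
CS1_MAX_POWER_KW = 20.0
CS1_HEIGHT_U = 15


def power_density_kw_per_u(power_kw: float, rack_units: int) -> float:
    """Return average power draw per rack unit."""
    if rack_units <= 0:
        raise ValueError("rack_units must be positive")
    return power_kw / rack_units


density = power_density_kw_per_u(CS1_MAX_POWER_KW, CS1_HEIGHT_U)
print(f"CS-1 power density: {density:.2f} kW per U")
```

Keep in mind this is the maximum design power averaged over the chassis height, not a measured draw.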

Although the system utilizes 12x 100GbE connections for up to 1.2Tbps of external bandwidth, the real excitement happens on the massive chip itself. It is much easier, and uses less power, to get high-bandwidth interconnects through a piece of silicon than to connect multiple systems externally. Putting more compute, memory, and fabric onto a single chip and packaging that into a single system makes it easier to integrate. Instead of having to bring up an InfiniBand fabric to scale to multiple GPUs across multiple systems (a key reason NVIDIA is to acquire Mellanox), everything happens across the wafer. That lowers power consumption and also makes the system much faster to integrate and deploy.
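To show how lopsided the on-wafer versus external bandwidth is, here is a small sketch comparing the two figures quoted in this article. It treats the "over 100Pb/s" interconnect number as a lower bound and compares raw link rates only, so the ratio is a rough illustration rather than a performance claim.

```python
# External connectivity quoted in the article.
GBE_LINKS = 12
GBPS_PER_LINK = 100

# On-wafer interconnect, quoted as "over 100Pb/s"; treated as a lower bound.
FABRIC_PBPS = 100

external_tbps = GBE_LINKS * GBPS_PER_LINK / 1000   # Gb/s -> Tb/s
fabric_tbps = FABRIC_PBPS * 1000                   # Pb/s -> Tb/s
ratio = fabric_tbps / external_tbps

print(f"External bandwidth: {external_tbps:.1f} Tb/s")
print(f"On-wafer fabric:    {fabric_tbps:,} Tb/s (lower bound)")
print(f"Fabric is at least {ratio:,.0f}x the external links")
```

The gap of several orders of magnitude is the point: traffic that stays on the wafer never has to squeeze through the Ethernet links.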

In the top view, one can see the 12x 100GbE links heading to the front of the 15U chassis. You can also see the two pump modules on the left which help ensure the chip stays cool. Here is a side view of the CS-1 where you can see the modules from another angle:

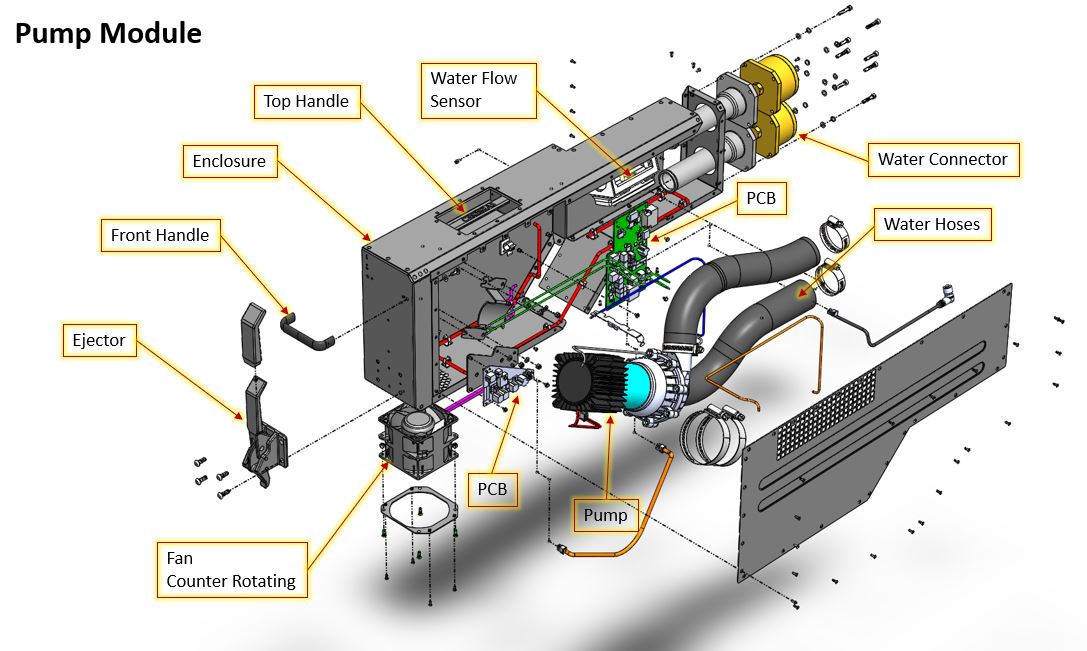

Here is a quick look at the mechanicals of a pump module:

As complex as this seems, Cerebras designed this solution to be serviceable and to integrate into existing data centers. The other key aspect is that each system essentially replaces a cluster of GPU systems along with their cooling and network fabric, which makes this a relatively simple design by comparison.

Cerebras Software and Clustering

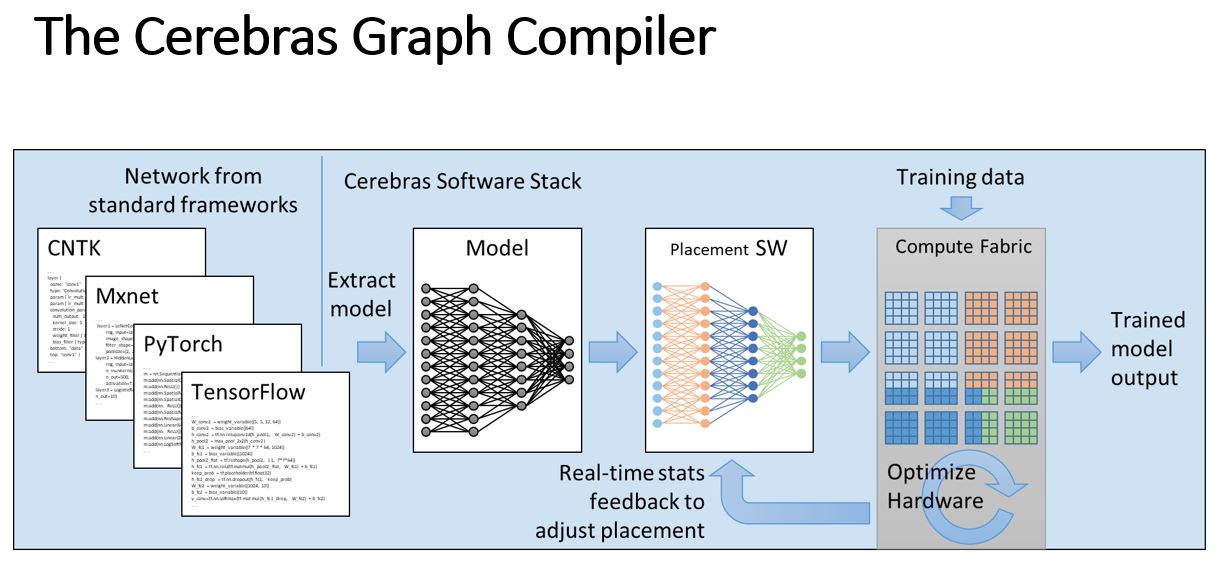

Although we have mostly focused on the hardware, the software side is where a lot of the “magic” happens. Cerebras’ software stack takes an AI developer from high-level frameworks like TensorFlow and PyTorch through placing the model on the wafer-scale chip and running it.

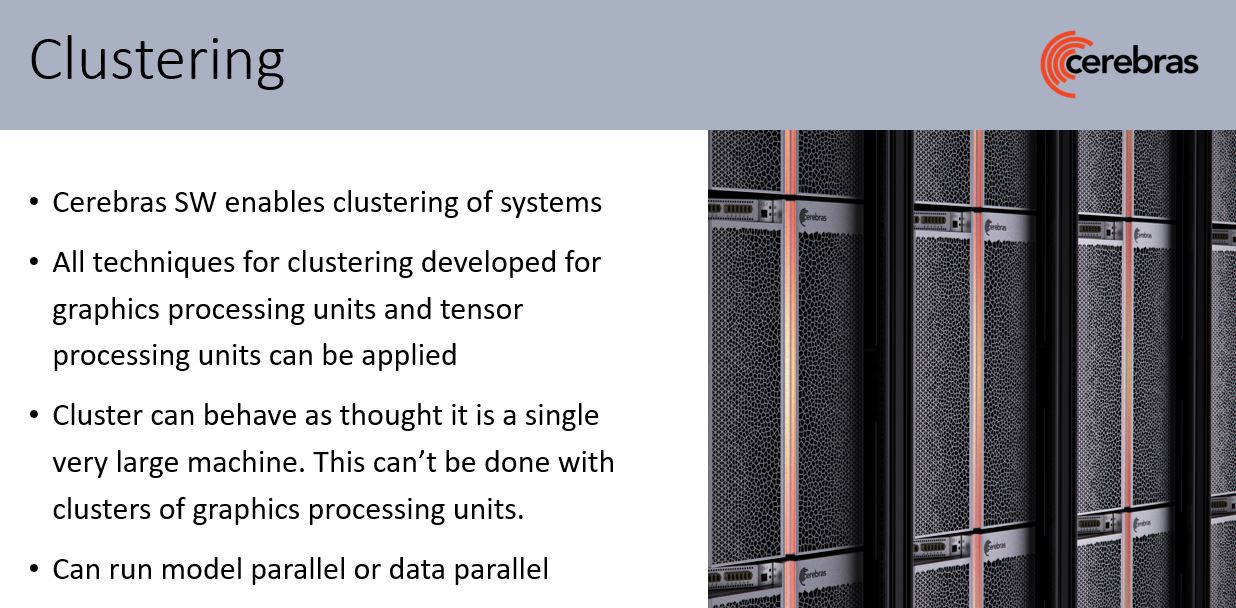

Cerebras is not stopping with a single system. Instead, its solution is designed to scale to multiple systems.

Cerebras is delivering both software and complete solutions, and with its focus on scale it is thinking well beyond a handful of PCIe accelerators.

Final Words

Cerebras is absolutely doing something different with the CS-1. When the company showed the chip at Hot Chips this year, the question was how it would go from that point to something deployable. By showing off a system that it is starting to ship, Cerebras is putting distance between itself and other startups that have ideas but are not shipping. Add that to the inherent benefit of massive consolidation from wafer-scale, and Cerebras is doing enough to challenge the norm in the AI space.

Thank you for the in-depth article Patrick! Is there any indication on the price or would I have to get a direct quote from Cerebras?

Any benchmark results where it’s faster than 1000 GPUs?

How much does it cost?

I heard it’s like $2.5 mil. If it’s really replacing 100 GPUs at only 20KW that’s actually a bargain.

Finally something in line with historic ThinkingMachines CM-1/2. Great achievement indeed.

It looks badass! I like it. Although I won’t presume these things are sold for their looks.

I’m just going to agree with everyone else here. This thing is so freaking cool! Talk about heavy iron!