Build #3: Falcon Northwest Does it Better

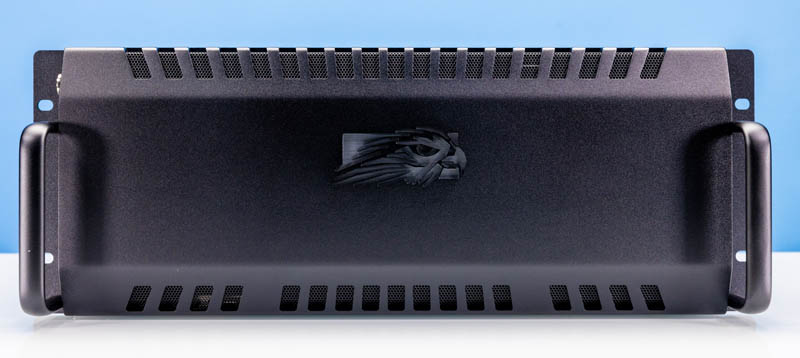

Falcon Northwest caught wind that we were doing these builds and asked to send one of their new rackmount workstations. The Falcon Northwest RAK is a 4U chassis, but it still surprised us in a few ways.

The overall system is only 19″ deep, making it much shorter than we were expecting. Unlike the workstations we build into 4U cases, this is a liquid-cooled and shockingly quiet workstation.

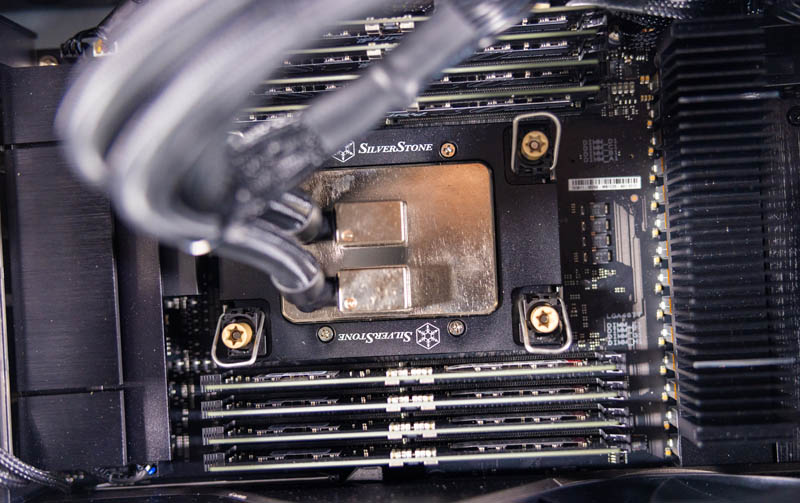

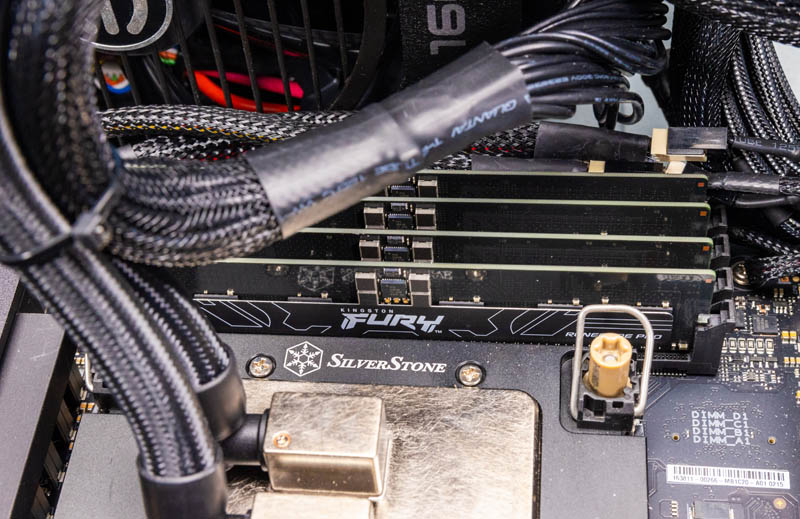

The liquid cooling block is made by SilverStone.

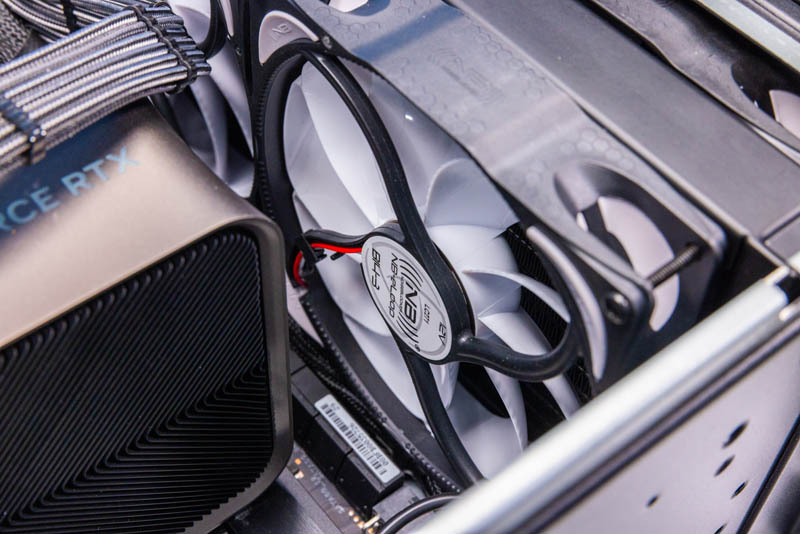

The front radiator uses four huge Noise Blocker fans.

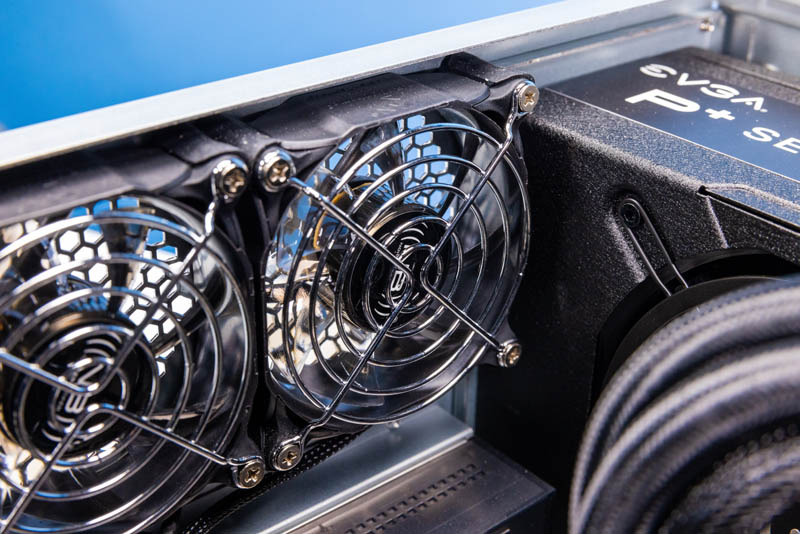

The rear fans are also Noise Blocker units.

Memory is the Kingston Fury Renegade Pro. This is DDR5-6000 ECC RDIMM memory, so one gets around 25% more memory bandwidth from the faster DIMMs than at the platform's stock DDR5-4800.
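As a rough sanity check on that 25% figure, here is a minimal sketch of the theoretical peak bandwidth math. It assumes a DDR5-4800 baseline, eight memory channels, and 64 bits (8 bytes) of data per channel per transfer. Those parameters and the helper name `peak_bandwidth_gbs` are our assumptions for illustration, not measured results from these systems.

```python
def peak_bandwidth_gbs(transfer_rate_mts: int, channels: int = 8, bus_bytes: int = 8) -> float:
    """Theoretical peak memory bandwidth in GB/s (1 GB = 10^9 bytes).

    transfer_rate_mts: DIMM speed in mega-transfers per second (e.g. 6000 for DDR5-6000).
    channels: number of populated memory channels (assumed to be 8 here).
    bus_bytes: data bytes moved per transfer per channel (assumed 64-bit, so 8).
    """
    return transfer_rate_mts * 1e6 * bus_bytes * channels / 1e9


# Assumed baseline speed versus the DDR5-6000 kit in this build.
baseline = peak_bandwidth_gbs(4800)
faster = peak_bandwidth_gbs(6000)
uplift_pct = (faster / baseline - 1) * 100

print(f"DDR5-4800: {baseline:.1f} GB/s")
print(f"DDR5-6000: {faster:.1f} GB/s")
print(f"Uplift:    {uplift_pct:.0f}%")
```

These are theoretical ceilings. Real workloads will see less, and the uplift depends on how bandwidth-bound the application is.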

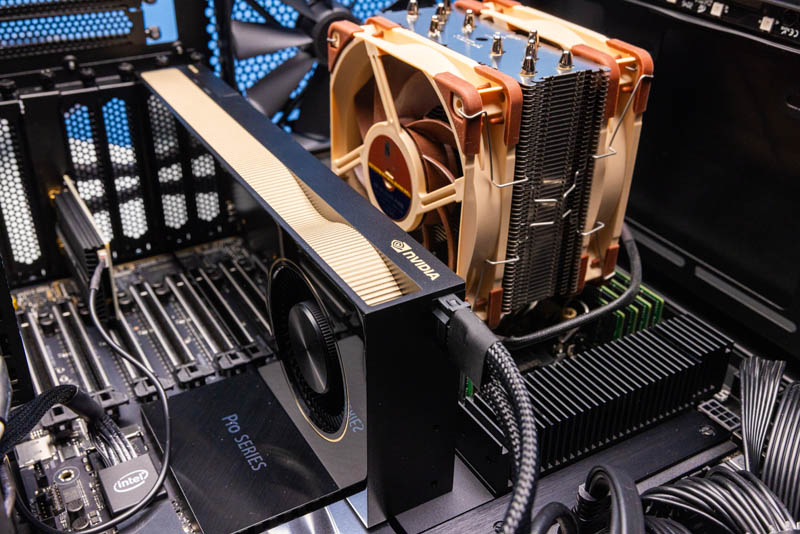

The NVIDIA RTX 4090, as massive as it is, fits in this case. We also put an NVIDIA RTX 6000 Ada in the system, and since that uses a smaller cooler, it fits as well. It was fun to see that Falcon Northwest added a small WiFi card for us, almost exactly like we did for the DIY build.

Perhaps the most interesting part of this is that the system can be rackmounted and still has IPMI, lots of USB and audio I/O (not common on server motherboards), and dual 10GBASE-T from the motherboard as base networking. If you wanted a GeForce GPU compute server, this can fill that role well.

One area that we showed in the video is the noise. This system took our 34dBA studio to 37.5-39dBA. We were shocked, as we thought it would sound like a jet engine. Falcon Northwest makes premium systems, and part of that is making pre-built systems that can be used in studios even if they are packing a huge amount of performance. Bryan ran from the studio to my office when he turned it on because he was so shocked at how quiet it ended up being and wanted to show me.
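To put those readings in context, one can estimate the system's own contribution by subtracting the room's background level on an energy basis. This is a minimal sketch of the standard background-noise correction, not how we measured in the video. It assumes the system and the room noise are uncorrelated and that both readings were taken at the same position. The function name `source_level_dba` is hypothetical.

```python
import math


def source_level_dba(total_dba: float, background_dba: float) -> float:
    """Estimate a source's level from a combined reading and a background reading.

    Decibel levels add on a power basis, so convert both readings to relative
    power, subtract the background, and convert the remainder back to dB.
    """
    if total_dba <= background_dba:
        raise ValueError("combined level must exceed the background level")
    total_power = 10 ** (total_dba / 10)
    background_power = 10 ** (background_dba / 10)
    return 10 * math.log10(total_power - background_power)


background = 34.0  # studio noise floor from the article, in dBA
low = source_level_dba(37.5, background)   # quieter end of the reported range
high = source_level_dba(39.0, background)  # louder end of the reported range

print(f"Estimated system-only level: {low:.1f} to {high:.1f} dBA")
```

Under those assumptions, the workstation alone would land at roughly 35 to 37dBA, only slightly above the noise floor of the empty studio.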

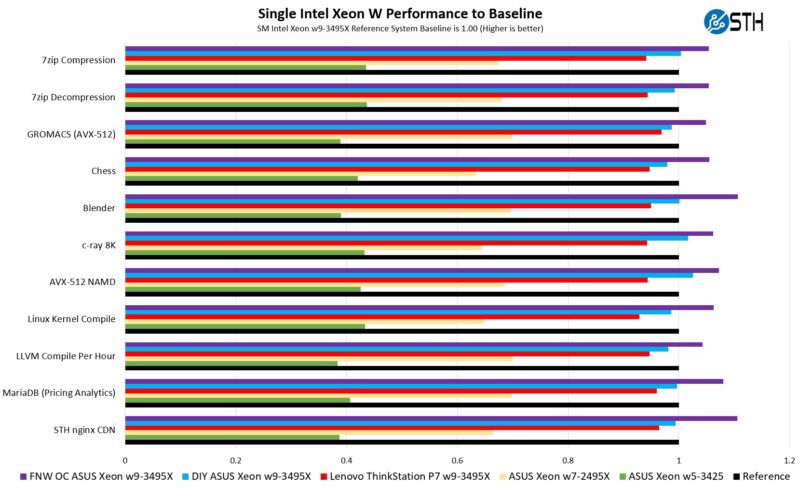

Using the ASUS motherboard to its max, one can get 100K on Cinebench R23. We instead used the standard overclock profile that Falcon Northwest applied, and one can see that it easily outpaces our DIY build. It is also in another league of performance compared to the Lenovo P7.

We often assume workstations with the same CPU are very close in performance, but in the above, we have four workstations with the same CPU that have very different performance outcomes.

Final Words

Hopefully, this gives our STH readers some idea about what can be done with this platform. Having what is essentially a Sapphire Rapids Xeon with a range of options from low to high core counts, plus the flexibility to overclock memory and go into a high-power performance mode, should be interesting to many of our readers. If you are not using Sapphire Rapids acceleration, these will be faster single-socket server platforms than the Intel Xeon Platinum 8480 at a fraction of the price. Using the motherboard with ASUS’s management solution helps make these either server or workstation platforms, or ones that migrate from workstation to server roles.

We have already reviewed many of the components used in these systems. We will have a full Falcon Northwest RAK review coming as it is really interesting to get into the details of the system. We are also going to be reviewing some of the products that we used in these builds.

The strange part is that the STH team was very polarized on which build we thought was the best. Some thought the Falcon Northwest build was the best because it could actually edge out a 64-core Threadripper Pro 5995WX, with more memory bandwidth and connectivity, despite having eight fewer cores. Others really liked the first build with tons of connectivity. There is a premium for this platform because of that connectivity, but we have a lot of STH users looking for a system where PCIe lanes are more important than core counts.

We hope our readers like this type of content. I will say, our team did a great job even if this one got impacted (significantly) by scope creep as we kept finding new components to use.

Can you undervolt these CPUs? The power consumption is limiting them too much.

@Makis

There are options to apply negative voltage offsets in the BIOS of this board, but I suspect that these settings are being ignored in actual operation because power consumption still remains relatively high after applying such offsets.

Despite the high power consumption, these CPUs aren’t typically thermally constrained, so their performance will not benefit from undervolting.

@Patrick

Did you have trouble cooling the memory during more stressful workloads? I’ve got the same platform and ended up having to get water blocks for all the DIMMs to keep them from overheating.

Also, I’ve noticed a huge discrepancy (~30%) in performance between running the same FEA workload on Windows vs Linux; I’m thinking it’s scheduler-related.

Was there a reason for having the Noctua cooler blowing down onto the GPU instead of up to the top vents? Curious if having it the other way round would’ve been even better.

The QAT situation on these is … there just isn’t one? I am having trouble interpreting the extremely large Xeon feature matrix for this generation.

Impressive choice! Building 3x Intel Xeon W-3400 workstations and servers with the ASUS Pro WS W790E SAGE SE is a fantastic way to achieve top-notch performance and reliability. The ASUS Pro WS motherboard’s robust features and support for multiple Xeon processors make it an ideal foundation for high-end computing solutions. Well done on your hardware selection!

@Patrick

Any idea if there would be boards from Supermicro, ASRock Rack, etc. for these CPUs?

Would be interesting to see what a slightly more server-like board would be for these.

@wes What is wrong with the Supermicro X13SWA/X13SRA?

You write, “We will quickly note that we had an EPYC Genoa workstation build that our team thought was too cumbersome to really recommend as it was a bear to get working.” Even if not recommended, it would be interesting to hear about the troubles of the parallel Genoa project.

It seems a shame that it has seven PCIe slots but most of them are impossible to use once you put a GPU or three in. So then you use riser cables but GPUs generally fail to fully utilise a 16x slot. Actually maxing this system out for use with HuggingFace AI models would be intriguing but perhaps the hardware to do so doesn’t really exist…?

I am looking at the CPU-Z specs presented in the video at 00:49 and am trying to figure out why the readout for the Intel Xeon w5-3425 (left) is not consistent with the one for the Intel Xeon w9-3495X (right). First, the alphanumeric portion of the model is not included in the “Name” field near the top of the “Processor” section for the w5-3425, as it is for the w9-3495X. Second, the “Clocks (Core #0)” section does not seem to follow the same logic. The w5-3425, rated (advertised) at 3.2GHz with a turbo speed of 4.6GHz, is shown with a “Core Speed” of just 796.00MHz and a “Multiplier” of only x8.0, given a “Bus Speed” of 99.75MHz, whereas the w9-3495X, rated at 1.9GHz with a turbo speed of 4.8GHz, displays a more understandable “Core Speed” of 4800.00MHz and a “Multiplier” of x48, given a “Bus Speed” of 100.00MHz. Presuming all BIOS settings are basically identical, shouldn’t the CPU-Z report for the w5-3425 show a “Core Speed” of 4600.00MHz, consistent with the advertised turbo speed, and a “Multiplier” of x46? Please also explain what the values in parentheses mean: the w5-3425 range is (5.0-32.0), while the w9-3495X range is (5.0-7.0). I would like to see the industry address the legacy (mid-1990s) “Bus Speed” of ~100MHz-133MHz.

Do these servers support CXL?

But why was the Supermicro platform faster? You nonchalantly dropped in that comment as if it was no big deal. Well, it’s a big deal! lol. Is there a simple explanation?

Hi everyone,

Great that I found this article. However, I faced memory issues during a similar build, but with two 4090 GPUs. I used RDIMM ECC memory (64GB DDR5 4800MT/s ECC Reg 2Rx4 Module KTL-TS548D4-64G Server Memory) and was surprised by d2, b7, and b9 codes, all memory-related errors.

Can you give any hints, and tell me what memory was used in your setup?