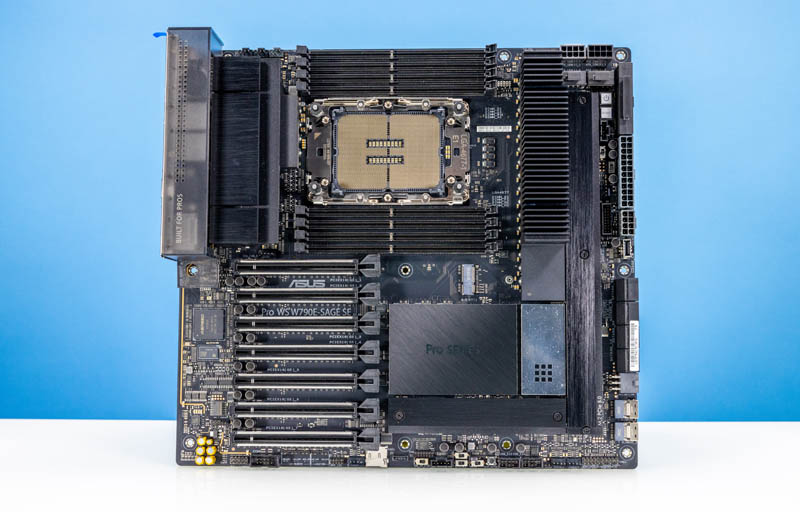

A few months ago, we covered the Intel Xeon W-3400, Xeon W-2400, and W790 launch. That set off perhaps the most ambitious project in STH history. Somewhat unintentionally, we built THREE different systems, all based around the ASUS Pro WS W790E SAGE SE motherboard. The first is a system with fewer CPU cores but plenty of ECC memory capacity and PCIe Gen5 connectivity that can act as either a workstation or a server. The second is a DIY high-end workstation. The third is a Falcon Northwest RAK, a higher-performance, lower-noise, short-depth 4U system fully in the spirit of STH. With that, let us get started here.

Accompanying Video

For this one, we had to do a video because we put so much into this. If you want to see the video, check it out here:

We always suggest watching this in its own tab, window, or app for a better viewing experience.

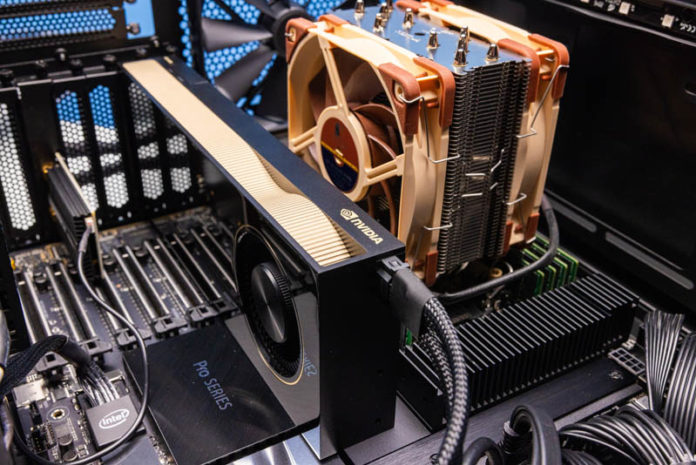

This was a crazy, ambitious project that required a massive amount of hardware, and several companies let us use products to make it happen. We ended up using a common motherboard, the ASUS Pro WS W790E SAGE SE, because that is what we had available. Since this took a long time to put together, the hardware came from several sources: Intel provided chips, and we used the PNY RTX 6000 Ada while we still had the review unit in the lab. We used a Micron SSD along with a Kioxia CM7 PCIe Gen5 NVMe SSD; we have used the Kioxia drive in these systems since, but it arrived after we were already in the production pipeline. We also received coolers from Noctua mid-build. To say that this took a massive amount of hardware to put together is an understatement. Also, the STH YouTube members helped get us a lower-cost Xeon W for the first build, so a thank you to them is in order.

Build #1: Lower-Cost Higher-Connectivity

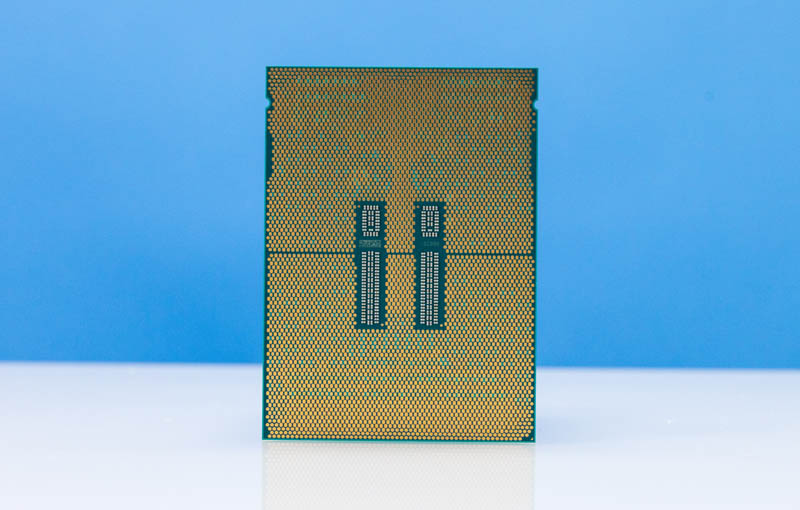

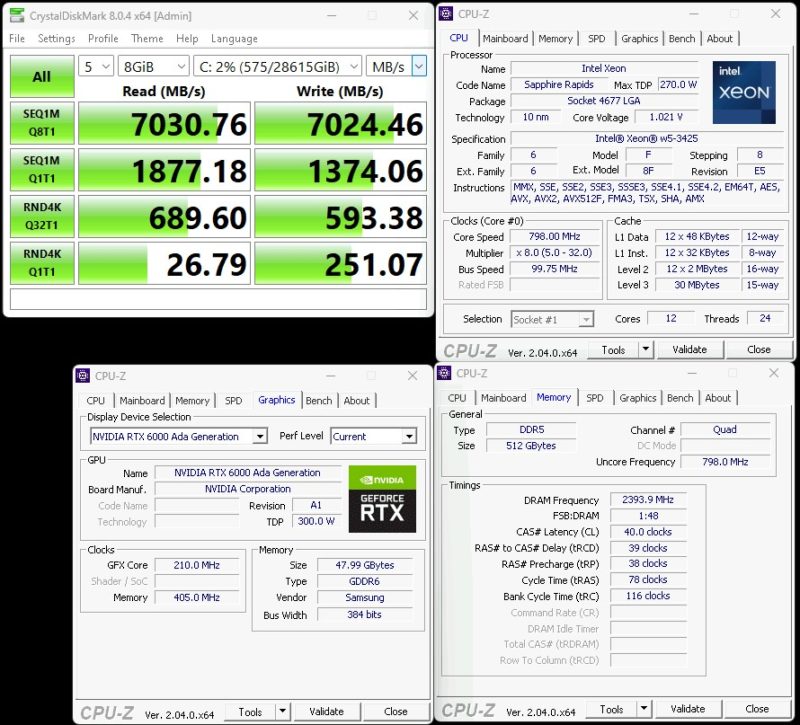

Often, when folks look at the Intel Xeon W-3400 series, they immediately go to the top end. The series also has a number of lower-cost SKUs below the $4000 Intel Xeon w9-3495X. We are using the Intel Xeon w5-3425, which is still costly at around $1190, but it has a few major features. Namely, while it has a mundane 12 cores, it has 112 lanes of PCIe Gen5, plus PCH I/O, and 8 channels of DDR5 ECC RDIMM support.

That connectivity is what we are exploiting for this build. Many of our readers have been frustrated that getting PCIe Gen5 meant running either a new server Xeon or EPYC platform, or a consumer platform with limited memory capacity and PCIe connectivity. The lower-end Intel Xeon W-3400 series processors fix those challenges. We will quickly note that we had an EPYC Genoa workstation build that our team thought was too cumbersome to really recommend as it was a bear to get working. The advantage of what we are doing here is that we get all of these features with an easy, consumer-like setup experience.

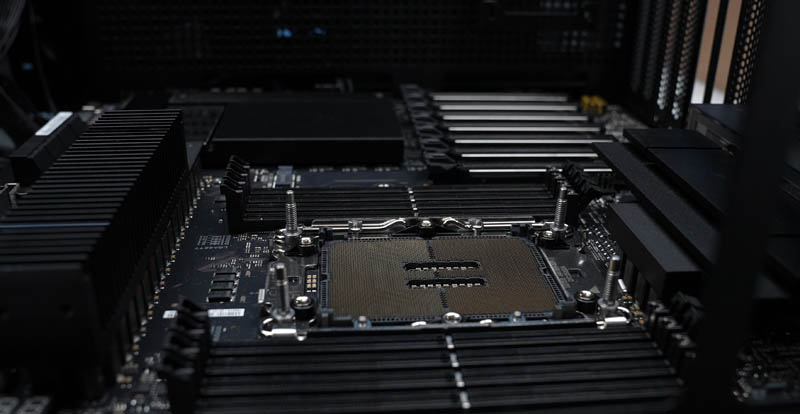

Intel also sent an Intel Xeon w7-2495X for this build. It offers less PCIe Gen5 and DDR5 connectivity, but it costs less. As we covered yesterday in Intel LGA4677 112L E1A and 64L E1B Brackets for Intel Xeon W-3400 and W-2400 Series, the W-2400 series chips actually use a different bracket from the W-3400 series. Our motherboard had both.

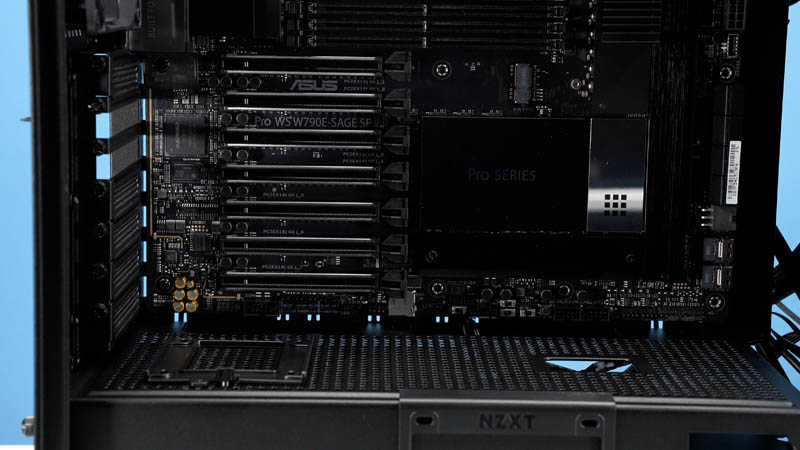

Bryan took this photo of all the slots, which we thought looked cool: 15 slots and one socket down the board.

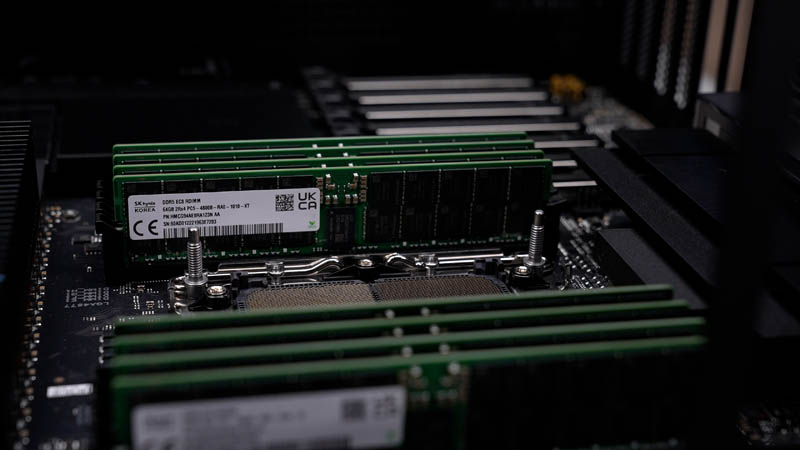

On the memory side, one gets plenty of DDR5 ECC RDIMM slots, so one can easily pick between 16GB, 32GB, and 64GB modules at fairly reasonable costs. One can go larger or smaller, but that range alone provides a lot of flexibility.
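To put those module sizes in perspective, here is a minimal sketch of the capacity arithmetic. It assumes 8 DIMM slots (the 15 slots in the photo above minus the seven PCIe slots), one per memory channel, all filled with identical modules; the function and constant names are hypothetical.

```python
# Total memory capacity for a fully, evenly populated board.
# Assumption: 8 DDR5 RDIMM slots (15 board slots minus 7 PCIe slots),
# one DIMM per channel, every slot filled with the same module size.
DIMM_SLOTS = 8


def total_capacity_gb(dimm_size_gb: int, slots: int = DIMM_SLOTS) -> int:
    """Return total capacity in GB when every slot holds a dimm_size_gb module."""
    return dimm_size_gb * slots


for size in (16, 32, 64):
    print(f"{size}GB RDIMMs x {DIMM_SLOTS} = {total_capacity_gb(size)}GB")
```

Under those assumptions, the 16GB, 32GB, and 64GB options work out to 128GB, 256GB, and 512GB, respectively. Filling all eight channels also keeps every memory channel in use rather than leaving bandwidth on the table.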

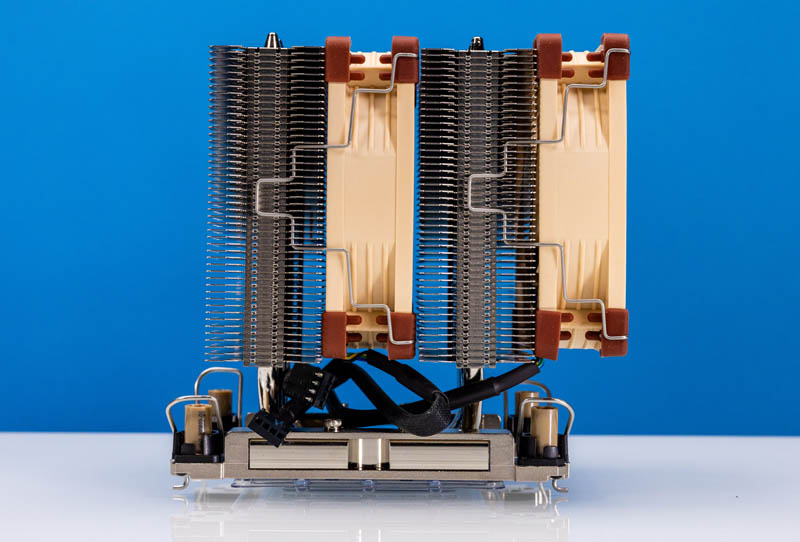

For this build, we are using the Noctua D9 4U cooler.

This is a solid cooler, and it worked great on the 12-core Xeon. It also kept air moving front-to-back in our chassis.

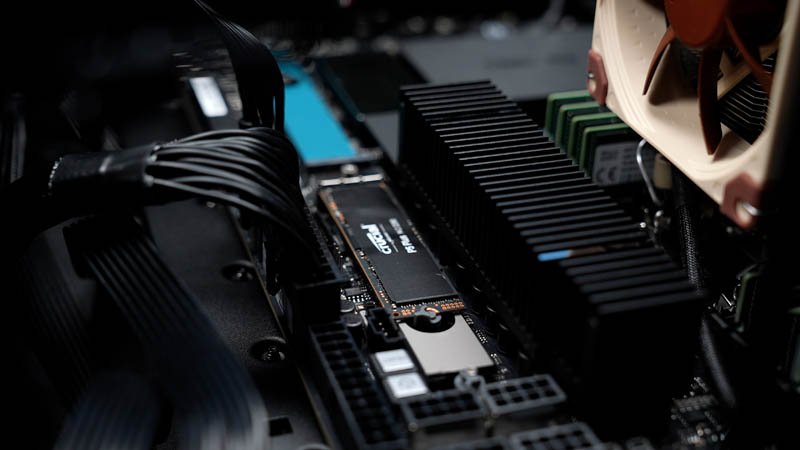

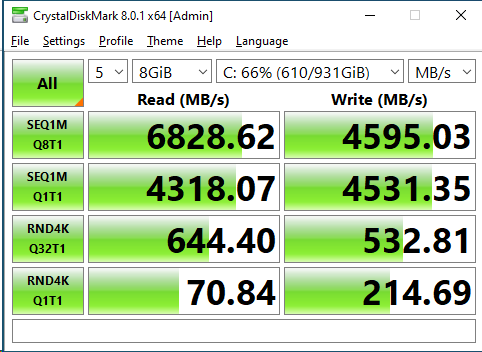

We used a reasonable Crucial P5 Plus in the onboard M.2 slot as a boot drive, which kept the PCIe Gen5 slots open.

On the PCIe connectivity side, there are seven PCIe Gen5 slots. That is simply a ton of connectivity, even before counting the PCH SATA and SSD connectivity options.

While the above would be the configuration for a high-connectivity server, one can add a GPU (likely not this one) to make this a workstation as well, especially with dual 10GBASE-T onboard.
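To give a rough sense of what seven PCIe Gen5 slots mean in bandwidth terms, here is a back-of-the-envelope sketch. PCIe Gen5 signals at 32 GT/s per lane with 128b/130b encoding; the figures below ignore packet and protocol overhead, so real-world throughput will be lower. The assumption that all seven slots are wired x16 (which would account for all 112 CPU lanes) is ours for illustration, not a confirmed board lane map, and the helper names are hypothetical.

```python
# Back-of-the-envelope PCIe Gen5 bandwidth, per direction.
# Gen5 runs at 32 GT/s per lane with 128b/130b line encoding.
# Packet/protocol overhead is ignored, so these are upper bounds.
GEN5_GT_PER_S = 32.0
ENCODING_EFFICIENCY = 128 / 130


def lane_gbytes_per_s(gt_per_s: float = GEN5_GT_PER_S) -> float:
    """GB/s per lane per direction after encoding (8 bits per byte)."""
    return gt_per_s * ENCODING_EFFICIENCY / 8


def link_gbytes_per_s(lanes: int) -> float:
    """GB/s per direction for a link of the given width."""
    return lanes * lane_gbytes_per_s()


# Hypothetical layout for illustration: all seven slots wired as x16,
# which would consume all 112 CPU lanes of the Xeon w5-3425.
SLOTS, LANES_PER_SLOT = 7, 16
total_lanes = SLOTS * LANES_PER_SLOT

print(f"x16 slot: {link_gbytes_per_s(16):.1f} GB/s per direction")
print(f"{total_lanes} lanes: {link_gbytes_per_s(total_lanes):.0f} GB/s per direction")
```

This works out to roughly 63GB/s per x16 slot and about 441GB/s per direction across 112 lanes, which is why this class of platform is attractive for NVMe, NIC, and accelerator-heavy builds even with a modest core count.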

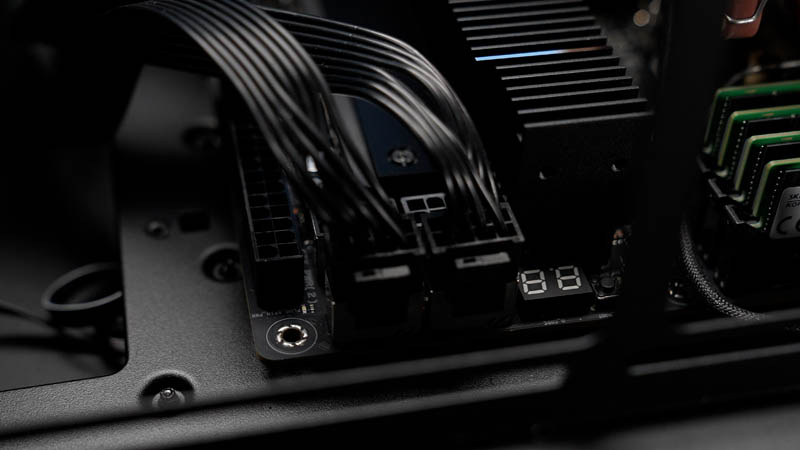

One fun feature is that this motherboard can run on one high-end PSU, but it can also use two PSUs for higher-power configurations.

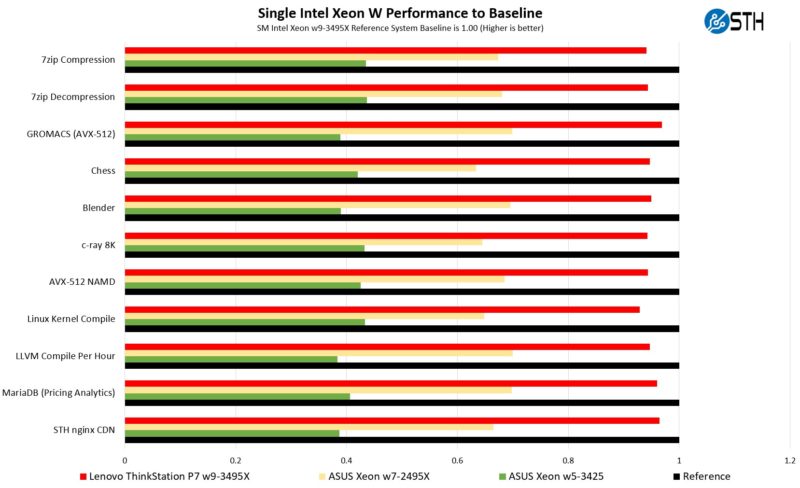

The downside of this 12-core setup is that it is not as fast as our baseline Supermicro platform (review soon) or the Lenovo ThinkStation P7 with the 56-core Xeons. We also tested the Xeon w7-2495X in this system just to provide another option in the middle.

Here is a quick shot of the onboard PCIe Gen4 SSD.

Here is a look at the system after we added the 32TB Micron 9400 Pro (see our 32TB Micron 9400 Pro is a Beast PCIe Gen4 NVMe SSD piece) as we were transforming the build into a DIY workstation.

With that in mind, let us discuss the DIY workstation.

Can you undervolt these CPUs? The power consumption is limiting them too much.

@Makis

There are options to apply negative voltage offsets in the BIOS of this board, but I suspect that these settings are being ignored in actual operation because power consumption remains relatively high after applying such offsets.

Despite the high power consumption, these CPUs aren’t typically thermally constrained, so their performance will not benefit from undervolting.

@Patrick

Did you have trouble cooling the memory during more stressful workloads? I’ve got the same platform and ended up having to get water blocks for all the DIMMs to keep them from overheating.

Also, I’ve noticed a huge discrepancy (~30%) in performance between running the same FEA workload on Windows vs. Linux; I’m thinking it’s scheduler-related.

Was there a reason for having the Noctua cooler blowing down onto the GPU instead of up to the top vents? Curious if having it the other way round would’ve been even better.

The QAT situation on these is … there just isn’t one? I am having trouble interpreting the extremely large Xeon feature matrix for this generation.

Impressive choice! Building 3x Intel Xeon W-3400 workstations and servers with the ASUS Pro WS W790E SAGE SE is a fantastic way to achieve top-notch performance and reliability. The ASUS Pro WS motherboard’s robust features and support for multiple Xeon processors make it an ideal foundation for high-end computing solutions. Well done on your hardware selection!

@Patrick

Any idea if there will be boards from Supermicro, ASRock Rack, etc. for these CPUs?

Would be interesting to see what a slightly more server-like board would be for these.

@wes What is wrong with the Supermicro X13SWA/X13SRA?

You write, “We will quickly note that we had an EPYC Genoa workstation build that our team thought was too cumbersome to really recommend as it was a bear to get working.” Even if not recommended, it would be interesting to hear about the troubles of the parallel Genoa project.

It seems a shame that it has seven PCIe slots, but most of them are impossible to use once you put a GPU or three in. So then you use riser cables, but GPUs generally fail to fully utilise an x16 slot. Actually maxing this system out for use with HuggingFace AI models would be intriguing, but perhaps the hardware to do so doesn’t really exist…?

I am looking at the CPU-Z specs presented in the video at 00:49 and am trying to figure out two things. First, for the Intel Xeon w5-3425 (left), the alphanumeric model number is not included in the “Name” field near the top of the “Processor” section, as it is for the Intel Xeon w9-3495X (right). Second, the “Clocks (Core #0)” section does not seem consistent between the two. The w5-3425, rated (advertised) at 3.2GHz with a turbo speed of 4.6GHz, is shown with a “Core Speed” of just 796.00MHz, a “Multiplier” of only x8.0, and a “Bus Speed” of 99.75MHz. The w9-3495X, rated at 1.9GHz with a turbo speed of 4.8GHz, displays a more understandable “Core Speed” of 4800.00MHz, a “Multiplier” of x48, and a “Bus Speed” of 100.00MHz. Presuming the BIOS settings are basically identical, shouldn’t the CPU-Z report for the w5-3425 show a “Core Speed” of 4600.00MHz, consistent with the advertised turbo speed, and a “Multiplier” of x46? Please also explain what the values in parentheses mean: the w5-3425 range is (5.0-32.0), while the w9-3495X range is (5.0-7.0). I would also like to see the industry address the legacy (mid-1990s) “Bus Speed” of ~100MHz-133MHz.

Do these servers support CXL?

But why was the Supermicro platform faster? You nonchalantly dropped in that comment as if it was no big deal. Well, it’s a big deal! lol. Is there a simple explanation?

Hi everyone,

Great that I found this article. However, I ran into memory issues during a similar build, but with two 4090 GPUs. I used RDIMM ECC memory (64GB DDR5 4800MT/s ECC Reg 2Rx4 Module KTL-TS548D4-64G Server Memory) and was surprised by D2, B7, and B9 codes, all memory-related errors.

Can you give any hints, and what memory did you use in your setup?