Broadcom has a new set of 400GbE adapters in its Thor line. Like everything these days, they are being marketed as AI products. At the same time, AI servers are attaching 400GbE networking at a very high rate, and AI clusters are one of the applications driving fast generational leaps in networking.

Broadcom 400GbE NICs Launched for the AI Era

400GbE is important because it is roughly the speed a PCIe Gen5 x16 link can push in each direction. That means the Ethernet standard is matched to PCIe Gen5 GPU and AI accelerator interfaces, which are often x16, as well as to the x16 root ports on modern CPUs. At these speeds, offloads and RDMA support are very important, since CPUs today cannot keep up with multiple 400GbE NICs without them.
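The bandwidth match and the offload argument can be checked with some back-of-the-envelope arithmetic. This is a rough sketch, not vendor data. It assumes 128b/130b line encoding (used by PCIe Gen3 and later), ignores PCIe TLP/DLLP packet overhead (so real usable throughput is lower), and uses standard Ethernet framing with 20 bytes of preamble, SFD, and inter-frame gap per frame. The function names are illustrative.

```python
# Rough numbers: why 400GbE pairs with PCIe Gen5 x16, and why offloads matter.
# Assumptions: 128b/130b encoding, PCIe packet (TLP/DLLP) overhead ignored,
# 20 bytes of per-frame Ethernet wire overhead.

GT_PER_LANE = {3: 8.0, 4: 16.0, 5: 32.0}  # PCIe transfer rate per lane, GT/s
WIRE_OVERHEAD_BYTES = 20  # 7B preamble + 1B SFD + 12B inter-frame gap


def pcie_gbps(gen: int, lanes: int) -> float:
    """Per-direction PCIe bandwidth in Gbit/s after line encoding only."""
    return GT_PER_LANE[gen] * lanes * 128 / 130


def packet_budget_ns(link_gbps: float, frame_bytes: int) -> float:
    """Nanoseconds available per frame when the link runs at line rate.

    1 Gbit/s is 1 bit per nanosecond, so time = bits on the wire / Gbit/s.
    """
    bits_on_wire = (frame_bytes + WIRE_OVERHEAD_BYTES) * 8
    return bits_on_wire / link_gbps


for gen in (4, 5):
    bw = pcie_gbps(gen, 16)
    print(f"PCIe Gen{gen} x16: {bw:.1f} Gb/s per direction "
          f"(enough for one 400GbE port: {bw >= 400})")

# 64 B is the minimum Ethernet frame; 1518 B is a full 1500 B payload frame.
for frame in (64, 1518):
    print(f"{frame}-byte frames at 400GbE: "
          f"{packet_budget_ns(400, frame):.2f} ns per packet")
```

Gen5 x16 comes out at about 504 Gb/s per direction before packet overhead, while Gen4 x16 (about 252 Gb/s) cannot feed even one 400GbE port. On the packet side, a CPU core would have roughly 1.7 ns per minimum-size frame and about 31 ns per full-size frame at line rate, which is why offloads and RDMA matter so much at this speed.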

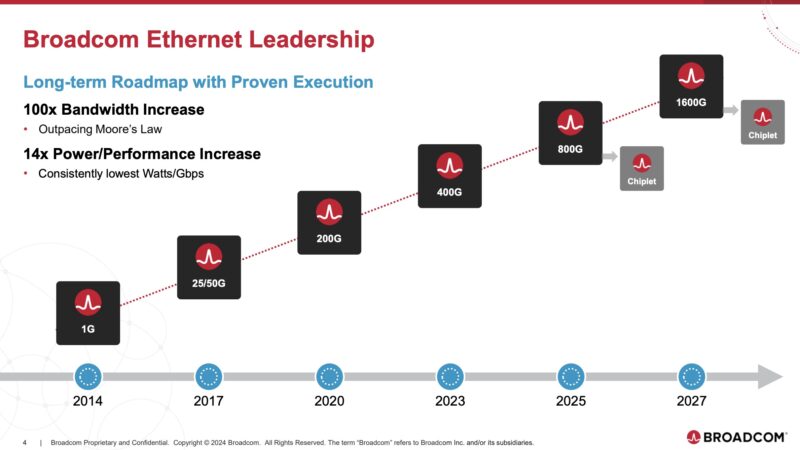

Broadcom is bringing a new generation of Thor adapters to market. For context, the 800GbE generation that we expect in the not-too-distant future is the one where Broadcom will also offer the IP as a chiplet for integration into advanced packages. We also expect that generation to be where Ultra Ethernet Consortium (UEC) compatible NICs really start to appear. There are likely to be timing challenges, though, since the UEC standards may not be finalized by the time the 800GbE NICs need to lock their designs.

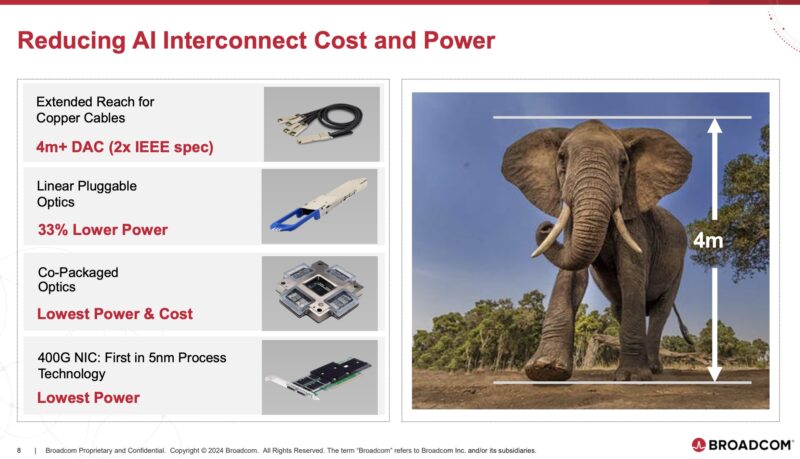

We reviewed the NVIDIA ConnectX-7 400GbE and NDR InfiniBand Adapter some time ago and the NVIDIA ConnectX-7 OCP NIC 3.0 2-port 200GbE and NDR200 IB Adapter earlier this month. Since Intel still does not have its 400GbE NIC IP on the market, NVIDIA is perhaps Broadcom’s biggest competitor. For its part, Broadcom says that two significant differentiators of its 5nm 400GbE NIC are lower power consumption and SerDes with extended reach over copper DACs.

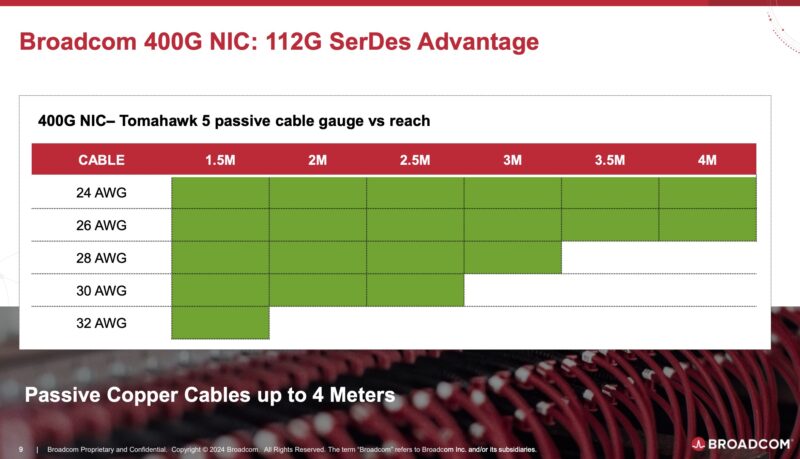

Broadcom shared a table of its 112G SerDes reach when connecting one of its 400GbE NICs to a Tomahawk 5 switch.

The key takeaways are that the NICs can use slimmer DAC cables at shorter reaches, or hit longer reaches, up to 4m, with passive DACs. The idea is that avoiding a switch to active cables or optics lowers both cost and power consumption. Modern AI servers can easily have 8-10 of these 400GbE interfaces, so those power savings can add up. Slimmer DACs are also usually easier to route and restrict airflow less than thicker ones. If you have handled both a 400GbE DAC and a 40GbE DAC, you have experienced this first-hand.
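The cabling trade-off can be sketched as a simple planning rule. The only number taken from Broadcom here is the 4m passive DAC reach for a Thor NIC connected to a Tomahawk 5 switch. The helper names, the example link lengths, and the assumption that anything longer needs active cables or optics are hypothetical, for illustration only. Slimmer-gauge DACs trade away some reach, so the limit is left as a parameter rather than guessed.

```python
# Hypothetical cable-planning helper. PASSIVE_DAC_MAX_M is Broadcom's stated
# passive DAC reach (Thor 400GbE NIC to Tomahawk 5); everything else is an
# illustrative assumption, not a vendor tool or API.
from dataclasses import dataclass

PASSIVE_DAC_MAX_M = 4.0  # meters, from Broadcom's 112G SerDes reach table


@dataclass
class Link:
    name: str
    length_m: float


def pick_media(link: Link, dac_max_m: float = PASSIVE_DAC_MAX_M) -> str:
    """Return the cheaper media class that covers the link length."""
    if link.length_m <= dac_max_m:
        return "passive DAC"
    return "active cable or optics"


def count_by_media(links: list[Link], dac_max_m: float = PASSIVE_DAC_MAX_M) -> dict[str, int]:
    """Tally how many links need each media class."""
    counts: dict[str, int] = {}
    for link in links:
        media = pick_media(link, dac_max_m)
        counts[media] = counts.get(media, 0) + 1
    return counts


# Example: a server with 8 NIC ports at made-up distances of 2.0m to 5.5m.
links = [Link(f"nic{i}", 2.0 + 0.5 * i) for i in range(8)]
print(count_by_media(links))
```

Passing a smaller `dac_max_m` models a thinner DAC with shorter reach. Every link that stays under the passive limit is one that avoids the added cost and power of active cabling or optics.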

Final Words

We have two adapters in for review, so expect those reviews in the coming weeks, although our next three weeks are absolutely packed. Still, this is not just a paper launch, as we already have cards running in our lab. This is important since NVIDIA’s ConnectX-7 cards have often been on allocation over the past year or two. Broadcom is also more open than NVIDIA to a wide range of DAC and optics makers. We have heard of AI clusters being held up because of needing NVIDIA cables. Having another source of 400GbE NICs with a broad DAC and optical module ecosystem is important to continue pushing 400GbE into more servers.

So fast

Are you planning to review the Alveo V80 Compute Accelerator?

“We have heard of AI clusters being held up because of needing NVIDIA cables.”

This seems pretty dramatic, since those are presumably NVIDIA customers being left hanging. Is it known whether NVIDIA is just that willing to hold the line on parts whose margins they find tasty; or are ConnectX NICs fussy in some way that means that cable coding isn’t primarily artificial and even if they were willing to do some emergency blessing of 3rd party cables there simply aren’t enough that actually do what the NICs need them to?

do these nics have any fanout capability?

The speed of development is too dizzying!