As we have done a few times at SC23, we wanted to show Giga Computing/Gigabyte’s big NVIDIA AI servers on display at the show. We picked our top five, took some photos, and put together a quick piece on them.

For those who want the ultra-fast short video version, we have that on our new behind-the-scenes shorts channel, STH Labs.

Here is our top 5.

Gigabyte G593-SD0 NVIDIA HGX H100 8-GPU Server

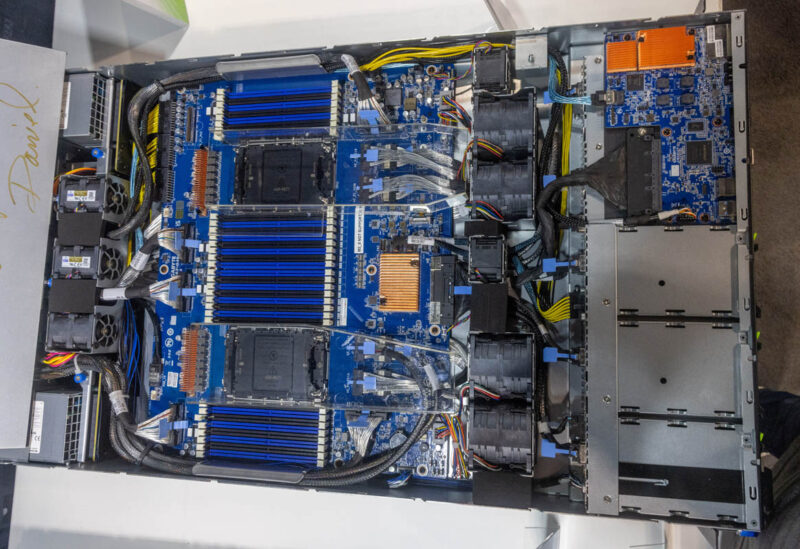

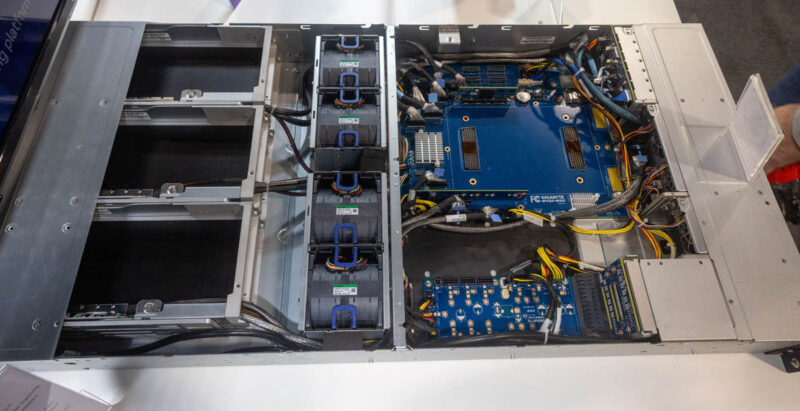

The Gigabyte G593-SD0 is the big one, the big show, if you will. This is Gigabyte’s 8-GPU NVIDIA HGX H100 platform.

One unique feature is the front-mounted BMC, along with options for storage and a network card, in addition to the card slots on the rear.

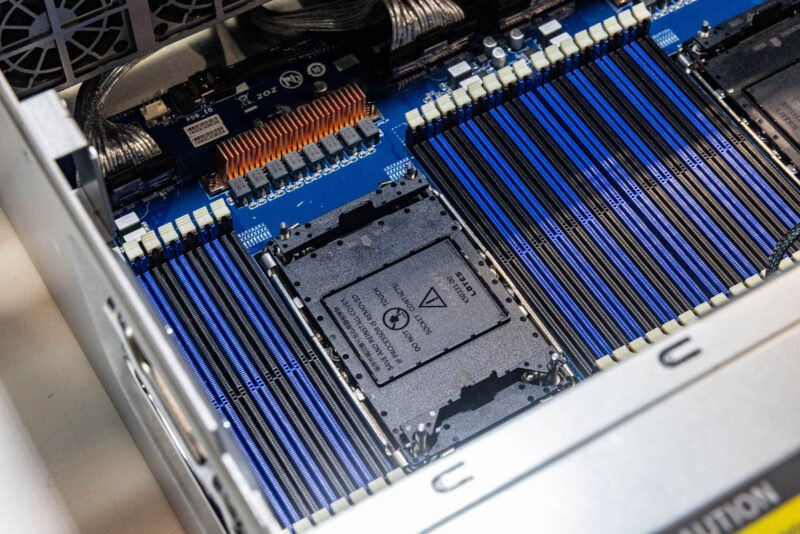

The system has dual processors from the 4th or new 5th Gen Intel Xeon families, each with a full set of 16 DIMMs (32 total).

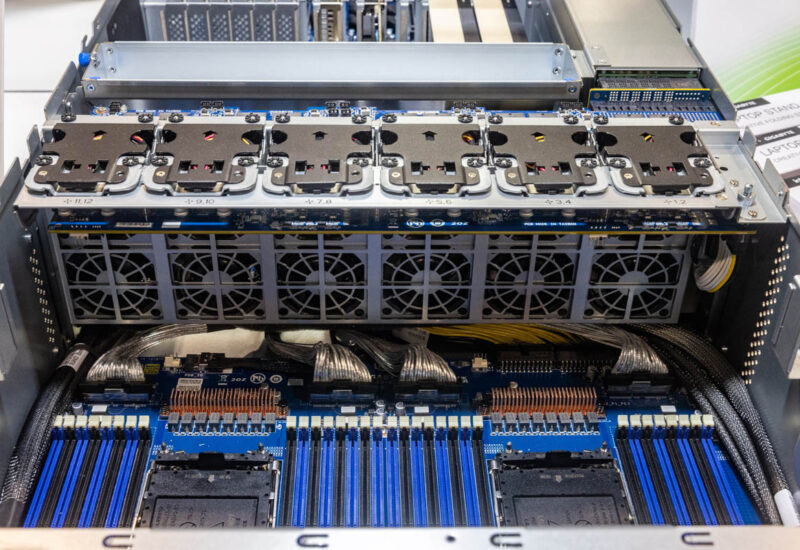

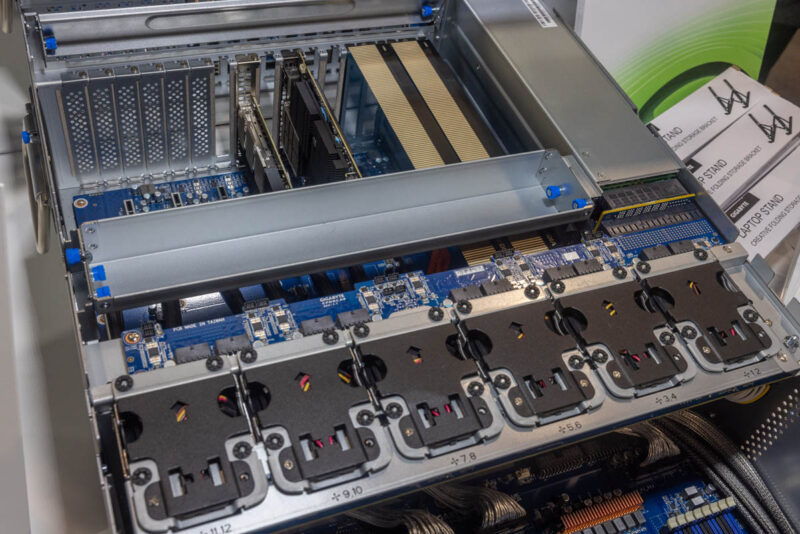

The eight NVIDIA H100 GPUs are set in a tray that slides out.

Here is a cool look inside the system that we managed to light up. Gigabyte has expansion slots in both the top middle and the bottom sides of the chassis.

This is a very important HGX H100 platform in the market because of who its top two or three customers are.

Gigabyte G493-SB0 8x PCIe GPU Server

The front of the Gigabyte G493-SB0 is really interesting with NVMe SSD and hard drive storage, two expansion slots, and then the front I/O for management and control plane networking.

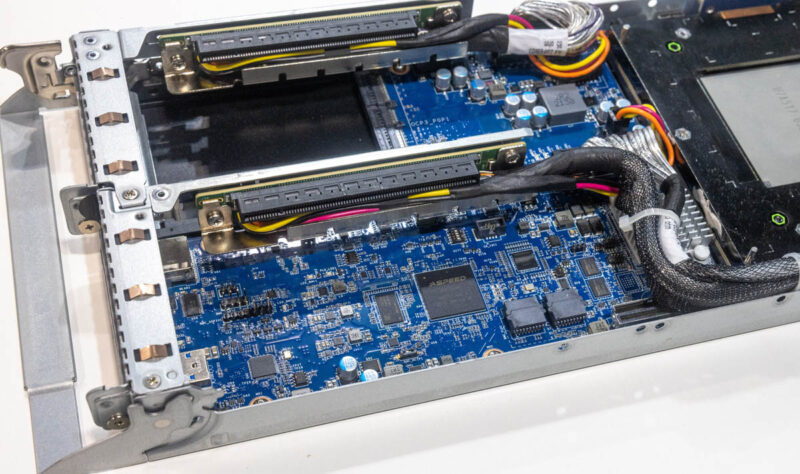

The Gigabyte G493-SB0 is an 8x PCIe Gen5 double-width GPU server, again based on Intel Xeon processors.

The mid-chassis fans are really fun as they remind us a bit of the Gigabyte G481-S80 8x NVIDIA Tesla GPU server we reviewed in 2018. It is cool to see these cooling design themes span generations like that.

The rear has the 4x 3kW power supplies and eight double-width GPU slots for cards like the NVIDIA L40S.

This has been a staple platform for AI systems for well over half a decade.

Gigabyte H223-V10 NVIDIA Grace Hopper 2U 4-Node Server

The Gigabyte H223-V10 is an NVIDIA Grace Hopper system with 72 Arm cores and an NVIDIA Hopper GPU per node.

Each node is on a sled with its rear I/O expansion cards. This allows Gigabyte to put four nodes in a 2U chassis.

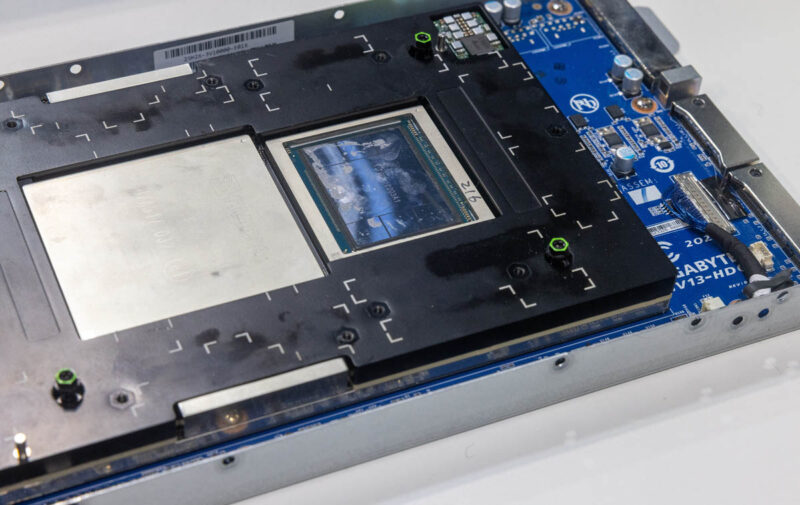

Here is a quick shot of the NVIDIA Grace Hopper package.

Keep in mind that this is a 1kW package, so cooling four of these in 2U means that the power consumption is well over 2kW/U.
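As a rough back-of-envelope check on that figure, here is a minimal sketch. It assumes each of the four nodes draws the full 1kW for the Grace Hopper package alone and ignores fans, NICs, storage, and power supply losses, so the real number would be higher. The names are purely illustrative.

```python
# Back-of-envelope power density for a 2U 4-node Grace Hopper chassis.
# Assumption: each package draws its full 1 kW; everything else in the
# node (fans, NICs, storage, PSU losses) is ignored here.
PACKAGE_KW = 1.0
NODES = 4
RACK_UNITS = 2


def package_power_density_kw_per_u(package_kw: float, nodes: int, rack_units: int) -> float:
    """Return the kW per rack unit contributed by the packages alone."""
    return package_kw * nodes / rack_units


density = package_power_density_kw_per_u(PACKAGE_KW, NODES, RACK_UNITS)
print(f"{density:.1f} kW/U from the packages alone")
```

The packages alone land at 2kW/U, so the rest of each node is what pushes the chassis past that mark.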

Gigabyte H263-V60 NVIDIA Grace Superchip 2U 4-Node Server

The Gigabyte H263-V60 is the company’s 2U 4-node solution for the NVIDIA Grace Superchip.

This puts 144 Arm Neoverse V2 cores into a single node, or 576 cores in 2U.
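The per-chassis core count is simple multiplication. A quick sketch, assuming one Grace Superchip per node and four nodes per 2U chassis as described (the variable names are just for illustration):

```python
# Core count for a 2U 4-node Grace Superchip chassis.
# Assumption: one 144-core Grace Superchip per node, four nodes per chassis.
CORES_PER_NODE = 144
NODES_PER_CHASSIS = 4

total_cores = CORES_PER_NODE * NODES_PER_CHASSIS
print(f"{total_cores} cores in 2U")
```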

There is also room for PCIe I/O and more expansion slots than the GH200 nodes.

These are much lower power than the GH100/GH200 nodes, but they also do not have the onboard GPU.

Gigabyte XH23-VG0 NVIDIA Grace Hopper 2U Server

The Gigabyte XH23-VG0 is the company’s MGX-style 2U system for Grace Hopper.

This does not have the same density as the 2U 4-node H223-V10, but it offers more I/O expansion possibilities.

One cool feature is all of the expansion slots in the rear of the system.

Final Words

Overall, it was great to see all of these systems at SC23. We know the NVIDIA AI systems are a hot topic these days, so we wanted to show off Gigabyte’s collection. Stay tuned for non-NVIDIA server solutions in January 2024, as STH will have cool motherboard and server reviews coming.

Why would Gigabyte go with Xeons when Epycs offer more (PCIe lanes, cores, memory channels)? Shouldn’t Epycs be more affordable as well?

Epycs blows.. Everyone that has been around computers for the last 20yrs knows amd is not the way to go..

Intel is always the winner..

I wouldn’t touch that with a 10ft pole. The quality of their bigger GPU boxes is horrible. I bought many over the past year and have had a near 90% failure rate with them. Think engineering failures causing power rails to short out on GPUs. When I’m spending this kind of cash, this level of quality is absurd.

Servers in general don’t fare well when dunked in oil.