This week, Backblaze released its quarterly hard drive reliability stats, and a number of folks reached out because of a recent feature on STH. Specifically, in Farewell Seagate Exos X12 12TB Enterprise Hard Drives, we covered Exos X12 drives that failed next to one another. That drive model happens to stand out in Backblaze’s data, and not for a good reason.

Backblaze 2021 Seagate Exos X12 ST12000NM0007

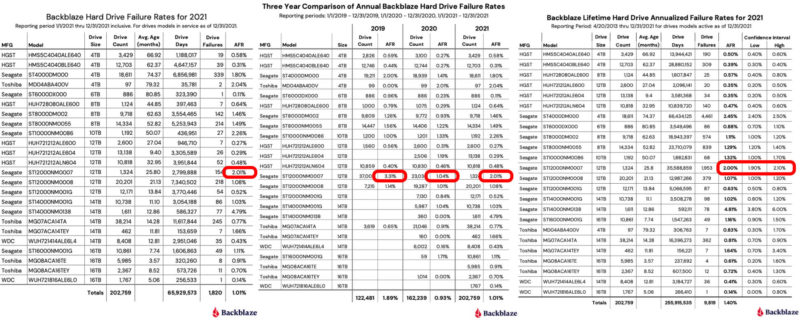

We took the charts from Backblaze’s 2021 stats. The source for all of this is Backblaze’s published drive stats report. When you look at the drive stats for the Seagate Exos X12 ST12000NM0007, a pattern emerges.

The Seagate ST12000NM0007 is not always the least reliable drive in every slice of the data, but it is near the top of the failure rankings in most of them. We did not even make the connection when we first saw Backblaze’s data, but a reader pointed out that it was the same model we had just covered in our recent anecdotal piece.

Of course, there is a lot more going on here, and these drives were in a much smaller array than what Backblaze uses. We also have more of these drives running perfectly fine, so we are in no way claiming that these drives suffer some sort of mass failure. At the same time, it seems notable that these stories keep coming up. Even Backblaze saw the data and worked with Seagate to phase out this model in 2020.

Final Words

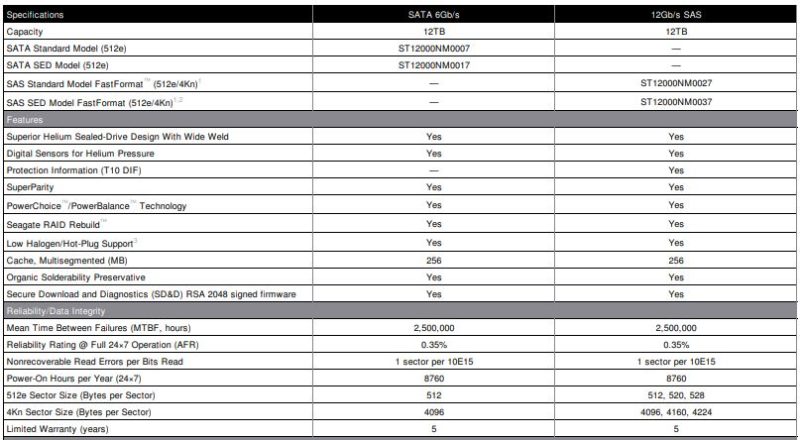

One thing was apparent from the comments on our piece a few weeks ago. STH readers cited mass failures in which half of an array’s drives would fail and be replaced with a different disk model. Backblaze’s data does not suggest an AFR of 30-50% or anywhere near that. At the same time, it is notable that several readers anecdotally remembered these drives as having higher failure rates, that we saw a strange failure, and that Backblaze lists them as high-failure-rate devices. They do not seem to be in as bad a position as the 14TB Dell-Seagate ST14000NM0138 drives at Backblaze, but these 12TB Exos X12s have a lot of stories indicating an AFR that greatly exceeds the 0.35% on the spec sheet. One has to wonder about that spec given these anecdotal experiences.
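To put the 0.35% spec-sheet figure and the 30-50% anecdotes in concrete terms, here is a minimal sketch that converts an AFR into expected failures per year and the chance of at least one failure in an array. It assumes every drive fails independently at the same constant annual rate, which is exactly what same-batch drives may not do, so treat it as a rough baseline. The 8-drive array size and the function names are hypothetical, chosen only for illustration.

```python
def expected_failures_per_year(n_drives: int, afr: float) -> float:
    """Expected drive failures per year; afr is a fraction (0.0035 == 0.35%)."""
    return n_drives * afr


def prob_at_least_one_failure(n_drives: int, afr: float) -> float:
    """Chance that at least one drive in the array fails within a year.

    Assumes independent failures at a constant annual rate. Drives from the
    same batch can fail together, so real-world clustering may be worse.
    """
    return 1.0 - (1.0 - afr) ** n_drives


if __name__ == "__main__":
    n = 8  # hypothetical array size, for illustration only
    for label, afr in [("spec sheet 0.35%", 0.0035), ("anecdotal 30%", 0.30)]:
        print(
            f"{label}: {expected_failures_per_year(n, afr):.3f} expected failures/yr, "
            f"P(>=1 failure) = {prob_at_least_one_failure(n, afr):.1%}"
        )
```

Under these assumptions, the spec-sheet rate works out to about 0.028 expected failures per year for 8 drives (roughly one failure every 36 years), while a 30% AFR means about 2.4 failures per year and a better than 94% chance of at least one.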

Since no organization, not even Seagate, has a complete picture of failure percentages (many drives, like ours, are scrapped without the failure data ever going back to the OEM), it is very hard to get an accurate view of reliability.

At this point, given the data that is out there, these are probably not drives we would pay a premium for or look to find more of. We will also likely replace these drives with a different model, as we did after the failure a few weeks ago, simply to add diversity. For readers who have this drive model installed, this is worth noting and something to keep an eye on going forward.

I assume Backblaze is using its own JBOD chassis? If so, how about comparing against an equally equipped JBOD from another manufacturer? Backblaze JBODs are … frugal, so expect frugal results after long-term use. Somehow this is ignored in their reports, not to mention the physical setup of these JBODs and their environment.

The “14TB Dell-Seagate ST14000NM0138” drives they talked about are Seagate drives in a Dell server, not a Backblaze server, and those had the worst reliability of them all.

@IdeaStormer What sorts of problems are you expecting with the Backblaze chassis? They publish SMART data, so you can check drive temperature and so on. Vibration is the only thing I can think of that could be problematic, but it’s hard to blame the chassis when other drive models have a much lower failure rate in the same chassis. If these particular drives did fail less in another manufacturer’s chassis, it would just show that they are much more sensitive to their environment than other drive models, which is still not great.

Interestingly, for those drives in the Dell chassis that had the worst reliability, the Backblaze report says a firmware upgrade significantly reduced the failure rate. Makes you wonder what they changed in the firmware…

The Dell ones are still way worse than average with the new firmware. I’d also want to know whether it’s custom Dell drive firmware. If so, could that be the reason they fail? If it’s just Seagate firmware, is Dell’s chassis that bad? It’s stories like these that make me cynical and wonder whether they’re designed for slightly more failures to increase service revenue.

Installing drives of the same model and age in a RAID set introduces a single point of failure (SPOF). Even setting aside the bathtub curve and the reliability of a given model, firmware issues crop up from time to time that cause drives to shut down simultaneously. So just don’t do it. “Ease of use, yadda yadda…” OK, but accept the consequences.

@William Barath Totally agree. Despite vendors recommending identical drives, you should use drives with different firmware versions and manufacturing dates. Large-capacity NAS builders, though, don’t have the luxury of waiting for shipments from various sources, and the techs have only 1 or 2 minutes per drive for the install, with no time to mix and match.

I purchased four 6TB Seagate IronWolf drives for my new Asustor NAS. Within a month, the software was giving me abnormal vibration alerts. I bought four Toshiba 6TB drives and sent the Seagate drives back. I never liked Seagate drives in the past, and my latest dealings with the IronWolf drives make me never want to buy Seagate again. After a year and a half, one Toshiba drive started reporting bad sectors. I bought another 6TB Toshiba from Amazon, pulled the bad drive, put the replacement in, and the RAID rebuilt itself automatically. I sent the drive back to Toshiba and got a full refund. The Toshiba drives are quieter and faster than the IronWolf drives.

@William No large-scale provider will give you a mix of hardware like that unless it happened to be mixed when supplied. If you roll your own, more power to you. @IdeaStormer Backplanes should be irrelevant. In this context, it’s a well-known issue that this series of drives has a defect at the source.

And it’s not just the specific unit shown. In my case, ST12…037 was also in the mix.

@Malvineous From the info I received, the firmware did nothing but change how failures were reported. I think it masked some of that information. This was from ET03 to ET04 specifically. Interestingly, in my case, the drives I mentioned were swapped from 2019 builds with ET02 to 2020 builds with ET03 firmware. These were then upgraded to ET04. In the original system they were in, reported errors did drop; however, when moved to a completely different system, they were dropping like flies. The original setup was write-heavy. In the second system, the issues crept up during the initial writes. Some reads also became an issue.

But sometimes it happens. It happened way back around 2005 with bad RAM from Samsung on some Cisco 6500 line cards. In my experience, however, Seagate is typically terrible. Anecdotes aside, fool me once and all that.

We bought an array with 42 of these Exos X12 drives. An air conditioner failed and the array ran hot for a while.

In the first year, we had 7 replaced. By the second year, half of them had been returned. In the third year, we separated them out into low-level RAID sets for temporary tasks, but we have still replaced two-thirds of the drives.

I thought they had just gotten heat stroke. It is a relief to know it wasn’t something we caused.

Backblaze stats show that Seagate drives have always, across the years and across their lines, had the worst failure rates. Fine, not all, not always, but so, so, so many. Does no one remember their previous catastrophic failures with one line? The stats don’t lie.

Wouldn’t touch them or recommend them ever.

Datapoint from us:

We run 384 of the ST12000NM0027 model. We had 72 failures from 336 active drives in nearly 3 years. That works out to an AFR above 7%.
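The commenter’s arithmetic can be checked with the annualized failure rate formula Backblaze uses in its reports: failures divided by drive-years (drive days / 365), times 100. This sketch assumes all 336 drives were active for a full three years, which is an upper bound on drive days; since the actual period was “nearly” three years, the true AFR would be somewhat higher, consistent with “above 7%”. The function name is illustrative, not from any library.

```python
def annualized_failure_rate(failures: int, drive_days: float) -> float:
    """AFR in percent: failures per drive-year, times 100 (Backblaze-style)."""
    if drive_days <= 0:
        raise ValueError("drive_days must be positive")
    drive_years = drive_days / 365
    return failures / drive_years * 100


# Assumption: 336 drives active for a full 3 years (upper bound on drive days).
drive_days = 336 * 3 * 365
afr = annualized_failure_rate(72, drive_days)
print(f"AFR >= {afr:.2f}%")  # prints "AFR >= 7.14%"
```

Because fewer drive days can only raise the result, this full-three-year figure of roughly 7.14% is a floor rather than an estimate.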

The really ugly thing is that they don’t fail hard; they just get extremely slow. So very often they are not dropped from the RAID but just slow it down enormously, which is far, far worse.

The HGST consumer NAS drives we bought before our vendor convinced us to buy ‘enterprise grade’ are still the best of all the 6, 8, 10, and 12TB disks we have. They are also the cheapest and oldest.

I wonder if this means anything for the 12TB IronWolf drives in my mini server at home.

Large-capacity NAS manufacturers cannot afford to wait for deliveries from several suppliers, and technicians have only a few minutes per drive in which to install it.