The ASUS RS720Q-E8-RS8-P is a basic 2U 4-node system that has a unique place in the STH/ DemoEval lab. We purchased one and within 30 days picked up two more for our STH 2U 4-node power testing. These days, 2U 4-node systems are the go-to compute blocks because they fit four complete systems in a 2U form factor. The four systems share redundant power supplies and cooling, which improves space and power efficiency. Unlike some of the higher-end 2U 4-node dual Intel Xeon E5-2600 V3/ V4 systems, the ASUS RS720Q-E8-RS8-P is heavily optimized for cost.

ASUS RS720Q-E8-RS8-P Hardware Overview

The ASUS RS720Q-E8-RS8-P crams four nodes, redundant power supplies and cooling into a 2U form factor. What is fascinating is the degree to which ASUS has cost-optimized the platform.

On the front of the chassis, there is a total of eight 2.5″ hard drive/ SSD bays. Given the cabling in the chassis, these are optimized for SATA implementations. Most 2U 4-node systems have 24x 2.5″ bays, so removing the additional drive bays lowers the overall cost of the system. We did note that the chassis appears able to accommodate two more sets of eight drive bays, similar to how the Intel-Chenbro 2U chassis can add additional drive bays.

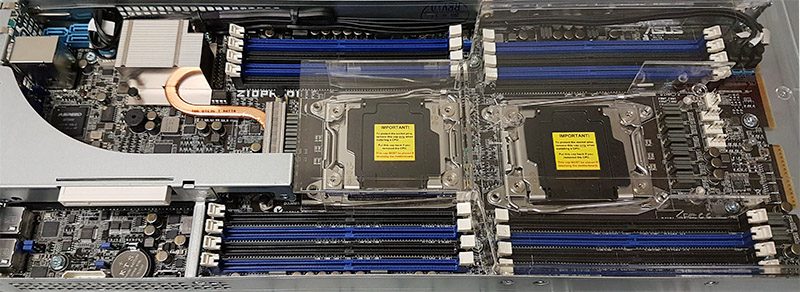

Here are the specs via the ASUS website. These systems use a two DIMMs per channel (2DPC) configuration with a total of sixteen DIMM slots.
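The sixteen-slot figure follows from the platform's memory topology. A quick worked count, assuming the sixteen slots are per dual-socket node and using the four memory channels that each Xeon E5-2600 V3/ V4 CPU provides:

```latex
\text{DIMM slots per node}
  = \underbrace{2}_{\text{sockets}}
  \times \underbrace{4}_{\text{channels per socket}}
  \times \underbrace{2}_{\text{DIMMs per channel (2DPC)}}
  = 16
```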

Expansion-wise, there are two PCIe slots. One is a half-length, low-profile PCIe 3.0 x16 slot, which is the primary expansion vector. The other is a proprietary PCIe 3.0 x8 slot that is marginally too small to fit even the smallest low-profile cards. We wish ASUS had used either an OCP card slot or a second low-profile PCIe slot. ASUS uses OCP mezzanine card slots in many of its other E5-2600 V3/ V4 generation servers, such as the ASUS RS520-E8-RS8 2U server we reviewed. We recommend that every E5-2600 V3/ V4 system be equipped with 10GbE or faster networking, which here means using either the very limited proprietary ASUS options or the only standard PCIe slot.

Storage is provided via six SATA ports, three of which are wired from one end of the node to the other via 7-pin cables. Two of these cables provide data paths to the hot swap bays, and the third services the m.2 port. Each node's 2242 m.2 port can handle either a SATA or a PCIe/ NVMe SSD. Very few PCIe 2242 m.2 drives are available, so SATA will be the most likely choice. We have been using 16GB Kingston m.2 drives as boot SSDs for VMware and other OSes so that the hot swap bays remain free for storage. Each node also has an internal USB Type-A connector as another potential boot option.

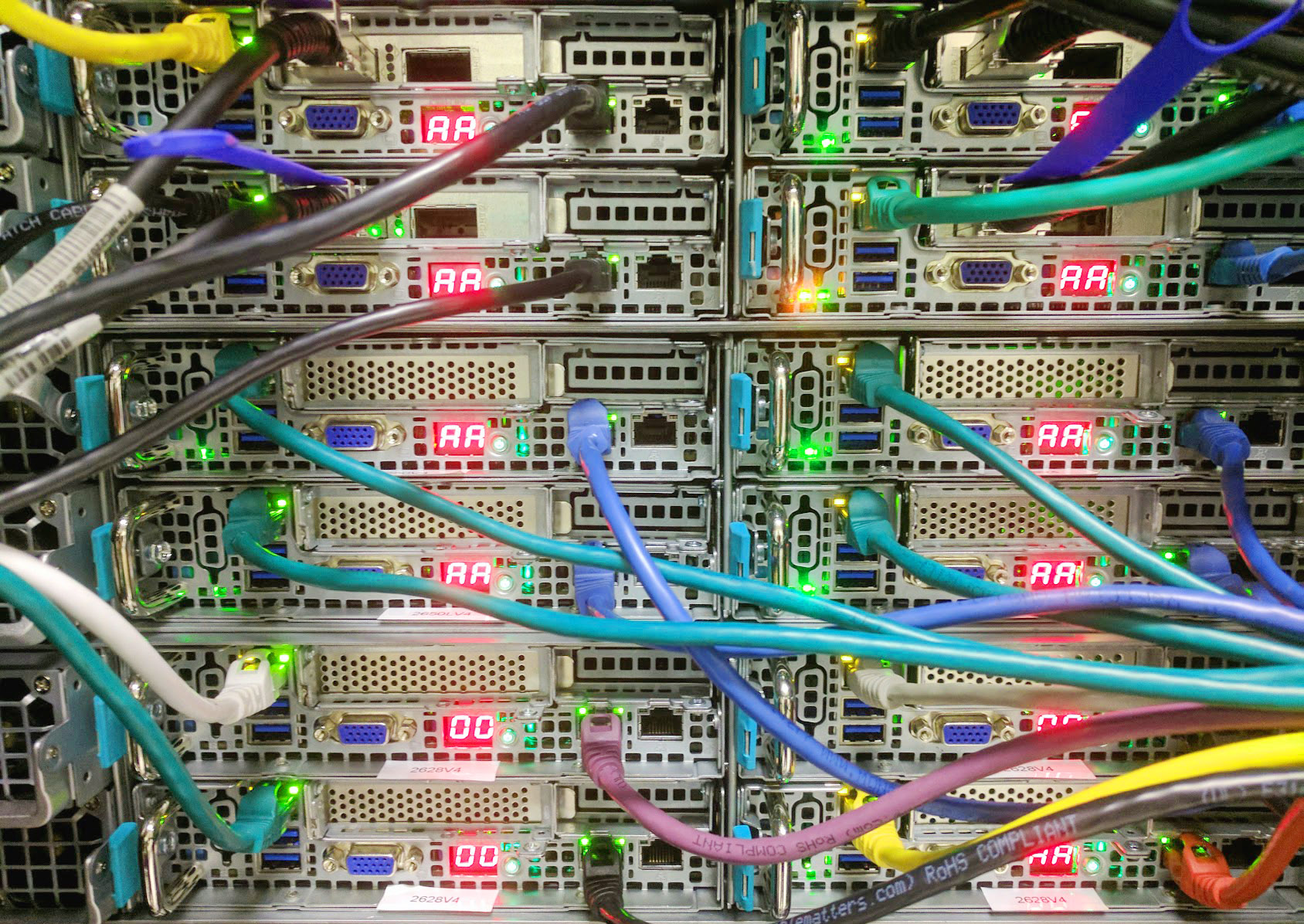

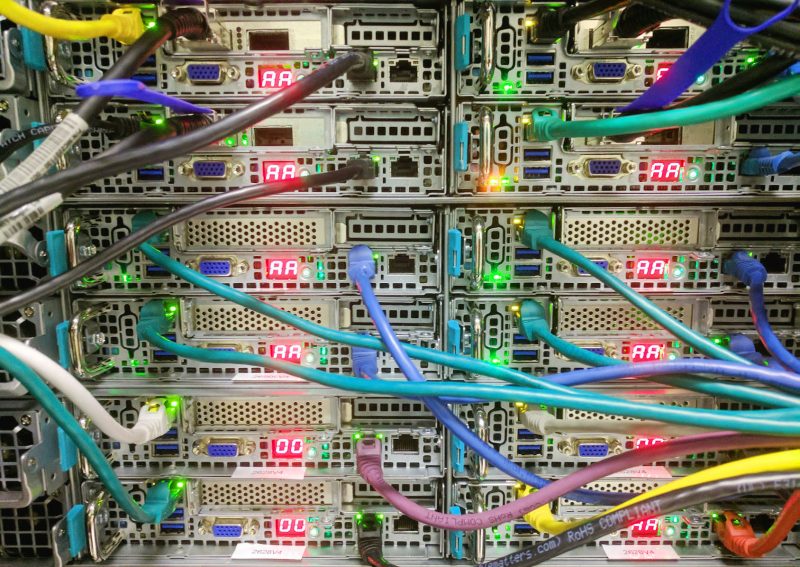

When you move to the rear I/O panel of each node, there is a very spartan configuration. For networking, we find dual 1GbE network ports and a management Ethernet port. There are two USB 3.0 ports, a power button, and a VGA connector. By far, the standout feature of the ASUS RS720Q-E8-RS8-P is the LCD screen that shows POST codes. Here is a view of what these look like in a stack of three 2U systems:

AA means that the system has successfully booted into the OS. You can see that the nodes in the bottom ASUS RS720Q-E8-RS8-P are still booting or awaiting provisioning from our Foreman provisioning tool, so their LCD screens show different codes.

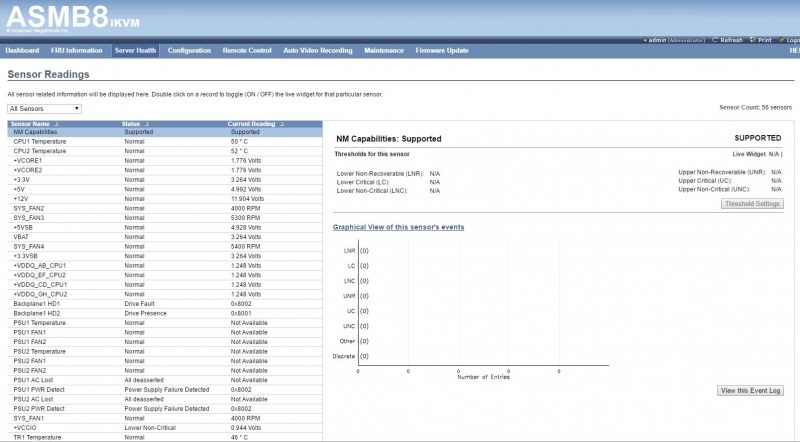

ASUS Management Software Overview

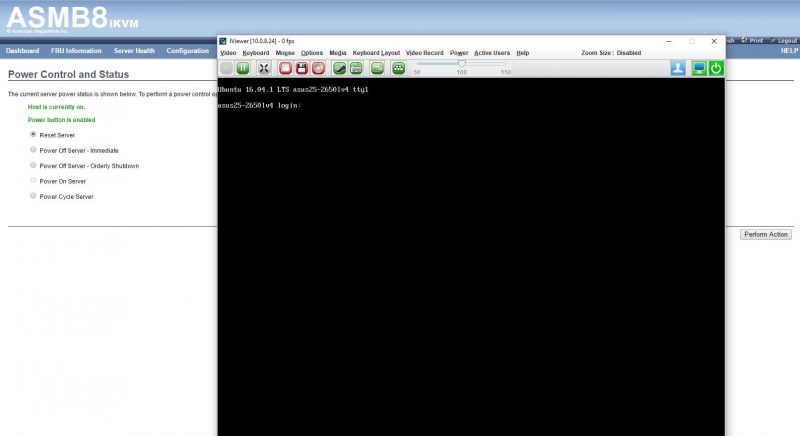

ASUS has an industry-standard IPMI and iKVM implementation. Monitoring features include sensor readings, and the interface can also manage the users and groups who administer the system.

ASUS includes iKVM functionality with the unit by default. HPE, Lenovo, and Dell charge for this feature, while ASUS includes it for free. Using iKVM, you can remotely manage the system, including BIOS updates, OS installations, and remote troubleshooting.

We did not see any chassis level features in the firmware. As a result, each node in a chassis looks like a completely independent server to the management firmware.
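Because each node's BMC is independent, monitoring scripts have to poll four BMCs per chassis. The sketch below is a minimal example, assuming the standard `ipmitool` CLI is installed and each BMC is reachable over IPMI-over-LAN (`-I lanplus`). The BMC hostnames, the user name, and the sample sensor names are hypothetical placeholders, and the parser assumes the usual `name | reading | status` layout of `ipmitool sdr list`.

```python
import os
import subprocess
from typing import Dict, Iterable, List


def ipmitool_sdr_cmd(host: str, user: str) -> List[str]:
    """Build an ipmitool command that reads the sensor list from one node's BMC.

    -E tells ipmitool to read the password from the IPMI_PASSWORD environment
    variable, which keeps it off the process command line.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", "sdr", "list"]


def parse_sdr_list(output: str) -> Dict[str, Dict[str, str]]:
    """Parse 'name | reading | status' lines printed by `ipmitool sdr list`."""
    sensors: Dict[str, Dict[str, str]] = {}
    for line in output.splitlines():
        parts = [p.strip() for p in line.split("|")]
        if len(parts) != 3 or not parts[0]:
            continue  # skip blank or unexpected lines
        name, reading, status = parts
        sensors[name] = {"reading": reading, "status": status}
    return sensors


def poll_nodes(bmc_hosts: Iterable[str], user: str, password: str) -> Dict[str, Dict[str, Dict[str, str]]]:
    """Query each node's BMC separately, since there is no chassis-level view."""
    env = {**os.environ, "IPMI_PASSWORD": password}
    results = {}
    for host in bmc_hosts:
        proc = subprocess.run(
            ipmitool_sdr_cmd(host, user),
            capture_output=True, text=True, env=env, timeout=60, check=True,
        )
        results[host] = parse_sdr_list(proc.stdout)
    return results


if __name__ == "__main__":
    # Sample output in the usual ipmitool layout; sensor names are illustrative.
    sample = "CPU1 Temp        | 45 degrees C      | ok\nFAN1             | 5600 RPM          | ok\n"
    print(parse_sdr_list(sample))
```

A real run would look like `poll_nodes(["node1-bmc.example.com", "node2-bmc.example.com"], "admin", password)`, with one hostname per node (these names are hypothetical).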

Power Consumption Comparison

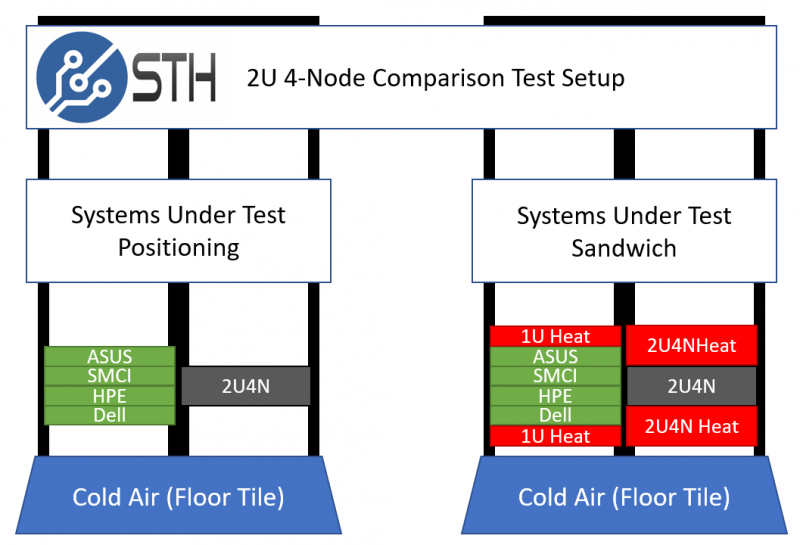

We have an industry-leading methodology for measuring power for 2U 4-node systems. You can read about our 2U 4-node power consumption testing methodology in detail. Essentially, we set up four independent 1U systems in the lab from various vendors. We then take all four sets of CPUs, RAM, Mellanox ConnectX-3 EN Pro dual port 40GbE adapters, and SATA DOMs and install them in the nodes of the 2U 4-node system we are testing. We then "sandwich" the systems with a similar set of servers to ensure proper heat soak over the 24-hour testing cycle.

We then apply a constant workload, developed in-house, that pushes each CPU to around 75%-80% of its maximum power consumption. Few workloads hit 100% power consumption (e.g., heavy AVX2 workloads on Haswell-EP and Broadwell-EP), so we believe this is a more realistic view of what most deployments will see. It also gives us a back-to-back comparison of how much more efficient the 2U 4-node layout is.

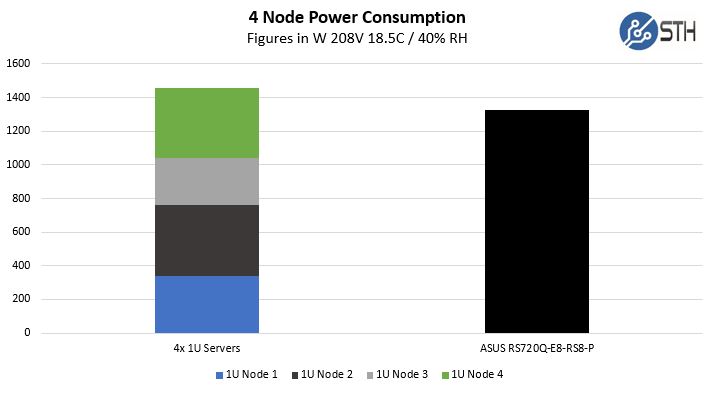

As we can see, the ASUS RS720Q-E8-RS8-P is quite awesome compared to four similarly configured 1U servers:

We saw power savings of just over 9% by consolidating into 2U 4-node systems. If you are wondering why these platforms are so popular, they cut space by 50% and power consumption by over 9% for the same workloads. In some of our data centers, this equates to over $95/ month per chassis in operational savings.
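The consolidation math itself is simple. Here is a minimal sketch of how the percentage and a monthly cost figure could be derived. The input wattages and the $/kWh rate are hypothetical placeholders, not STH measurements, and the monthly formula assumes metered per-kWh billing. Colocation contracts priced per circuit or per provisioned kW, which may be how our $95 figure arises, would need a different formula.

```python
def savings_percent(four_1u_watts: float, chassis_watts: float) -> float:
    """Percent power saved by moving four 1U servers' worth of parts into one 2U 4-node chassis."""
    return 100.0 * (four_1u_watts - chassis_watts) / four_1u_watts


def monthly_energy_savings(watts_saved: float, usd_per_kwh: float, hours_per_month: float = 730.0) -> float:
    """Dollar savings per month, assuming power is billed per metered kWh.

    730 h is roughly an average month (8760 h / 12). Contracts that bill per
    provisioned circuit or per kW will not follow this formula.
    """
    return watts_saved / 1000.0 * hours_per_month * usd_per_kwh


if __name__ == "__main__":
    # Hypothetical inputs for illustration only, not STH measurements.
    baseline_w = 1000.0  # combined draw of the four 1U servers under load
    chassis_w = 900.0    # the 2U 4-node chassis running the same parts
    print(f"{savings_percent(baseline_w, chassis_w):.1f}% saved")
    print(f"${monthly_energy_savings(baseline_w - chassis_w, 0.10):.2f}/month")
```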

Much of this is attributable to the 2kW 80+ Titanium efficiency rated redundant power supplies (1+1). The other factor is the shared power and cooling efficiency of the chassis with its larger midplane fans.

CPU Performance Baselines

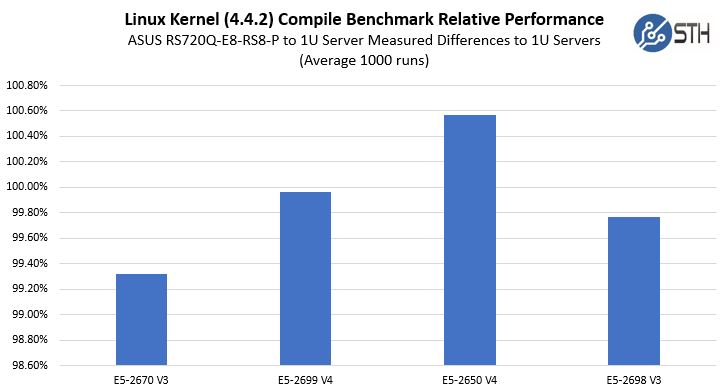

We also ran the heat-soaked machines through our standard benchmarks. We perform these benchmarks in the same "sandwich" we use for power consumption tests. Since the dual E5-2699 V4 and E5-2698 V3 systems finish a full day before the E5-2650 V4 system, we immediately spin up our power consumption test load script at the end of the 1000 runs.

Note: We zoomed the graph's Y-axis because sub-1% variations are difficult to see on a 0-101% Y-axis. These results are extremely close since we are using the same CPUs and RAM.

As you can see, the four nodes were extremely consistent with one another in terms of performance and consistent with our baseline runs from 1U servers. In that respect, they differed from some of the newer 2U 4-node servers we have tested.

In earlier iterations of 2U 4-node servers, we saw issues with CPU throttling while heat soaked. Running the 4kW+ test load over three days, our test results held within the expected benchmark variance, which was the purpose of the test. That means the ASUS system performed similarly to the four 1U test servers, which is what we want to see.
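One way to express "held within an expected benchmark variance" is to compare each node against the mean of the 1U baseline runs and, separately, to compare late runs against early runs to look for throttling-induced drift. This is a minimal sketch, assuming higher scores are better; the 1% tolerance, the 50-run window, and the node names are hypothetical choices, not STH's published thresholds.

```python
from statistics import mean
from typing import Dict, Sequence


def percent_deviation(score: float, baseline: float) -> float:
    """Signed deviation of a score from a baseline, in percent."""
    return 100.0 * (score - baseline) / baseline


def nodes_within_variance(
    node_scores: Dict[str, float], baseline_runs: Sequence[float], tolerance_pct: float = 1.0
) -> Dict[str, bool]:
    """Flag each node as within (True) or outside (False) the tolerance band.

    The baseline is the mean of the 1U runs; tolerance_pct is an assumed
    run-to-run variance, not a published threshold.
    """
    baseline = mean(baseline_runs)
    return {node: abs(percent_deviation(s, baseline)) <= tolerance_pct for node, s in node_scores.items()}


def drift_percent(runs: Sequence[float], window: int = 50) -> float:
    """Percent change of the last `window` runs versus the first `window` runs.

    For higher-is-better scores, a clearly negative value while heat soaked
    can hint at thermal throttling. For time-based metrics, flip the sign.
    """
    if len(runs) < 2 * window:
        raise ValueError("need at least 2 * window runs")
    return percent_deviation(mean(runs[-window:]), mean(runs[:window]))


if __name__ == "__main__":
    # Hypothetical scores for illustration only.
    print(nodes_within_variance({"node-a": 100.4, "node-b": 99.7}, [99.8, 100.2]))
```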

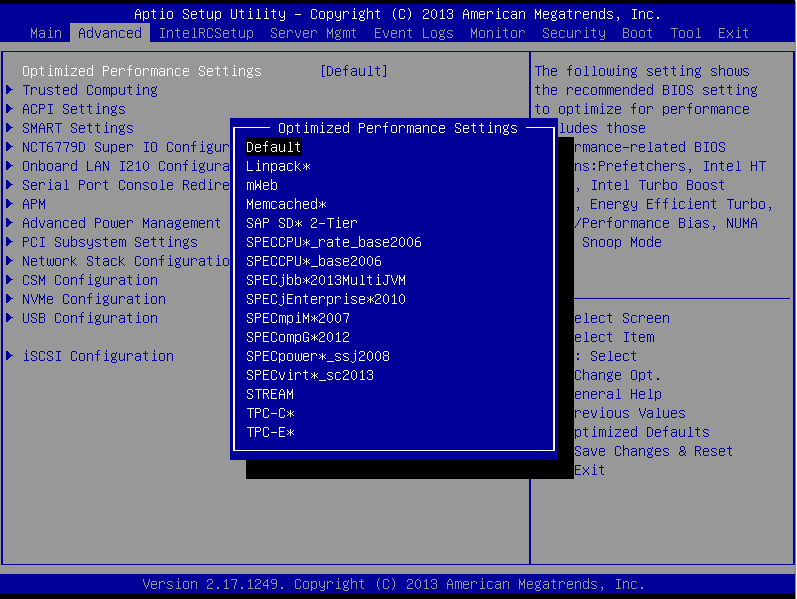

One extremely interesting option we saw was a set of optimized BIOS performance settings for various common benchmark applications. We did not use these settings since we tend to run mixed workloads on our machines; however, it is interesting to see this on the ASUS machine.

Final Words

The 2U 4-node form factor is so good from an efficiency perspective that ASUS' approach with this unit makes a lot of sense. While the ASUS RS720Q-E8-RS8-P may not have the most drive capacity, the longest feature list, or the easiest servicing, ASUS is targeting a low-cost segment. In that segment, a good-enough platform for getting compute nodes online is extremely effective. We do wish that ASUS had used a more standard form factor for the second expansion slot since PCIe slots are at a premium. Likewise, we would have liked to see support for larger 2280 and 22110 m.2 drives. At the end of the day, this was a highly effective setup that scales well. Our 12 nodes have been running at a load average of 78% ever since they were installed several months ago and have allowed us to consolidate machines in the data center.