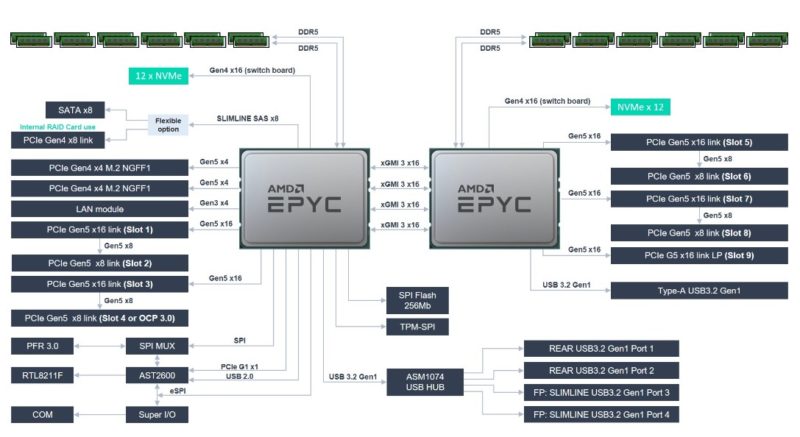

ASUS RS720A-E12-RS24U Topology

Here is the system’s block diagram. If it looks a bit complex, it is.

Let us dig into this one a bit. First, we can see 12x NVMe drives behind a Gen4 x16 switch link from each of the two CPUs. These are for the 24x NVMe front panel option. With 12 x4 drives (48 lanes) sharing each x16 uplink, the drives are oversubscribed on a 3:1 basis. The benefit is that ASUS is able to conserve lanes for all of the slots.
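For those who want to check the 3:1 figure, here is a minimal sketch of the arithmetic. It assumes each front-panel NVMe drive uses a x4 link, which the block diagram discussion implies but does not state outright. The function name `oversubscription` is ours, purely for illustration.

```python
from fractions import Fraction


def oversubscription(drives: int, lanes_per_drive: int, uplink_lanes: int) -> Fraction:
    """Ratio of downstream drive lanes to upstream switch lanes."""
    return Fraction(drives * lanes_per_drive, uplink_lanes)


# Assumption: 12 drives per switch, x4 per drive, one x16 uplink per CPU.
ratio = oversubscription(drives=12, lanes_per_drive=4, uplink_lanes=16)
print(f"{ratio.numerator}:{ratio.denominator}")
```

The same function can be used to compare other backplane options, for example a hypothetical layout with two x16 uplinks per switch.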

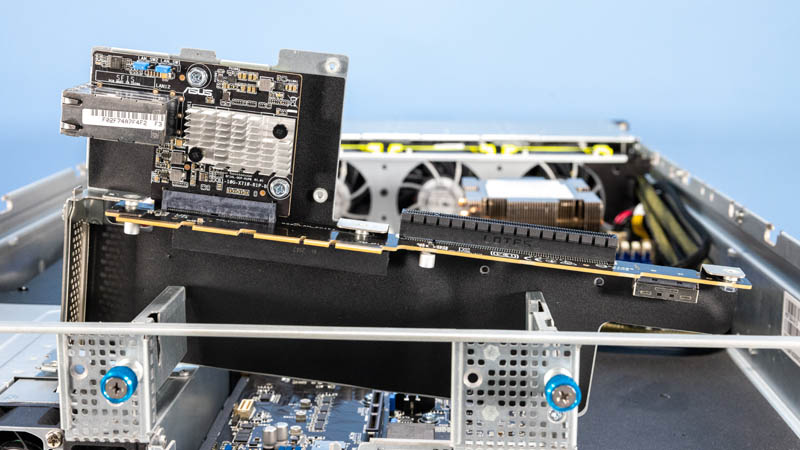

Next, the SlimSAS x8 that is providing SATA to the front bays 1-8 in our system can instead be routed to the internal slot on the low profile riser for an internal RAID card or HBA.

We can see the various options on the risers for x16/x0 or x8/x8 operation, but perhaps the most interesting are the PCIe Gen3 x4 lanes going to the LAN module. In this generation, AMD has additional PCIe Gen3 lanes for lower-value I/O. That is what those lanes are.

ASUS also wires up the complete set of four xGMI links between the CPUs. That provides the maximum socket-to-socket bandwidth. Some vendors only connect three links between the CPUs, which gives 75% of the bandwidth ASUS has here.

One small note is that we could not find CXL references in the documentation or spec sheet. Our sense is that we are early in the CXL ecosystem lifecycle, so this is something to keep an eye on in the future with this system.

Next, let us get to the performance.

ASUS RS720A-E12-RS24U Performance

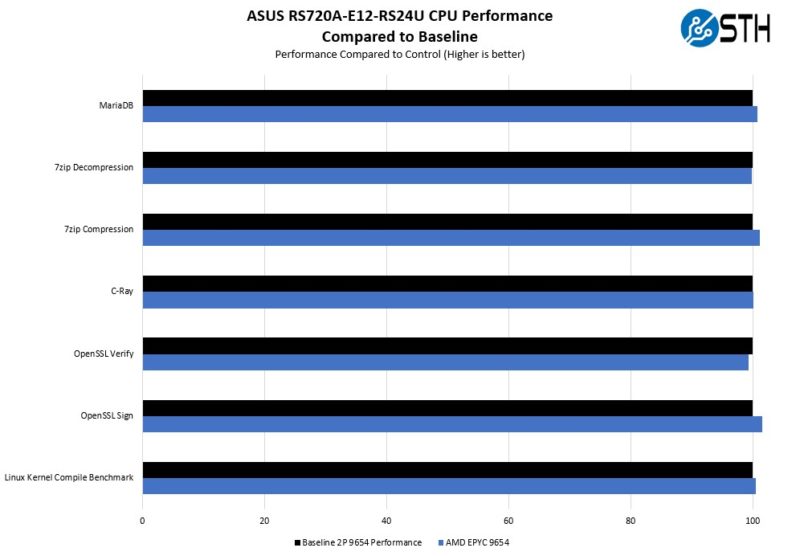

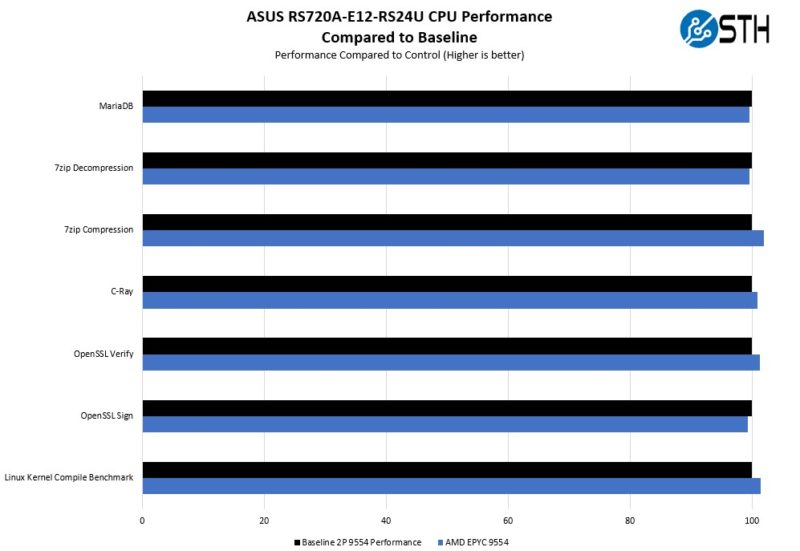

The performance of the AMD EPYC 9004 “Genoa” series is stunning. We were able to test a number of different CPUs in this platform to see how they performed. Again, for more of a generational comparison, check out the AMD EPYC Genoa launch article.

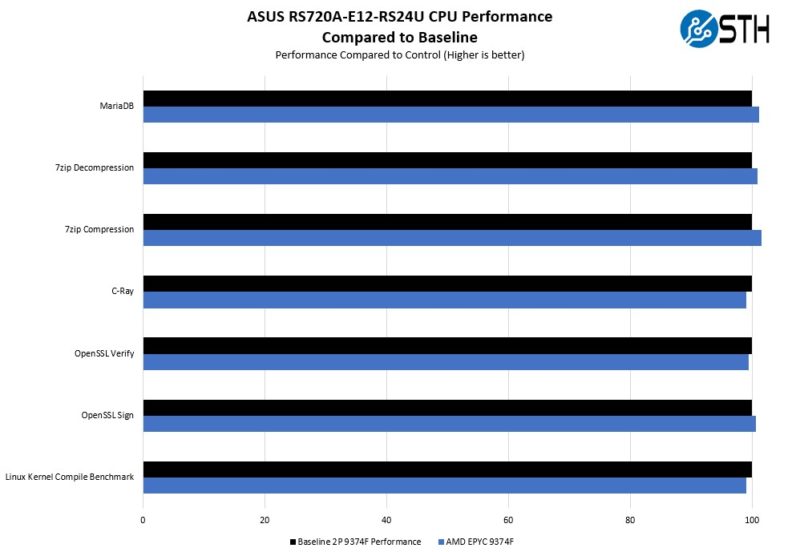

We first tested an array of applications with the 96-core AMD EPYC 9654 and the performance was very close to the baseline.

The 64-core AMD EPYC 9554 parts generally did slightly better than our baseline results with the same CPUs.

The 32-core AMD EPYC 9374F frequency-optimized parts are also generally a bit better than baseline.

We did not know how this server would perform with a smaller heatsink than we have seen on some systems. ASUS specs up to 400W cTDP support, and we were able to eke out ~3% more performance from increasing the CPU TDP in this system, so the cooling performance was certainly there.

Given how close we were to our baseline figures, again, we would suggest looking at the Genoa launch piece for more of an idea regarding competitive positioning.

Expect more on that as we get into the launch cycle for the 4th Gen Intel Xeon Scalable (Sapphire Rapids) parts in a few days.

Next, let us get to power consumption, the STH Server Spider, and then our final words.

Thank you Patrick.

It’s really interesting to see such performance with a traditional cooling solution.

As you mentioned, other vendors are using quite impressive cooling solutions like closed loop liquid cooling or big radiators with heat pipes.

I wonder how a design like the one Asus used will cope at full rack scale.

How loud is this server?

This is the best server I’ve seen from Asus or anyone else. How does the Asus warranty compare to, let’s say, Dell, HPE, or Lenovo?

It looks like a fantastic server, love the focus on I/O without worrying so much about legacy interfaces like SAS. Unfortunately as far as I can see the prices are around USD 7000, EUR 6500, AUD 20k so a bit pricey for the homelab.

It isn’t a bad price for the enormous compute and I/O it provides.

HPE and Dell will indeed be coming along with their own equivalents along with vendor price inflation too.

Glad the likes of Asus and their counterpart Asrock, along with Supermicro, exist. In all fairness, 7K is easily within the budget of the more advanced content creators, homelabbers, home AI devs, and smaller companies dabbling in compute tasks or dev.

Well done Asus, a lovely very usable design sticking to industry standards and some backwards compatibility at realistic pricing. A super well done to STH for covering this. I know what is on my summer shopping list this year ;)