ASUS RS720A-E11-RS24U Power Consumption

Power consumption of this server is largely dictated by the GPUs and CPUs used. With dual AMD EPYC 7763 CPUs and four NVIDIA A100 GPUs, we had a lot of power-hungry components.

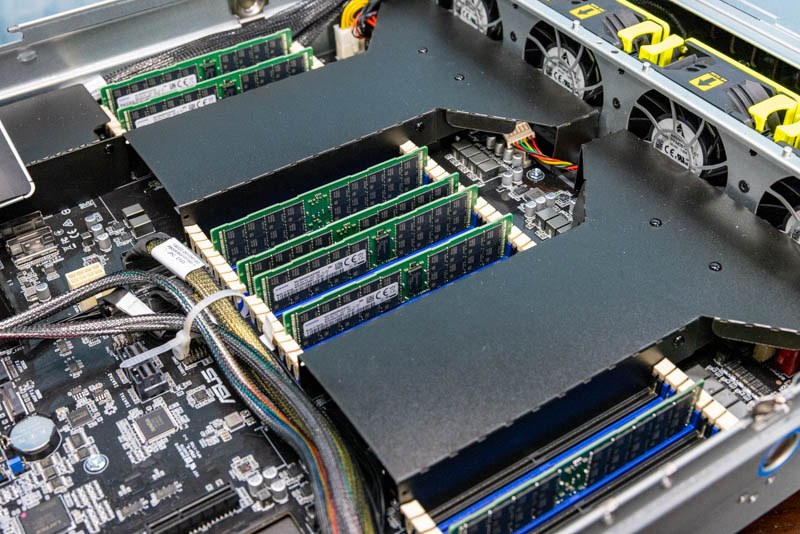

At idle, our system was using about 0.53kW with the four NVIDIA A100 GPUs, dual AMD EPYC 7763 CPUs, and 16x 32GB DIMMs. We were fairly easily able to get the system above 1.5kW and into the 1.9-2.0kW range. As such, the key implication is that we have around 1kW per U of power density in this 2U chassis. Many racks cannot handle that kind of density, so we are simply going to remind our users, as with all servers, to have a plan for this kind of power density if this is a configuration you want to deploy.
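As a rough planning aid, the density figure can be worked out directly. The following is a minimal sketch in Python, assuming the roughly 2.0kW peak draw we saw and the 2U chassis height. The 10kW rack power budget and 42U of usable space are hypothetical values chosen for illustration, not figures from this review.

```python
def power_density_kw_per_u(system_kw: float, rack_units: int) -> float:
    """Power drawn per rack unit of height."""
    return system_kw / rack_units


def servers_per_rack(rack_budget_kw: float, system_kw: float,
                     usable_u: int, rack_units: int) -> int:
    """Servers that fit in a rack, limited by power or space, whichever is lower."""
    by_power = int(rack_budget_kw // system_kw)  # limit from the power budget
    by_space = usable_u // rack_units            # limit from physical height
    return min(by_power, by_space)


if __name__ == "__main__":
    peak_kw = 2.0          # upper end of the 1.9-2.0kW range seen under load
    chassis_u = 2          # this is a 2U server
    rack_budget_kw = 10.0  # hypothetical rack power budget
    usable_u = 42          # hypothetical usable rack height

    density = power_density_kw_per_u(peak_kw, chassis_u)
    fit = servers_per_rack(rack_budget_kw, peak_kw, usable_u, chassis_u)
    print(f"Density: {density:.1f} kW/U, servers per rack: {fit}")
```

With those assumed rack numbers, power rather than space is the limit: five servers fit within the power budget, while 21 would fit by height alone.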

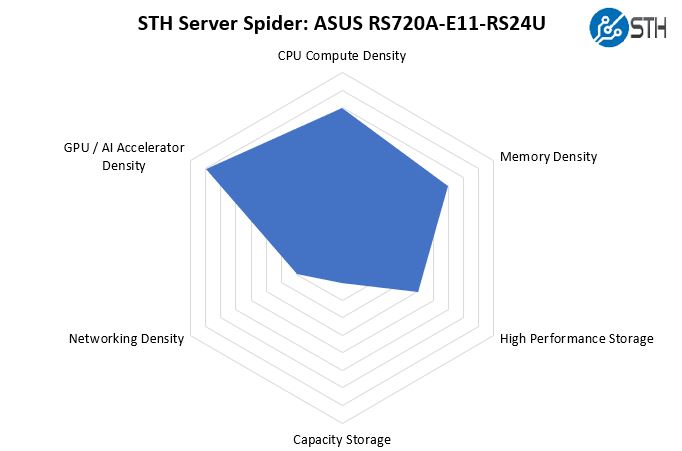

STH Server Spider: ASUS RS720A-E11-RS24U

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

This system is clearly focused on GPU/ accelerator density but also has a lot more CPU, memory, and storage capacity than the ASUS ESC4000A-E10 single-socket 2U server. We do not have 3.5″ drive bays for capacity storage, and we only have the dual 10Gbase-T networking available in our test configuration. One could use the low-profile slot for more networking but it would still not be extremely dense on that side.

We are just going to quickly note that ASUS has options for more networking density, but we are doing the STH Server Spider based on the configuration we had. Clearly, the focus of our configuration was on the GPU/ accelerator density and storage instead of having many PCIe slots for networking.

Final Words

Overall, this is a very interesting system. ASUS combines a lot into a relatively short 840mm chassis. We get two 64-core CPUs which would be roughly equivalent to three Intel Xeon Platinum 8380 CPUs. ASUS did a great job getting compute density to match the accelerator density.
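The core-count side of that comparison is simple arithmetic. Here is a minimal sketch in Python, assuming 40 cores per Xeon Platinum 8380, which is Intel's published figure rather than something stated in this review. It compares raw core counts only and says nothing about per-core performance, clock speeds, or memory bandwidth.

```python
EPYC_7763_CORES = 64   # per CPU, as stated in the review
XEON_8380_CORES = 40   # per CPU, assumed from Intel's published spec


def total_cores(cores_per_cpu: int, cpu_count: int) -> int:
    """Total physical cores across a number of identical CPUs."""
    return cores_per_cpu * cpu_count


if __name__ == "__main__":
    amd = total_cores(EPYC_7763_CORES, 2)    # dual-socket EPYC configuration
    intel = total_cores(XEON_8380_CORES, 3)  # three Xeon Platinum 8380 CPUs
    print(f"2x EPYC 7763: {amd} cores, 3x Xeon 8380: {intel} cores")
```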

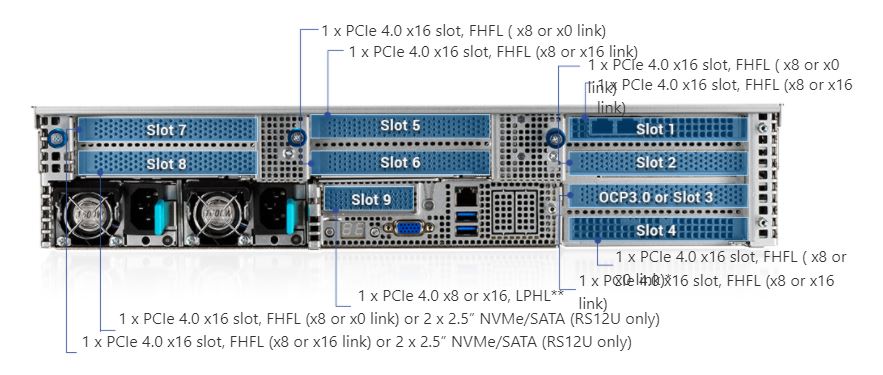

Having the ability to add four PCIe NVIDIA A100 GPUs and keep everything cool is great. It does require adding the extra fans internally and the external fan, but then again this is around a 1kW per U configuration which means cooling is going to be a major challenge. Again, while we are testing with the NVIDIA A100 40GB PCIe GPUs because that is what we have access to, this system could use other GPUs or network/ storage/ AI accelerators just as easily. We also have a lot of storage potential on the front of the system.

The one item we wish that this server had more of is onboard networking. Our configuration does have higher-speed networking with the dual 10Gbase-T, but when paired with four $10,000+ accelerators, plus high-end CPUs and storage, this ends up being a system that can easily be configured to sell for $60,000+, which makes the dual 10Gbase-T option seem imbalanced. This can be remedied by adding a NIC in place of the PIKE II card though.

Overall, it was really interesting to see how this PCIe Gen4 platform takes top-end NVIDIA accelerators and AMD CPUs and combines them with some of the new server design trends such as cabled accelerator cages/ risers and the latest BMC to make an efficient and relatively compact 2U server.

You guys are really killing it with these reviews. I used to visit the site only a few times a month but over the past year it's been a daily visit, so much interesting content being posted and very frequently. Keep up the great work :)

But can it run Crysis?

@Sam – it can simulate running Crysis.

The power supplies interrupt the layout. Is there any indication of a 19″ power shelf/busbar power standard like OCP? Servers would no longer be standalone, but would have more usable volume and improved airflow. There would be overall cost savings as well, especially for redundant A/B datacenter power.

Was this a demo unit straight from ASUS? Are there any system integrators out there who will configure this and sell me a finished system?

there’s one in Australia who does this system:

https://digicor.com.au/systems/Asus-RS720A-E11-RS24U