ASUS RS720A-E11-RS24U Performance

For this exercise, we are using our legacy Linux-Bench scripts, which give us the cross-platform “least common denominator” results we have been using for years, as well as several results from our updated Linux-Bench2 scripts.

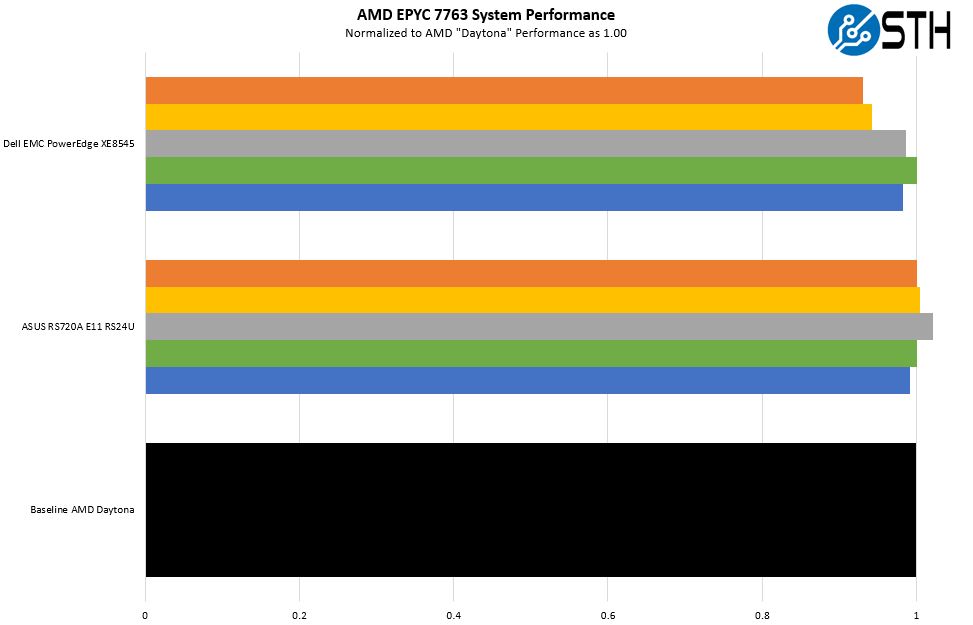

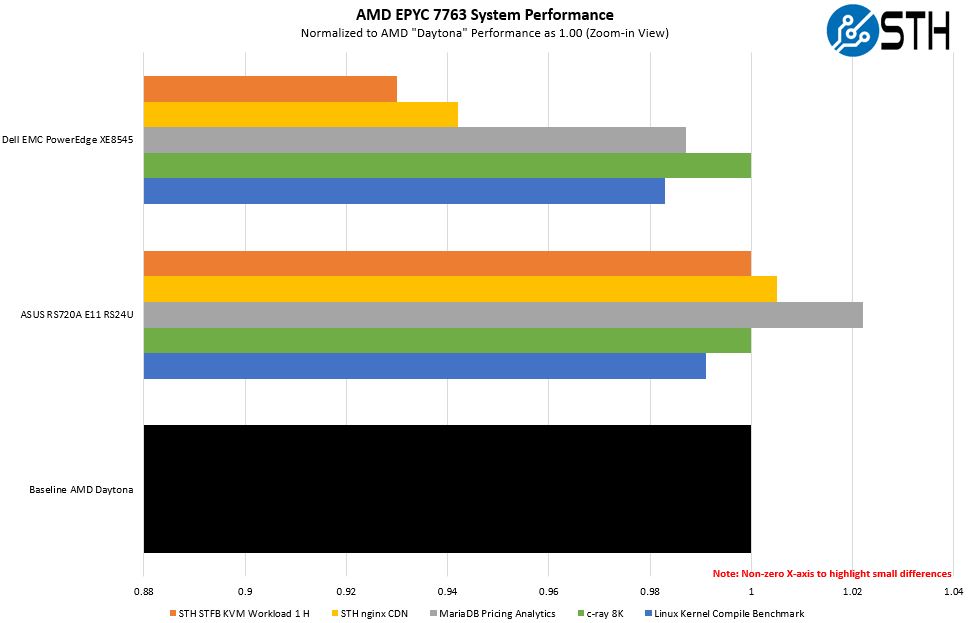

Since we just did our AMD EPYC 7003 review and the EPYC 7763 review, we thought presenting the same data for the third time in three weeks would be redundant. Instead, we are focusing on showing a number of different workloads and how the CPU performance fares compared to the baseline AMD “Daytona” reference platform as well as an NVIDIA A100 Redstone platform from Dell EMC that we will have a review of soon.

Here is a zoomed-in version specifically to make the results easier to see.

Overall, from a CPU perspective, ASUS is performing in line with the AMD Daytona reference platform across this suite. Dell’s platform is a higher-power platform and is slightly behind, but the differences are fairly small. Still, the point here is that we wanted to validate that the high-end 64-core EPYC 7763s were well-cooled in this system, especially since the 1kW of GPUs requires that space be dedicated to accelerator cooling. Those massive fans and ductwork that we saw in the hardware overview are doing a lot of cooling.

ASUS RS720A-E11-RS24U GPU Compute Performance

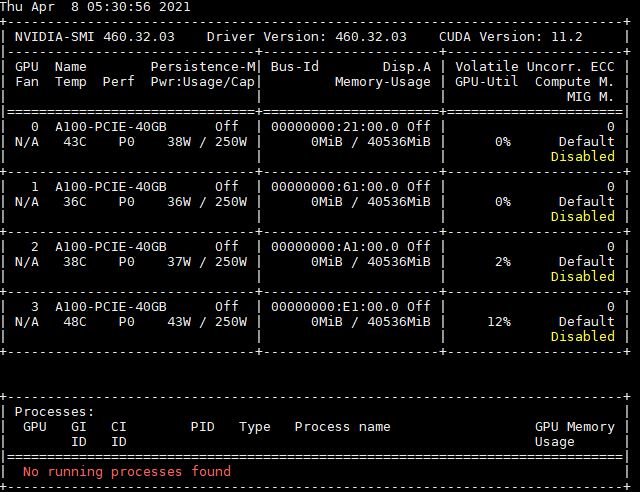

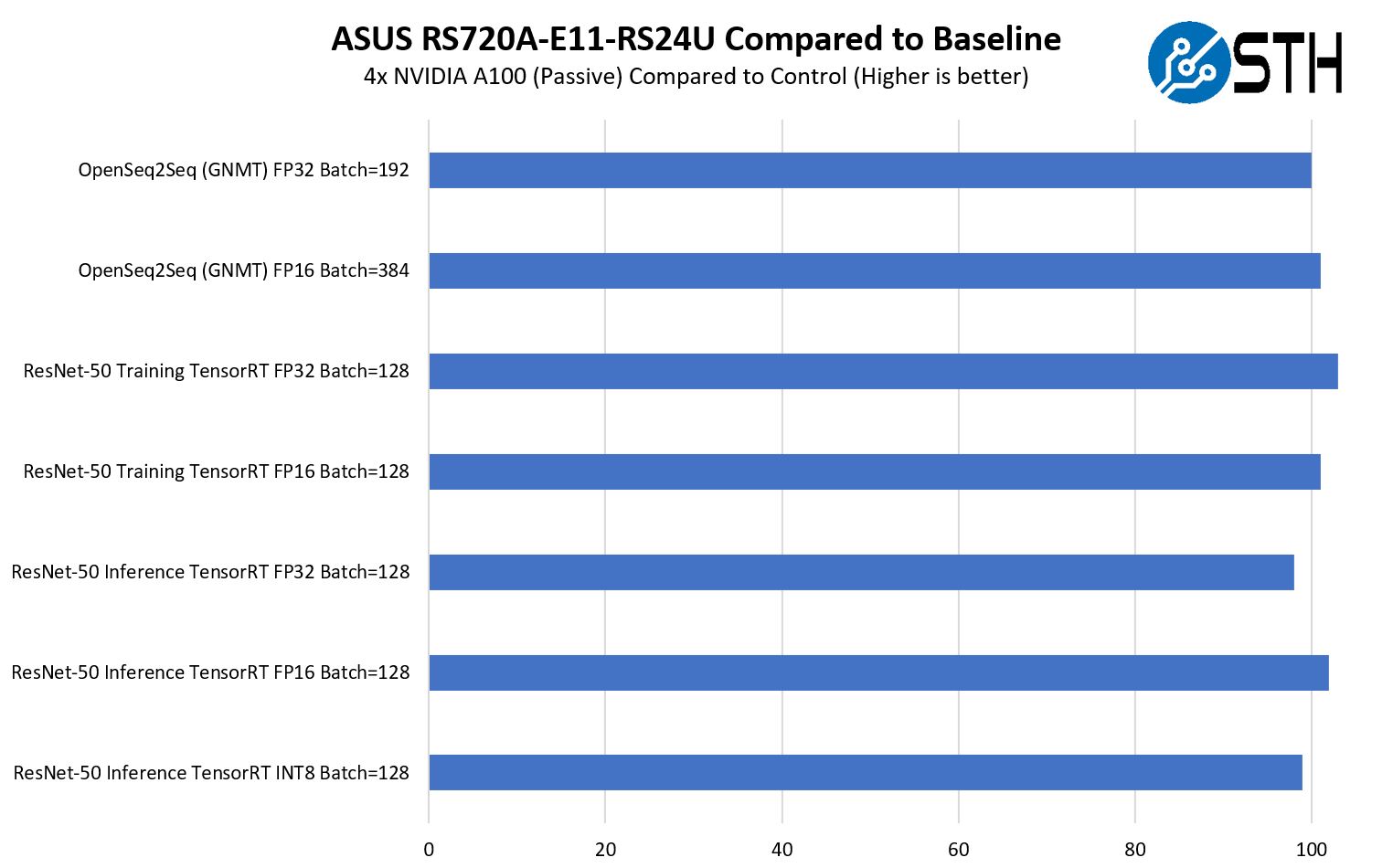

We wanted to validate that this type of system delivers per-GPU performance analogous to what we see in more traditional 8-10 GPU 4U servers. Here we are using four passively cooled NVIDIA A100 40GB PCIe cards that we put into one of our 8x GPU boxes. We ran the P100 GPUs sitting alongside the new A100 GPUs in our reference platform to create a baseline that we could compare to the performance of the RS720A-E11-RS24U.

In the system, there are no PCIe switches. As a result, we can still get “SYS” P2P transfers; however, the topology goes back to the AMD EPYC IO Die to traverse paths from one GPU to another. As such, we ran these four GPUs through a number of benchmarks to test that capability:
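For readers who want to check link types on their own hardware, `nvidia-smi topo -m` prints a GPU-to-GPU matrix where “SYS” marks a path that crosses PCIe and the interconnect between NUMA nodes. Below is a minimal Python sketch that picks out those pairs. The `SAMPLE_TOPO` text, including its mix of `NODE` and `SYS` entries and its affinity values, is made up for illustration and was not captured from the review system. The helper names `link_types` and `pairs_with` are our own.

```python
import re
from itertools import combinations

# Hypothetical matrix shaped like `nvidia-smi topo -m` output.
# Illustrative only; NOT captured from the RS720A-E11-RS24U.
SAMPLE_TOPO = """\
        GPU0    GPU1    GPU2    GPU3    CPU Affinity
GPU0     X      NODE    SYS     SYS     0-63
GPU1    NODE     X      SYS     SYS     0-63
GPU2    SYS     SYS      X      NODE    64-127
GPU3    SYS     SYS     NODE     X      64-127
"""

GPU_NAME = re.compile(r"GPU\d+")


def link_types(topo_text):
    """Map (row GPU, column GPU) to the link label in the matrix."""
    lines = [ln for ln in topo_text.splitlines() if ln.strip()]
    header = lines[0].split()
    # Column positions of the GPU entries in the header row.
    gpu_cols = [(i, name) for i, name in enumerate(header)
                if GPU_NAME.fullmatch(name)]
    links = {}
    for ln in lines[1:]:
        tokens = ln.split()
        if not GPU_NAME.fullmatch(tokens[0]):
            continue  # skip legend text and non-GPU rows
        for i, col_name in gpu_cols:
            # Row tokens are shifted by one because of the row label.
            links[(tokens[0], col_name)] = tokens[i + 1]
    return links


def pairs_with(links, link_type):
    """Return unordered GPU pairs connected by the given link label."""
    gpus = sorted({row for row, _ in links})
    return [(a, b) for a, b in combinations(gpus, 2)
            if links[(a, b)] == link_type]


if __name__ == "__main__":
    for a, b in pairs_with(link_types(SAMPLE_TOPO), "SYS"):
        print(f"{a} <-> {b}: SYS")
```

To try this on a live system, one could replace `SAMPLE_TOPO` with the output of `subprocess.run(["nvidia-smi", "topo", "-m"], capture_output=True, text=True).stdout`. Real output may include extra device columns or terminal formatting that this simple parser does not handle.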

There are variances here, but the performance was very close to what we were seeing in the larger systems when we use 4x GPU sets. This falls within our margin of error, which suggests we are getting sufficient cooling. Generally, GPU compute is limited mostly by topology and cooling, so this is about what we would expect. Hopefully, we will get to publish a review of an A100 8x GPU server soon.

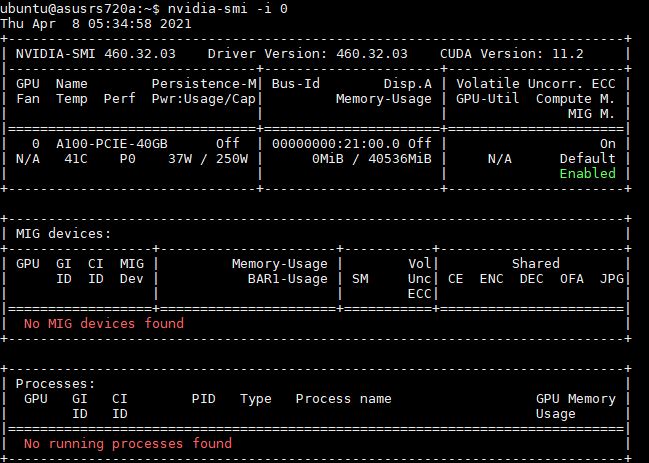

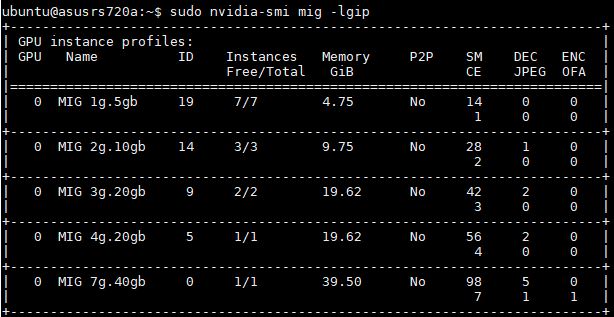

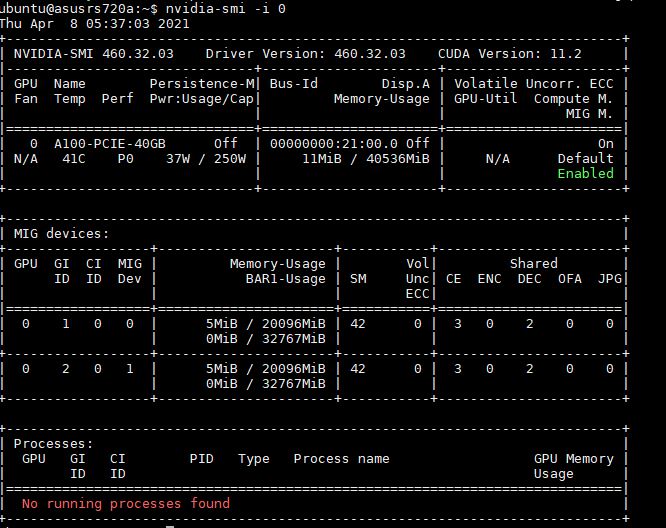

One other feature we wanted to show quickly is MIG, or multi-instance GPU. A new feature on the NVIDIA A100 is that one can “split” a GPU’s compute and memory resources into one to seven different segments. This creates virtual GPU partitions that can then be assigned to different VMs or containers. A good example is inferencing: these workloads often do not need a full large GPU and scale better across several smaller GPUs, which MIG makes possible.

Here are a few options we have for different MIG instance sizes:

Here we have created a 20GB partition for a new MIG instance.
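To make the partition sizes more concrete, here is a small Python sketch of the slice arithmetic behind MIG on an A100 40GB, which exposes seven compute slices and eight memory slices. The profile names follow NVIDIA’s published naming. The helper functions are our own, and the fit check is only a necessary condition, since the real driver also enforces placement rules that this does not model. A 20GB instance like the one above could be either a `3g.20gb` or a `4g.20gb` profile.

```python
# (compute slices, memory slices) used by each MIG profile on an A100 40GB.
A100_40GB_PROFILES = {
    "1g.5gb": (1, 1),
    "2g.10gb": (2, 2),
    "3g.20gb": (3, 4),
    "4g.20gb": (4, 4),
    "7g.40gb": (7, 8),
}

TOTAL_COMPUTE_SLICES = 7
TOTAL_MEMORY_SLICES = 8


def max_instances(profile):
    """How many instances of a single profile fit on one GPU."""
    compute, memory = A100_40GB_PROFILES[profile]
    return min(TOTAL_COMPUTE_SLICES // compute,
               TOTAL_MEMORY_SLICES // memory)


def fits_on_one_gpu(profiles):
    """Simplified check: do the requested profiles stay within the
    slice budget? Ignores the driver's placement constraints."""
    compute = sum(A100_40GB_PROFILES[p][0] for p in profiles)
    memory = sum(A100_40GB_PROFILES[p][1] for p in profiles)
    return (compute <= TOTAL_COMPUTE_SLICES
            and memory <= TOTAL_MEMORY_SLICES)


if __name__ == "__main__":
    for name in A100_40GB_PROFILES:
        print(f"{name}: up to {max_instances(name)} per GPU")
    print(fits_on_one_gpu(["3g.20gb", "2g.10gb", "2g.10gb"]))
```

The seven-compute-slice budget is why two `4g.20gb` instances do not fit on one GPU even though their memory adds up to exactly 40GB.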

This is one of the most exciting features of this generation.

Next, we are going to wrap up with our power consumption, STH Server Spider, and our final words.

You guys are really killing it with these reviews. I used to visit the site only a few times a month but over the past year it’s been a daily visit, so much interesting content being posted and very frequently. Keep up the great work :)

But can it run Crysis?

@Sam – it can simulate running Crysis.

The power supplies interrupt the layout. Is there any indication of a 19″ power shelf/busbar power standard like OCP? Servers would no longer be standalone, but would have more usable volume and improved airflow. There would be overall cost savings as well, especially for redundant A/B datacenter power.

Was this a demo unit straight from ASUS? Are there any system integrators out there who will configure this and sell me a finished system?

there’s one in Australia who does this system:

https://digicor.com.au/systems/Asus-RS720A-E11-RS24U