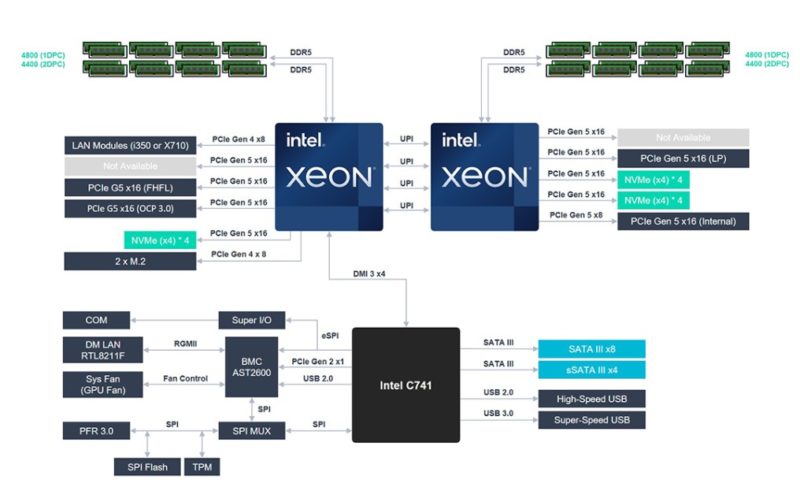

ASUS RS700-E11-RS12U Block Diagram and Topology

Here is the block diagram for the server. One really nice touch from ASUS is that the diagram shows the PCIe Gen5 x16 lanes that go unused in this 1U server.
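For a sense of scale, here is a back-of-the-envelope sketch of what one of those unused Gen5 x16 links could carry. It uses only the PCIe 5.0 signaling rate (32 GT/s per lane) and 128b/130b line encoding and ignores protocol overhead, so treat the result as an upper bound rather than an ASUS specification.

```python
# Rough per-direction throughput of a PCIe Gen5 link (a sketch).
# PCIe 5.0 signals at 32 GT/s per lane with 128b/130b line encoding.
# Packet/protocol overhead (TLP headers, DLLPs) is ignored, so real
# transfers land somewhat lower than these figures.

GEN5_GT_PER_S = 32.0            # gigatransfers per second, per lane
ENCODING_EFFICIENCY = 128 / 130  # 128 payload bits per 130 bits on the wire


def pcie_gen5_gbytes_per_s(lanes: int) -> float:
    """Approximate usable GB/s in one direction for a Gen5 link of `lanes` width."""
    gbits_per_lane = GEN5_GT_PER_S * ENCODING_EFFICIENCY  # one bit per transfer
    return gbits_per_lane / 8 * lanes


if __name__ == "__main__":
    print(f"x16: {pcie_gen5_gbytes_per_s(16):.1f} GB/s per direction")
```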

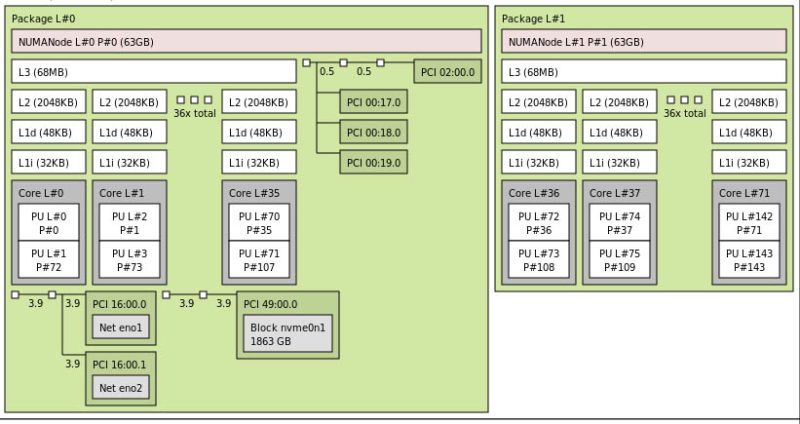

Here is a quick look at the topology. As configured when we received the system, CPU0 had all of the peripherals attached, while CPU1 had none.

Our system came with only two 64GB DIMMs, one per CPU. While that was fine for screenshots, we replaced them with 8x DDR5 RDIMMs per socket for our performance testing.
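To illustrate why one DIMM per socket is fine for screenshots but not for benchmarking, here is a rough peak-bandwidth calculation. It assumes DDR5-4800 modules (the review does not state the speed), 8 memory channels per socket on these 4th Gen Xeon Scalable CPUs, and one DIMM per channel; real sustained bandwidth will be lower than these theoretical peaks.

```python
# Theoretical peak DRAM bandwidth per socket: a back-of-the-envelope sketch.
# ASSUMPTIONS (not stated in the review): DDR5-4800 RDIMMs, 8 memory
# channels per socket, one DIMM per channel, and a 64-bit (8-byte) data
# path per channel with ECC bits excluded.

CHANNELS_PER_SOCKET = 8
MT_PER_S = 4800          # assumed DDR5-4800 (megatransfers per second)
BYTES_PER_TRANSFER = 8   # 64-bit channel, ECC excluded


def peak_gb_per_s(populated_channels: int) -> float:
    """Peak theoretical bandwidth in GB/s for a number of populated channels."""
    if not 0 <= populated_channels <= CHANNELS_PER_SOCKET:
        raise ValueError("channel count out of range")
    return populated_channels * MT_PER_S * BYTES_PER_TRANSFER / 1000


as_shipped = peak_gb_per_s(1)                          # one DIMM per CPU
fully_populated = peak_gb_per_s(CHANNELS_PER_SOCKET)   # 8 RDIMMs per socket
```

Under these assumptions, the as-shipped configuration leaves seven of eight channels empty, so each socket has one-eighth of its theoretical memory bandwidth available.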

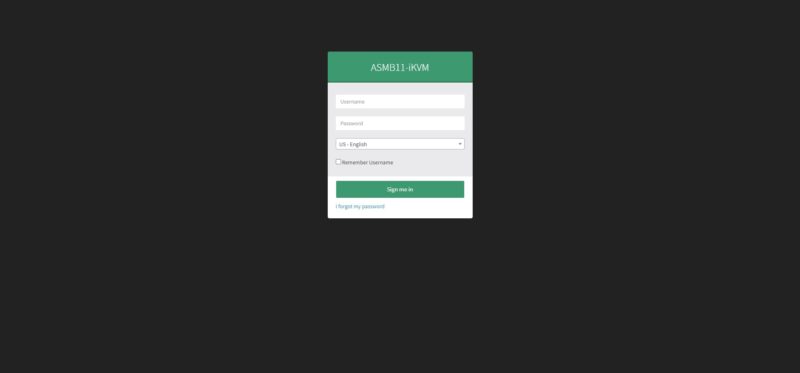

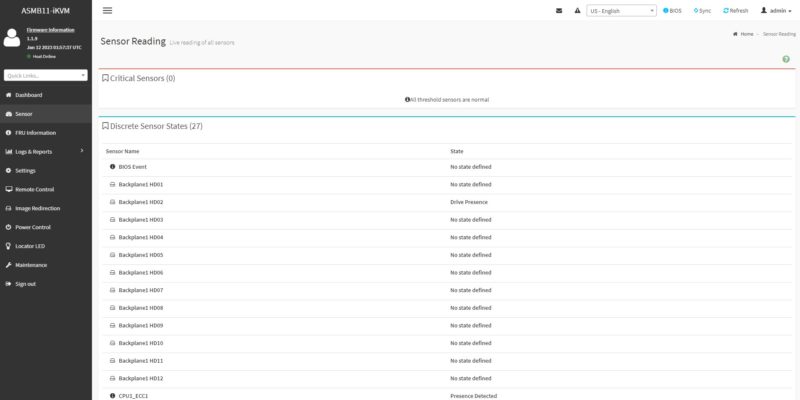

ASUS ASMB11-iKVM Management

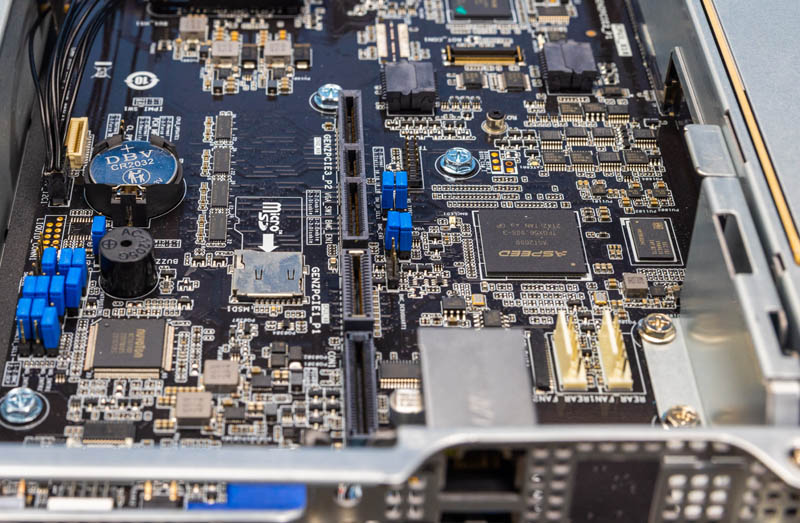

With this generation of servers, we have the new ASPEED AST2600 BMC. That means we also get a newer ASUS management solution.

The new solution appears to be MegaRAC-based and is called the ASUS ASMB11-iKVM.

It has all of the sensor readings and logs one would expect, and beyond the web GUI management interface, it also offers IPMI and Redfish.
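As a sketch of what the Redfish side can look like, the snippet below reads temperature sensors from the standard DMTF `Thermal` resource. The chassis ID, the authorization header, and whether the ASMB11-iKVM exposes `Thermal` (rather than, or alongside, the newer `ThermalSubsystem`) are assumptions, not verified on this system.

```python
import json
import urllib.request


def read_temperatures(thermal_json: str) -> dict[str, float]:
    """Map sensor name -> reading in Celsius from a Redfish Thermal resource.

    Sensors without a current reading (ReadingCelsius is null) are skipped.
    """
    doc = json.loads(thermal_json)
    return {
        t["Name"]: t["ReadingCelsius"]
        for t in doc.get("Temperatures", [])
        if t.get("ReadingCelsius") is not None
    }


def fetch_thermal(bmc: str, chassis_id: str, auth_header: str) -> str:
    """GET the Thermal resource from the BMC.

    `chassis_id` and `auth_header` are deployment-specific (hypothetical here);
    list /redfish/v1/Chassis to discover the real chassis ID.
    """
    url = f"https://{bmc}/redfish/v1/Chassis/{chassis_id}/Thermal"
    req = urllib.request.Request(url, headers={"Authorization": auth_header})
    with urllib.request.urlopen(req) as resp:
        return resp.read().decode()
```

BMCs typically ship with self-signed TLS certificates, so a real script would likely need to pass an `ssl.SSLContext` that trusts the BMC's certificate via `urlopen(..., context=...)`.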

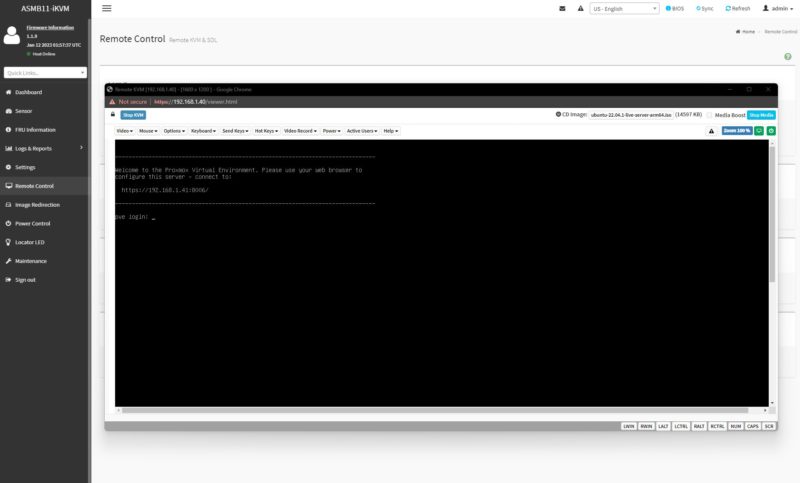

One nice feature is that ASUS has the HTML5 iKVM with remote media as standard. We have seen other vendors charge for this capability, so it is nice that ASUS includes it here.

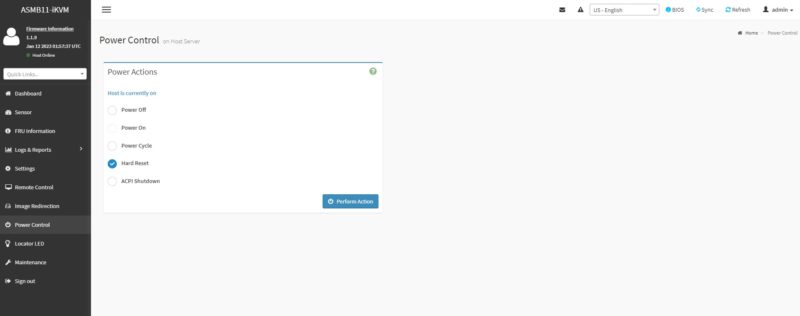

There are also standard features, such as the ability to power cycle the server. One feature we wish were here is the ability to boot directly to BIOS.
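Power cycling maps onto the standard Redfish `ComputerSystem.Reset` action, and the Redfish schema also defines a one-time boot override into firmware setup (a `BootSourceOverrideTarget` of `BiosSetup`). The sketch below only builds the requests without sending them; the system ID is a hypothetical placeholder (list `/redfish/v1/Systems` to find the real one), and whether the ASMB11-iKVM honors the `BiosSetup` override was not tested here.

```python
import json
import urllib.request

JSON_HEADERS = {"Content-Type": "application/json"}


def reset_request(bmc: str, system_id: str,
                  reset_type: str = "PowerCycle") -> urllib.request.Request:
    """Build (but do not send) a Redfish ComputerSystem.Reset action request."""
    url = (f"https://{bmc}/redfish/v1/Systems/{system_id}"
           "/Actions/ComputerSystem.Reset")
    body = json.dumps({"ResetType": reset_type}).encode()
    return urllib.request.Request(url, data=body, method="POST",
                                  headers=JSON_HEADERS)


def boot_to_setup_request(bmc: str, system_id: str) -> urllib.request.Request:
    """Build a PATCH requesting a one-time boot into firmware (BIOS) setup."""
    url = f"https://{bmc}/redfish/v1/Systems/{system_id}"
    body = json.dumps({"Boot": {"BootSourceOverrideEnabled": "Once",
                                "BootSourceOverrideTarget": "BiosSetup"}}).encode()
    return urllib.request.Request(url, data=body, method="PATCH",
                                  headers=JSON_HEADERS)
```

Sending either request still requires authentication (and, as above, TLS trust for the BMC's certificate); the boot override would then take effect on the next reset.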

Next, let us get to the performance.

ASUS RS700-E11-RS12U Performance

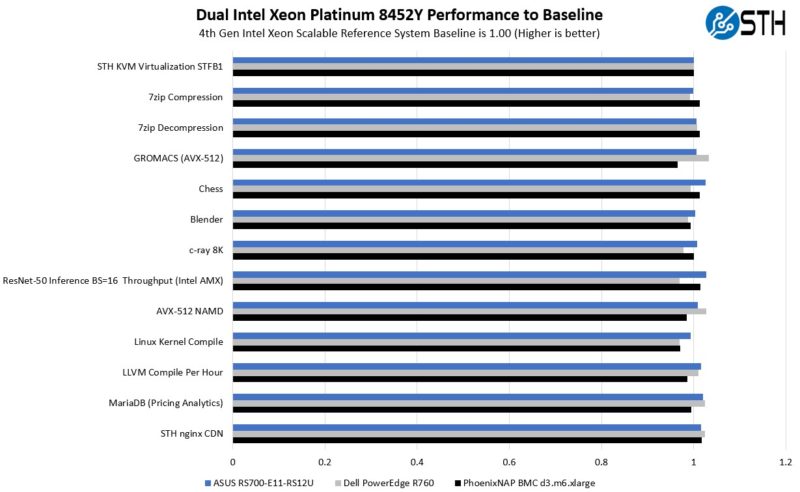

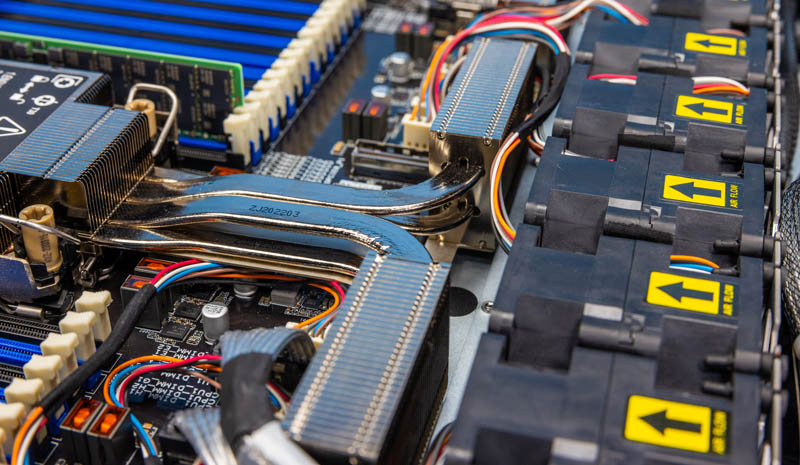

The CPUs installed in this unit are Intel Xeon Platinum 8452Y processors. We configured them for the P1 profile: 36 cores, a 300W TDP, and a 2.0GHz base clock, which is the maximum core count for these Intel CPUs. We have been testing a large series of servers with these processors, and that led to an interesting finding, especially since this is a 1U platform.

This server, despite only being 1U, performed similarly to the Dell PowerEdge R760 2U server we reviewed as well as the PhoenixNAP Bare Metal Cloud server.

This was notable since ASUS only has 1U of height to work with. In this generation, cooling CPUs is a challenge, so 2U servers can perform a bit better simply because they can use larger coolers. If you want to know why we spent extra time on the cooler design in this review, this result is why.

What was perhaps more interesting is how ASUS did this while still using a reasonable amount of power. That is what we are going to look at next.

It’s amazing how fast power consumption is going up in servers… My ancient 1U HP DL360 Gen9 in my homelab, with 8 cores per processor, has 2x 500 Watt Platinum power supplies (although it also has 8x 2.5″ SATA drive slots versus 12 in this new server, no onboard M.2 slots, and fewer RAM slots).

So IF someone gave me one of these new beasts for my basement server lab, would my wife notice that I hijacked the electric dryer circuit? Hmmm.

@carl, you don’t need 1600W PSUs to power these servers. Honestly, I don’t see a use case where this server uses more than 600W, even with one GPU. I guess ASUS just put in the best-rated 1U PSU they could find.

Data Center (DC) “(server room) Urban renewal” vs “(rack) Missing teeth”: From when I first landed in a sea of ~4000 Xeon servers in 2011 until I powered off my IT career in said facility in 2021, pre-existing racks went from 32 servers per rack to far fewer (“missing teeth”).

Yes, cores per socket went from 4, 6, 8, 10, and 12 on Xeon to 32 on EPYC Rome. And yes, with each server upgrade I was getting more work done per server, but fitting fewer servers per rack in this corporate DC’s original circa-2009 server rooms after maxing out their power/cooling.

Yes, we upped our power/cooling game in the 12-core Xeon era with immersion cooling as we built out a new server room. My first group of vats held 104 servers (2U/4-node chassis) per 52U vat… The next group of vats, with the 32-core Romes, we could not fill (though there were still more cores per vat)… So again, we were losing ground on a real-estate basis.
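The 104-server figure follows directly from the vat geometry. A trivial sketch, assuming the full 52U of each vat is usable for chassis (the comment implies this but does not say so):

```python
def nodes_per_vat(vat_units: int, chassis_units: int, nodes_per_chassis: int) -> int:
    """Server nodes that fit when a vat is filled with identical multi-node chassis."""
    chassis_count = vat_units // chassis_units  # whole chassis only
    return chassis_count * nodes_per_chassis


# First group of vats from the comment: 52U vat, 2U chassis, 4 nodes each.
full_vat = nodes_per_vat(52, 2, 4)  # 26 chassis x 4 nodes
```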

….

So do we just agree that, as this hockey-stick curve of server power usage grows quickly, we live with a growing “missing teeth” issue over upgrade cycles, and perhaps start looking at 5 to 8 year “urban renewal” cycles (rebuilding a given server room’s power/cooling infrastructure at great expense) instead of the perhaps 10 to 15 year cycles of around 2010?

For anyone running their own data center, this will greatly affect their TCO spreadsheets.

@altmind… I am not sure why you can’t imagine it using more than 600W when it used much, much more (+200W at 70% load, +370W at peak) in the review, all without a GPU.
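Putting the numbers from these comments together gives a rough power picture. This sketch reads the +200W/+370W deltas as relative to the 600W figure quoted earlier, uses the 300W TDP per Xeon Platinum 8452Y from the review, and assumes 1+1 redundant PSUs, where a single supply must be able to carry the whole load; none of these are independent measurements.

```python
# Back-of-the-envelope power figures from the comment thread (a sketch).
# The deltas are the commenter's reading of the review, taken here as
# relative to the 600 W figure; they are not independently verified.

BASELINE_W = 600           # figure quoted in the earlier comment
DELTA_70PCT_LOAD_W = 200
DELTA_PEAK_W = 370
CPU_TDP_W = 300            # per Xeon Platinum 8452Y, from the review
SOCKETS = 2

load_70pct_w = BASELINE_W + DELTA_70PCT_LOAD_W   # 800 W
peak_w = BASELINE_W + DELTA_PEAK_W               # 970 W
cpu_tdp_total_w = CPU_TDP_W * SOCKETS            # 600 W for the CPUs alone


def headroom_w(psu_rating_w: int, draw_w: int) -> int:
    """Spare capacity if one PSU must carry the whole load (assumed 1+1 redundancy)."""
    return psu_rating_w - draw_w
```

Under that redundancy assumption, a single 500 Watt supply like those in the DL360 Gen9 mentioned above could not carry the ~970W peak on its own, while a 1600W unit leaves about 630W of headroom.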

@Carl, I think there is room for innovation in the DC space. I don’t see the power/heat density changing, and it is not exactly easy to “just run more power” to an existing DC, let alone add cooling.

Which leads to the current default way out of the “Urban renewal” vs “Missing teeth” dilemma, as demand for compute rises and the power/cooling needs of each new compute device rise with it: “Burn down more rain forest” (build more data centers as our cattle herd grows).

But I’m not sure every place wants to be Northern Virginia, or wants to devote a growing % of its energy grid to facilities that use a lot of power (thus requiring more hard-to-site power generation facilities).

As for “I think there is room for innovation in the DC space”, this seems to be a basic physics problem that I don’t see any solution for on the horizon. Hmmm.