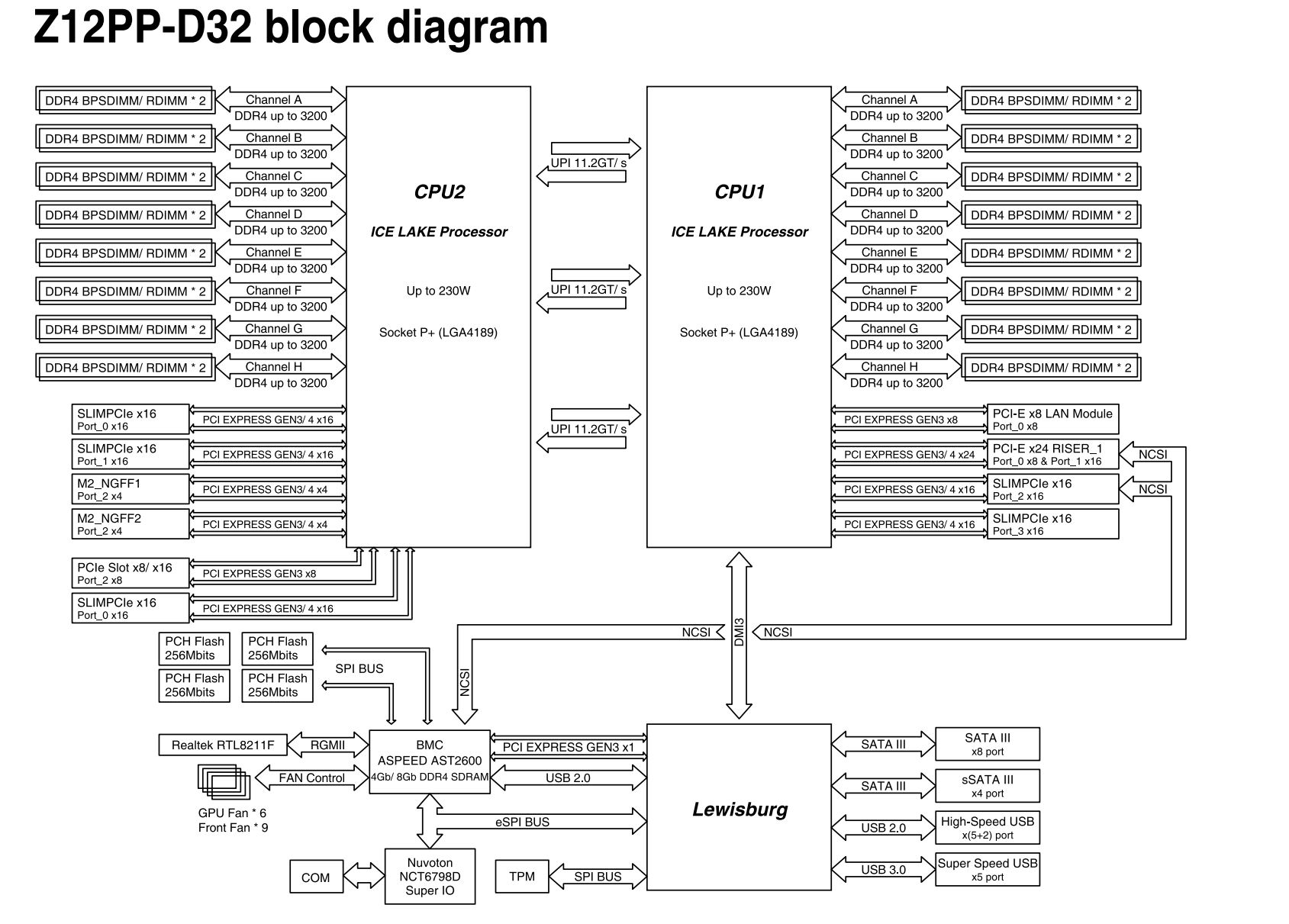

ASUS RS700-E10-RS12U Block Diagram

Here is the official block diagram for the ASUS Z12PP-D32. That is the motherboard used in this server.

There is not too much here, but we will quickly note that this motherboard has fairly well-balanced I/O from each CPU. That balance, and the sheer number of PCIe lanes required, means that one will want to use two CPUs in this server to get access to the full feature set.

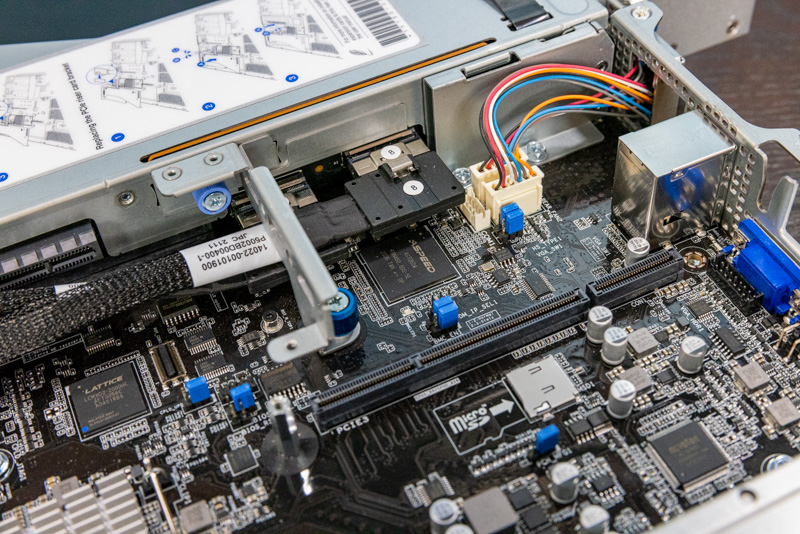

Next, we are going to get to management before getting to our performance section.

ASUS RS700-E10-RS12U Management

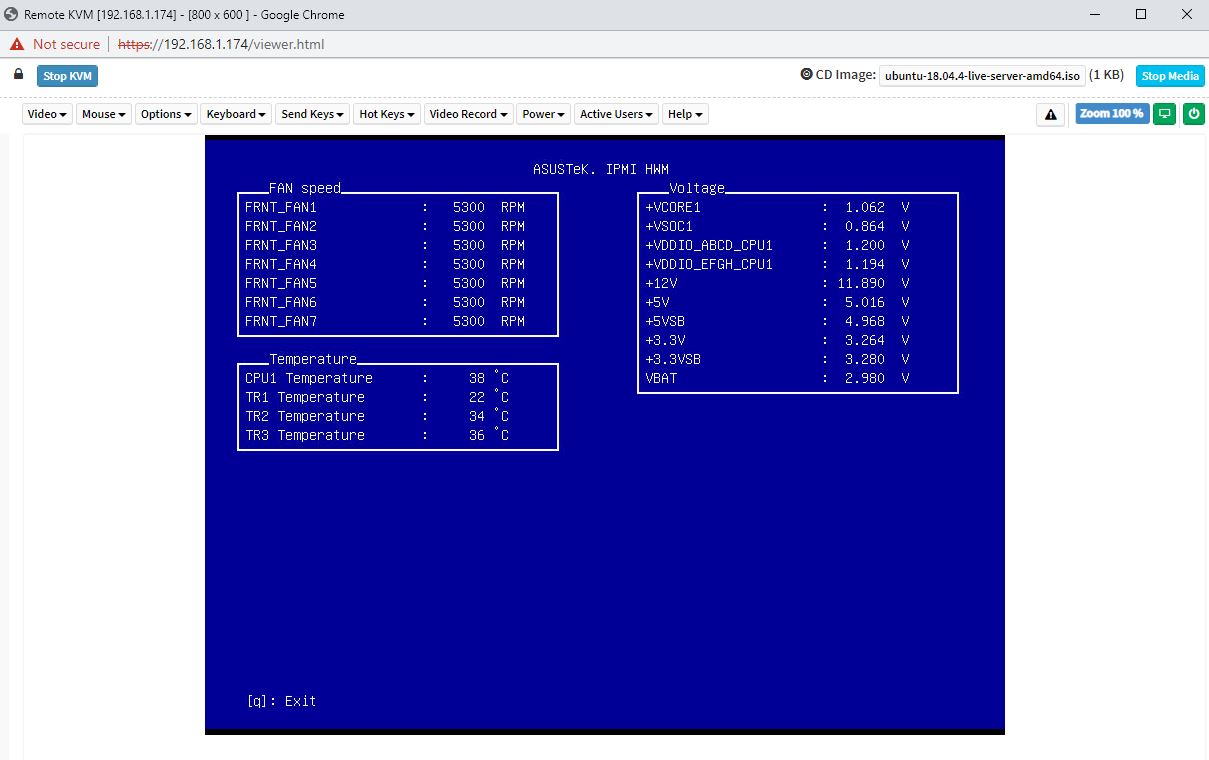

The ASUS management solution is built upon the ASPEED AST2600 BMC running MegaRAC SP-X. ASUS claims this is faster to boot, but we did not time it. The AST2600 is a jump in Arm compute resources, so that would make sense.

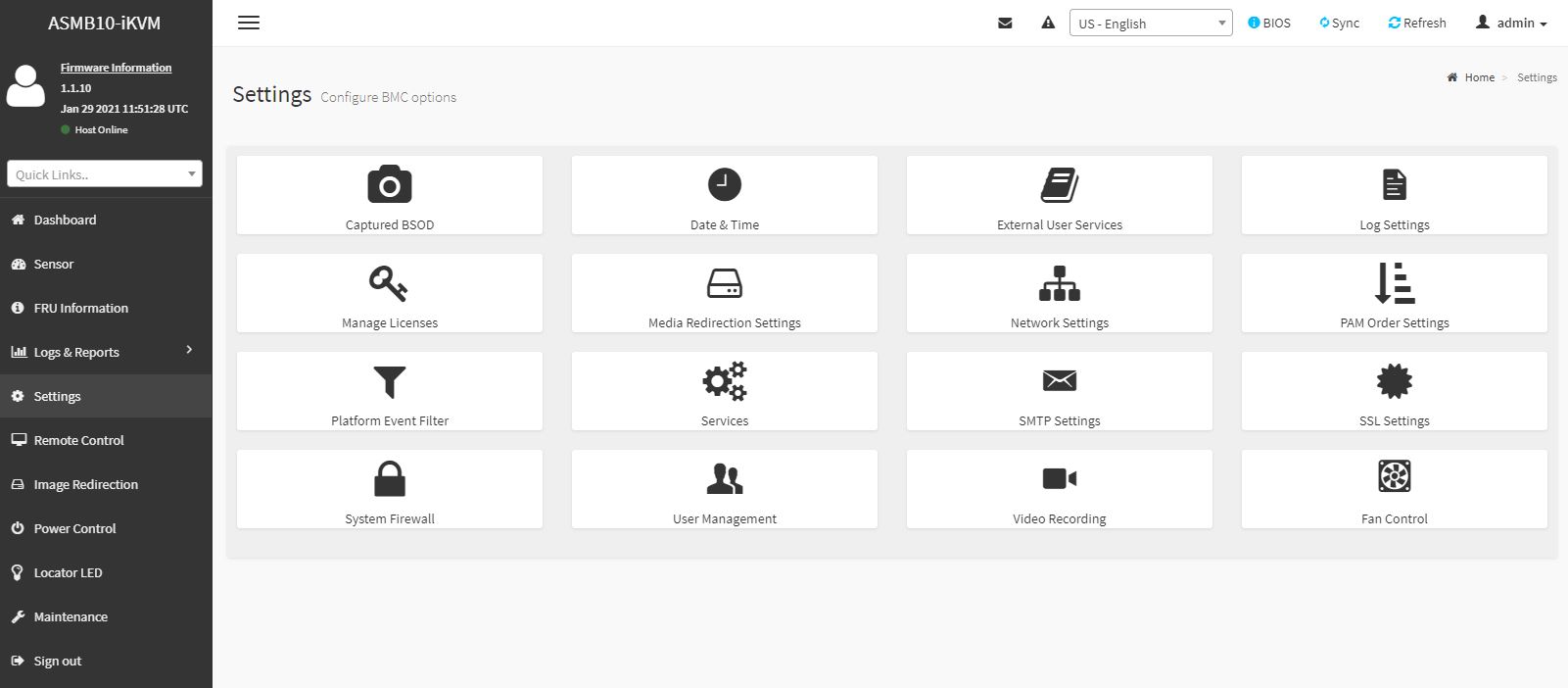

ASUS calls this solution the ASMB10-iKVM, which provides IPMI, WebGUI, and Redfish management for the platform. The last EPYC GPU server we looked at from ASUS, the ESC4000A-E10, was still using ASMB9 and the AST2500, so this is an upgrade to a newer, faster BMC.

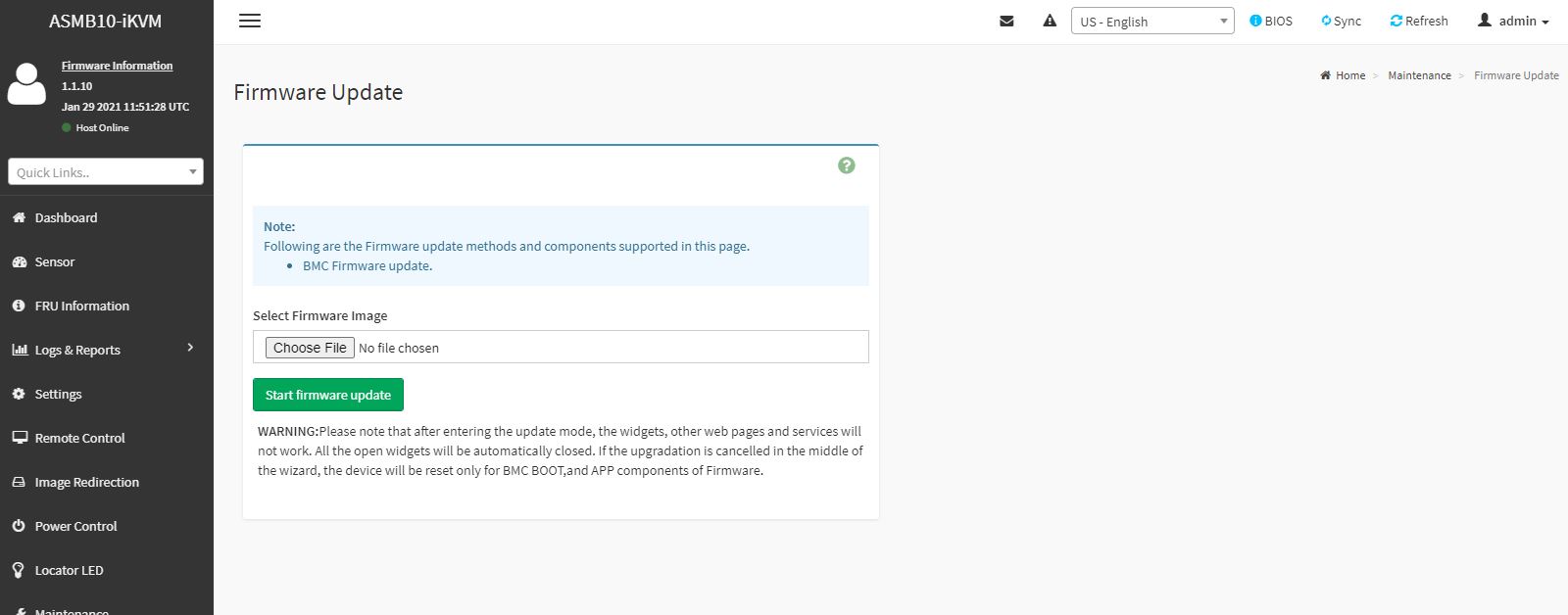

Some of the unique features come down to what is included. For example, ASUS allows remote BIOS and firmware updates via this web GUI as standard. Supermicro charges extra for the BIOS update feature.

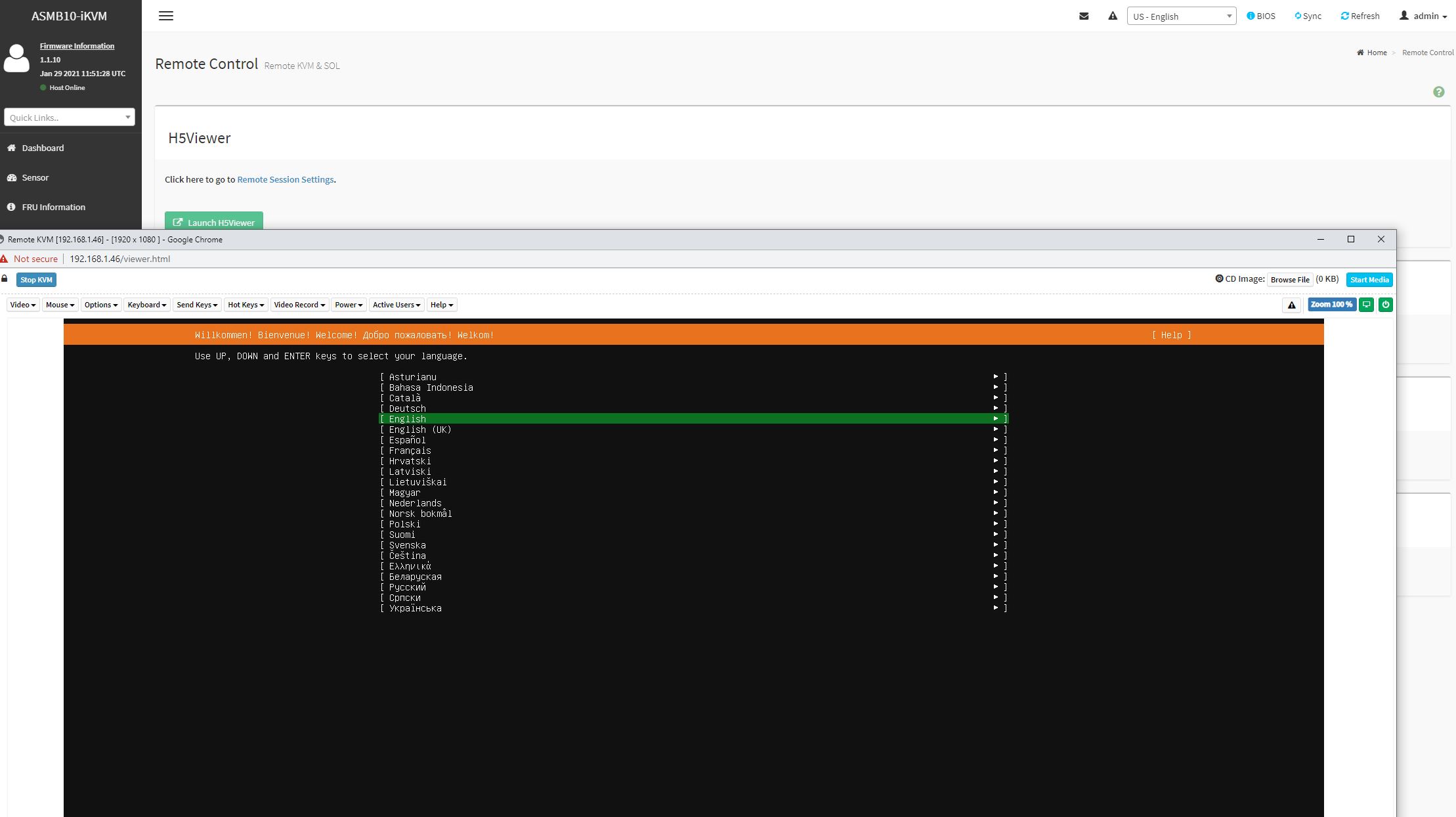

Another nice example is that we get the HTML5 iKVM functionality included with the solution. There are serial-over-LAN and Java iKVM options too. Companies like Dell EMC, HPE, and Lenovo charge extra for this functionality, and sometimes quite a bit extra.

ASUS supports Redfish APIs for management as well. Something that we do not get is full BIOS control in the web GUI as we get in some solutions such as iDRAC, iLO, and XClarity. For GPU servers where one may need to change settings such as Above 4G Decoding in certain configurations, full remote BIOS control is a handy feature to have.
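As a rough illustration of what Redfish access can look like from a script, here is a minimal Python sketch that parses a payload shaped like the standard DMTF Redfish `Thermal` resource and reports the margin to each sensor's critical threshold. The sensor names, readings, chassis ID, and hostname are all made-up placeholders, and we have not verified which resources or property names the ASMB10-iKVM actually exposes, so treat this as a sketch under those assumptions rather than the ASUS API.

```python
import json

# Illustrative payload only: shaped like a Redfish Thermal resource.
# Sensor names and values are placeholders, not readings from this server.
SAMPLE_THERMAL = json.loads("""
{
  "Temperatures": [
    {"Name": "CPU1 Temperature", "ReadingCelsius": 58, "UpperThresholdCritical": 95},
    {"Name": "CPU2 Temperature", "ReadingCelsius": 61, "UpperThresholdCritical": 95},
    {"Name": "GPU1 Temperature", "ReadingCelsius": null, "UpperThresholdCritical": 90}
  ]
}
""")


def thermal_url(bmc_host, chassis_id):
    """Build the conventional Thermal resource URL (chassis IDs vary by vendor)."""
    return f"https://{bmc_host}/redfish/v1/Chassis/{chassis_id}/Thermal"


def temperature_headroom(thermal):
    """Return {sensor name: degrees C below the critical threshold}.

    Sensors with a missing reading or threshold are skipped.
    """
    headroom = {}
    for sensor in thermal.get("Temperatures", []):
        reading = sensor.get("ReadingCelsius")
        limit = sensor.get("UpperThresholdCritical")
        if reading is None or limit is None:
            continue
        headroom[sensor["Name"]] = limit - reading
    return headroom


if __name__ == "__main__":
    # Hypothetical host and chassis ID, shown only to illustrate the URL shape.
    print(thermal_url("bmc.example", "1"))
    for name, margin in temperature_headroom(SAMPLE_THERMAL).items():
        print(f"{name}: {margin} C below critical")
```

In practice, one would fetch the JSON from the BMC over HTTPS with authentication and pass the decoded body to `temperature_headroom`. The parsing is kept separate from the network call so it can be checked offline.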

Another small feature that is different from ASUS is the IPMI Hardware Monitor or IPMI HWM. While one can pull hardware monitoring data from the IPMI interface, and some from the BIOS, ASUS has a small app to do this from the BIOS.

We like ASUS’s approach overall to management. It aligns with many industry standards and also has some nice customizations.

Some organizations prefer more vendor-centric solutions, but ASUS is designing its management platform to be more of what we would consider an industry-standard solution that does not push vendor lock-in.

Next, let us discuss performance.

ASUS RS700-E10-RS12U Performance

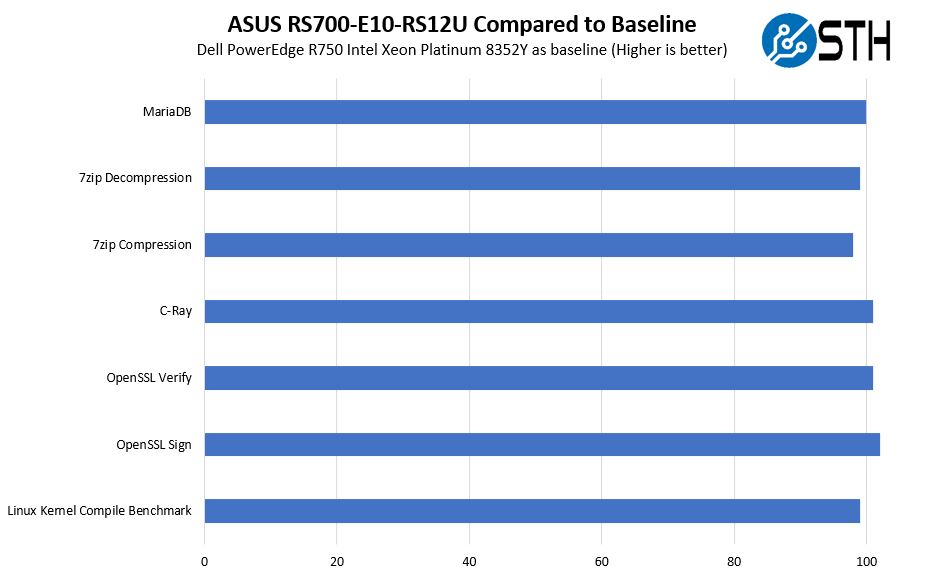

For this exercise, we are simplifying our discussion. We still only have a handful of Ice Lake SKUs, so we are focusing on whether the ASUS platform can cool the CPUs and GPU sufficiently. For that, we are trying two CPU options and a GPU option and comparing them to our baseline using the same RAM and SSDs. First, we are going to start with the Intel Xeon Platinum 8352Y in our ASUS system and our control.

There are certainly some differences in performance but we still have small test variances. We are going to say that this is effectively the same performance even though there are some benchmark variations.
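To make "effectively the same performance" concrete, here is a small Python sketch of the kind of check involved: compute each benchmark's percentage difference against the baseline system and flag anything outside a chosen tolerance. The benchmark names, scores, and the 2% tolerance are hypothetical placeholders, not our measured results or a threshold we apply formally.

```python
def percent_delta(test, baseline):
    """Percentage difference of a test score relative to the baseline score."""
    return (test - baseline) / baseline * 100.0


def within_variance(results, baselines, tolerance_pct=2.0):
    """Map each benchmark name to True if it is within +/- tolerance_pct of baseline."""
    return {
        name: abs(percent_delta(score, baselines[name])) <= tolerance_pct
        for name, score in results.items()
    }


if __name__ == "__main__":
    # Placeholder numbers for illustration only.
    baselines = {"bench_a": 100.0, "bench_b": 200.0}
    results = {"bench_a": 99.0, "bench_b": 190.0}
    for name, ok in within_variance(results, baselines).items():
        delta = percent_delta(results[name], baselines[name])
        print(f"{name}: {delta:+.1f}% ({'within' if ok else 'outside'} tolerance)")
```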

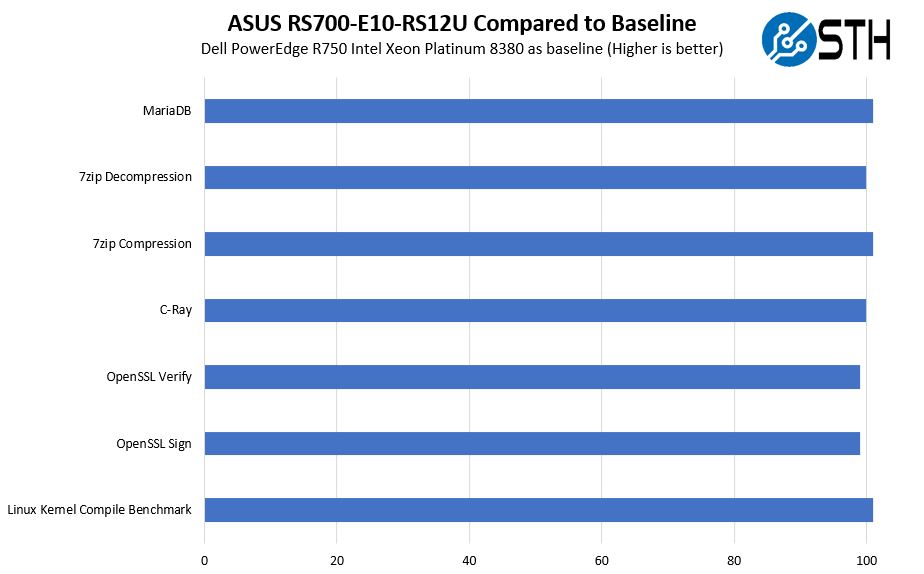

We also wanted to test high-end Intel Xeon Platinum 8380 270W TDP CPUs. Here is that view:

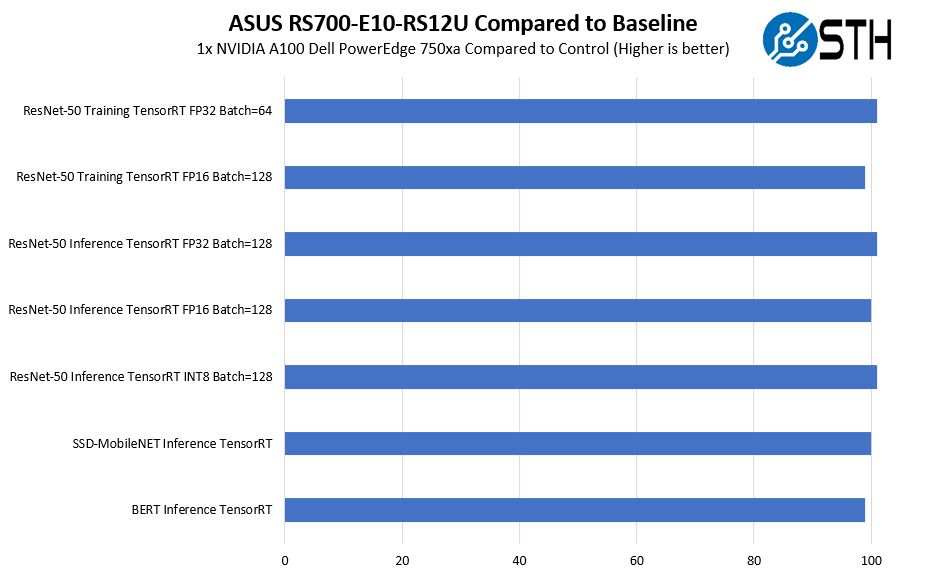

Perhaps the trend is ever so slightly below the Platinum 8352Y, but it again is close enough to be what we consider a test variation. Looking at the GPU side with an A100:

Here we were using a single A100 of the four installed in the R750xa as a comparison. Again, very similar performance between the two systems.

We did not get to test fan failure scenarios, but it seems like the ASUS cooling solution was able to produce the performance we would expect.

Next, we are going to have power consumption, market positioning, and our final words.

haha, the second RS is for Redundant PSU, and first RS stands for Rackmount Server

If that’s true Juno Shi it should be RP (redundant power) not RS

The name basically tells you:

RS – Rack Server

7 – DP Mainstream

0 – 1U

0 – W/O IB

–

E10 – Intel Mehlow / Whitley

R – Redundant PSU

S – Hot-Swap HDD Bay

12 – 12 HDD Bays

U – All Bays support NVMe

Hope that clarifies it.

This looks like a great server appliance, however (and I know this is difficult) how does its cost compare to a similar Dell or HPE?

Our in-house IT dept only buys HPE – everything. Sure it’s good hardware but you can’t help but think that we’re paying a premium for the name (and highly likely someone’s brother-in-law works at an HPE channel supplier)

Does this support 6 or 12 NVMe with 1 CPU installed?