ASUS ROG Zenith II Extreme Motherboard BIOS

The ROG Zenith II Extreme Motherboard BIOS is very much like the BIOS on past ROG motherboards we have reviewed. If you have used any of these motherboards before, you will be right at home.

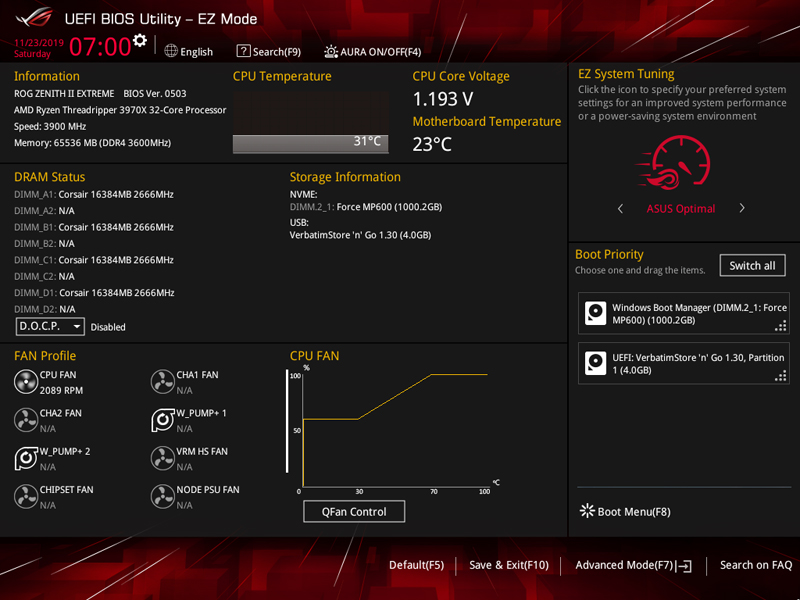

Here we see the Home screen where we can enter different aspects of the ROG Zenith II Extreme Motherboard BIOS in EZ-Mode.

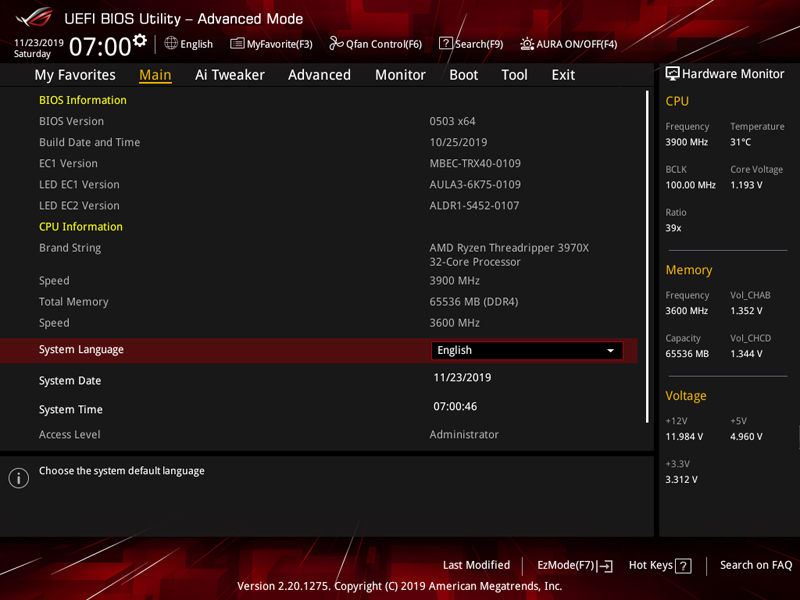

At the Main screen, we find the typical BIOS functions. At the right of the screen, the BIOS reports various useful system specifications.

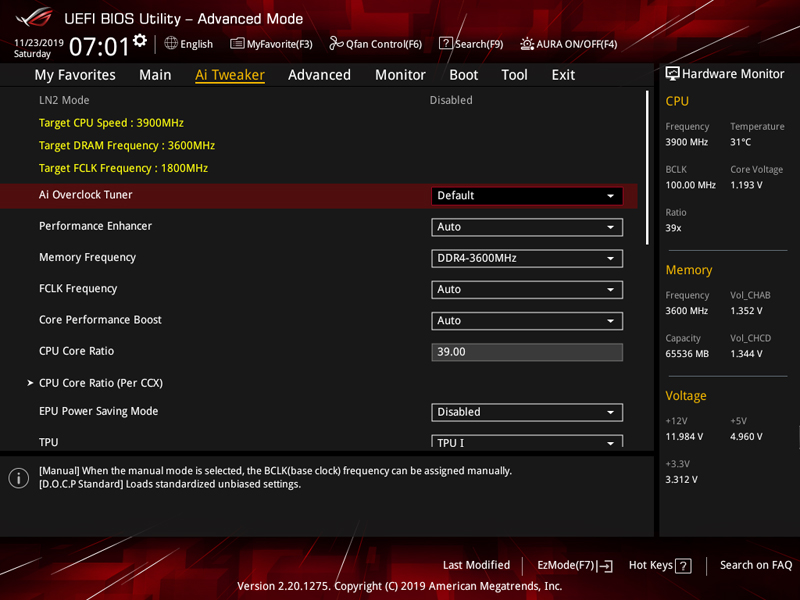

The Ai Tweaker tab allows a user to set overclocking features.

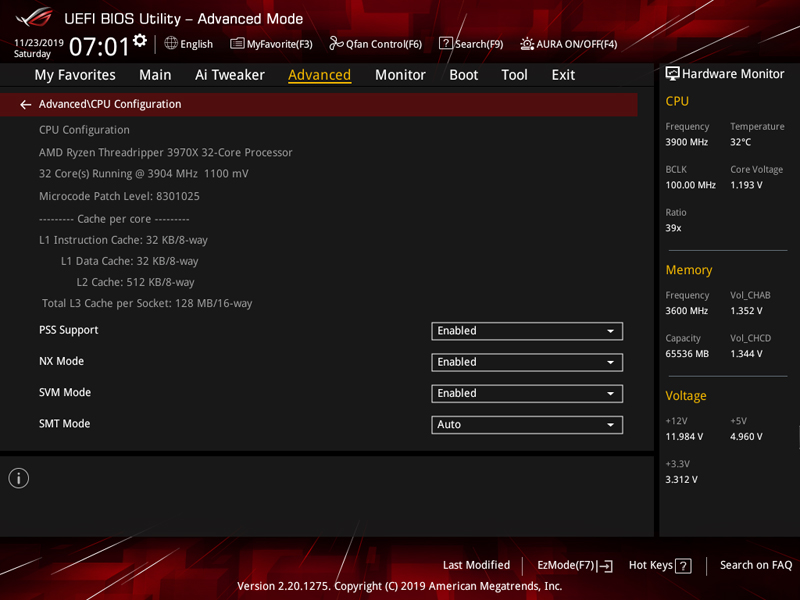

The Advanced Tab shows more advanced system functions.

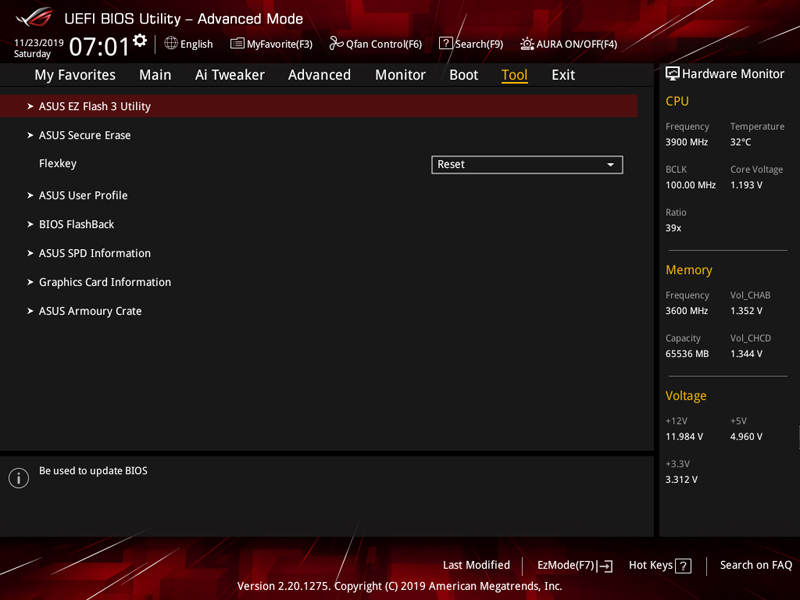

The last screen is the Tool Tab.

Overall, this follows the trend for the company’s BIOS, which is good: the similar look and feel helps those who have used ASUS platforms transition between different offerings.

ASUS ROG Zenith II Extreme Motherboard Software

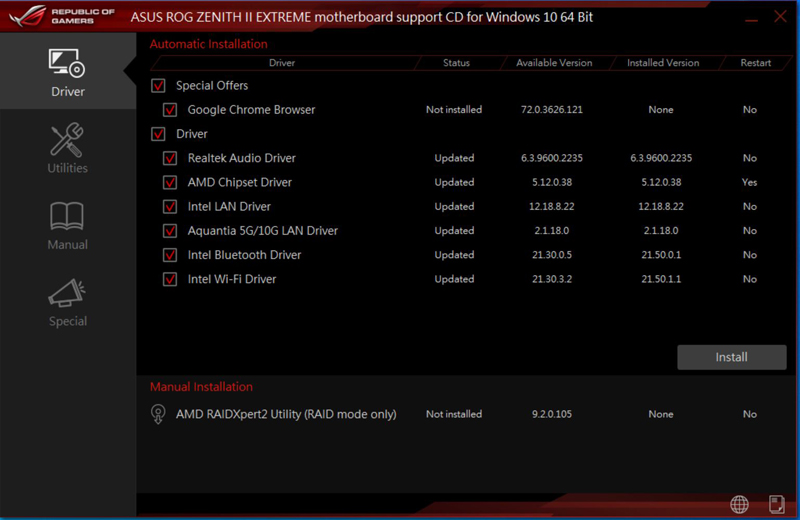

Drivers and Utilities for the ROG Zenith II Extreme Motherboard are found on a USB drive instead of on a CD/DVD. Thank goodness for that update.

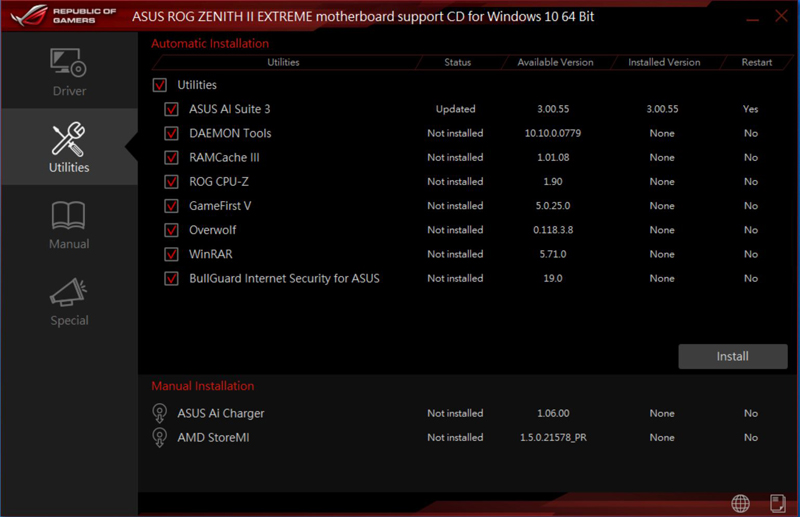

The last tab of interest is the Utilities section which brings up the next window.

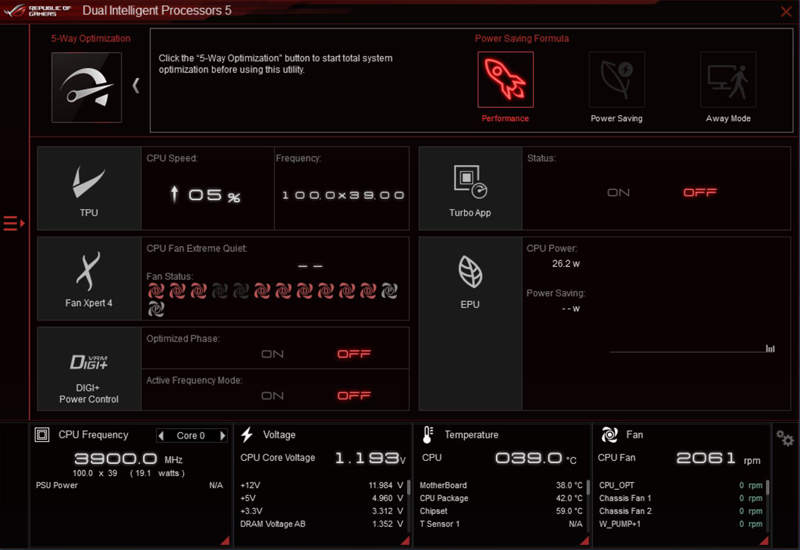

One key piece of software to look at is ASUS AI SUITE, which allows a multitude of system adjustments.

With some motherboards, the software package is less relevant. With this board, we think most users are going to install the ASUS software to take advantage of some of the value-added features of the platform.

ASUS ROG Zenith II Extreme Motherboard RGB

Located on the IO backplate heatsink is the ASUS 1.77” LiveDash, a built-in color OLED panel that displays useful information about the system. In addition, many RGB LEDs and effects light up the ROG Zenith II Extreme. If you like RGB on your motherboards, the ROG Zenith II Extreme has you covered. If you prefer not to use RGB effects, these can be completely turned off in the BIOS. You are paying for features like the OLED panel, so we think this board is best combined with a case that has a translucent side panel.

There is even more ROG Zenith II Extreme RGB goodness on the chipset heatsink.

Many do not like the RGB trend, but this looks very cool.

Next, we are going to do a quick specification check, and commence with our performance benchmarks.

For my use (video & audio editing) I would need at least 6 PCIe slots (2 x GPUs, 40GbE NIC, pro audio card, SDI video card, storage HBA). I also need Thunderbolt 3, or else I’ll have to replace some costly TB3 components with something else. I really, really want to upgrade from my Dual Xeon E5 workstation to Threadripper, but I can’t until somebody makes a motherboard that actually uses all those PCIe lanes for PCIe slots. Seems rather counter-intuitive that we’re seeing 4-slot solutions.

So you have a 40GbE, a HBA and TB3 for storage..

Tyler. what about some of Epyc based solution(s)? For example MZ32-AR0 made by Gigabyte seems to offer your number of slots… Also I can’t quite believe that you need 40GigE and HBA in a memory-constrained environment like TR. Remember it supports only ECC UDIMMs, and since 32GB modules are nowhere to be found, you will be able to put only 128GB RAM there!

I’ve had overheating issues with m.2 SSDs located below a GPU. Do you suppose the “shield” will make thermals better or worse for the m.2’s between the PCIe slots??

I’d agree with others. HBA and 40G is redundant. Your 40G network speed is about the same as the HBA. If you need fast you’ve also got PCIe4 SSDs which will blow any other storage you have out of the water.

2 double-width GPUs for AV? Premiere Pro, AE, and DaVinci at least really use 1 GPU at most?

Quadro or Titan RTX in the first slot, SDI, Audio, 40G, and you’re still short a card for TB3.

“you have a 40GbE, a HBA and TB3 for storage”

Yes and it’s not that uncommon. 40GbE connects to the NAS which has all the bulk storage; 10GbE isn’t enough to keep up with 4K EXR files, etc. used for VFX or final masters. The HBA is for connecting to internal U.2 NVMe drives striped in RAID0 that serve as local cache, because 40GbE isn’t reliable enough to do guaranteed realtime playback of 4K EXR files, etc., so for hero systems we need to cache locally. TB3 is because clients often bring bulk storage on TB3 external RAIDs that we want to quickly connect and ingest files from. The workaround is that we could have an ingest station do this, but it adds another step.

We also use TB3 for KVM extension as it is much more full-featured and affordable than other solutions. Some of our pro audio devices are also wanting a TB connection and the alternatives are either less featured and/or more expensive. So far I haven’t seen TB3 with Epyc/Threadripper.

“Tyler. what about some of Epyc based solution(s)? For example MZ32-AR0”

That’s an interesting board because we could probably use the 4 x “SlimSAS” connectors to control our U.2 cache drives, and the OCP for 40GbE, leaving plenty of PCI slots left over.

“I can’t quite believe that you need 40GigE”

“HBA and 40G is redundant…40G network speed is about the same as the HBA. If you need fast you’ve also got PCIe4 SSDs which will blow any other storage you have out of the water.”

I explained in another reply, but yes we need both because 40GbE is not reliable for realtime performance in video/VFX at the datarates we are working with. One uncompressed stream of 4K EXR files is 1.3GBps (gigaBYTES). Now imagine you’re typically using 2-4 streams at a time. The math will tell you that 40GbE could maybe be enough, but in the real world you’ll run up against protocol limitations and high CPU usage. Getting even one stream is tough with most protocol stacks.
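The arithmetic in this comment can be sketched quickly. The snippet below is only a back-of-the-envelope check, not a benchmark: the 1.3 GB/s per-stream figure and the 2-4 stream range come from the comment, 40 Gbit/s is the nominal 40GbE line rate, and the `efficiency` parameter (including the 0.8 value) is a hypothetical stand-in for protocol and CPU overhead rather than a measured number.

```python
# Back-of-the-envelope check: uncompressed 4K EXR streams vs. a 40GbE link.
STREAM_GBYTES_PER_S = 1.3   # per-stream rate quoted in the comment (gigabytes/s)
LINK_GBITS_PER_S = 40.0     # nominal 40GbE line rate (gigabits/s)


def link_capacity_gbytes(gbits_per_s: float, efficiency: float = 1.0) -> float:
    """Convert a line rate in Gbit/s to GB/s, scaled by an assumed efficiency."""
    return gbits_per_s / 8 * efficiency


def utilization(streams: int, efficiency: float = 1.0) -> float:
    """Fraction of usable link capacity consumed by `streams` concurrent streams."""
    capacity = link_capacity_gbytes(LINK_GBITS_PER_S, efficiency)
    return streams * STREAM_GBYTES_PER_S / capacity


if __name__ == "__main__":
    # 1.0 = ideal line rate; 0.8 = hypothetical overhead, not a measurement.
    for eff in (1.0, 0.8):
        for n in (1, 2, 3, 4):
            print(f"efficiency={eff:.0%} streams={n} "
                  f"utilization={utilization(n, eff):.0%}")
```

Even at the ideal line rate, four streams (5.2 GB/s) exceed the link's 5 GB/s, and three streams leave little headroom once any overhead is assumed, which is consistent with the case made above for a local cache.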

PCIe SSDs are what we’re using, but U.2 variants because we need about 40TB local cache, which you can’t get in M.2 form factor. I suppose we could try connecting U.2 drives with adapter cables to the M.2 slots. In theory it should work…

“2 double-width GPUs for AV? Premiere Pro, AE, and DaVinci at least really use 1 GPU at most?”

The two main apps we use that leverage this level of hardware are DaVinci Resolve (color grading) and V-Ray Next (3D rendering). While Premiere and After Effects don’t leverage multiple GPUs effectively, Resolve very much does and most Hollywood shops using it have at least two GPUs if not 4 or even 8. V-Ray Next scales almost linearly, so two GPUs is barely scratching the surface (a render node would usually have 8 GPUs but that is a different build than this).

I point all this out because the article is about a workstation board, not a server board, and we need lots of slots.

Then your only solution is to go EPYC and TB3 with a cheap hook up station with a 40G Ethernet card.

I bet you could use an adapter on each side of the DIMM.2 to get yourself 2 U.2 ports.

Well, hopefully Supermicro or Tyan or somebody comes out with a 6-slot Threadripper 2 mainboard, and bonus if there’s a TB3 option that works with it. I can wait a few months.

If Asus would make a 7 slot Sage series board with 4 u.2 I’d buy that so hard.

Can somebody tell me please why this is being marketed as a gaming motherboard?

Okay, okay, no need to answer. Back in the day I was playing Crysis on an HP Z800 with two Quadro 5800 (I don’t recall the CPU it was using, but it was obviously some Xeon variant). Why HP did not market the Z800 as a “gaming PC” is a mystery… Also, I heard that physical exercises are beneficial to health, so let’s do some eye-rolling for about 15 minutes.

@Madness

I think they are positioning it more as an enthusiast board. Threadripper is kind of in this weird limbo between gaming and workstation segments though. It excels at workstation workloads more than gaming, yet all of the boards available are just hopped up gamer boards. And then on the EPYC end of things there are so few boards at all, and the ones that ARE out there are server oriented.

@Tyler: I would wait a month for TRX80; Those will be the more workstation orientated systems.

TRX40 seems to target more the game streamers, enthusiasts and less the professional work station crowd like you are.

It is a bit sad that AMD did not provide a clear roadmap on Threadripper V3. And the information on TRX80 is still only rumors or educated guesses. But by now they seem to be right, so wait until AMD reveals TRX80 in January (the current likely launch/paper launch).

Thx for the advice Steven, I think I’ll do that.

I also wish we’d see some TR3 workstations from HP, Supermicro, Dell, etc. We’ll build our own if we have to, though.

I’m hoping a mobo manufacturer will use pcie 4 ->3 switches on TR boards to go from 8x 4.0 to 16x 3.0 so they can put 4+ non-over-subscribed 16x 3.0 slots on a board with plenty of lanes left over for other IO. Would make for a great DL workstation.

Ryan, I second that! Although I’d be happy with a PCIe expansion chassis that broke out the lanes as well. Something that takes your x16 4.0 and gives you 4 x x8 3.0 slots would be so nice.
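The lane math behind these two comments can be checked with a short sketch. It assumes only the published per-lane signalling rates (8 GT/s for PCIe 3.0, 16 GT/s for PCIe 4.0) and the 128b/130b encoding both generations use; packet and protocol overhead is ignored, so real-world throughput would be somewhat lower. The function name `pcie_gbytes_per_s` is illustrative.

```python
import math

# Per-lane signalling rate in giga-transfers per second, by PCIe generation.
TRANSFER_RATE_GT_S = {3: 8.0, 4: 16.0}
# Both generations use 128b/130b line encoding (128 payload bits per 130 sent).
ENCODING_EFFICIENCY = 128 / 130


def pcie_gbytes_per_s(gen: int, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, ignoring packet overhead."""
    return TRANSFER_RATE_GT_S[gen] * ENCODING_EFFICIENCY / 8 * lanes


if __name__ == "__main__":
    x8_gen4 = pcie_gbytes_per_s(4, 8)
    x16_gen3 = pcie_gbytes_per_s(3, 16)
    print(f"x8 4.0  = {x8_gen4:.2f} GB/s")
    print(f"x16 3.0 = {x16_gen3:.2f} GB/s")
    print("x8 4.0 feeds a full x16 3.0 slot:", math.isclose(x8_gen4, x16_gen3))

    # Expansion-chassis case: one x16 4.0 uplink split into four x8 3.0 slots.
    uplink = pcie_gbytes_per_s(4, 16)
    downstream = 4 * pcie_gbytes_per_s(3, 8)
    print("x16 4.0 covers 4 x x8 3.0:", math.isclose(uplink, downstream))
```

On these assumptions an x8 4.0 uplink carries the same raw bandwidth as an x16 3.0 slot, so a switch in that configuration would not oversubscribe the slot, and an x16 4.0 uplink matches four x8 3.0 slots.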

Anyone got a good explanation of why TR needs a chipset and EPYC does not? Was it mostly for moving some power usage off chip? Anyone know if it is the same I/O chiplet in both?

@STH

Can you kindly let me know if this motherboard can support an EPYC CPU, even if it is not listed on the ASUS website?

I don’t need all those cores but I do need the GPU slots that AM4 doesn’t offer, so I’d be more than glad to drop in an 8-core, 500-dollar EPYC CPU, and keep the extra cash to buy DDR5 systems this summer, as these DDR4 motherboards and CPUs are end of life.