ASUS ESC8000A-E13P Power Consumption

In terms of power, our system had four 3.2kW Delta power supplies rated for 80 PLUS Titanium efficiency.

With four power supplies we have a 3+1 redundant configuration.
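As a rough sketch of what 3+1 means for usable capacity: with one supply held in reserve, the system should be able to lose any single supply and still run on the output of the remaining three. The helper `usable_capacity_w` below is hypothetical. It also assumes each supply delivers its full 3.2kW rating, which for supplies in this class may only hold at high-line (200-240V) AC input.

```python
def usable_capacity_w(psu_count: int, psu_rating_w: float, redundant: int) -> float:
    """Output still available after `redundant` supplies fail (N+M redundancy)."""
    if not 0 <= redundant < psu_count:
        raise ValueError("redundant supplies must be fewer than installed supplies")
    return (psu_count - redundant) * psu_rating_w


installed_w = 4 * 3200.0  # four 3.2kW supplies, assuming full rated output each
usable_w = usable_capacity_w(4, 3200.0, redundant=1)  # 3+1: one supply in reserve
print(f"installed: {installed_w / 1000:.1f} kW, usable with 3+1: {usable_w / 1000:.1f} kW")
```

Under this assumption, a 3+1 configuration keeps 9.6kW available even with one supply out. That is the figure to compare against the system's draw, rather than the 12.8kW installed total.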

This is one of those systems whose power consumption can vary wildly with the configuration. We can hit 5kW in a configuration like this without an issue. The system also supports the NVIDIA H100 NVL and H200 NVL along with 2-way and 4-way NVLink bridges, so our configuration is not the highest-power option. Different CPUs and NICs can also have a big impact. Still, this is a far cry from the SXM systems in terms of power.
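To see why the configuration matters so much, a simple additive power budget helps. This is a minimal sketch, not STH's measurement method. The 350 W GPU figure matches the L40S's rated maximum. The 500 W CPU TDP, the 800 W allowance for memory, NICs, drives, and fans, and the 600 W figure for a higher-power GPU are all assumptions chosen for illustration. Real draw also depends on the workload, so measured numbers like the 5kW above can differ from a sum of ratings.

```python
from dataclasses import dataclass


@dataclass
class Component:
    name: str
    watts: float  # assumed maximum draw per unit, not a measured value
    count: int


def budget_w(parts: list[Component]) -> float:
    """Sum of per-unit maximum draw times count: a rough upper estimate."""
    return sum(p.watts * p.count for p in parts)


# Hypothetical configuration; only the 350 W GPU figure is a published rating.
config = [
    Component("GPU, L40S (350 W max)", 350.0, 8),
    Component("CPU (assumed 500 W TDP)", 500.0, 2),
    Component("memory, NICs, drives, fans (assumed)", 800.0, 1),
]

# Same system with a hypothetical 600 W-class GPU swapped in.
high = [Component("GPU (assumed 600 W class)", 600.0, 8)] + config[1:]

usable_3_plus_1 = 3 * 3200.0  # three of the four 3.2kW supplies carry the load

for label, parts in (("L40S config", config), ("higher-power GPUs", high)):
    total = budget_w(parts)
    print(f"{label}: {total:.0f} W, {total / usable_3_plus_1:.0%} of 3+1 capacity")
```

Swapping only the GPU line moves the estimate by 2kW here. This is why the same chassis can land at very different power levels depending on what goes into it.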

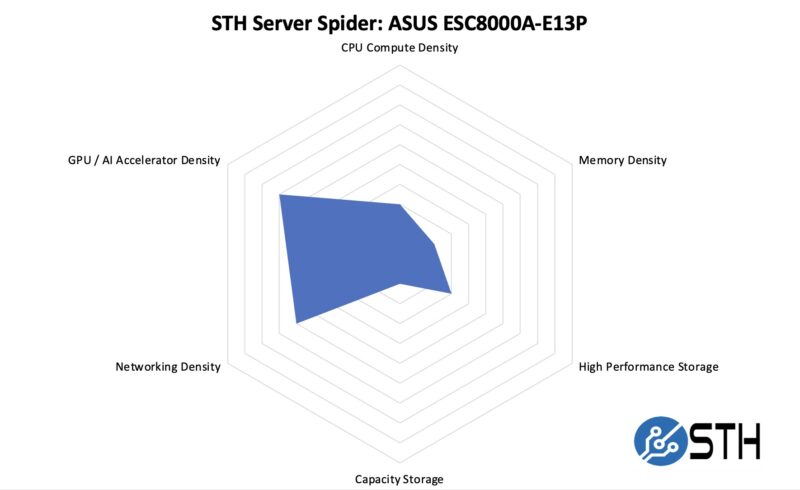

STH Server Spider: ASUS ESC8000A-E13P

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters that a server is targeted at.

This is a server mostly focused on GPU compute; however, there are still plenty of slots for high-speed networking. We also get eight NVMe SSDs, which is more than some other systems offer. Overall, this is a well-optimized GPU and AI platform.

Final Words

All told, the ASUS ESC8000A-E13P is a really neat system. With high-power accelerated systems using so much power these days, there is a need for PCIe GPU systems to fill a gap for lower-power environments. There is also a need for them in applications where organizations want not just an AI machine, but also GPUs to accelerate rendering, VDI, and other workloads.

The L40S is a neat GPU for many. It is an AI-focused version of a GPU that was also aimed at rendering, and NVIDIA has recommended it for those deploying GPUs only in small numbers. Many of the high-end AI GPUs have been shedding features that take away from the GPU mission, so for those running mixed environments, other options from the Hopper and Blackwell generations can make sense.

It is always cool looking at these 8x GPU systems. This was the first time that we had both 384 cores and 384GB of VRAM in a system, a neat milestone made possible by the new generations of processors.
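The milestone breaks down neatly per device. Here is a quick sketch, assuming a dual-socket configuration with identical CPUs and eight GPUs with equal memory (the text above gives only the totals):

```python
GPUS = 8
TOTAL_VRAM_GB = 384
SOCKETS = 2  # assumption: two identical CPUs
TOTAL_CORES = 384

vram_per_gpu_gb = TOTAL_VRAM_GB // GPUS          # 48 GB, matching the L40S's 48GB
cores_per_socket = TOTAL_CORES // SOCKETS        # cores per CPU under the assumption
vram_per_core_gb = TOTAL_VRAM_GB / TOTAL_CORES   # GPU memory per CPU core

print(f"{vram_per_gpu_gb} GB per GPU, {cores_per_socket} cores per CPU, "
      f"{vram_per_core_gb:.1f} GB VRAM per core")
```

The matching totals work out to one gigabyte of GPU memory per CPU core.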

I like this one with the PCIe switches better because it has more slots for NICs. I’m not totally behind having just 32 PCIe Gen 5 lanes back to the CPU, though.
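For a sense of scale on that x32 uplink, here is a rough per-direction bandwidth estimate. It is a sketch that accounts only for PCIe's 128b/130b line coding and ignores packet and protocol overhead, so real throughput is lower. The downstream device mix (four x16 GPUs and two x16 NICs behind one x32 uplink) is hypothetical and not taken from the ASUS block diagram.

```python
GT_PER_S = {3: 8.0, 4: 16.0, 5: 32.0}  # transfer rate per lane, PCIe Gen 3/4/5
ENCODING = 128 / 130                   # 128b/130b line coding (Gen 3 and later)


def link_gb_per_s(gen: int, lanes: int) -> float:
    """Per-direction bandwidth in GB/s after line coding, ignoring packet overhead."""
    return GT_PER_S[gen] * ENCODING * lanes / 8  # bits -> bytes


def oversubscription(downstream_lanes: int, upstream_lanes: int) -> float:
    """Ratio of lanes behind a switch to lanes feeding it from the CPU."""
    return downstream_lanes / upstream_lanes


uplink = link_gb_per_s(5, 32)
# Hypothetical downstream load: four x16 GPUs plus two x16 NICs.
downstream = 4 * 16 + 2 * 16
print(f"x32 Gen5 uplink: {uplink:.1f} GB/s per direction")
print(f"oversubscription: {oversubscription(downstream, 32):.1f}:1")
```

Oversubscription is not automatically a problem, since peer-to-peer traffic between devices behind the same switch can be routed within the switch without crossing the uplink.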

There is a flaw in the block diagram: the DIMMs run from the PCIe switches instead of the CPUs.

I assume this is not a CXL solution (12 DIMMs through 32 lanes of PCIe Gen5) ;-)