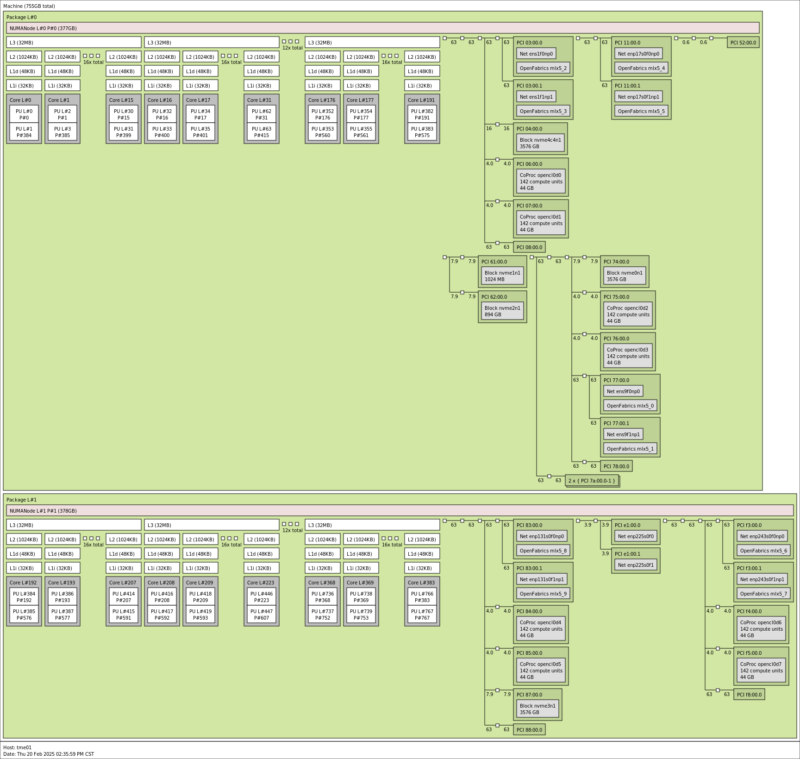

ASUS ESC8000A-E13P Topology

For a big AI server, this was a robust configuration, and it makes for an interesting topology:

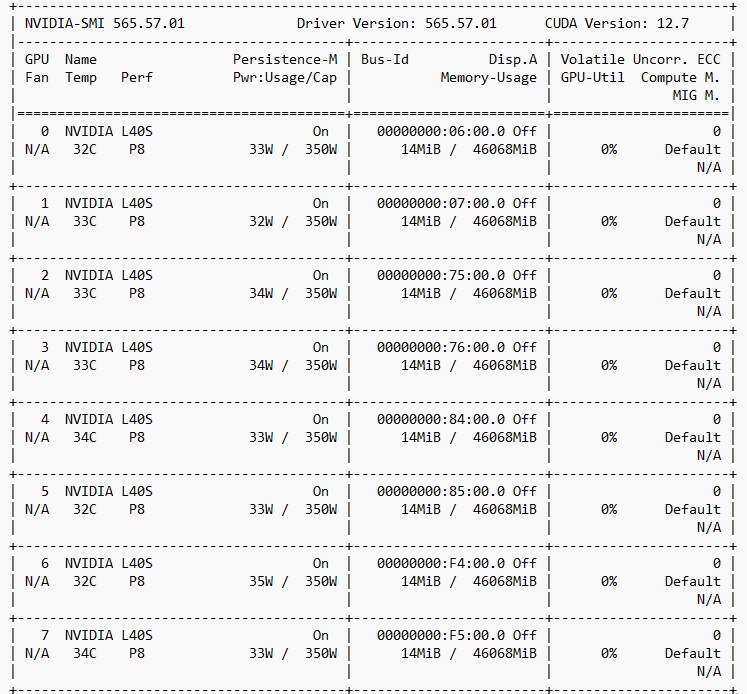

With so many NICs and NVIDIA L40S GPUs, that looks like a big topology.

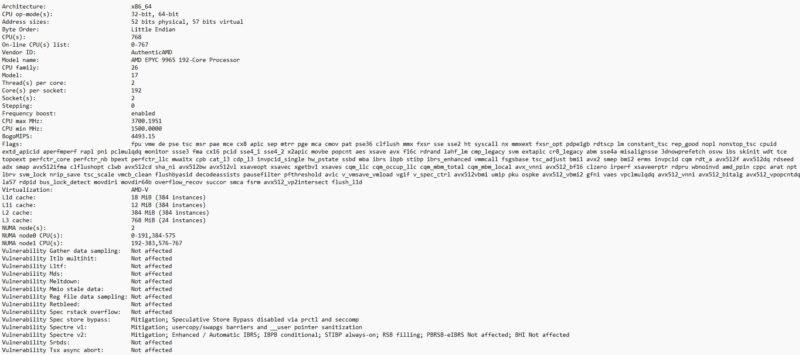

We also have two AMD EPYC 9965 CPUs, each with 192 cores and 384 threads.

As a fun aside, we have 384 physical cores across the two CPUs and 384GB of VRAM across the L40S GPUs.

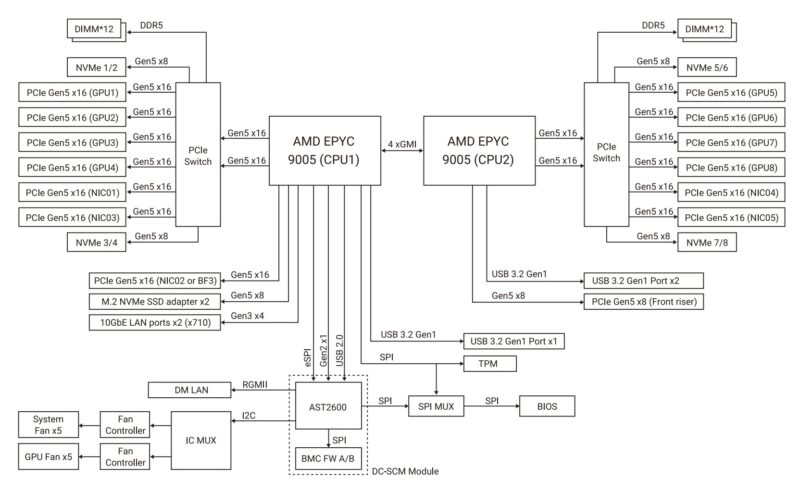

Here is the block diagram:

Here we can see the PCIe switches that give the system a lot more lanes for devices. They also help when you want peer-to-peer traffic between the GPUs to go over the PCIe switch.
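If you want to see which GPU pairs report peer-to-peer access on a system like this, a minimal sketch might look like the following. It assumes PyTorch with CUDA support is installed, which is our assumption and not something used in this review. The helper names `peer_matrix` and `pairs_without_p2p` are hypothetical. What the driver reports will depend on the platform and its configuration, so treat the output as a starting point rather than a statement about this server.

```python
from typing import Callable, List, Tuple


def peer_matrix(num_gpus: int, can_access: Callable[[int, int], bool]) -> List[List[bool]]:
    """Build matrix[i][j], True when GPU i reports direct access to GPU j."""
    return [
        [i != j and bool(can_access(i, j)) for j in range(num_gpus)]
        for i in range(num_gpus)
    ]


def pairs_without_p2p(matrix: List[List[bool]]) -> List[Tuple[int, int]]:
    """List ordered (i, j) pairs of distinct GPUs that do not report P2P access."""
    n = len(matrix)
    return [(i, j) for i in range(n) for j in range(n) if i != j and not matrix[i][j]]


if __name__ == "__main__":
    try:
        import torch  # assumption: PyTorch is available on the test system
    except ImportError:
        torch = None

    if torch is not None and torch.cuda.is_available():
        count = torch.cuda.device_count()
        matrix = peer_matrix(count, torch.cuda.can_device_access_peer)
        missing = pairs_without_p2p(matrix)
        print(f"{count} GPUs found, {len(missing)} ordered pairs without P2P")
        for src, dst in missing:
            print(f"  GPU{src} -> GPU{dst}")
    else:
        print("PyTorch with CUDA is not available; nothing to query.")
```

The query function is passed in as a parameter so the same logic can be exercised without GPUs, for example with a stand-in callable.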

Management

In terms of management, we have the ASUS ASMB12 solution which gives us our out-of-band management and IPMI. It is powered by an ASPEED AST2600 BMC.

We are not going to go into this one too deeply as it is ASUS’s standard management interface and there are so many unique things in this system.

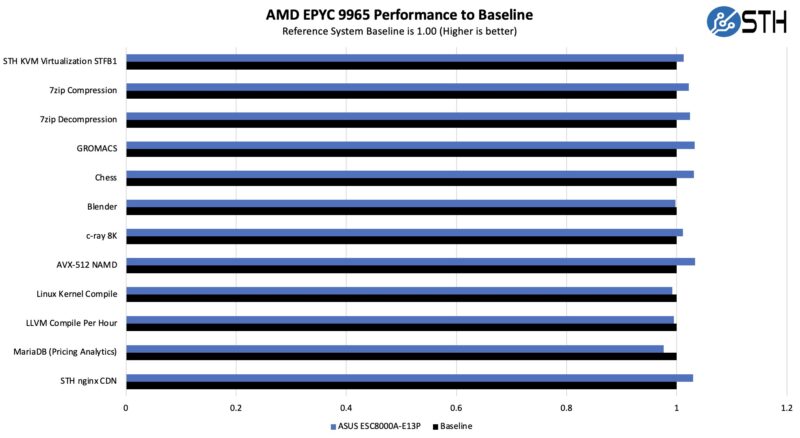

ASUS ESC8000A-E13P Performance

With systems like these, performance generally comes down to cooling the big components. We had the 192-core, 384-thread AMD EPYC 9965 CPUs installed, and the cooling was excellent with those front fans.

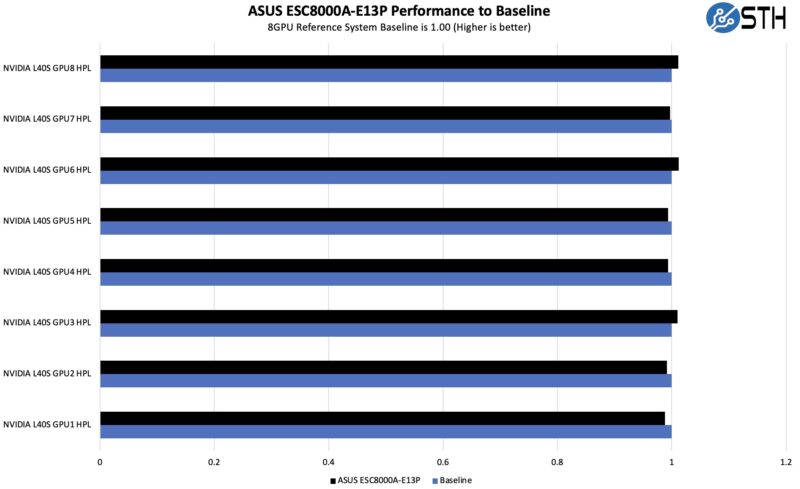

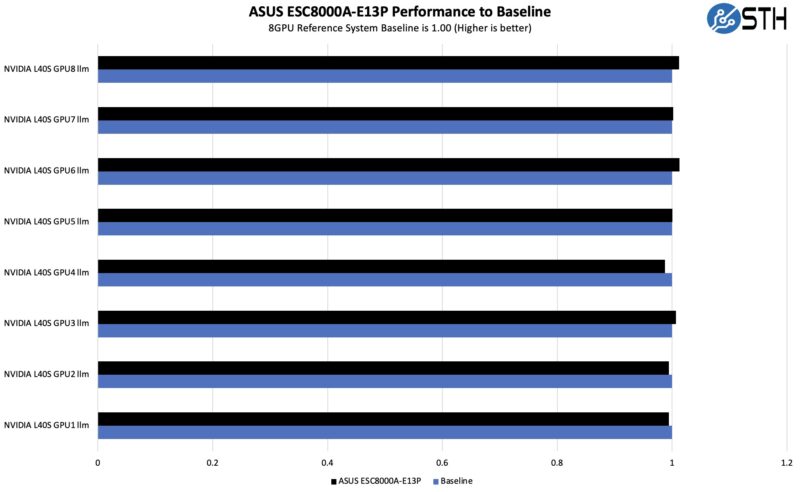

We also wanted to see whether the L40S GPUs were being cooled evenly across all of the slots, so we ran HPL on the GPUs. Over the years, we have seen 8-GPU systems where 1-2 GPUs (usually at the center or on the sides) run hot and shed performance.

That was not the case here. We also ran llama3.3 70B across the GPUs.

Overall, the results were fairly similar across the GPUs and within our margin of error. We can say the ESC8000A-E13P is doing a good job cooling the GPUs. We will just mention that there are hotter GPUs out there.
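As a rough illustration of that kind of check, here is a small sketch that flags any GPU whose benchmark score falls more than a chosen tolerance below the median of the group. The function name `flag_slow_gpus`, the 5% tolerance, and the scores in the usage example are all hypothetical placeholders, not our measurements or our actual methodology.

```python
from statistics import median
from typing import List, Sequence


def flag_slow_gpus(scores: Sequence[float], tolerance: float = 0.05) -> List[int]:
    """Return indices of GPUs scoring more than `tolerance` below the median."""
    if not scores:
        return []
    cutoff = median(scores) * (1.0 - tolerance)
    return [index for index, score in enumerate(scores) if score < cutoff]


if __name__ == "__main__":
    # Made-up placeholder scores for eight GPUs, one per slot.
    example_scores = [100.0, 100.0, 100.0, 90.0, 100.0, 100.0, 100.0, 100.0]
    print("GPUs below cutoff:", flag_slow_gpus(example_scores))
```

Using the median as the baseline keeps one or two throttling cards from dragging down the reference point, which is the scenario this check is meant to catch.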

Next, let us get to the power consumption.

I like this one with the PCIe switches better because it has more slots for NICs. I’m not totally sold on having just 32 Gen 5.0 lanes back to the CPU.

There is a flaw in the block diagram: the DIMMs run from the PCIe switches instead of the CPUs.

I assume this is not a CXL solution (12 DIMMs through 32 lanes PCIe Gen5) ;-)