ASUS ESC4000A-E11 Power Consumption

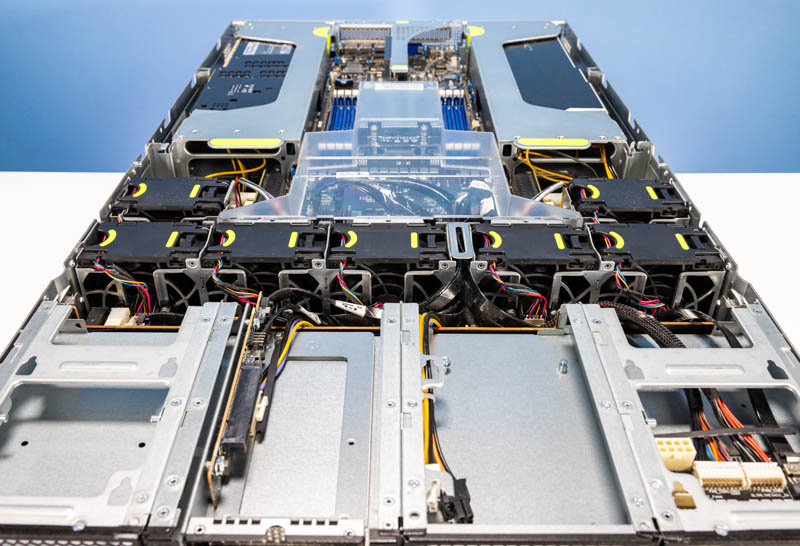

The power supplies for this server are 1.6kW 80Plus Platinum units. Again, they come with an extender attachment to help increase the depth.

At idle, our system was using about 0.13kW with the AMD EPYC 7763 and 8x 32GB DDR4-3200 DIMMs. Our maximum power consumption was just over 0.9kW with the two MI210s installed. The GPU configuration is going to be a major factor in the overall power consumption, and if we had four of these big 300W TDP GPUs, we would likely opt for the 2.2kW power supplies instead.
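To make the power supply reasoning concrete, here is a rough back-of-the-envelope sketch in Python. It uses only the approximate figures quoted above and assumes, purely for illustration, that each GPU beyond the two we tested adds its full 300W TDP to peak draw. The function names are hypothetical, and real consumption will vary with workload, fan speed, and PSU efficiency.

```python
# Rough power-budget sketch. All inputs are approximate figures from the review;
# the linear "one TDP per extra GPU" extrapolation is an assumption, not a measurement.
IDLE_W = 130             # measured idle, about 0.13kW
PEAK_TWO_GPUS_W = 900    # measured peak with two MI210s, "just over 0.9kW"
GPU_TDP_W = 300          # per-GPU TDP cited in the review
PSU_OPTIONS_W = (1600, 2200)


def estimate_peak_w(gpu_count: int) -> int:
    """Extrapolate peak draw by adding one TDP per GPU beyond the two tested."""
    extra_gpus = max(gpu_count - 2, 0)
    return PEAK_TWO_GPUS_W + extra_gpus * GPU_TDP_W


def headroom(psu_w: int, load_w: int) -> float:
    """Fraction of a single PSU's rated capacity left unused at the given load."""
    return 1 - load_w / psu_w


if __name__ == "__main__":
    est = estimate_peak_w(4)
    for psu in PSU_OPTIONS_W:
        print(f"{psu}W PSU: estimated load {est}W, headroom {headroom(psu, est):.1%}")
```

Under these assumptions, a four-GPU configuration lands around 1.5kW, which leaves only about 6% headroom on a 1.6kW supply. That is consistent with preferring the 2.2kW option for such a build.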

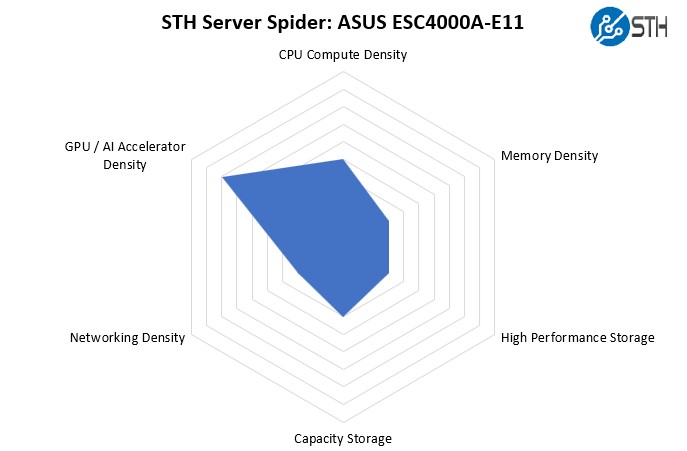

STH Server Spider: ASUS ESC4000A-E11

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to start giving a quick visual depiction of the types of parameters that a server is targeted at.

This is a very interesting system. By packing either four or eight GPUs in 2U, it is one of the denser GPU compute platforms one can get. It may not offer the maximum number of 2.5″ NVMe bays, but there is room for both capacity 3.5″ and 2.5″ NVMe storage. Balancing this server, we have up to 64 cores from the AMD EPYC 7003 processor but only eight DIMM slots, not a full sixteen, so this is not the densest CPU compute or memory system out there.

A Word on the AMD Instinct MI210

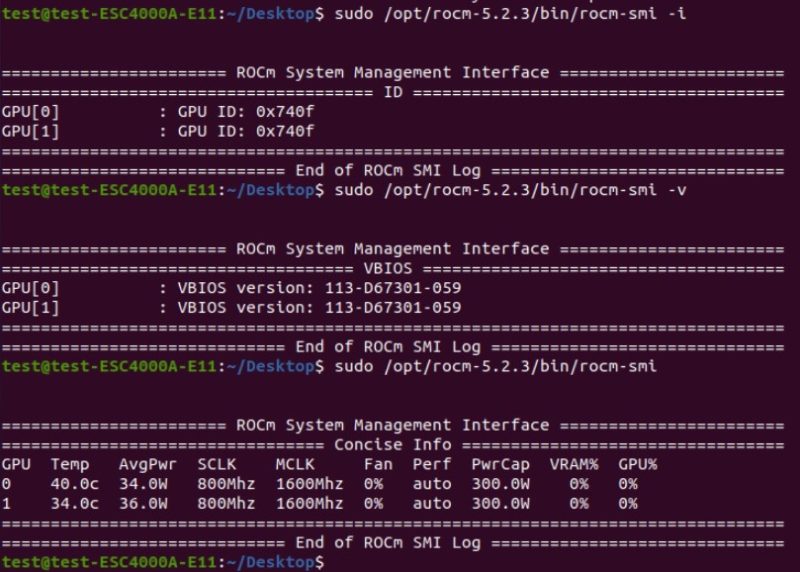

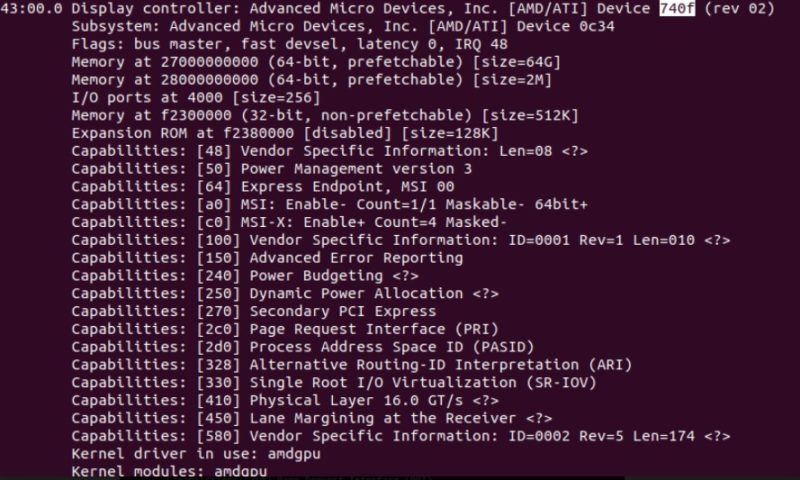

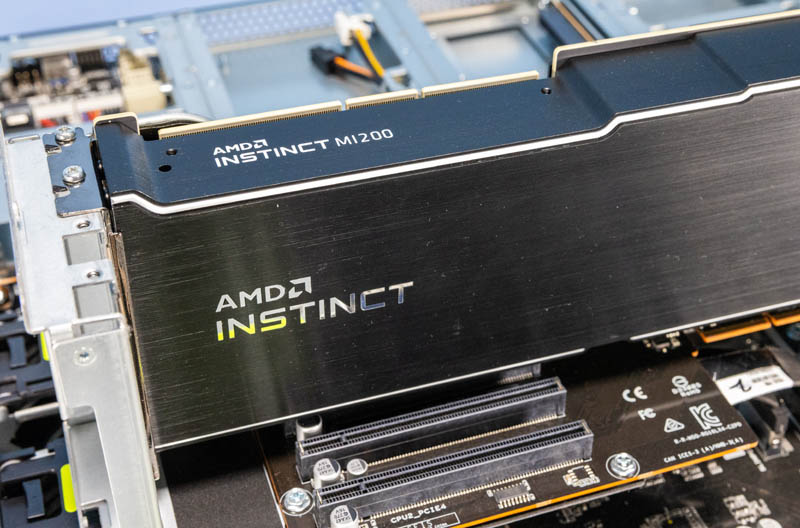

The AMD Instinct MI210 is the challenger in the GPU space. AMD has been enjoying a lot of success with its MI200 architectures in HPC, largely due to the Frontier win. At the same time, there is still a gap between AMD and NVIDIA’s solutions.

A great example of this is that as we were configuring the system, we hit a known bug with the MI210 that required a workaround just to get the card to a usable state. These are little things, but they are examples of where NVIDIA’s market share and maturity are noticeable. Still, we wanted to document this in the event someone tries using this combination on this machine.

Overall though, the ROCm experience is getting much better. A few years ago, ROCm installations were challenging and delicate. Now, not only is installing and using the cards much better, but the number of applications ROCm accelerates has vastly increased.

NVIDIA still has an enormous software engineering team optimizing in many important domains, but using the AMD MI210 is much easier than the MI100 when it came out. One of the big advantages of AMD winning two Exascale supercomputer installations is that there is a lot of investment now in ROCm and getting applications to run decently well on the Instinct MI200 cards. Here is a great resource for that.

We also want to note quickly that we are glad to see AMD also focusing on supporting some of its consumer GPUs with ROCm and adding new features like matrix cores to the 7900XT/7900XTX. We hope AMD continues to focus on this development angle since both NVIDIA and Intel are building features and software stacks to allow development on consumer GPUs and then applications to be run on data center GPUs like the MI210s we had in this ASUS ESC4000A-E11.

Final Words

Overall, the ASUS ESC4000A-E11 is a really interesting server. Having a single CPU with up to 64 cores powering multiple GPUs in a 2U form factor is a powerful platform. ASUS has a really innovative design with the ESC4000A-E11.

One of the most interesting parts of this server was also getting to test an AMD Instinct MI200 card and seeing just how much of a Linpack beast it is. These cards offer amazing performance in a 300W PCIe envelope.

There are many different form factors for AI training servers. Some use OAM/SXM. Others use several GPUs along with two CPUs. For those that want either 4x high-end GPUs or FPGAs, or 8x inference-class GPUs, while minimizing the CPU overhead, this is a really interesting platform. Many of our readers will see eight full-height expansion slots in the rear, two low-profile PCIe Gen4 x16 rear slots, and one or two front expansion card options and immediately think of storage or networking applications as well. We can see how the ASUS ESC4000A-E11 might be attractive for many different use cases. That is what makes this server so intriguing.

What is the SD card slot for?

@Matt H.

That’s for the Hypervisors

It’s a bit unusual to see last-gen servers getting reviewed. Is there a concrete reason why the successor to this model, the E12, wasn’t offered for review? It is already available and comes with Epyc Genoa support.

Hm… Nvidia H100 is PCIe Gen5. What about a config with a current AMD CPU that supports Gen5 I/O?

Tomas – We have a Gen5 AMD EPYC Genoa server with video going live in a few hours.

It’s a fantastic server, but you have to know what’s missing too:

1. There is no PCIe bifurcation option. You can install only one M.2 SSD on board, or four M.2 SSDs if you choose not to have a RAID controller.

2. You can’t do RAID in the default configuration; there is nothing onboard to do that. You have to install a RAID controller.

3. There is no SATA M.2 on board.

4. The SD card is used for BMC logs only. You have to use some unsupported configurations to boot from the SD card.

5. There is no USB port inside the server.

6. You can’t do RAID with U.2 disks.

7. If you install a RAID controller, you have only 2x U.2 disks.