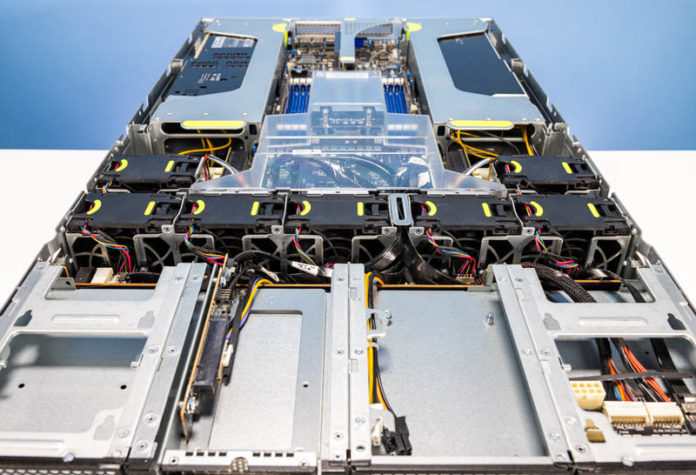

The ASUS ESC4000A-E11 is a single-socket AMD EPYC server capable of handling up to four double-width GPUs like the AMD Instinct MI210, NVIDIA A100, NVIDIA H100, or the Intel Data Center GPU Max 1100. One of the most intriguing parts of the AMD EPYC series has been its PCIe implementation and the sheer number of lanes one gets in a single socket. Today, we will look at the server along with AMD Instinct MI210 GPUs to see what it offers.

ASUS ESC4000A-E11 External Hardware Overview

The ASUS ESC4000A-E11 is a 2U server, but it is a highly optimized one. The chassis is only around 800mm / 31.5in deep by official specs, meaning it can fit in many racks. That is important since the purpose of this system is to get a lot of GPU density into 2U of rack space.

The front eight 3.5″ bays are interesting. All eight can be SATA or, optionally, SAS. The left four can also be NVMe, which is something we utilized. Each 3.5″ drive tray is toolless for hard drives, while 2.5″ drives can be mounted using screws to secure them. This is a fairly good solution for organizations that want both fast NVMe storage and high-capacity storage.

The top left has an interesting feature. There are four USB 3 ports. Typically, we see at most two on the front of a server, so this is a bit different. There is also the company’s QLED function to help see POST codes. We would have liked to see a VGA port in front so one could hook a cold aisle KVM cart to it if needed for troubleshooting. When GPU servers are racked, standing behind them on the hot aisle is usually loud and abrasive, so a VGA port would be great here.

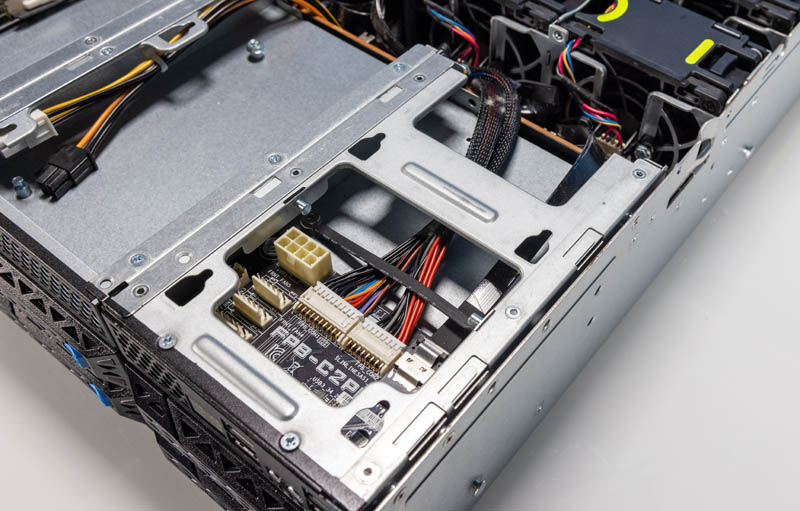

The fun part about this area is that it has a board with power and fan headers as well.

The middle of the chassis is also interesting. We have a PCIe Gen4 x8 slot in the review unit, but there are other options. For example, one can get an OCP NIC 3.0 slot or an M.2 setup added in this front area. Those are configuration options.

On the top right, we have an area for a 2.5″ drive.

While the top of the chassis above the drives may look boring at first, there are actually a lot of options. ASUS is likely doing this in order to provide more airflow so that four 3.5″ drives do not block all of the chassis airflow.

The rear is a bit simpler. The main features are the GPU/FPGA/accelerator slots on either side. We will look at those during our internal overview.

In the center, we get a stack with expansion slots, rear I/O, and power supplies. The rear I/O consists of two USB 3 ports, a VGA port, an IPMI/Redfish management port, and two 1GbE ports powered by an Intel i350-AM2 NIC.

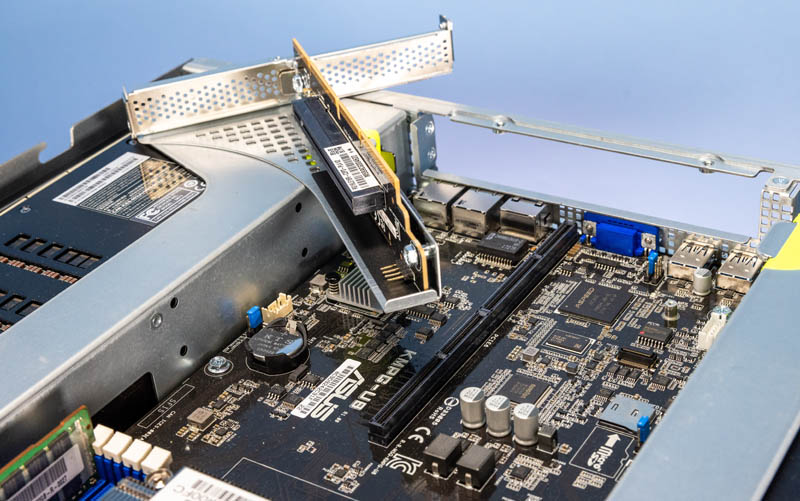

The expansion slots are both low-profile PCIe Gen4 x16 slots that are found on a single riser. This riser is unlike the GPU risers in that it is not toolless. One needs to remove two screws to get the riser out.

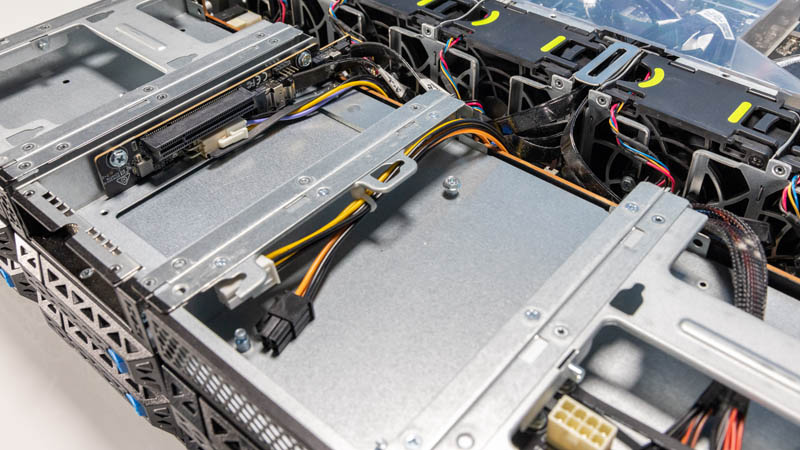
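Since the lane count of a single EPYC socket is a big part of the appeal here, it can help to tally the slots and bays described so far against the 128 PCIe Gen4 lanes that a single-socket EPYC 7002/7003 processor exposes. The following is only a rough sketch: the x16 width for each GPU slot and the x4 width for each NVMe bay are assumptions rather than figures from this review, and onboard devices such as the BMC and the 1GbE NIC are ignored.

```python
# Rough PCIe Gen4 lane tally for the configuration described in this review.
# Widths marked "assumed" are NOT stated in the article; adjust as needed.

CPU_LANES = 128  # PCIe lanes exposed by a single-socket AMD EPYC 7002/7003 CPU

# (description, count, lanes per device)
devices = [
    ("double-width GPU slot (assumed x16)", 4, 16),
    ("rear low-profile slot (x16 per the review)", 2, 16),
    ("front slot (x8 per the review)", 1, 8),
    ("front NVMe bay (assumed x4)", 4, 4),
]


def lanes_used(devs):
    """Sum the lanes consumed by every (description, count, width) entry."""
    return sum(count * width for _, count, width in devs)


used = lanes_used(devices)
spare = CPU_LANES - used

for name, count, width in devices:
    print(f"{count} x {name}: {count * width} lanes")
print(f"total: {used} of {CPU_LANES} lanes, spare: {spare}")
```

Under these assumptions, the listed slots and bays account for most of the socket's lanes, which is consistent with the front-area options being presented as alternatives to each other rather than additions.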

Power is supplied by Chicony 1.6kW 80 PLUS Platinum PSUs. There are also 2.2kW options available. Something different with these is what they are attached to.

The power distribution happens fairly deep inside the chassis, so each otherwise common power supply has an extender board mated to it. That gives it the extra length needed to reach the mating connector on the power distribution board.

With that, let us get inside the system.

What is the SD card slot for?

@Matt H.

That’s for the Hypervisors

It’s a bit unusual to see last-gen servers getting reviewed. Is there a concrete reason why the successor to this model, the E12, wasn’t offered for review? It is already available and comes with Epyc Genoa support.

Hm… the NVIDIA H100 is PCIe Gen5. What about a config with a current AMD CPU that supports Gen5 I/O?

Tomas – We have a Gen5 AMD EPYC Genoa server with video going live in a few hours.

It’s a fantastic server, but you have to know what’s missing too:

1. There is no PCIe bifurcation option. You can install only one M.2 SSD on board, or four M.2 SSDs if you choose not to have a RAID controller.

2. You can’t do RAID in the default configuration; there is nothing onboard to do that. You have to install a RAID controller.

3. There is no SATA M.2 on board.

4. The SD card is used for the BMC log only. You have to do some unsupported configurations to boot from the SD card.

5. There is no USB port inside the server.

6. You can’t do RAID with U.2 disks.

7. If you install a RAID controller, you have only two U.2 disks.