ASUS ESC4000A-E10 Power Consumption

Power consumption of this server is largely dictated by the GPUs and CPU used. Officially, this motherboard supports CPUs up to 280W TDP, but with the chips we had on hand we could only go up to an AMD EPYC 7742 at 240W cTDP (the AMD EPYC 7H12s we have are vendor locked). Still, with four GPUs and a higher-end CPU, we were able to push the server quite a bit.

At idle, our system used about 0.35kW with the four NVIDIA GPUs, an AMD EPYC 7702P, 8x 32GB DIMMs, and 4x Samsung PM1733 3.84TB NVMe SSDs. We were fairly easily able to push the system above 1kW and into the 1.4kW range. As such, this is not a system we would recommend if you want power supply redundancy on 120V power, but on 208-240V power, the 1.6kW PSU rating seems sufficient even with more components than we tested.
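As a rough illustration of the redundancy point, the following Python sketch computes PSU headroom from our measured figures (about 0.35kW at idle and 1.4kW under load), assuming a 1+1 redundant pair in which a single PSU must be able to carry the full load on its own. The 1.6kW figure is the 208-240V rating; the 120V capacity is a hypothetical placeholder, since many 1.6kW-class server PSUs are derated on low-line input, so check the datasheet for the actual unit.

```python
# Measured figures from our testing (watts).
IDLE_W = 350
LOAD_W = 1400

# 1.6kW PSU rating on 208-240V input.
PSU_HIGH_LINE_W = 1600
# HYPOTHETICAL: assumed 120V (low-line) capacity; check the PSU datasheet.
PSU_LOW_LINE_W = 1000


def redundant_headroom(load_w: float, psu_capacity_w: float) -> float:
    """Watts to spare if one PSU of a 1+1 pair must carry the whole load.

    A negative result means a single PSU cannot carry the load, so the
    pair is not actually redundant at that draw.
    """
    return psu_capacity_w - load_w


if __name__ == "__main__":
    supplies = (("208-240V", PSU_HIGH_LINE_W), ("120V (assumed)", PSU_LOW_LINE_W))
    for label, capacity in supplies:
        for load in (IDLE_W, LOAD_W):
            headroom = redundant_headroom(load, capacity)
            status = "redundant" if headroom >= 0 else "NOT redundant"
            print(f"{label}, {load} W load: {headroom:+.0f} W headroom ({status})")
```

Under these assumptions, the 1.4kW load leaves 200W of headroom at 208-240V but would exceed a single PSU at the assumed 1kW low-line rating.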

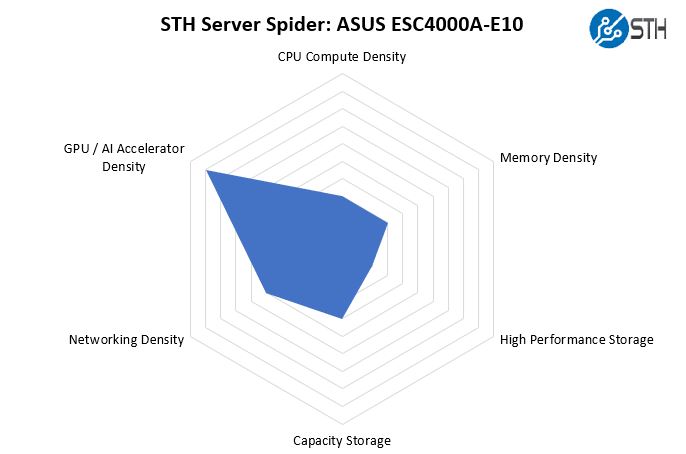

STH Server Spider: ASUS ESC4000A-E10

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system’s aptitude lies. Our goal is to give a quick visual depiction of the types of parameters a server is targeted at.

This system is clearly focused on GPU/accelerator density. It is not as dense as some of the much deeper 1U servers that fit 4x GPUs, but it is still dense by modern standards, especially with the 8x single-slot GPU option. There is still plenty of room for networking and storage, which is excellent. While using this system, we wondered whether it would make an interesting control node for a storage server, equipped with multiple external drive HBAs. We focused on GPUs, but there is more going on here than just GPUs, since one can easily use FPGAs or other PCIe Gen4 devices.

Final Words

Overall, this is a system designed for a specific customer deployment scenario. Some organizations like to deploy 4U dual-socket training servers to scale up nodes and share costs such as boot drives. Others like 1U platforms for density. With a single-socket 2U platform, one eliminates transfers between CPU sockets (NUMA nodes). It is also a friendlier design for power and cooling density, with a single CPU and four GPUs in a 2U form factor.
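On a single-socket system, one way to confirm the NUMA picture is to ask the Linux kernel which NUMA node it associates with each GPU. Here is a minimal Python sketch that reads the standard sysfs attributes under /sys/bus/pci/devices; it assumes a Linux host and only looks at NVIDIA (vendor ID 0x10de) display-class functions. With one CPU, every GPU should report the same node (or -1 if no affinity is reported), unless the BIOS splits the socket into several NUMA domains, for example with an NPS2 or NPS4 setting on EPYC.

```python
from pathlib import Path

NVIDIA_VENDOR_ID = "0x10de"


def gpu_numa_nodes(sysfs_root: str = "/sys/bus/pci/devices") -> dict[str, int]:
    """Map each NVIDIA display-class PCI function to its reported NUMA node.

    Reads the standard Linux sysfs attributes 'vendor', 'class' and
    'numa_node'. A numa_node of -1 means no NUMA affinity was reported.
    """
    root = Path(sysfs_root)
    if not root.is_dir():
        return {}  # not Linux, or sysfs not mounted
    nodes: dict[str, int] = {}
    for dev in sorted(root.iterdir()):
        try:
            vendor = (dev / "vendor").read_text().strip()
            pci_class = (dev / "class").read_text().strip()
            numa = int((dev / "numa_node").read_text().strip())
        except (OSError, ValueError):
            continue  # skip entries missing one of the attributes
        # PCI base class 0x03 covers display controllers (VGA, 3D, other).
        if vendor == NVIDIA_VENDOR_ID and pci_class.startswith("0x03"):
            nodes[dev.name] = numa
    return nodes


if __name__ == "__main__":
    for bdf, node in gpu_numa_nodes().items():
        print(f"{bdf}: NUMA node {node}")
```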

The performance was exactly what we would expect from a well-cooled single-socket AMD EPYC solution with 4x NVIDIA Quadro RTX 6000 GPUs, and that is a good thing. Years ago we would sometimes see performance degrade in form factors such as this, but the industry, including ASUS, has evolved the 2U 4x GPU designs to the point where cooling is not a major concern.

ASUS did a great job with the ESC4000A-E10, adding features such as the flexible front expansion configurations and making the most of the AMD EPYC 7002 PCIe configuration. Compared with a current 2nd Gen Intel Xeon Scalable offering, a single CPU here provides more PCIe lanes (128 PCIe Gen4) than two Intel CPUs do (2x 48 PCIe Gen3).
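The lane comparison is simple arithmetic. The sketch below uses the published per-socket figures (128 PCIe Gen4 lanes for a single AMD EPYC 7002, 48 PCIe Gen3 lanes per 2nd Gen Intel Xeon Scalable socket) and a hypothetical allocation resembling our test configuration: four GPUs at x16 and four NVMe SSDs at an assumed x4 each. It ignores lanes a given motherboard dedicates to onboard devices, so treat the result as an upper bound rather than the ESC4000A-E10's actual slot layout.

```python
# Per-socket PCIe lane counts (published platform figures).
EPYC_7002_LANES_1P = 128         # PCIe Gen4, single-socket configuration
XEON_GEN2_LANES_PER_SOCKET = 48  # PCIe Gen3, 2nd Gen Xeon Scalable


def lanes_remaining(total_lanes: int, devices: dict[str, tuple[int, int]]) -> int:
    """Lanes left after allocating (device count, link width) per device type."""
    used = sum(count * width for count, width in devices.values())
    return total_lanes - used


if __name__ == "__main__":
    dual_xeon = 2 * XEON_GEN2_LANES_PER_SOCKET
    print(f"1x EPYC 7002: {EPYC_7002_LANES_1P} lanes; 2x Xeon Scalable Gen2: {dual_xeon} lanes")

    # HYPOTHETICAL allocation similar to our test setup (NVMe width assumed x4).
    config = {"GPU": (4, 16), "NVMe SSD": (4, 4)}
    print("Lanes left on the EPYC:", lanes_remaining(EPYC_7002_LANES_1P, config))
```

With this allocation the script reports 48 lanes left on the EPYC, versus 16 if the same devices hung off a dual-socket Xeon's 96 lanes.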

If you want to deploy single-socket 4x GPU solutions, which have become the new trend, the ASUS ESC4000A-E10 is certainly a system you should look at.

Patrick, Nvidia GPUs seem to like standard 256MB BAR windows. AMD Radeon VII and MI50/60 can map the entire 16GB VRAM address space if the UEFI cooperates (so-called large BAR).

Any idea if this system will map large BARs for AMD GPUs?
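For anyone who wants to check this on their own hardware: on Linux, the kernel lists each PCI device's BAR windows in /sys/bus/pci/devices/<BDF>/resource, one "start end flags" line per resource in hex. A minimal Python sketch that turns those lines into BAR sizes follows; with large BAR working, the card should expose a window matching its VRAM size instead of 256MiB. Which BAR index carries the VRAM aperture differs between vendors, so the sketch simply reports every populated BAR.

```python
import sys
from pathlib import Path


def bar_sizes(resource_text: str) -> dict[int, int]:
    """Parse a Linux sysfs PCI 'resource' file into {BAR index: size in bytes}.

    Each line holds start, end and flags in hex. The first six lines are
    BAR0-BAR5; unused entries are all zeros and are skipped.
    """
    sizes: dict[int, int] = {}
    for index, line in enumerate(resource_text.splitlines()[:6]):
        start, end, _flags = (int(field, 16) for field in line.split())
        if end:
            sizes[index] = end - start + 1
    return sizes


if __name__ == "__main__":
    # Usage: python bar_sizes.py <BDF>, e.g. a GPU address taken from lspci -D.
    if len(sys.argv) == 2:
        resource = Path("/sys/bus/pci/devices") / sys.argv[1] / "resource"
        for index, size in bar_sizes(resource.read_text()).items():
            print(f"BAR{index}: {size / 2**20:.0f} MiB")
```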

The sidepod airflow view is quite… nice.

Fantastic review. I am considering this for a home server rack in the basement to go with some of my quiet Dell Precision R5500 units. I didn’t see anything regarding noise levels at idle and under high utilization. Can you speak to this?

This is a really nice server. We use it for FPGA cards, where we need to connect network cables to the cards. This is not possible in most GPU servers (e.g. Gigabyte). It just works :-)

But don’t be disappointed if your fans are not yellow; ASUS changed the color for the production version.

We’ve just bought one of these servers, but when we install 8x RTX4000s, only one gets detected by our hypervisor (Xenserver).

Is there a BIOS option we need to change for the other 7 to be recognized?

Update on my reply from 24 Feb 2022:

It seems we received a faulty server. Another unit DID present all 8 GPUs in Xenserver without any special BIOS settings (default settings used).
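When only some cards show up, a useful first step is to check how many GPUs the PCI bus actually enumerates before digging into the hypervisor. The following Python sketch counts NVIDIA display-class functions in `lspci -n` output, assuming the usual "slot class: vendor:device" line layout; it is a generic troubleshooting aid, not what the commenter above ran. With 8x RTX4000 installed, a count below 8 suggests a hardware or firmware issue rather than a hypervisor setting.

```python
import shutil
import subprocess

NVIDIA_VENDOR_ID = "10de"


def count_nvidia_gpus(lspci_n_output: str) -> int:
    """Count NVIDIA display-class functions in `lspci -n` output.

    Expected line layout: '<slot> <class>: <vendor>:<device> ...'.
    Only class 03xx (display controller) is counted, so a GPU's companion
    audio (0403) or USB (0c03) functions are not double-counted.
    """
    count = 0
    for line in lspci_n_output.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue
        pci_class = fields[1].rstrip(":")
        vendor = fields[2].split(":")[0]
        if pci_class.startswith("03") and vendor == NVIDIA_VENDOR_ID:
            count += 1
    return count


if __name__ == "__main__":
    if shutil.which("lspci"):
        out = subprocess.run(["lspci", "-n"], capture_output=True, text=True, check=True).stdout
        print("NVIDIA GPUs enumerated:", count_nvidia_gpus(out))
```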