ASUS ESC4000A-E10 Internal Overview

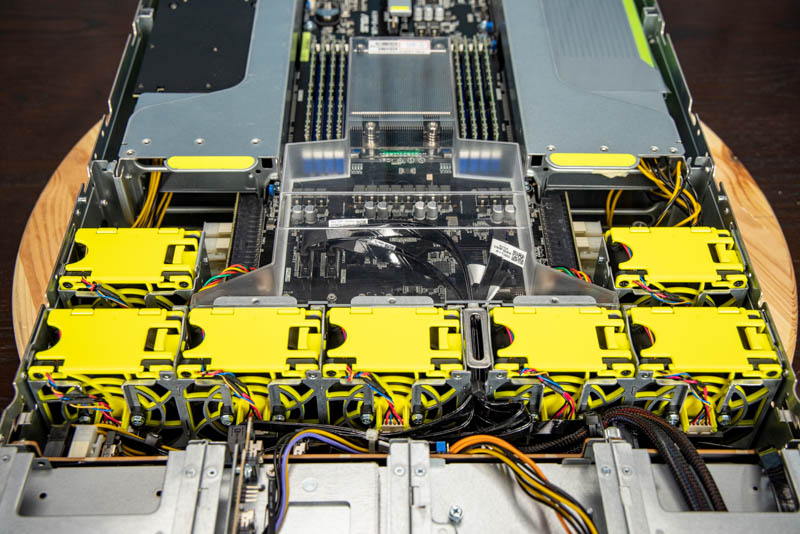

Since we already covered the front bays and the I/O expansion in our external overview, we are going to start the internal section with the fans. A total of seven large fans move air through the chassis. Three of these generally cool the CPU, motherboard, low-profile expansion, power supply, and memory area. Two fans on each side cool the GPU side pods. We also wanted to note that the clear, hard plastic air duct is a great feature. It makes visual inspection under the air duct faster and is sturdier than some of the softer materials we see used in competitive systems. The air duct is held in place by guide pins, which makes servicing it very easy.

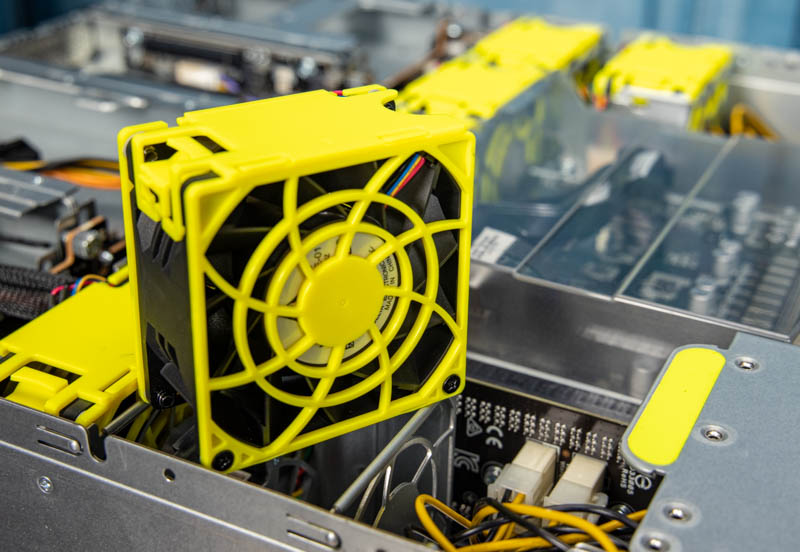

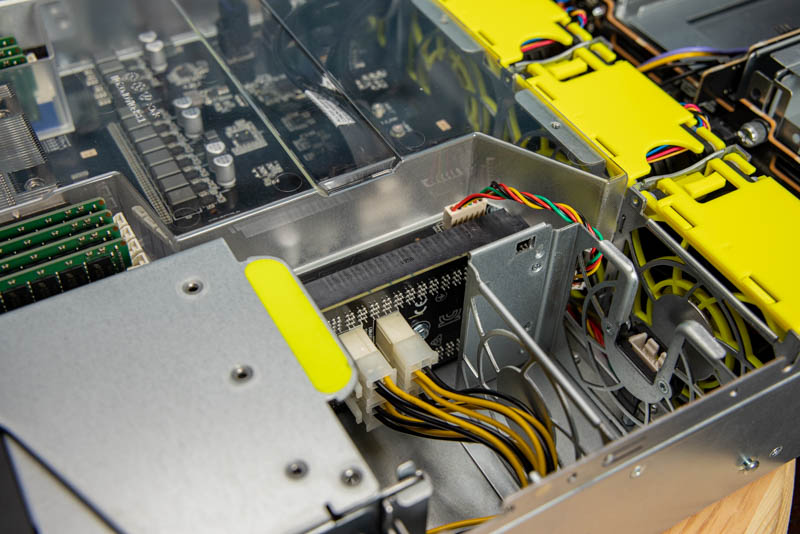

The fans themselves are hot-swappable and come in brightly colored carriers. ASUS is using this bright coloration to show some of the internal chassis service spots that are designed to be touched during service. For example, one will see these colors used on the GPU side pods and PCIe risers where the chassis is designed to be handled internally.

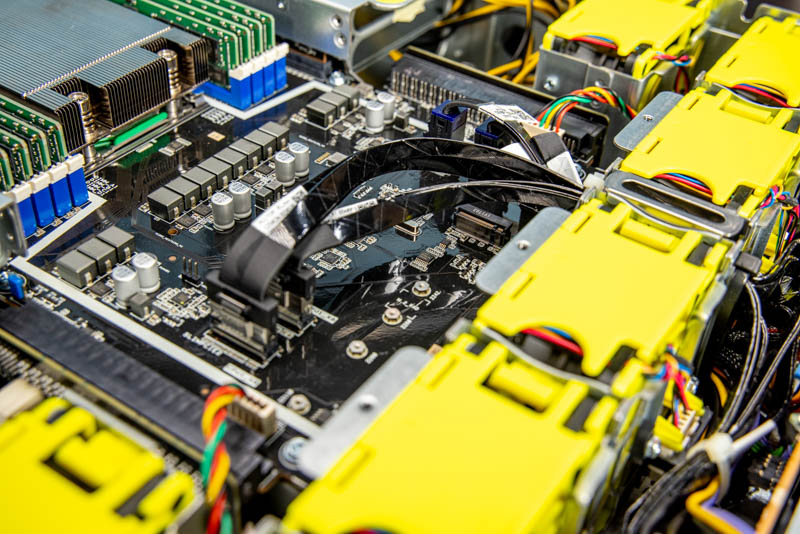

Under the clear airflow guide, we have the cabling headers that bring data to the front panel area for storage and expansion cards. We also have an M.2 slot that can handle up to M.2 22110 (110mm) SSDs. We would like to see ASUS make this a tool-less service option in future revisions of this platform.

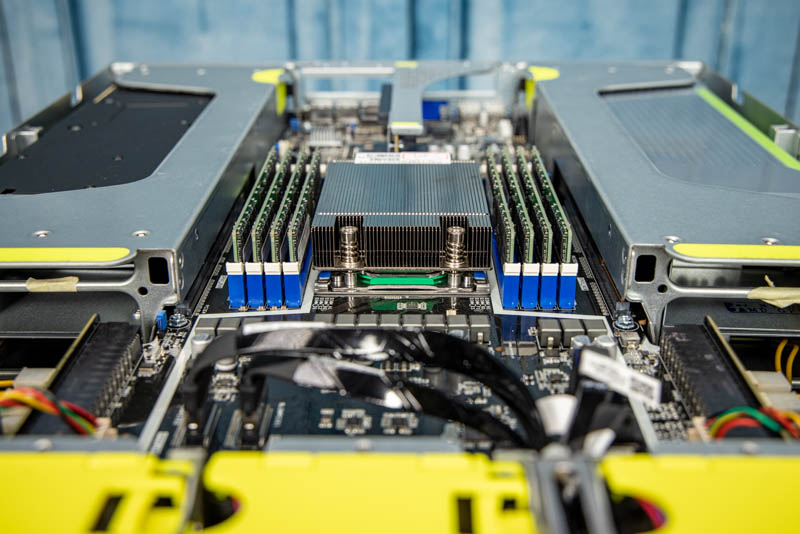

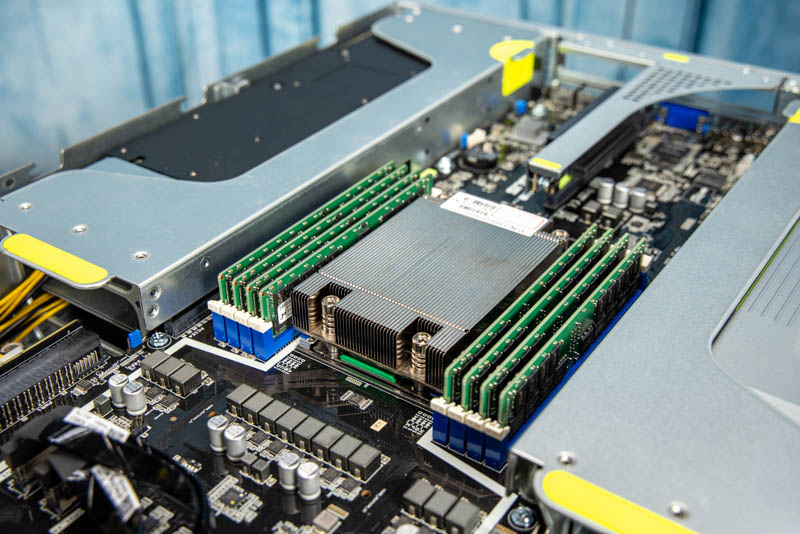

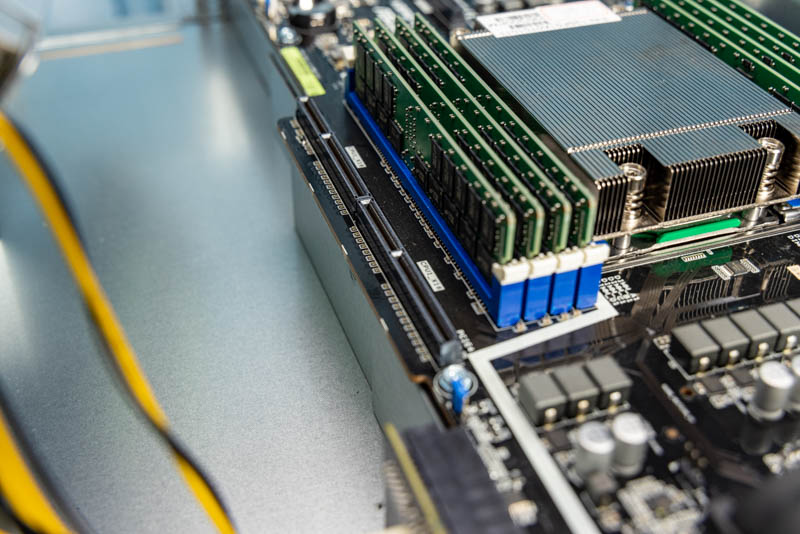

Behind this area, we get the AMD EPYC 7002 “Rome” and soon AMD EPYC 7003 “Milan” CPUs. We have a Rome SKU pictured here. We tested the system with up to the 64-core AMD EPYC 7742, which provides a lot of CPU power to go along with the GPU power in this system. Alternatively, one can use lower-cost SKUs with lower power requirements and lower core counts to save on capital outlay. Some organizations like to have many cores per GPU while others use fewer, and EPYC offers this flexibility in a single socket.

Alongside the AMD EPYC 7002 CPU, we get eight DDR4-3200 DIMM slots. This means we get up to 2TB of RAM capacity using 256GB LRDIMMs. That 2TB of memory capacity is equal to that of two 2nd Gen Intel Xeon Scalable Refresh SKUs. Effectively, ASUS is using the AMD platform to offer a single-socket alternative to what would be a dual-socket system for Intel. That is perhaps a simplistic way to look at this, since a current-generation 2nd Gen Intel Xeon Scalable dual-socket system would have neither PCIe Gen4 nor enough PCIe lanes to run this entire system.
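As a quick sanity check on the memory figures above, here is a minimal sketch of the arithmetic. The capacity calculation uses only the numbers quoted in the review. The peak-bandwidth figure is our own addition and assumes one DIMM per channel across the eight EPYC 7002 memory channels, each 64 bits wide, running at the full 3200 MT/s. It is a theoretical ceiling, not a measured result, and the function names are illustrative.

```python
# Capacity and theoretical peak-bandwidth arithmetic for the DIMM configuration.
# Assumption (not stated in the review): one DIMM per channel, 64-bit channels.

DIMM_SLOTS = 8           # from the review: eight DDR4-3200 DIMM slots
LRDIMM_GB = 256          # from the review: 256GB LRDIMMs
TRANSFER_MT_S = 3200     # DDR4-3200 means 3200 mega-transfers per second
BYTES_PER_TRANSFER = 8   # 64-bit data bus per channel (assumption)


def max_capacity_gb(slots: int, dimm_gb: int) -> int:
    """Total capacity in GB when every slot holds the same DIMM size."""
    return slots * dimm_gb


def peak_bandwidth_gb_s(channels: int, mt_s: int,
                        bytes_per_transfer: int = BYTES_PER_TRANSFER) -> float:
    """Theoretical peak bandwidth in GB/s (decimal), ignoring real-world efficiency."""
    return channels * mt_s * bytes_per_transfer / 1000


if __name__ == "__main__":
    total = max_capacity_gb(DIMM_SLOTS, LRDIMM_GB)
    print(f"Capacity: {total} GB ({total // 1024} TB)")
    print(f"Peak bandwidth: {peak_bandwidth_gb_s(DIMM_SLOTS, TRANSFER_MT_S):.1f} GB/s")
```

Swapping `LRDIMM_GB` for a smaller module size shows how quickly capacity drops with more common DIMMs, for example 8 x 64GB gives 512GB.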

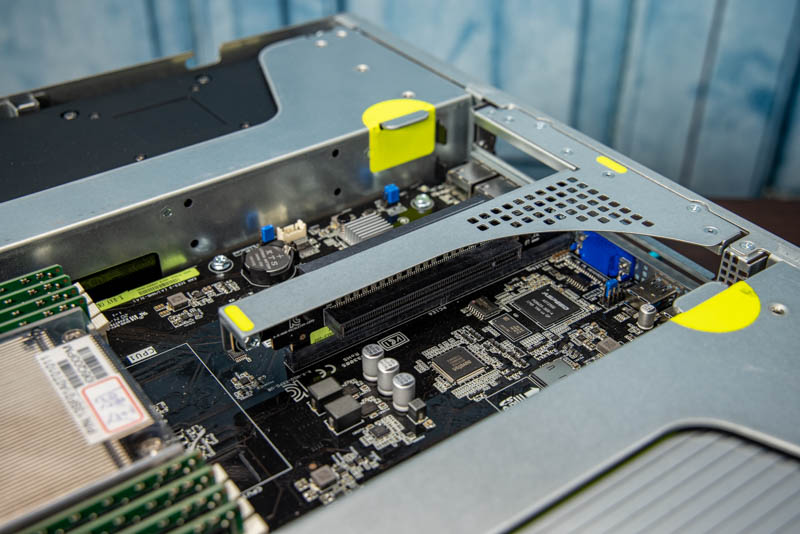

On the topic of PCIe expansion slots, we have two low-profile slots in the rear of the system. Here is one PCIe Gen4 x16 slot:

Here is the second slot. If you wanted to add high-speed dual 100Gbps NICs for InfiniBand or 100GbE, this offers a tremendous amount of networking bandwidth in the system.

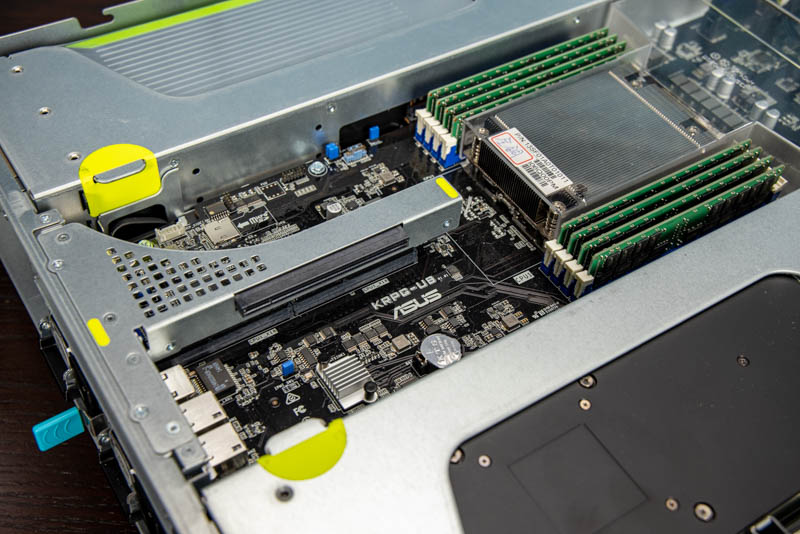

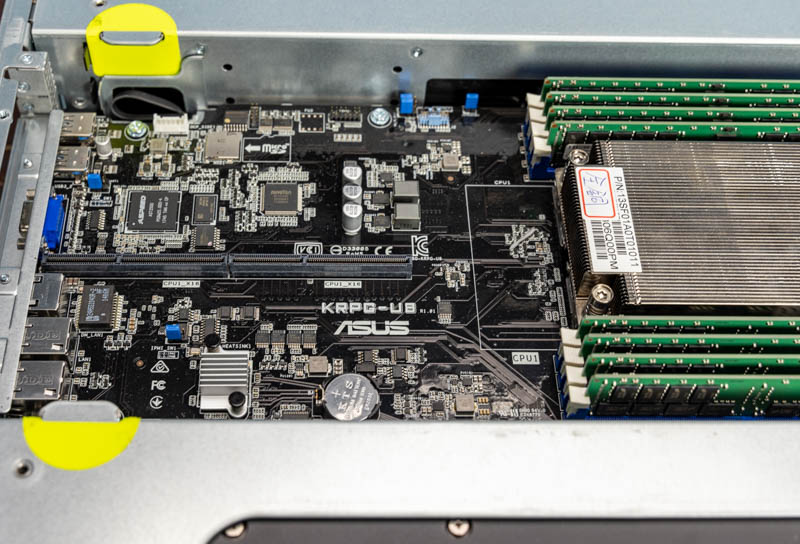
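To put the dual 100Gbps NIC comment in context, the sketch below compares the combined line rate of a two-port 100Gbps adapter with the theoretical one-direction bandwidth of a x16 slot. The per-lane rates (8 GT/s for Gen3, 16 GT/s for Gen4) and 128b/130b encoding come from the PCIe specifications. Protocol overhead is ignored, so real throughput is lower, and the function names are illustrative.

```python
# Does a multi-port NIC fit within a PCIe slot's theoretical bandwidth?
# Figures are link-level ceilings; packet and protocol overhead is not modeled.

GT_PER_S = {3: 8.0, 4: 16.0}      # per-lane signalling rate by PCIe generation
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding used by Gen3 and Gen4


def pcie_gbit_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in Gbit/s."""
    return GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY


def nic_fits(ports: int, port_gbit_s: float, gen: int, lanes: int) -> bool:
    """True if the NIC's combined line rate is within the slot's link bandwidth."""
    return ports * port_gbit_s <= pcie_gbit_s(gen, lanes)


if __name__ == "__main__":
    for gen in (3, 4):
        slot = pcie_gbit_s(gen, 16)
        verdict = "fits" if nic_fits(2, 100, gen, 16) else "is bottlenecked"
        print(f"Gen{gen} x16: {slot:.1f} Gbit/s, dual 100Gbps NIC {verdict}")
```

Under these assumptions, a dual-port 100Gbps adapter exceeds what a Gen3 x16 slot can carry but fits within a Gen4 x16 slot, which is why the Gen4 slots matter for this kind of networking.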

ASUS prides itself on motherboards, as we saw in our Interview with Robert Chin, GM of the ASUS Server BU, some time ago. Here we can see the dual PCIe Gen4 riser slots of the ASUS KRPG-U8 motherboard along with the main I/O components including the ASPEED AST2500 BMC that we will discuss in our management section. The micro SD card slot and the small heatsink on the Intel i350 NIC are nice touches.

Now that we have discussed the main system internals, it is time to look at the main feature: the GPU side pods. Here we can see the second fan removed from its housing along with the GPU side pod power. There is a small power distribution board that has four power connectors. In our system, we have two connectors wired to each GPU. The system supports up to two double-width full-height PCIe Gen4 x16 GPUs per side, or four total. Alternatively, one can have four single-width slots wired with PCIe Gen4 x8 per side for a total of eight GPUs per system. Using cabled power delivery lets ASUS accommodate both configurations, which is a nice design.

This was not the original photo we were going to use to show the GPU side pod, but it was our favorite. Each GPU side pod can be removed by unscrewing a single hand screw. It can then be lifted out of the chassis. We are going to look at the PCIe connector for this next. However, this shot with two passively cooled NVIDIA Quadro RTX 6000 cards shows a key feature. One can see the NVLink bridge connectors at the top of the GPU. Also, one can see on the far edge of the chassis that there is a metal divider to maintain airflow. By sizing the motherboard how it did, ASUS is able to accommodate an NVLink bridge between two GPUs since there is extra space. Some competitive solutions do not have extra space beyond the GPUs to accommodate connections on top of the GPUs.

Each GPU side pod is connected via two PCIe Gen4 x16 connectors. This gives 32 PCIe Gen4 lanes total to two double-width or four single-width expansion cards. We have the NVIDIA Quadro RTX 6000s here, but PCIe Gen4 GPUs such as the NVIDIA A100 PCIe and AMD Instinct MI100 32GB CDNA GPU can take advantage of the higher-speed data connection.
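The lane split described above can be sketched as simple arithmetic: 32 lanes per pod divided evenly across either two or four cards. The bandwidth numbers are theoretical one-direction link rates from the PCIe per-lane speeds with 128b/130b encoding, not measurements from this system. The Gen3 row is included because the Quadro RTX 6000 is a PCIe Gen3 card. The helper names are illustrative.

```python
# Lanes per card in one GPU side pod, plus the theoretical link bandwidth per card.

POD_LANES = 2 * 16                # from the review: two PCIe Gen4 x16 connectors per pod
GT_PER_S = {3: 8.0, 4: 16.0}      # per-lane signalling rate by PCIe generation
ENCODING_EFFICIENCY = 128 / 130   # 128b/130b line encoding used by Gen3 and Gen4


def lanes_per_card(cards_in_pod: int, pod_lanes: int = POD_LANES) -> int:
    """Evenly split the pod's lanes across the installed cards."""
    if cards_in_pod <= 0 or pod_lanes % cards_in_pod:
        raise ValueError("lanes must divide evenly across a positive card count")
    return pod_lanes // cards_in_pod


def link_gb_s(gen: int, lanes: int) -> float:
    """Theoretical one-direction link bandwidth in GB/s, before protocol overhead."""
    return GT_PER_S[gen] * lanes * ENCODING_EFFICIENCY / 8


if __name__ == "__main__":
    for cards in (2, 4):
        lanes = lanes_per_card(cards)
        for gen in (3, 4):
            print(f"{cards} cards/pod, Gen{gen} x{lanes}: {link_gb_s(gen, lanes):.2f} GB/s per card")
```

One takeaway from the numbers: a Gen4 x8 link has the same theoretical bandwidth as a Gen3 x16 link, so the eight-GPU configuration does not give up per-card bandwidth relative to a Gen3 x16 slot when the cards support Gen4.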

One other small feature we wanted to point out was the airflow through the side pod. Here we can see the dual fan setup we looked at earlier being channeled for primary cooling of the GPUs. ASUS also designed the pods to channel air so we see sheet metal ducting airflow away from other components and through the GPUs. This is another small touch. Some older systems did not have this level of ducting which led to high GPU temperatures and reduced performance.

Overall, the system was nicely laid out. One can see the generational improvements of this server versus some of the older 2U 4x GPU designs we have seen previously. One of the key aspects of a platform like this is that we get a single CPU, a lot of expansion for networking and storage, and no PCIe switches. That makes this type of system more cost-optimized than many competing platforms while still providing a high-performance server.
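The "no PCIe switches" point comes down to a lane budget. A single-socket AMD EPYC 7002 CPU provides 128 PCIe Gen4 lanes, and the sketch below tallies the slots described in this review against that total. The width of the second low-profile slot is an assumption on our part (the review only states x16 for the first), and how ASUS routes the remaining lanes to the M.2 slot, front storage, and onboard devices is not modeled here.

```python
# Rough PCIe lane budget for a single-socket EPYC 7002 platform.
# The dictionary keys are descriptive labels, not ASUS terminology.

CPU_PCIE_LANES = 128  # PCIe Gen4 lanes on a single-socket EPYC 7002


def lane_budget(total_lanes: int, consumers: dict[str, int]) -> tuple[int, int]:
    """Return (lanes used, lanes remaining); raise if the budget is exceeded."""
    used = sum(consumers.values())
    if used > total_lanes:
        raise ValueError(f"{used} lanes requested but only {total_lanes} available")
    return used, total_lanes - used


CONSUMERS = {
    "GPU side pods (2 pods x 2 x16 connectors)": 2 * 2 * 16,
    "rear low-profile slots (2 slots, second assumed x16)": 2 * 16,
}

if __name__ == "__main__":
    used, remaining = lane_budget(CPU_PCIE_LANES, CONSUMERS)
    print(f"Used by slots: {used} lanes, remaining for storage and other I/O: {remaining}")
```

With these assumptions, the GPU pods and rear slots use 96 of the 128 lanes directly from the CPU, which is what allows the design to avoid PCIe switches.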

Next, we are going to look at the management of the system.

Patrick – Nvidia GPUs seem to like std 256MB BAR windows. AMD Radeon VII and MI50/60 can map the entire 16GB VRAM address space, if the UEFI cooperates (so-called large BAR).

Any idea if this system will map large BARs for AMD GPUs?

The sidepod airflow view is quite… nice

Fantastic review. I am considering this for a home server rack in the basement to go with some of my quiet Dell Precision R5500 units. Didn’t see anything in regard to noise level, at idle and during high utilization. Can you speak to this?

This is a really nice server. We use it for FPGA cards, where we need to connect network cables to the cards. This is not possible in most GPU servers (e.g. Gigabyte). It just works :-)

But don’t get disappointed if your fans are not yellow, ASUS changed the color for the production version.

We’ve bought one of these servers just now, but when we build in 8x RTX4000’s, only one gets detected by our hypervisor (Xenserver).

Is there any option in the BIOS we need to address in order for the other 7 to be recognized?

Update on my reply @ 24 feb 2022:

It seemed we received a faulty server. Another unit DID present us with all 8 GPUs in Xenserver without any special settings to be configured in the BIOS at all (Default settings used)