ASUS ESC A8A-E12U 8-way AMD Instinct MI325X

This is ASUS’s high-end AMD AI GPU server.

This server pairs dual AMD EPYC 9005 “Turin” CPUs with eight AMD Instinct MI325X GPUs, for a total of 2.048TB of HBM3E memory onboard.

For folks looking at high-memory-capacity accelerators for training and inference, AMD’s 256GB per GPU is a big deal.
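As a quick sanity check on the capacity figure, the sketch below multiplies the per-GPU HBM3E capacity by the GPU count. It uses decimal units (1TB = 1000GB), which is how the 2.048TB total works out. The weights-only figure is a rough, hypothetical ceiling that assumes 2 bytes per parameter (for example FP16/BF16) and ignores KV cache, activations, and runtime overhead, so real usable model sizes would be smaller.

```python
# Sanity check on the memory figures: 8 GPUs x 256GB HBM3E each.
# Decimal units are assumed (1TB = 1000GB), matching the 2.048TB total.

GPUS_PER_SERVER = 8
HBM_PER_GPU_GB = 256


def total_hbm_gb(gpus: int, gb_per_gpu: int) -> int:
    """Total accelerator memory in GB across all GPUs."""
    return gpus * gb_per_gpu


def weights_only_params_billions(total_gb: float, bytes_per_param: float) -> float:
    """Hypothetical upper bound on model size if memory held only weights.

    1GB divided by bytes-per-parameter gives billions of parameters. This
    ignores KV cache, activations, optimizer state, and runtime overhead.
    """
    return total_gb / bytes_per_param


if __name__ == "__main__":
    total_gb = total_hbm_gb(GPUS_PER_SERVER, HBM_PER_GPU_GB)
    print(f"Total HBM3E: {total_gb} GB = {total_gb / 1000} TB")
    # Assumption: 2 bytes per parameter (e.g., FP16/BF16 weights).
    ceiling = weights_only_params_billions(total_gb, 2)
    print(f"Weights-only ceiling at 2 bytes/param: ~{ceiling:.0f}B params")
```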

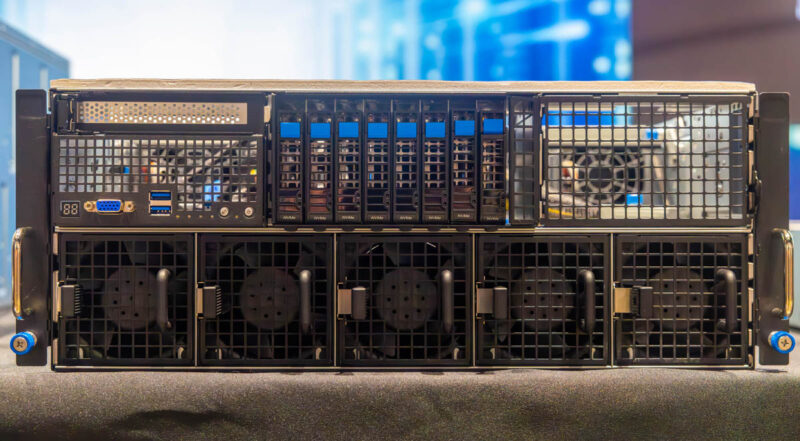

Inside is a massive fan wall to keep the GPUs cool.

The rear has a top tray for the GPUs and a bottom section that houses the six 3kW power supplies and all of the NIC slots.

This bottom section also slides out on trays, making it a much more serviceable design than the Dell PowerEdge XE9680.
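The six 3kW power supplies add up to 18kW of nameplate capacity, but how much of that is usable depends on the redundancy configuration, which is not stated here. The sketch below shows how the budget would change under a few hypothetical N+M layouts; the redundancy splits are assumptions for illustration, not ASUS specifications.

```python
# Usable PSU capacity for six 3kW supplies under hypothetical redundancy schemes.
# Six 3kW PSUs come from the article; the N+M splits below are assumptions only.

PSU_COUNT = 6
PSU_KW = 3.0


def usable_psu_kw(total_psus: int, redundant_psus: int, kw_each: float) -> float:
    """Capacity available when `redundant_psus` supplies are held in reserve."""
    if not 0 <= redundant_psus < total_psus:
        raise ValueError("redundant_psus must be in [0, total_psus)")
    return (total_psus - redundant_psus) * kw_each


if __name__ == "__main__":
    for redundant in (0, 1, 2, 3):  # hypothetical 6+0, 5+1, 4+2, 3+3 layouts
        active = PSU_COUNT - redundant
        print(f"{active}+{redundant}: {usable_psu_kw(PSU_COUNT, redundant, PSU_KW):.1f} kW")
```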

Next, we will get to another GPU server and a 1U dual-socket server.

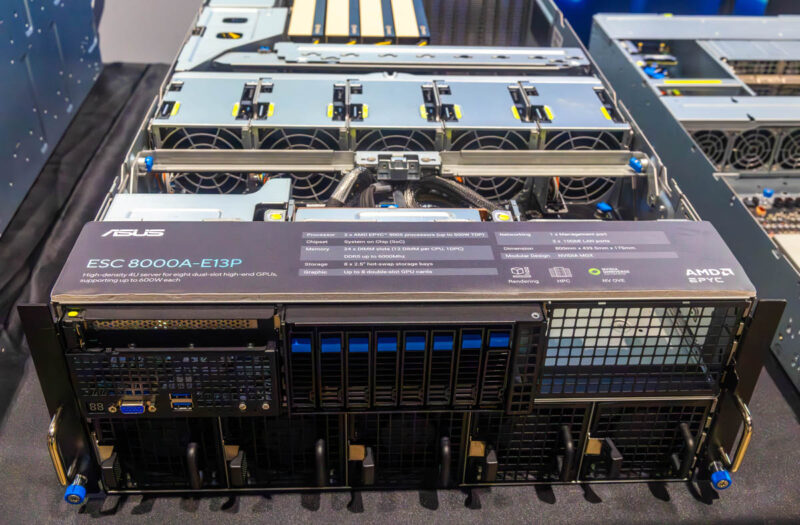

ASUS ESC 8000A-E13P

This is a 4U PCIe GPU server with a twist.

It supports dual AMD EPYC 9005 processors, which one might expect.

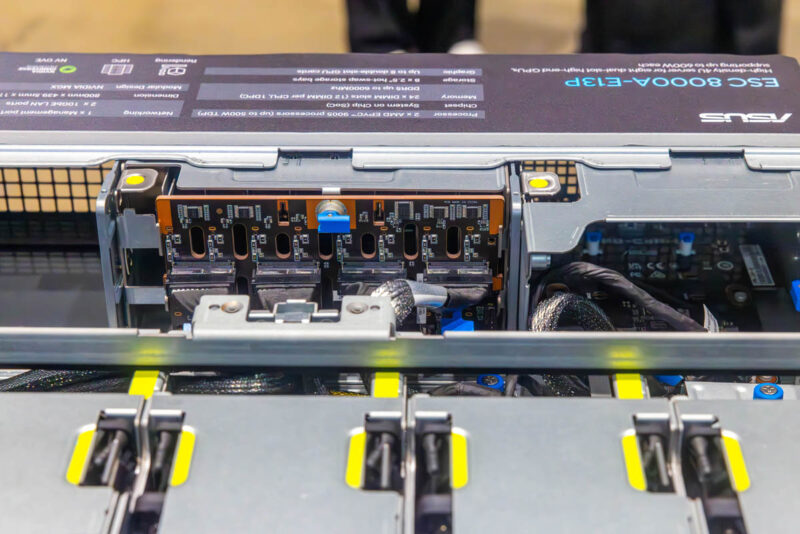

One might also expect support for eight PCIe GPUs.

The catch is that these GPUs can be up to 600W each.

It was not long ago that folks in the industry treated 250-300W as the maximum for a passively cooled dual-slot GPU. Now we are at 600W.
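To put the jump from 250-300W to 600W in perspective, the sketch below totals the GPU power for eight cards at each per-card figure. It counts GPU board power only; CPUs, fans, drives, NICs, and PSU losses are left out, so total system draw would be higher.

```python
# Aggregate GPU power for an 8-GPU PCIe server at different per-card limits.
# GPU board power only; CPUs, fans, drives, NICs, and PSU losses are excluded.

NUM_GPUS = 8


def gpu_power_kw(num_gpus: int, watts_each: float) -> float:
    """Total GPU power in kW for `num_gpus` cards at `watts_each` watts."""
    return num_gpus * watts_each / 1000


if __name__ == "__main__":
    # 250W and 300W: the older passive dual-slot range; 600W: the new ceiling.
    for watts in (250, 300, 600):
        print(f"{NUM_GPUS} x {watts}W = {gpu_power_kw(NUM_GPUS, watts):.1f} kW")
```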

The server also has front I/O with eight 2.5″ drive bays and a slot for a NIC.

PCIe GPU systems have been popular for a long time, so it is great to see new versions come out.

Next, the standard server.

On the CXL system, is there any sort of inter-node connection to allow flexible allocation of the additional RAM, or is it purely a way to fit more DIMMs than the width limits of a 2U4N chassis and the trace-length limits of the primary memory bus would ordinarily permit?

I’d assume that the latter is vastly simpler and more widely compatible, but a lot of the CXL announcements one sees emphasize the potential for some degree of disaggregation/flexible allocation of RAM to nodes. It is presumably easier to get four of your own motherboards talking to one another than to be ready for some sort of SAN-for-RAM arrangement with mature multi-vendor interoperability and established standards for administration.

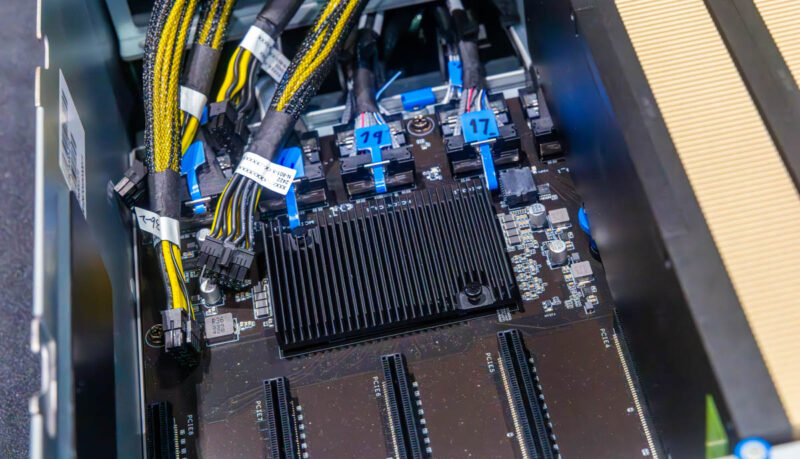

It is not obvious where the other four GPUs fit into the ASUS ESC8000A-E13P. Is it a two-level system with another four down below, or is ASUS shining us on a bit with an eight-slot board at single-slot spacing and calling that eight GPUs?

Oh, never mind. Trick of perspective: the big black heatsink makes it look like there is no room for more slots.

Page 1 “The 2.5″ bays take up a lot of the rear panel”

I think you mean “The 2.5″ bays take up a lot of the **front** panel”

Hard to imagine telling us any less about the CXL! What’s the topology? Can the CXL RAM be used by any of the four nodes, and if so, how do they arbitrate? What are the bandwidth and latency?