ASRock Rack ROMED6U-2L2T Market Perspective

There are probably going to be two types of reactions to the ASRock Rack ROMED6U-2L2T. The first will come from those who look at this platform and think "this is exactly what I want, mATX EPYC." The second will come from those who simply think "ATX might let me have 8 DDR4 channels." Both are valid ways to view a platform like this.
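To put rough numbers on the 6-versus-8 channel trade-off, here is a minimal Python sketch of theoretical peak DRAM bandwidth (channels × transfer rate × 64-bit bus width). It assumes DDR4-3200 with one DIMM per channel. The function name `peak_bandwidth_gbs` is purely illustrative, and measured bandwidth will always land below these ceilings.

```python
# Theoretical peak DRAM bandwidth = channels x transfer rate x bus width.
# Assumption: DDR4-3200 (3200 MT/s) with one DIMM per channel.
# Real-world throughput will be lower than these ceilings.

BUS_WIDTH_BYTES = 8  # each DDR4 channel has a 64-bit data bus (ECC bits excluded)


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int = 3200) -> float:
    """Return theoretical peak bandwidth in GB/s (10^9 bytes per second)."""
    return channels * mega_transfers_per_s * 1e6 * BUS_WIDTH_BYTES / 1e9


six = peak_bandwidth_gbs(6)    # six DIMM slots, one per channel
eight = peak_bandwidth_gbs(8)  # a full 8-channel configuration
print(f"6 channels: {six:.1f} GB/s")
print(f"8 channels: {eight:.1f} GB/s")
print(f"6-channel share of 8-channel peak: {six / eight:.0%}")
```

Under these assumptions, six channels top out at 153.6 GB/s versus 204.8 GB/s for eight, so the six-slot layout gives up a quarter of the theoretical peak. That matters most for memory-bound workloads and much less for I/O-heavy builds.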

After spending some time with the platform, I personally migrated from the second type of reaction to the first, with the specific mATX caveat. A few months ago we did a Building a TrueNAS Core 8-bay mATX ZFS NAS article and video. There we focused on many of the challenges of finding mATX cases and motherboards. Here we now have a platform that offers a better option than anything we could find on the market a year ago. It opens up more I/O possibilities and more CPU cores, which is a big deal for the mATX market.

Having gone through that experience and documented the options, I think this may have been the best option, provided cooling can be sorted out in often-cramped mATX chassis. Cooling 225-280W TDP CPUs in many mATX desktop chassis can be hard. It can be even harder in some of the short-depth 1U server chassis on the market.

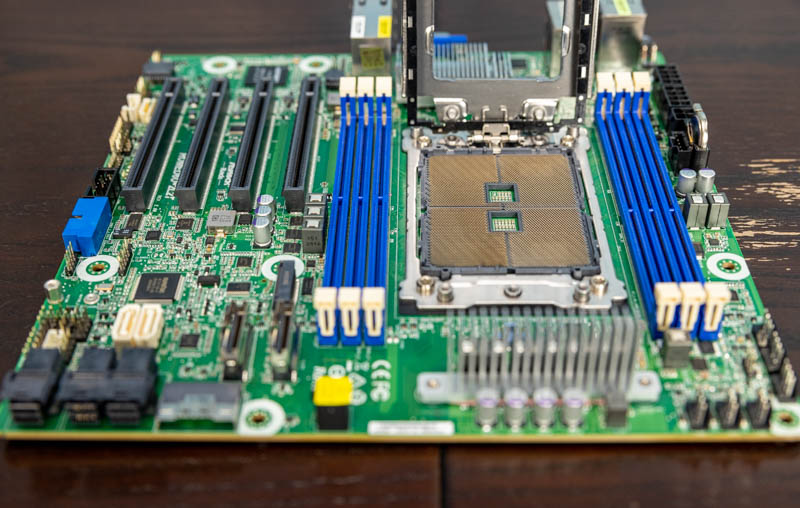

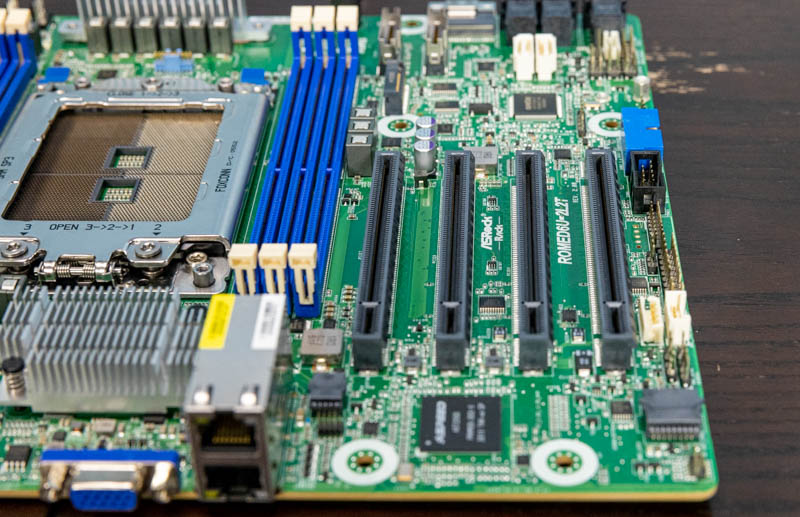

Still, the sheer flexibility of having almost unconstrained I/O in an mATX platform is refreshing. All four PCIe slots are available, and each uses a PCIe Gen4 x16 link. In mATX there are often trade-offs with PCIe slots. Here the slots also do not need to be used for features such as SATA HBAs (in many cases) or NVMe storage, since those are available onboard.

Final Words

Overall, this is going to be one of the platforms that our STH readers will appreciate. ASRock Rack has made the most of building an AMD EPYC mATX platform. Many who purchase this motherboard will not use all of the I/O, but it is nice to have the options and not have to make trade-offs.

In terms of pricing, as of the date this article is published we are seeing US street prices in the $485-$600 range, which is a fairly wide variance. Given the onboard 10GBase-T, a PCB supporting PCIe Gen4, and the lower-volume nature of the motherboard, that seems about right as a pricing range.

Reviewing so many server platforms each year, there are few that I feel are relatively "wild," and the ASRock Rack ROMED6U-2L2T may just be one of them. This platform is packed with so much I/O that an ATX 24-pin power connector and two DIMM slots could not fit, yet there is still a SATA power output onboard. While we cannot say this is the perfect motherboard for everyone, it absolutely has a lot to offer if you are looking to fit more into an mATX server.

ASRock Rack should start building boards with onboard SFP connectors for Ethernet at 10G and above.

That makes them much more usable.

Thanks for this long-awaited review!

I think it's overkill for a high-density NAS.

But why did you put a 200Gb NIC and say nothing about it?

Incredible. Now I need to make an excuse that we need this at work. I'll think of something.

Hello Patrick,

Thanks for the excellent article on these “wild” gadgets! A couple of questions:

1. Did you try to utilize any of the new Milan memory optimizations for the 6-channel memory?

2. Did you know this board has a second M.2? Do you know where I can find an M.2 drive that will fit that second slot and run at PCIe 4.0 speeds?

3. I can confirm that this board does support the 7H12. It is also the only board I could find that supports the "triple crown": Naples, Rome, and Milan (I guess Genoa support is pushing it!)

4. The Cerberus case will fit the Noctua U9-TR4, which cools the 7452 and 7502 properly.

5. Which OS/hypervisor did you test with, and did you get the ConnectX-6 card to work at 200Gbps? With VMware, those cards ran at 100Gbps until the very latest release (7.0U2).

6. Which BIOS/firmware did you use for Milan support? Was it capable of "dual booting" like some of the Tyan BIOSes?

Thanks again for the interesting article!

Beautiful board

@Sammy

I absolutely agree. Is 10GBase-T really prevalent in the wild? Everywhere I've seen that uses >1G connections does it over SFP. My server rack has a number of boards with integrated 10GBase-T that goes unused because I use SFP+ and have to install an add-in card.

Damn. So much power and I/O on mATX form factor. I now have to imagine (wild) reasons to justify buying one for playing with at home. Rhaah.

@Sammy, @Chris S

Yes, but if they had used SFP+ cages, there would be people asking why they didn't use RJ-45 :-) I use a 10GbE SFP+ MikroTik switch, and most of the systems connected to it are SFP+ (SolarFlare cards), but I also have one mobo with 10GBase-T connected via an Ipolex transceiver. So yes, if I were to end up using this ASRock mATX board, it would also require an Ipolex transceiver :-(

My guess is that ASRock thinks these boards are going to be used by start-ups, small businesses, SOHO, and maybe enthusiasts, who mostly use CAT5e to CAT6A cabling rather than fiber or DAC.

I'm the one responsible for the Slimline 8654-8i to 8x SATA cable on AliExpress. Yes, I had them create this cable. I have a full write-up on my blog, and I also posted about it here on the STH forums. I'm in the middle of having them create another cable for me to use with next-gen Dell HBAs as well.

The reason the cable hasn't arrived yet is that they have massive numbers of orders, with thousands of cables coming in, and they seemingly don't have spare time to make a single cable.

I've been working with them closely for months now, so I know them well.

Those PCIe connectors look like surface mounts with no reinforcement. I’d be wary of mounting this in anything other than flat/horizontal orientation for fear of breaking one off the board with a heavy GPU…

@Sammy and @chris s, what is the advantage of 10Gb SFP+ over 10GBase-T?

Eric, I remember PCIe slots going without reinforcement for years. Most servers are horizontal anyway.

I don't see these in stock anymore. The STH effect in action.

@Patrick, wouldn’t 6 channel memory be perfect for 24 core CPUs, such as the 7443P; or would 8 channels actually be faster – isn’t it 6*4 cores?

@erik answering for Sammy

SFP+ would let you choose DAC or MM/SM fiber connectors. Copper Ethernet at 10Gb is distance-limited anyway, and electrically it can draw more power than SFP+.

Also, 10G over SFP+ existed a lot longer than 10G over copper Ethernet. I've got SFP+ connectors everywhere and SFP+ switches all over the place. I don't have many 10G Ethernet cables (Cat 6a or better recommended for any distance over 5 ft), and I only have one switch around that does 10G Ethernet at all.

QSFP28 and QSFP switches can frequently break out to SFP+ connectors as well, so newer 100/200/400Gb switches can connect to older 10G SFP+ ports. SFP+ to 10GBase-T transceivers exist but are relatively new and power-hungry.

TL;DR: SFP became a server standard of sorts that 10GBase-T never did.

One thing I noticed is that even if ASRock wanted SFP+ on this board they probably couldn’t fit it. There isn’t enough distance between the edge of the board and the socket/memory slots to fit the longer SFP+ cages.

Thanks Patrick, that is quite a nice find. Four x16 PCIe slots plus a pair of M.2 slots, a lot of SATA, and those three slim x8 connectors is IMO nearly a perfect balance of I/O. Too bad about the DIMMs. Maybe ASRock can make an E-ATX board with the same I/O balance and 8 DIMMs. I still think the TR Pro boards with their 7 x16 slots are very unbalanced.

My main issue with the 10GBase-T (copper) ports is the heat. They run much hotter than SFP+ ports.

I believe there is a way to optimize this board with its 6 channels if using the new Milan chips. I was looking for some confirmation.

Patrick, thank you for your in-depth review. It hit every mark on what I was looking for. The ASRock ROMED6U-2L2T is a fantastic board for the I/O; I can't find another that works like a data center server in mATX form. Is there a recommended server-style or other case that you or anyone has tested with this board, along with the fans, cooling, and power required to run it fully populated while maintaining server-level reliability? I love that this has IPMI.

I would like to see a follow-on review with 2x 100G, running all memory channels populated on this board, and running RoCEv2 and RDMA.

Great review, thank you. I am still confused about what, if anything, I should connect to the power sockets labeled 1 and 2 in the Quick Installation Guide. The PSU's 8-pin 12V power connector fits, but is it necessary?

@Martin Hayes

Those sockets take the PSU's 8-pin (4+4) CPU power connectors. You can leave the second one unpopulated, but with higher-TDP CPUs it's highly recommended for overall stability.

Thanks Cassandra. My power supply only has one 8-pin (4+4) CPU plug, but I guess I can get an extender so that I can supply both ports.

OMG, this is packed with more features than my dual-socket Xeon. I must try this. Only ASRock could come up with something like this.