ASRock Rack ROME2D16-2T Testing

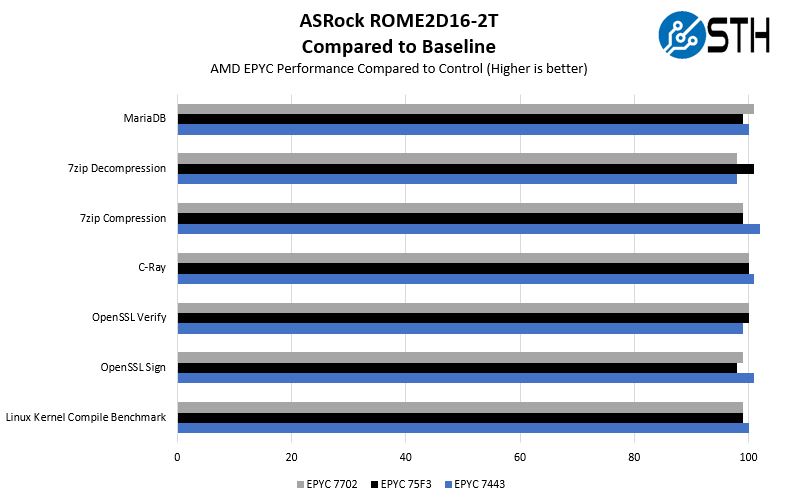

We wanted to gather a few quick data points to validate that the ASRock Rack ROME2D16-2T is indeed performing as expected.

Overall, we saw solid performance from the motherboard with a variety of chip configurations. We may have had slightly lower performance on a few of the tests, but overall these were within a margin of +/-2%, which is a decent test variation range. What we are really looking for here is a dip of 5-10% or even more, and we did not see that.
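As a rough illustration of that rule of thumb, here is a minimal sketch of how a result could be sorted into "normal variation" versus "worth investigating." The 2% and 5% thresholds come from the paragraph above; the function name, the higher-is-better scoring assumption, and the sample scores are hypothetical and are not our actual test harness or data.

```python
def classify_delta(baseline: float, measured: float,
                   noise_pct: float = 2.0, dip_pct: float = 5.0) -> str:
    """Classify a benchmark score against a baseline (assumes higher is better).

    noise_pct: run-to-run variation treated as noise (+/-2% in the text).
    dip_pct:   drop that would indicate a real platform problem (5% or more).
    """
    if baseline <= 0:
        raise ValueError("baseline must be positive")

    # Percentage difference relative to the baseline score.
    delta_pct = (measured - baseline) / baseline * 100.0

    if abs(delta_pct) <= noise_pct:
        return "within variation"
    if delta_pct <= -dip_pct:
        return "significant dip"
    if delta_pct < 0:
        return "minor dip"
    return "above baseline"


if __name__ == "__main__":
    # Hypothetical scores, for illustration only.
    for score in (99.0, 97.0, 93.0, 104.0):
        print(score, classify_delta(100.0, score))
```

A result between the two thresholds lands in a gray zone ("minor dip" here), which is the kind of number one would typically re-run before drawing conclusions.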

Final Words

Overall, the ASRock Rack ROME2D16-2T performed well in our testing and provided a stable platform for us. There is frankly a ton of customization that can be done to this motherboard and that is very welcome.

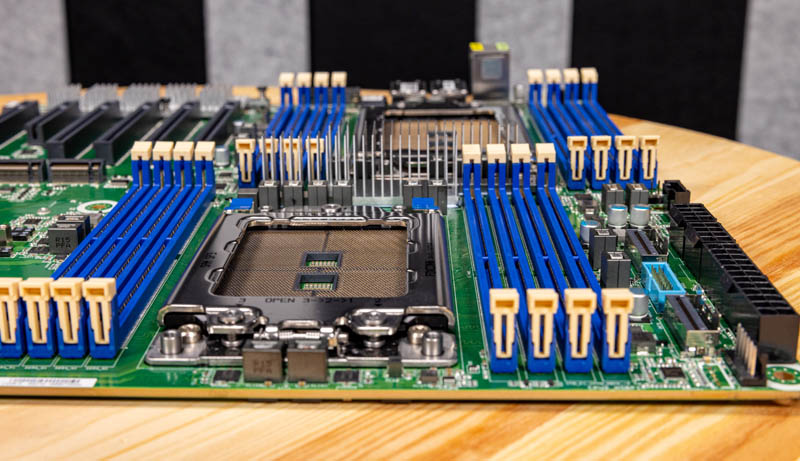

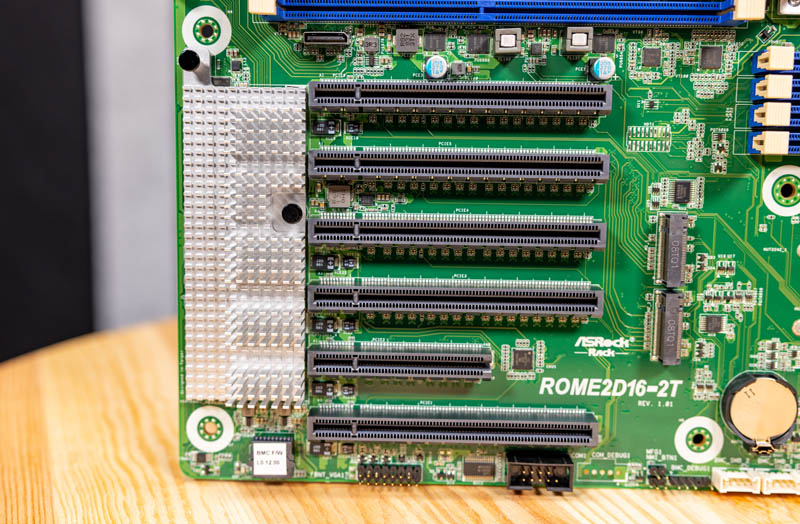

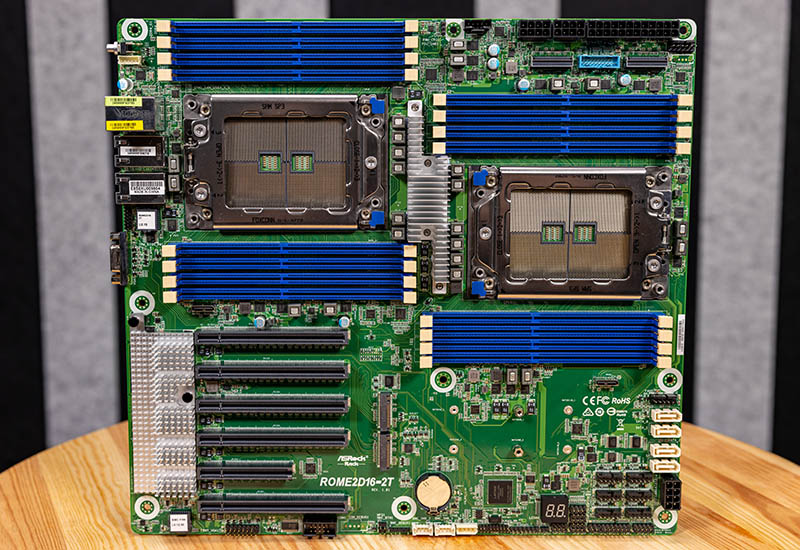

Even at a 305mm x 330mm EEB size, the motherboard is still quite packed with features. That means that one does need to do a bit more looking through the planned components to ensure they will fit. For example, one may need different length OCuLink cables. Some PCIe slots can be obstructed by either DIMM slots or large NVMe heatsinks. Those are just some of the challenges that motherboard designers face trying to fit modern server platforms into smaller form factors like this EEB motherboard.

Still, for many applications, the ability to move to a PCIe Gen4 platform with up to 128 cores and 4TB of memory in a standard motherboard form factor is going to be a big deal. Many of the systems being upgraded these days are from the early Skylake Xeon era or the Xeon E5 era and were put in custom chassis for specific markets. Having a platform that can drop into a familiar chassis while providing an enormous capacity upgrade in both compute and I/O is a major advantage.

Overall, ASRock Rack did a great job balancing the limitations of a 305mm x 330mm form factor with fitting a ton of functionality into the platform. There were even small nice-to-see features such as the dual 10Gbase-T networking onboard. This was an overall solid platform for us in our testing and that is what one wants in a server motherboard.

Did I understand that this motherboard uses the infinity fabric interconnects between sockets which since others use for interconnects? If so, is there a way of measuring the performance difference between such systems?

Yikes, my question was made difficult to understand by the gesture typing typos. Here’s another go at it:

Did I understand that this motherboard uses three Infinity Fabric interconnects between sockets, whereas others use four interconnects? If so, is there a way of measuring the performance difference between such systems?

This will really be a corner case to measure. You need to have a workload that needs to traverse these links consistently in order to see any difference.

Something like a GPU or a bunch of NVMe disks connected to the other CPU socket.

If you consider how many PCIe lanes are in these interconnects you will notice that the amount of data that needs to be pushed through is really astounding.

Anyway, if you want to see the differences, you may want to look up the Dell R7525.

There might be a chance that it was tested on spec.org in the config without xGMI (aka 3 IF links). You may then compare it with HPE or Lenovo, which are using NVMe switches.

I am looking to purchase the ROME2D16-2T, quantity 30.

What is the ETA and price?

Can deliver this ROME2D16-2T for a very sharp price from The Netherlands.

Hello Patrick, I am trying to find the specs of the power supply you used in your test bed for this MB?

TIA, JimL

How are the VRM temps? I can get this board and 7702 EPYCs for a good price, but those phases look really basic, well… like on all the EPYC boards. I don't want to run some exotic water cooling or jet engine fans.