SlimSAS and Oculink

Two small features that may have been visible earlier in this review are scattered across the motherboard: Oculink and SlimSAS connectors for SATA and NVMe expansion.

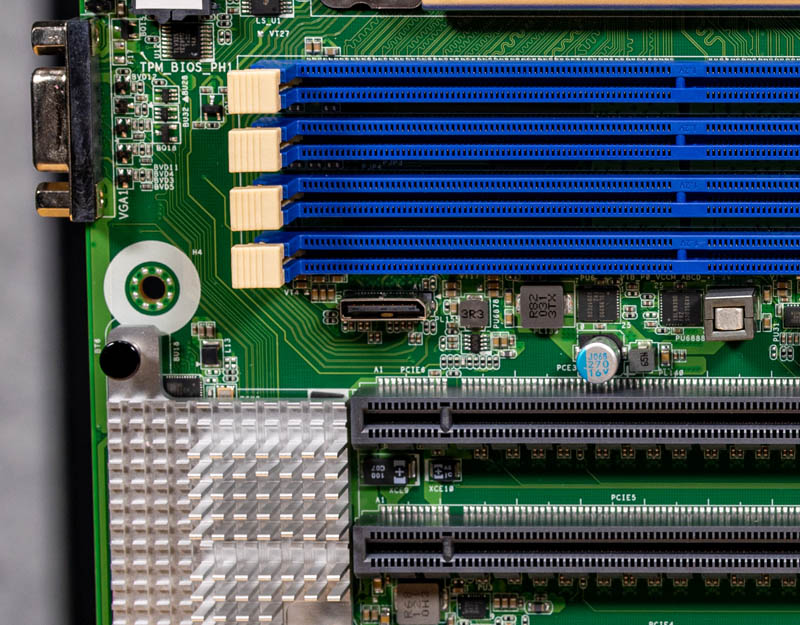

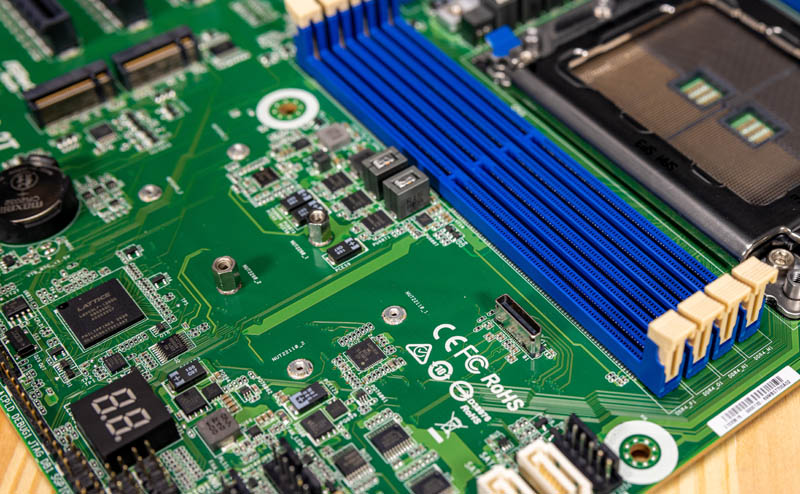

One of these Oculink ports sits between the DIMM slots and the PCIe slots. It can run as either 4x SATA or a PCIe Gen4 x4 link. Cable routing will need special attention here, especially if a large PCIe card is used.

The second Oculink port is just below the other set of DIMMs. In practice, this means one may need Oculink cables of significantly different lengths if, for example, both ports are routed to the same front storage backplane.

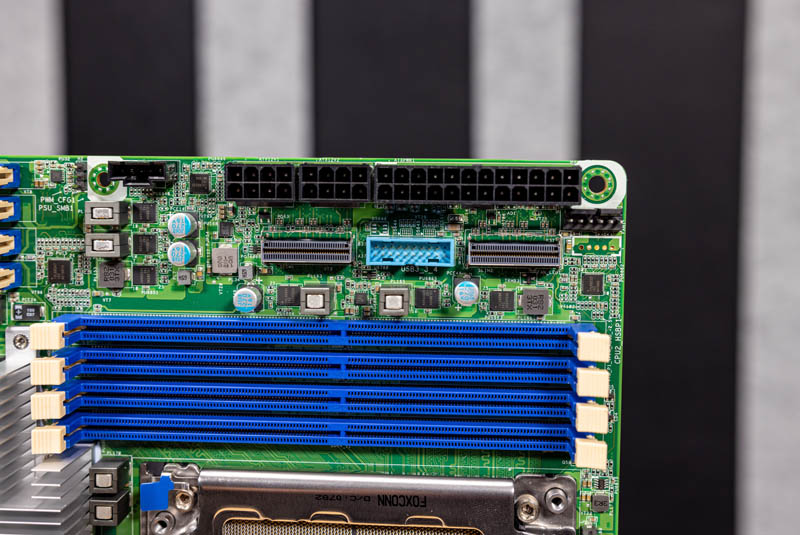

There are also two Slimline (SlimSAS) x8 ports. Each gets a PCIe Gen4 x8 link, and one of them can also support 8x SATA devices.

Overall, these connectors provide additional SATA ports as well as PCIe lanes for NVMe SSDs directly from the motherboard, without needing PCIe risers. Using them will, however, require several different types of cables.
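The connector widths above can be turned into a quick lane and bandwidth budget. This is a minimal Python sketch that assumes all four ports run in PCIe mode, that each NVMe SSD uses x4 (so each SlimSAS x8 port is assumed to break out into two drives), and that only PCIe Gen4 line-rate math applies; real drive throughput will be lower. The connector names are labels chosen for the example.

```python
# PCIe 4.0 runs at 16 GT/s per lane with 128b/130b line encoding.
GEN4_GT_PER_S = 16
ENCODING_EFFICIENCY = 128 / 130

# Onboard storage connectors in PCIe (NVMe) mode; labels are illustrative.
CONNECTORS = {
    "Oculink #1": 4,
    "Oculink #2": 4,
    "SlimSAS #1": 8,
    "SlimSAS #2": 8,
}


def lane_gbytes_per_s() -> float:
    """Approximate payload bandwidth of one Gen4 lane, per direction, in GB/s."""
    return GEN4_GT_PER_S * ENCODING_EFFICIENCY / 8


def summarize(connectors: dict[str, int], lanes_per_ssd: int = 4) -> dict[str, float]:
    """Total lanes, number of x4 NVMe drives, and aggregate line-rate bandwidth."""
    total_lanes = sum(connectors.values())
    # Assumption: an x8 port breaks out into two x4 drives.
    drives = sum(width // lanes_per_ssd for width in connectors.values())
    return {
        "total_lanes": total_lanes,
        "x4_nvme_drives": drives,
        "aggregate_gbytes_per_s": round(total_lanes * lane_gbytes_per_s(), 1),
    }


if __name__ == "__main__":
    for name, width in CONNECTORS.items():
        print(f"{name}: x{width}, ~{width * lane_gbytes_per_s():.2f} GB/s per direction")
    print(summarize(CONNECTORS))
```

Under these assumptions, each Oculink port works out to roughly 7.88 GB/s and each SlimSAS port to roughly 15.75 GB/s per direction, for 24 lanes and six x4 NVMe drives in total.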

ASRock Rack ROME2D16-2T Topology

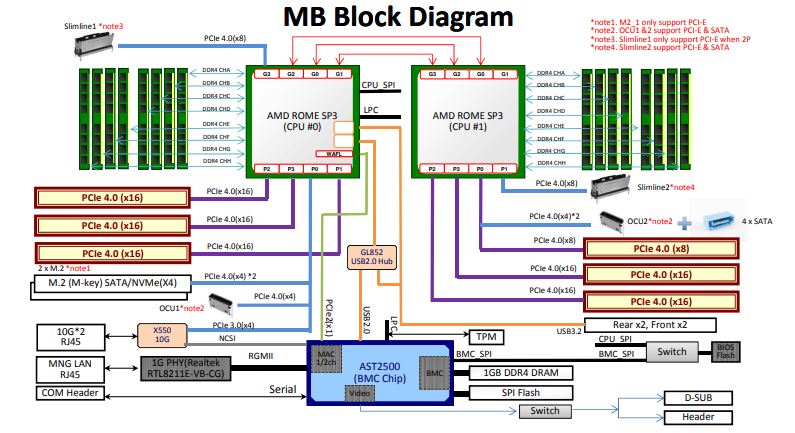

In a dual-socket system like this one, the block diagram is especially important.

One can see that the PCIe lanes, NICs, and various storage connectors are split between the two CPUs, and we have ~128 lanes of I/O exposed. The diagram also shows that ASRock Rack uses only three of the four possible Infinity Fabric (xGMI) links between the two CPUs, which frees up lanes to get all of this I/O onto the motherboard.
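The trade-off behind using three links can be shown with simple lane arithmetic. The Python sketch below assumes 128 lanes per EPYC 7002 socket and that each xGMI link consumes x16 on each socket; under those assumptions, a four-link layout leaves 128 lanes for I/O and a three-link layout leaves 160.

```python
# Assumptions for this sketch: each EPYC 7002 socket has 128 SerDes lanes,
# and each xGMI (Infinity Fabric) link between sockets uses x16 per socket.
LANES_PER_SOCKET = 128
LANES_PER_XGMI_LINK = 16


def usable_lanes_2p(xgmi_links: int) -> int:
    """Lanes left for PCIe/SATA I/O on a dual-socket board."""
    if xgmi_links not in (3, 4):
        # Only the two layouts discussed in this article are modeled.
        raise ValueError("expected 3 or 4 xGMI links")
    per_socket = LANES_PER_SOCKET - LANES_PER_XGMI_LINK * xgmi_links
    return 2 * per_socket


if __name__ == "__main__":
    for links in (4, 3):
        print(f"{links} xGMI links -> {usable_lanes_2p(links)} lanes for I/O")
    freed = usable_lanes_2p(3) - usable_lanes_2p(4)
    print(f"Dropping one link frees {freed} lanes across both sockets")
```

The cost is inter-socket bandwidth: one quarter of the links that would otherwise carry socket-to-socket traffic are given over to I/O.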

ASRock Rack ROME2D16-2T Management

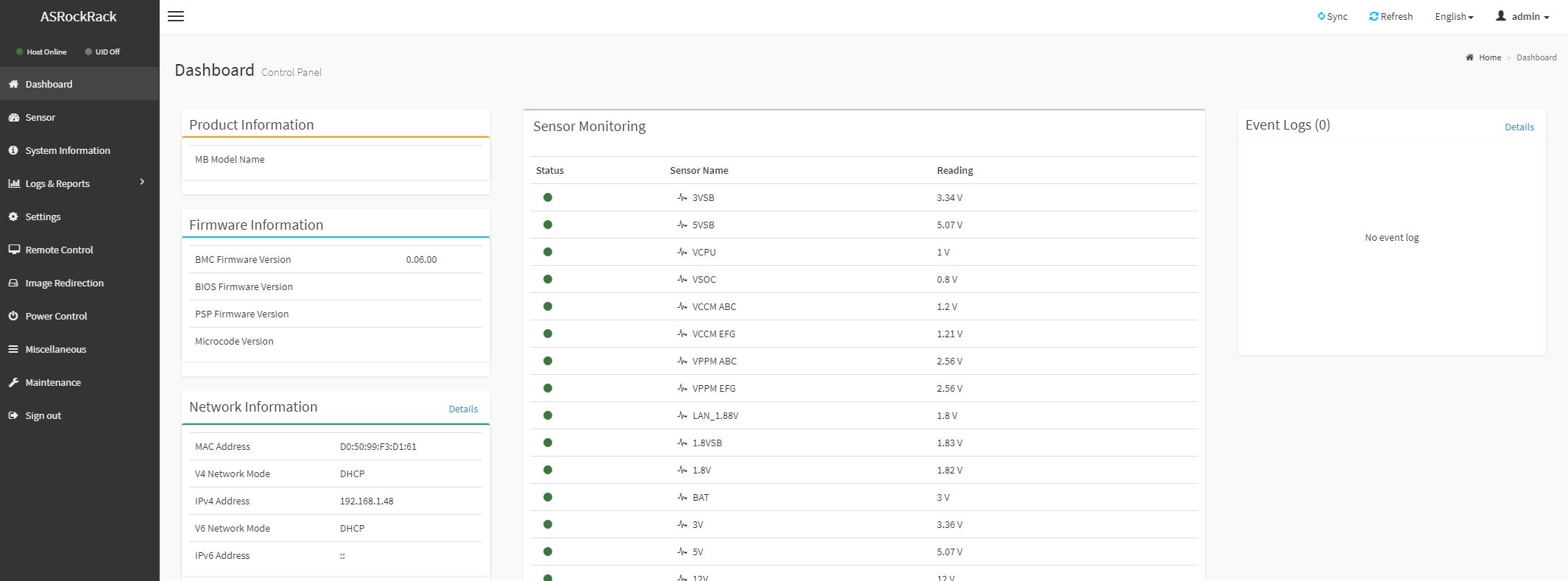

We have gone over ASRock Rack's ASPEED AST2500-based management a few times already, but here is the general overview. In our hardware overview, we showed the out-of-band management port. This port provides OOB management features such as IPMI and also gives access to a web management interface. ASRock Rack appears to be using a lightly skinned MegaRAC SP-X interface, a more modern HTML5 UI that behaves more like today's web pages and less like pages from a decade ago. We like this change.
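Because the management port supports IPMI, standard tools such as ipmitool should also be able to reach the BMC over the network, alongside the web UI. A minimal Python sketch, assuming ipmitool is installed on the admin machine and IPMI over LAN is enabled on the BMC; the hostname and user name are hypothetical, and the `__main__` block only prints the commands rather than running them.

```python
import os
import shlex
import subprocess


def ipmitool_cmd(host: str, user: str, *args: str) -> list[str]:
    """Build an IPMI-over-LAN (lanplus) ipmitool command for a BMC.

    -E tells ipmitool to read the password from the IPMI_PASSWORD
    environment variable, so it is not passed on the command line.
    """
    return ["ipmitool", "-I", "lanplus", "-H", host, "-U", user, "-E", *args]


def bmc_query(host: str, user: str, *args: str) -> str:
    """Run an ipmitool command against the BMC and return its stdout."""
    if "IPMI_PASSWORD" not in os.environ:
        raise RuntimeError("set IPMI_PASSWORD before querying the BMC")
    result = subprocess.run(
        ipmitool_cmd(host, user, *args), capture_output=True, text=True, check=True
    )
    return result.stdout


if __name__ == "__main__":
    # Hypothetical BMC hostname and user; this only prints the commands.
    host, user = "bmc.example.internal", "admin"
    for args in (("chassis", "power", "status"), ("sdr", "type", "Temperature")):
        print(shlex.join(ipmitool_cmd(host, user, *args)))
```

Reading the password from the environment keeps it out of shell history and the process list, which matters when such scripts run from shared admin hosts.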

Going through the options, the ASRock Rack solution appears to follow the SP-X package very closely. As a result, we see mostly the standard set of SP-X features and options.

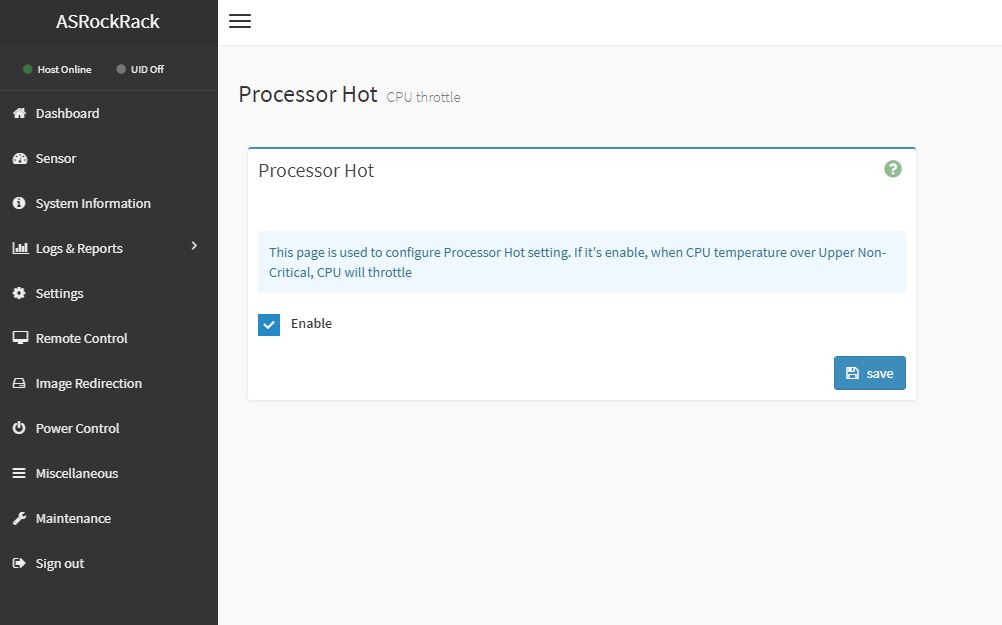

There was one feature that we particularly liked: a “Processor Hot” option in the firmware that allows the system to throttle if an overheat condition is detected.
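The Processor Hot option handles throttling inside the platform. From the outside, one way to watch thermal headroom is to poll the BMC's sensor table. This Python sketch assumes the pipe-separated layout that `ipmitool sensor` prints (name, reading, unit, status, then lower and upper thresholds, with upper-critical in the ninth column); the sensor names and values in `SAMPLE` are invented for illustration and are not readings from this board.

```python
def parse_temperatures(sensor_output: str) -> list[dict]:
    """Parse temperature rows from `ipmitool sensor` output.

    Columns assumed: name | reading | unit | status | LNR | LC | LNC | UNC | UC | UNR
    """
    rows = []
    for line in sensor_output.splitlines():
        cols = [c.strip() for c in line.split("|")]
        if len(cols) < 10 or cols[2] != "degrees C":
            continue  # skip fans, voltages, and malformed lines
        try:
            reading = float(cols[1])
        except ValueError:
            continue  # "na" when the sensor has no current reading
        upper_critical = None if cols[8] == "na" else float(cols[8])
        rows.append({"name": cols[0], "reading": reading, "upper_critical": upper_critical})
    return rows


def near_limit(rows: list[dict], margin_c: float = 10.0) -> list[str]:
    """Names of sensors within margin_c degrees of their upper-critical threshold."""
    return [
        r["name"]
        for r in rows
        if r["upper_critical"] is not None and r["upper_critical"] - r["reading"] <= margin_c
    ]


# Made-up sample lines for illustration, not readings from this board.
SAMPLE = """\
CPU1_TEMP  | 62.000   | degrees C | ok | na | na      | na      | 90.000 | 95.000 | na
CPU2_TEMP  | 88.000   | degrees C | ok | na | na      | na      | 90.000 | 95.000 | na
FAN1       | 3200.000 | RPM       | ok | na | 300.000 | 500.000 | na     | na     | na
"""

if __name__ == "__main__":
    print(near_limit(parse_temperatures(SAMPLE)))  # ['CPU2_TEMP']
```

The same approach would apply to VRM or other board temperature sensors, provided the BMC exposes them with upper thresholds.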

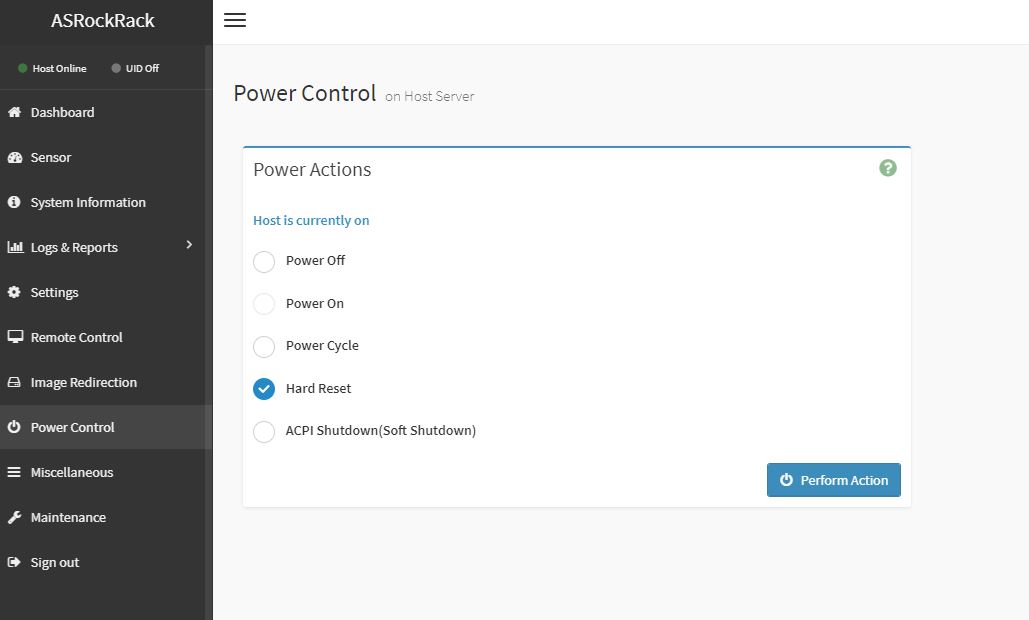

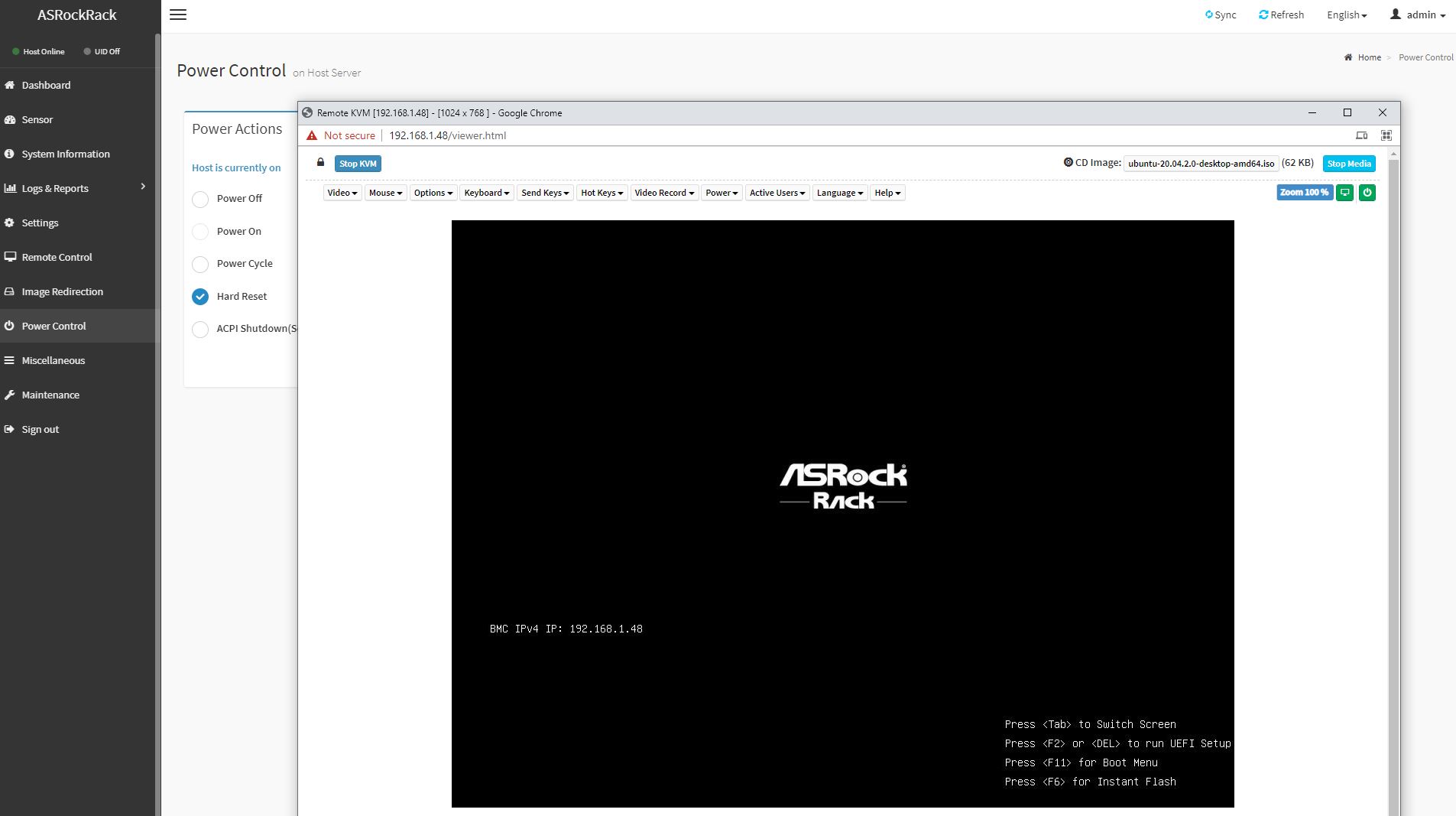

One nice feature is that we get a modern HTML5 iKVM solution. Some other vendors have implemented HTML5 iKVM clients but did not include virtual media support in them at the outset. ASRock Rack's client has virtual media support, as well as power on/off controls directly from the window.

Many large system vendors such as Dell EMC, HPE, and Lenovo charge for iKVM functionality, yet it is an essential tool for remote system administration these days. ASRock Rack including it as a standard feature means customers have one less license to worry about.

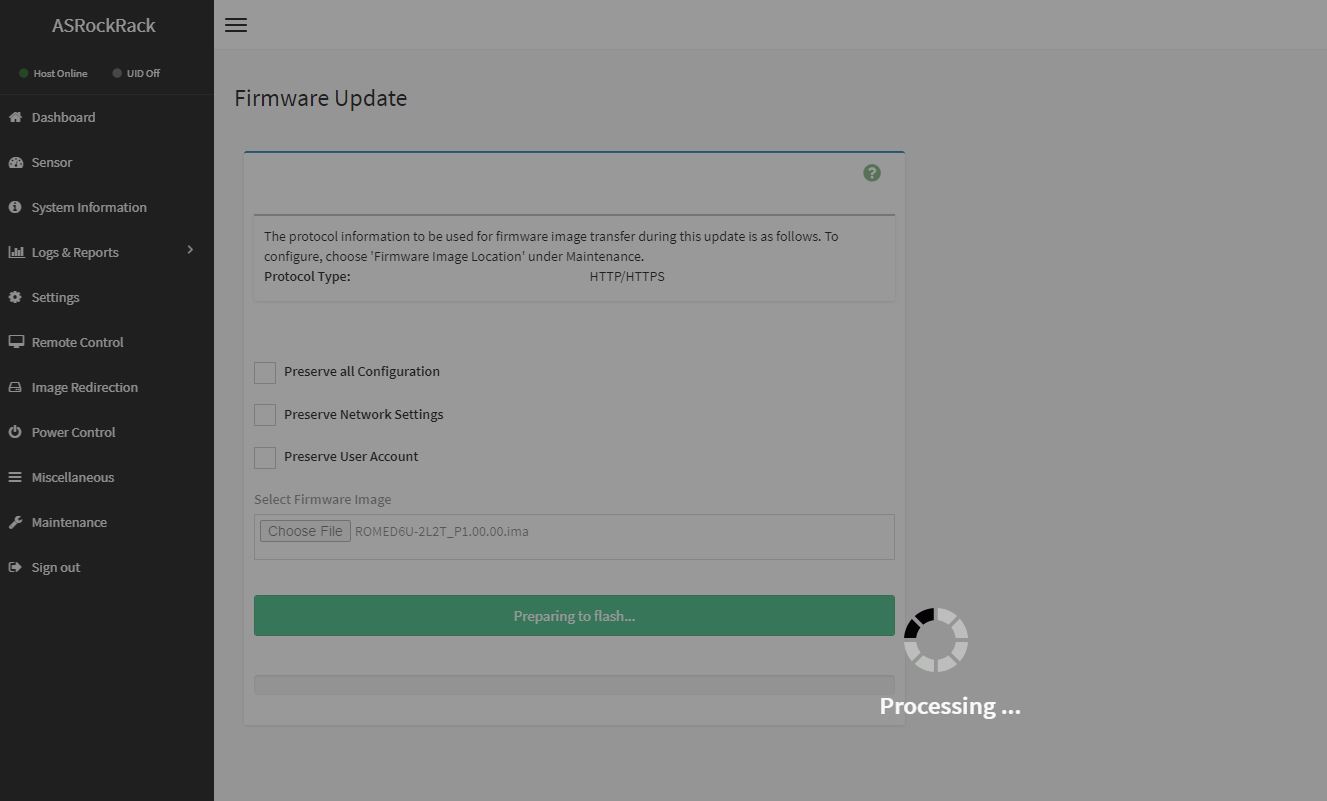

Beyond the iKVM functionality, there are also remote firmware updates enabled on the platform. You can update the BIOS and BMC firmware directly from the web interface. This is something that Supermicro charges extra for.

Overall, this is a fairly standard set of out-of-band management features.

Next, let us get to testing and our final words.

Did I understand that this motherboard uses the infinity fabric interconnects between sockets which since others use for interconnects? If so, is there a way of measuring the performance difference between such systems?

Yikes, my question was made difficult to understand by the gesture typing typos. Here’s another go at it:

Did I understand correctly that this motherboard uses three Infinity Fabric interconnects between sockets, while others use four? If so, is there a way of measuring the performance difference between such systems?

This will really be a corner case to measure. You need a workload that consistently traverses these links in order to see any difference.

Something like a GPU or a bunch of NVMe disks connected to the other CPU socket.

If you consider how many PCIe lanes are in these interconnects, you will notice that the amount of data that would need to be pushed through them is really astounding.

Anyway, if you want to see the differences, you may want to look up the Dell R7525.

There is a chance that they tested it on SPEC.org in the “without xGMI” configuration (aka three IF links). You could then compare it with HPE or Lenovo systems, which use NVMe switches.
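For the NVMe case, one rough approach on Linux is to run the same read-only fio job twice, once bound to the socket the drive is attached to and once bound to the other socket, and compare throughput. A Python sketch, assuming numactl and fio are installed and one NUMA node per socket; the device path and node numbers are hypothetical. This measures the cost of crossing the socket interconnect on one machine; comparing three-link and four-link platforms would mean repeating it on both.

```python
import shlex
from pathlib import Path


def nvme_numa_node(ctrl: str = "nvme0") -> int:
    """NUMA node of an NVMe controller's PCIe function, from sysfs (-1 = unknown)."""
    return int(Path(f"/sys/class/nvme/{ctrl}/device/numa_node").read_text())


def fio_pinned(device: str, node: int, runtime_s: int = 30) -> list[str]:
    """Read-only sequential fio job with CPUs and memory bound to one NUMA node."""
    return [
        "numactl", f"--cpunodebind={node}", f"--membind={node}",
        "fio", "--name=numa-test", f"--filename={device}", "--readonly",
        "--rw=read", "--bs=1M", "--direct=1", "--ioengine=libaio",
        "--iodepth=32", f"--runtime={runtime_s}", "--time_based",
    ]


def slowdown_pct(local: float, remote: float) -> float:
    """How much slower the remote-socket run was, in percent (same units for both)."""
    return (local - remote) / local * 100


if __name__ == "__main__":
    # Hypothetical layout: drive attached to node 0, other socket is node 1.
    # On a real system, nvme_numa_node() reports which node the drive is on.
    device = "/dev/nvme0n1"
    for label, node in (("local", 0), ("remote", 1)):
        print(label, shlex.join(fio_pinned(device, node)))
```

A single drive will rarely saturate an inter-socket link, so a visible difference would likely need many drives or a GPU running concurrently from the remote socket.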

I am looking to purchase 30 units of the ROME2D16-2T.

What is the ETA and price?

We can deliver the ROME2D16-2T at a very sharp price from the Netherlands.

Hello Patrick, I am trying to find the specs of the power supply you used in your test bed for this motherboard.

TIA, JimL

How are the VRM temps? I can get this board and EPYC 7702 CPUs for a good price, but those power phases look really basic... like on all the EPYC boards. I don't want to run exotic water cooling or jet-engine fans.