ASRock Rack 6U8X-EGS2 H200 NVIDIA HGX H200 Assembly

The NVIDIA HGX H200 assembly sits on a tray that pulls out of the front of the chassis. Between the compute silicon, the HBM packages, the thermal sensors, and so forth, GPUs can fail, and there are eight of them, so the HGX tray is a service item. Unlike in a Dell PowerEdge system, you do not have to remove 17-20 components and pull the entire system at least partially out of the rack to service these. Instead, the tray slides right out.

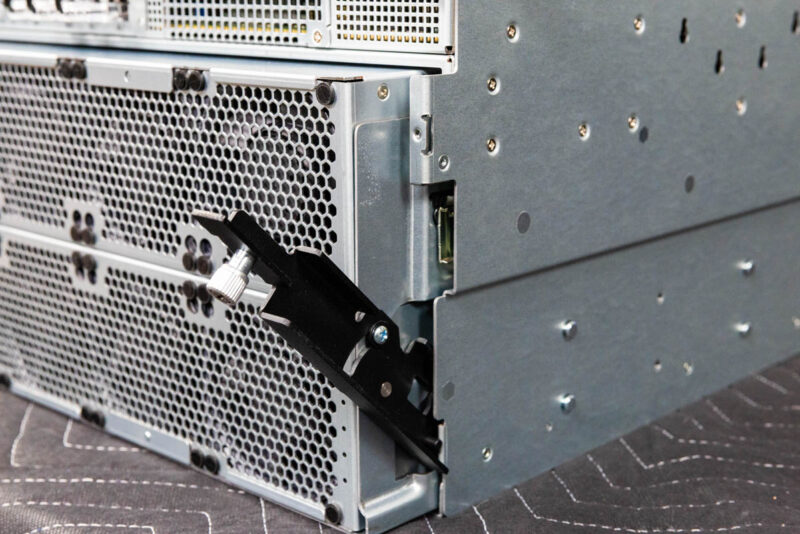

There are latches on either side of the front of the chassis.

The design here is really cool. Instead of designing a custom sliding mechanism, ASRock Rack simply uses a standard King Slide rail set mounted inside the main server chassis, which is a lot of fun to see. It works exactly like using those rails at the server level, except that the outer rails are installed in the server instead of in a rack.

Once out, we can see the NVIDIA HGX H200 assembly in its tray.

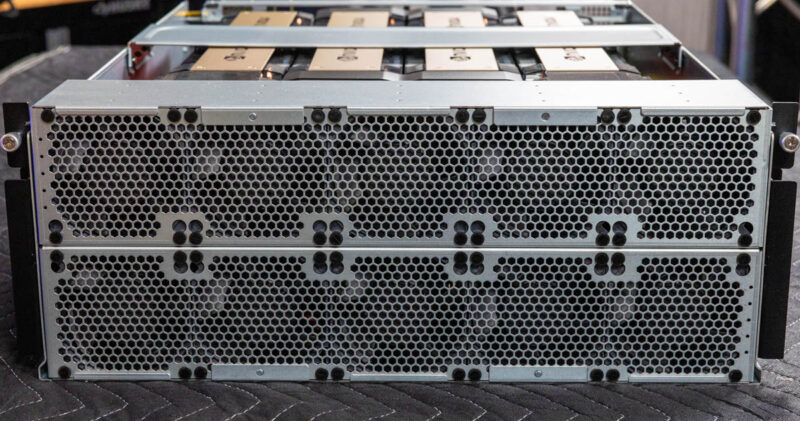

The front of the assembly is a giant fan wall. Fans are generally reliable, but these do take a moment to swap. In most hyperscale data centers with huge numbers of machines, when we ask how many spare fans they keep, the most common answer is "a few," since fans fail so rarely. Still, it would be nice to see these become front hot-swap items in the future.

Those fans have an important job: cooling the NVIDIA HGX H200 8-GPU assembly.

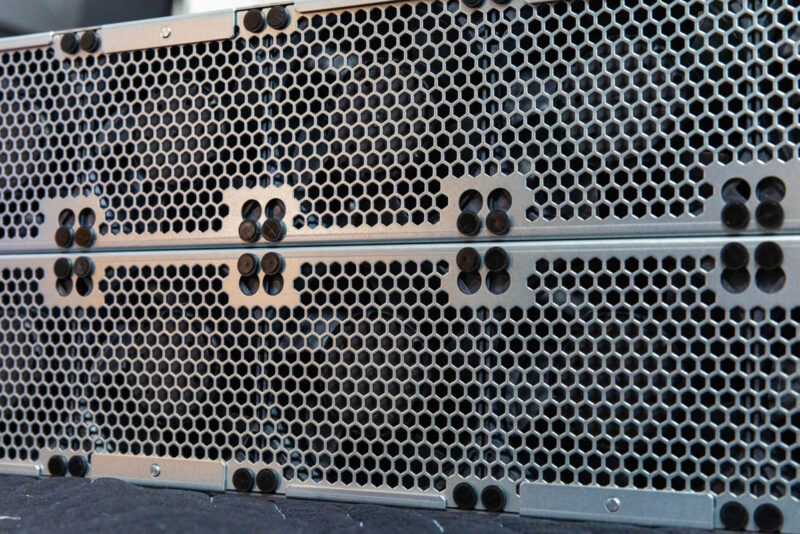

The first components in the airflow path are the four NVIDIA NVLink Switches. In the B200 generation, the NVLink Switch count drops from four to two, and the switches move to the middle of the board, between the B200 GPUs. In the NVIDIA Hopper generation, they sit in front.

Here is a quick look at the overall GPU tray with those fans and NVLink switch heatsinks in front and then the NVIDIA H200 GPUs behind.

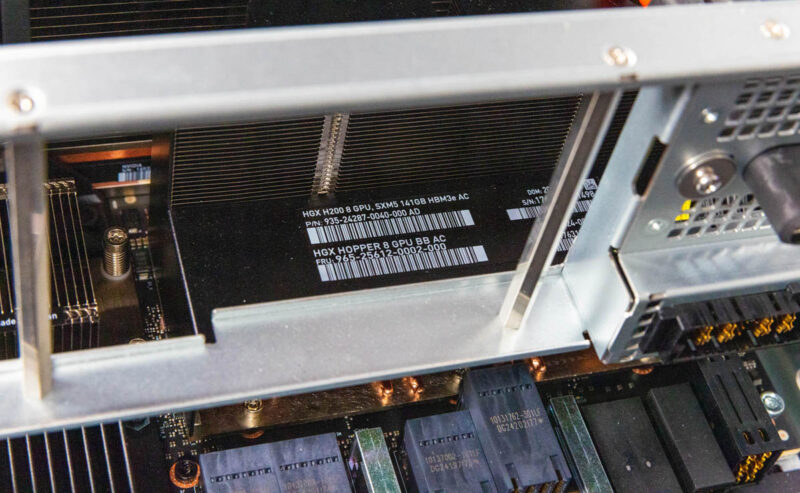

Each of these is an NVIDIA H200 141GB HBM3e GPU. With eight GPUs, that is 1.128TB of HBM3e memory.
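The total is simple multiplication across the eight GPUs, assuming decimal units (1TB = 1,000GB):

```latex
$$8 \times 141\ \text{GB} = 1{,}128\ \text{GB} = 1.128\ \text{TB}$$
```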

The label confirms that this is an NVIDIA HGX H200 8-GPU SXM5 141GB HBM3e AC (air-cooled) assembly.

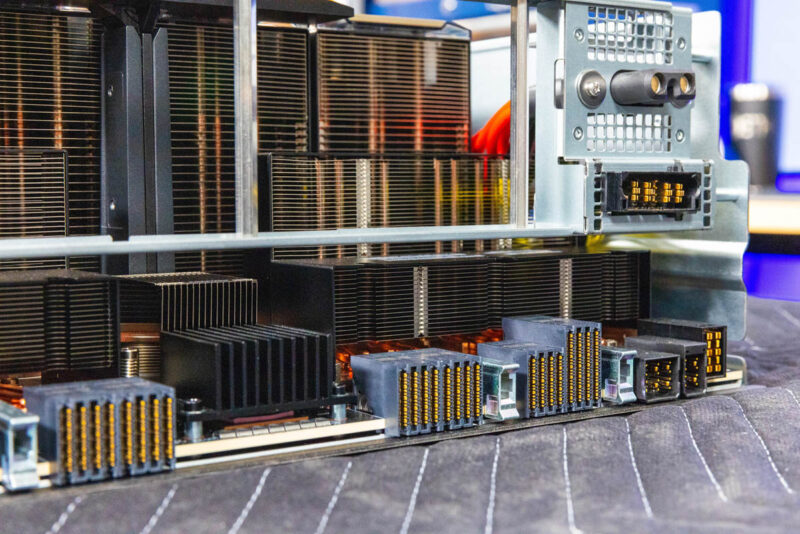

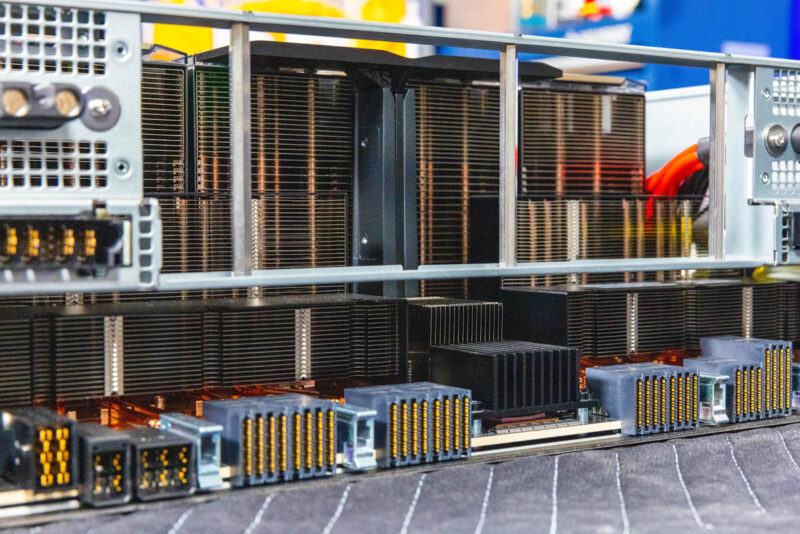

In the back, we have the mating connectors for the power and data feeds to this board.

We also have a few additional heatsinks for the Astera Labs PCIe retimers onboard.

Bringing this full circle, here is where these connectors mate inside the system.

That PCIe switch board is extremely important for these servers. Next, let us get to the rear of the server.
