Every time we review an ASRock Rack server, there is something cool. The ASRock Rack 6U8X-EGS2 H200 is no exception. This is the company’s new NVIDIA HGX H200 8-GPU platform and it is very neat indeed. It is designed to take on the AI training and inference market, and we will get inside the system to see what makes this server different. ASRock Rack has been designing very different GPU compute platforms ever since we reviewed the ASRock Rack 3U8G-C612 back in 2015 when PCIe GPUs were the norm and we were told by AMD’s FAE that “nobody doing any serious work would use Blender.” Since then, we have reviewed dozens of GPU servers, and ASRock Rack has continued to offer its own spin with each generation. Now, we have the latest NVIDIA H200 generation for review.

This review has around three times as many images as our standard server review. As a result, we are breaking up our hardware overview sections slightly differently. Of course, we did not buy this system. ASRock Rack is loaning us the system, so we need to say this is sponsored. We cannot buy systems that cost this much for reviews.

ASRock Rack 6U8X-EGS2 H200 Front and Interior Hardware Overview

We are going to start our journey at the front of this 6U server. On top, we have drive bays, in the middle is the front I/O, with a twist, and on the bottom, we have the NVIDIA HGX H200 8 GPU tray. We will first cover the front and internals, then the NVIDIA HGX H200 8 GPU tray, and then we will go through the rear, building the system from the various components.

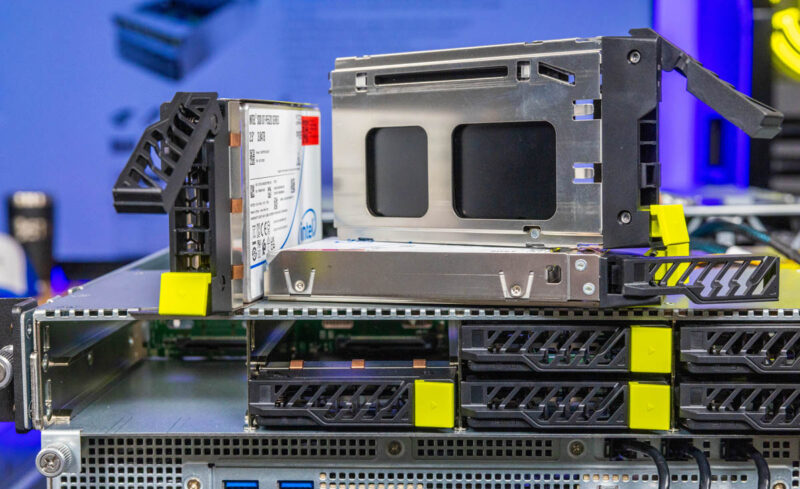

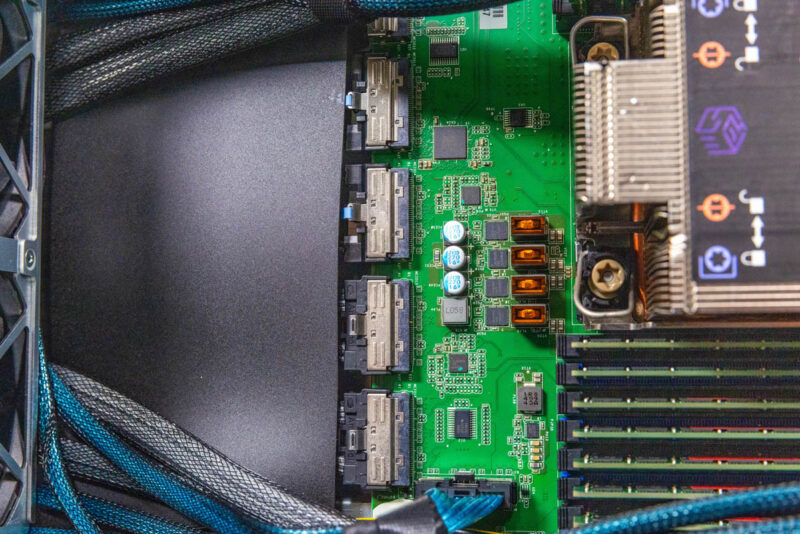

Starting with the top drive bays, there are twelve total. All twelve can be PCIe Gen5, but four can alternatively be configured as SATA for boot drives.

Here is a quick look at the storage backplane in front of the CPUs. Under the storage backplane we also have the Intel C741 PCH, and two M.2 SSDs for boot devices.

Here is another look. Something wild about these 8 GPU systems in general is that cables need to traverse long distances with PCIe signals due to the system architecture.

Below the twelve storage bays is a front I/O area with a little ASRock Rack flair.

First, we have one of the best front I/O blocks with four USB ports and a VGA port. Many GPU servers offer front I/O given how much cooler it is in the cold aisle of GPU racks. The difference here is that with four USB ports, you can plug in a keyboard, mouse, and a USB 3 drive, for example, without resorting to using a hub. That may not sound exciting, but the day you run out of USB ports is one that you will wish you had this feature.

There are also physical power and reset buttons along with indicator LEDs.

Then we get to some more fun ASRock Rack design. What if you want to have your two 1GbE ports for things like PXE boot on the front, or perhaps the management port on the front? Or what if you want to have them all on the rear? What if you want to mix and match? ASRock Rack has a simple solution: the ports are all in front, but there are Ethernet cables that bring the signal to the rear. In the photo below, you can see the rear configuration, but for the front configuration, just pull out a cable. We will show you how these connect later in the rear.

Underneath this is the NVIDIA HGX H200 8 GPU tray. This is one we will dedicate an entire section to on the next page.

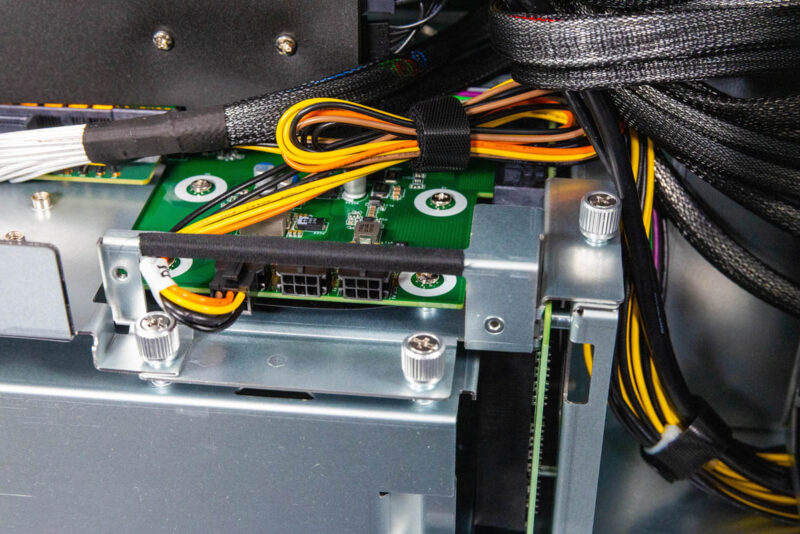

That assembly plugs into a PCIe switch board that sits at the bottom rear of the system. ASRock Rack has also added blind mate power connectors.

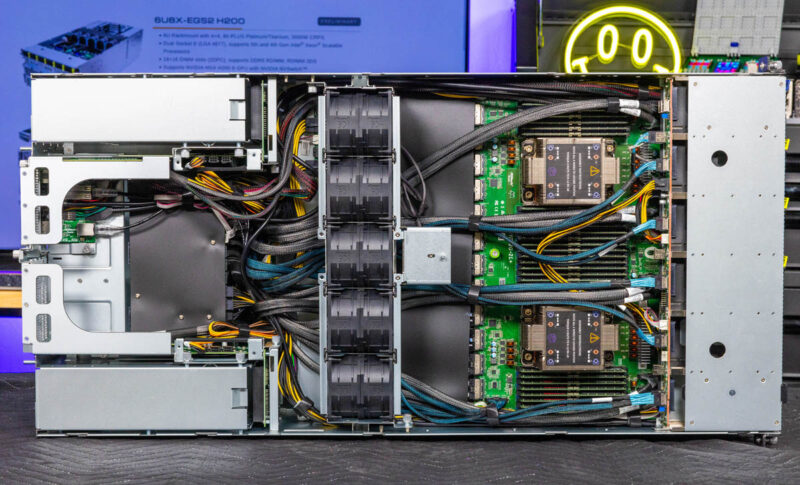

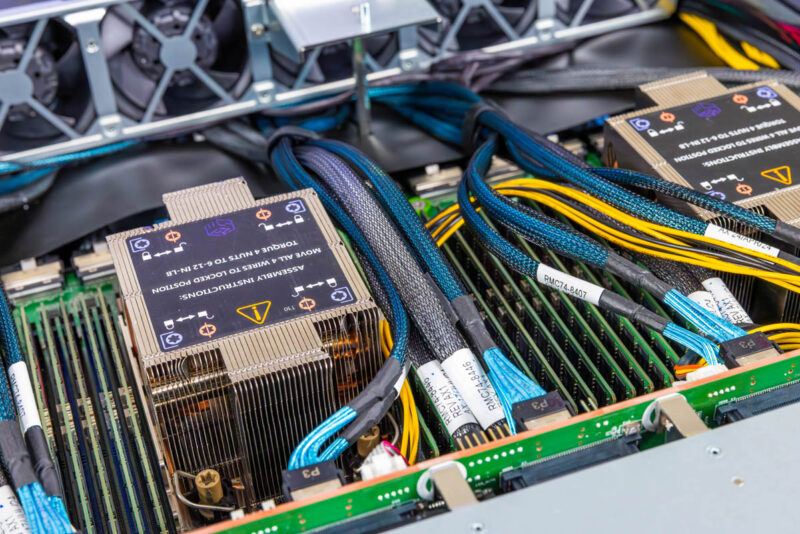

Here is a quick look at this setup and all of the cables involved. Under the middle heatsink are the PCIe switches, and all of those MCIO cables connect various devices except the NVIDIA GPUs and the main East-West NICs, which we will show in our rear component build section.

The system contains the GPU and main NIC section in the bottom 4U, but the top is like a 2U server with storage in front, then the CPUs and memory, midplane fans, and then some I/O in the rear. We are going to work from right to left.

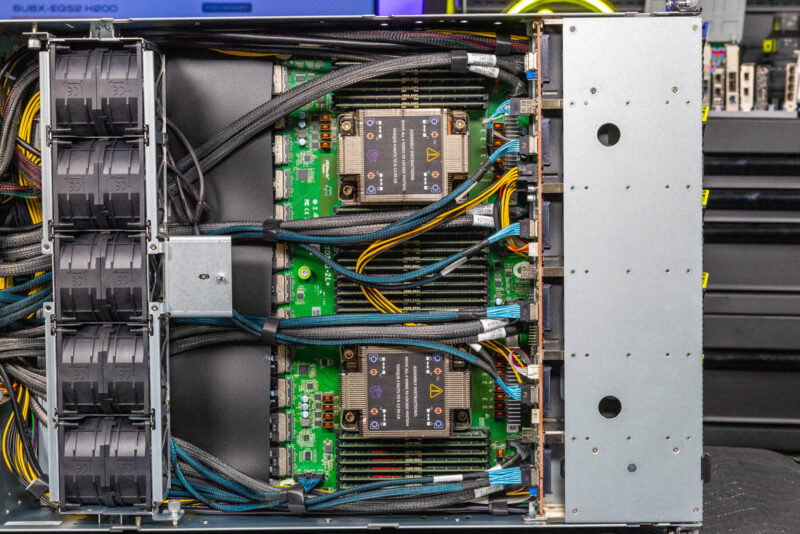

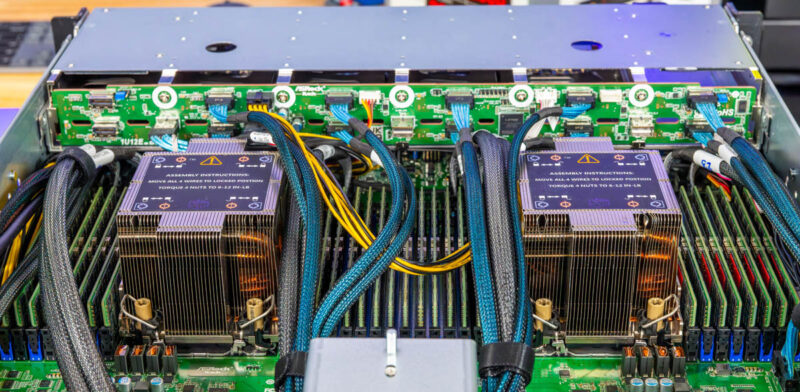

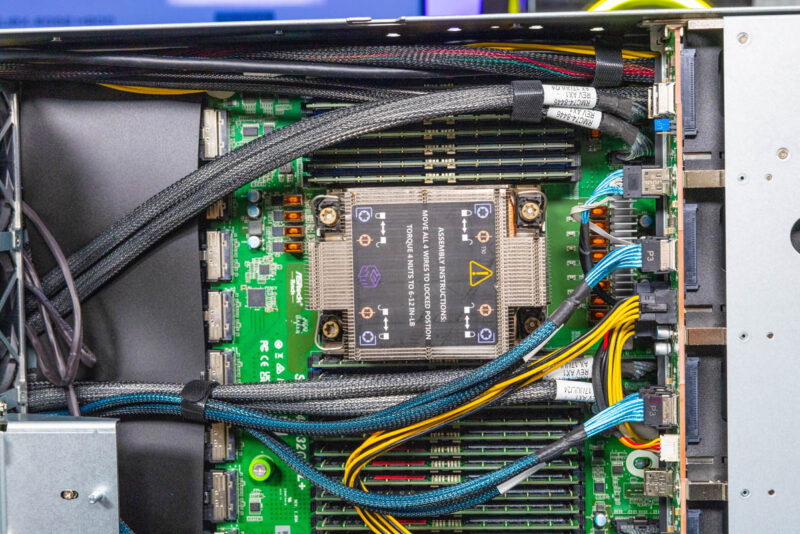

At the heart of this system are two Intel LGA 4677 sockets for 4th and 5th Generation Intel Xeon Scalable processors. Many of these systems will follow the NVIDIA spec and use Sapphire Rapids processors.

Each of the two Intel Xeon Scalable processors sits behind the storage backplane and has a simple airflow guide.

An advantage of these processors over CPUs like Granite Rapids-AP and AMD EPYC is that they have 8-channel 2DPC memory configurations. Practically, that means there are 16 DDR5 DIMMs per CPU and 32 DIMMs total. When you have over 1.1TB of HBM3e memory, 2TB of system RAM is not even a 2:1 ratio. Having more DIMM slots means more capacity without having to use higher capacity (and more costly) DIMMs.
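For those who want to check the memory math, here is a minimal sketch of the arithmetic. The socket, channel, DIMMs-per-channel, and GPU counts come from the text above. The 141GB of HBM3e per GPU is NVIDIA's published H200 figure, and the 64GB DIMM size is purely our assumption for reaching 2TB across 32 slots; neither is a statement about how this particular system was configured.

```python
# Counts taken from the review text.
SOCKETS = 2
CHANNELS_PER_SOCKET = 8
DIMMS_PER_CHANNEL = 2  # 2DPC
GPUS = 8

# Assumptions, not from the review text.
HBM_PER_GPU_GB = 141  # NVIDIA's published H200 HBM3e capacity
DIMM_SIZE_GB = 64     # hypothetical DIMM size that gives 2TB over 32 slots


def dimm_slots(sockets: int, channels: int, dimms_per_channel: int) -> int:
    """Total DIMM slots for a given socket and channel layout."""
    return sockets * channels * dimms_per_channel


slots = dimm_slots(SOCKETS, CHANNELS_PER_SOCKET, DIMMS_PER_CHANNEL)
hbm_total_gb = GPUS * HBM_PER_GPU_GB
system_total_gb = slots * DIMM_SIZE_GB
ratio = system_total_gb / hbm_total_gb

print(f"DIMM slots: {slots}")
print(f"Total HBM3e: {hbm_total_gb} GB")
print(f"Total system RAM: {system_total_gb} GB")
print(f"System RAM to HBM ratio: {ratio:.2f}:1")
```

With the same hypothetical 64GB DIMMs, calling `dimm_slots(2, 8, 1)` for a 1DPC layout gives 16 slots and 1TB of system RAM, which is less than the HBM3e capacity. That is the reason the extra slots matter here.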

Cables are tied into bundles, but they are everywhere here.

Getting to the DIMMs here was not perfectly clean since some cables routed over the DIMM slots, but there was enough play to make servicing the DIMMs not particularly challenging.

Behind the CPUs and memory, instead of rear I/O and perhaps an OCP NIC 3.0 slot, we get all MCIO connectors. This is a custom motherboard design to help minimize the main PCIe runs down to the PCIe switch, GPU, and NIC area at the bottom of the chassis.

In the top section, we have large dual fan modules moving air through the components.

In the rear of the system, we have risers that take MCIO cable inputs. Here is the right rear x16 riser. This would be for a NIC like the NVIDIA BlueField-3 DPU for our North-South network in an AI cluster.

There is also a little power distribution board since these risers may need power.

Here is the left rear riser, again with MCIO connections.

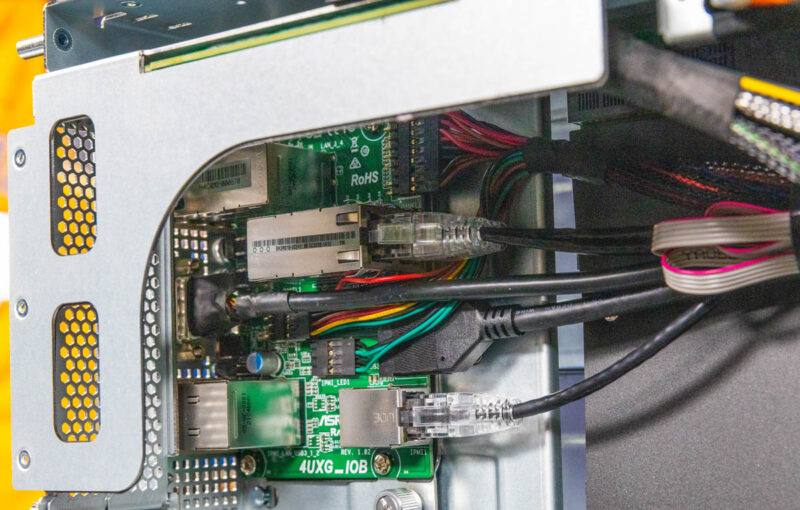

That sits just above the rear I/O board, the ASRock Rack 4UXG_IOB.

Next, let us take a look at the NVIDIA HGX H200 assembly.
