ASRock Rack 4U8G-TURIN2 Block Diagram

We could not find a block diagram for this platform, but we found one for the motherboard.

Really, this shows us what is going on in the system. We have our two AMD EPYC 9004/9005 CPUs. The rest of the system is dedicated to providing PCIe lanes over MCIO connectors. There are 10x PCIe Gen5 x16 roots routed to 20x MCIO x8 connectors. When we look inside the system, we can see 16 of these MCIO connectors being used for the GPUs and the other four being used for storage and front networking. That also means that the M.2 slots are on slower Gen3 x2 links.
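To make the lane math concrete, here is a quick sketch of the budget using the figures above. The variable names are ours, and the split of two MCIO x8 connectors per x16 GPU slot is an assumption based on the eight-GPU layout, not something the block diagram labels. The bandwidth helper uses the standard PCIe per-lane signaling rates (8 GT/s for Gen3, 32 GT/s for Gen5) with 128b/130b encoding, so the results are approximate one-direction figures before protocol overhead.

```python
# Lane budget sketch for the figures quoted in the review.
ROOTS = 10            # PCIe Gen5 x16 roots on the block diagram
LANES_PER_ROOT = 16
LANES_PER_MCIO = 8    # each MCIO connector carries an x8 link

total_lanes = ROOTS * LANES_PER_ROOT
mcio_connectors = total_lanes // LANES_PER_MCIO

GPU_MCIO = 16         # MCIO connectors cabled to the GPU slots
OTHER_MCIO = mcio_connectors - GPU_MCIO  # storage and front networking

# Assumption: two MCIO x8 connectors combine into one x16 GPU slot.
gpu_slots_x16 = (GPU_MCIO * LANES_PER_MCIO) // 16


def link_gb_per_s(gt_per_s: float, lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s with 128b/130b encoding."""
    return gt_per_s * lanes * (128 / 130) / 8


print(f"Total Gen5 lanes:  {total_lanes}")
print(f"MCIO x8 connectors: {mcio_connectors}")
print(f"x16 GPU slots:      {gpu_slots_x16}")
print(f"Other MCIO x8:      {OTHER_MCIO}")
print(f"Gen5 x16 link: ~{link_gb_per_s(32, 16):.1f} GB/s per direction")
print(f"Gen3 x2 link:  ~{link_gb_per_s(8, 2):.2f} GB/s per direction")
```

The gap between the last two numbers is why the M.2 slots are best thought of as boot storage rather than as part of the high-speed fabric.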

The 160-lane PCIe Gen5 solution is what makes this platform different. It used to be that having that many PCIe lanes would require at least two big PCIe switches. Now, all of the connectivity can go directly to the CPUs. Another impact is that, since Turin CPUs support CXL 2.0, this is actually one of the more interesting platforms if you just want to scale memory capacity and bandwidth by adding CXL Type-3 memory expansion devices. If you have PCIe switches in the architecture, then those need to support CXL, and most at this point do not.

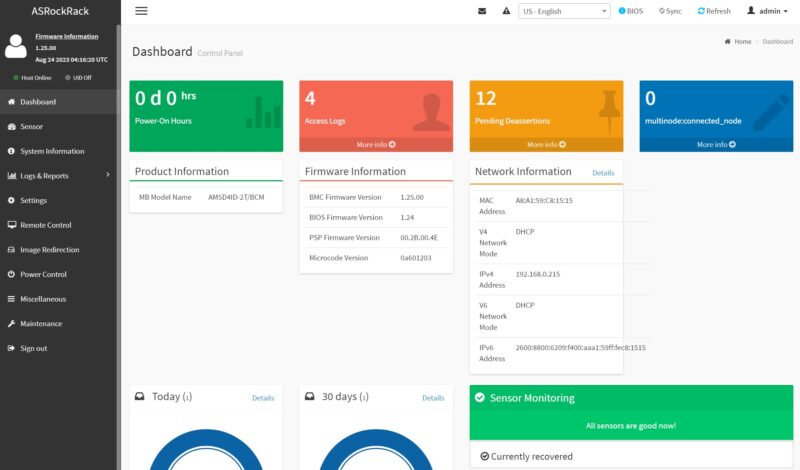

ASRock Rack 4U8G-TURIN2 Management

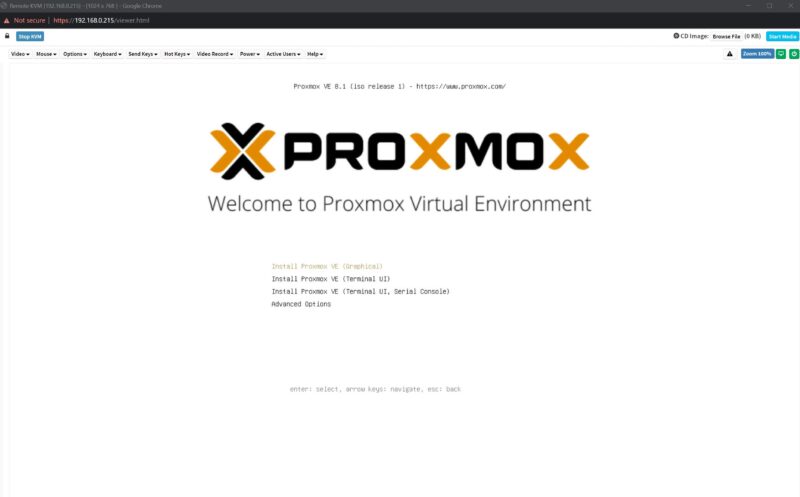

In terms of management, this system uses the ASPEED AST2600 BMC. Instead of going in-depth on this, since we just did that in the ASRock Rack AM5D4ID-2T/BCM review, we are just going to show the key features from that review. Logging in, we can see an ASRock Rack-skinned management interface. This is an industry-standard IPMI interface.

Included are features like HTML5 iKVM with remote media. Companies like Dell, HPE, and Lenovo charge a lot for iKVM functionality. Now, companies like Supermicro charge for remote media mountable via the HTML5 iKVM. This is a small feature, but one that is handy for many users and it is great that ASRock includes this with the board.

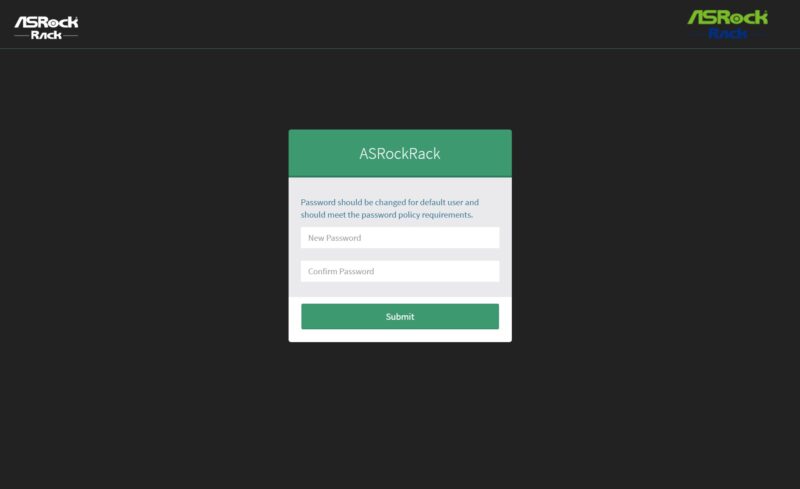

Another new feature with this generation is that the default username/password is admin/admin, but the interface immediately prompts for a change with some validation rules (e.g., you cannot just make “admin” the new password). This is done to comply with local regulations.

Next, let us get to the performance.

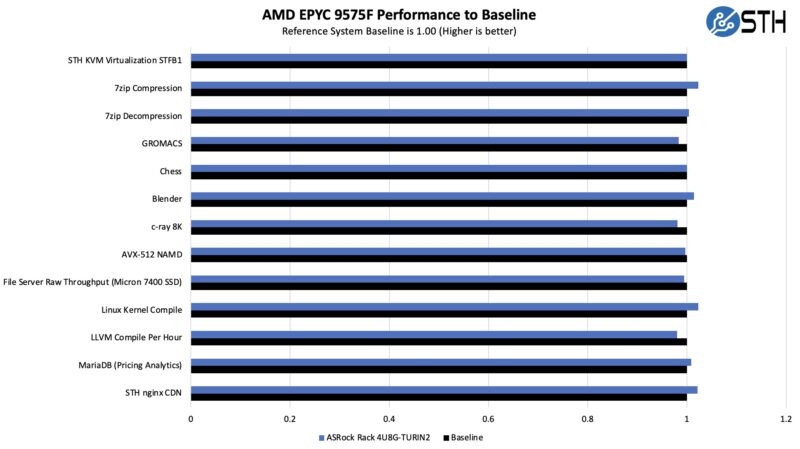

ASRock Rack 4U8G-TURIN2 Performance

With systems like these, performance generally comes down to cooling the big components. These are primarily CPUs, GPUs, and NICs (and now CXL Type-3 memory expansion modules), as the SSDs are generally at the front of the chassis in standard 2.5″ trays. As such, we used the AMD EPYC 9575F, which is the frequency-optimized 64-core part and a popular one in AI servers.

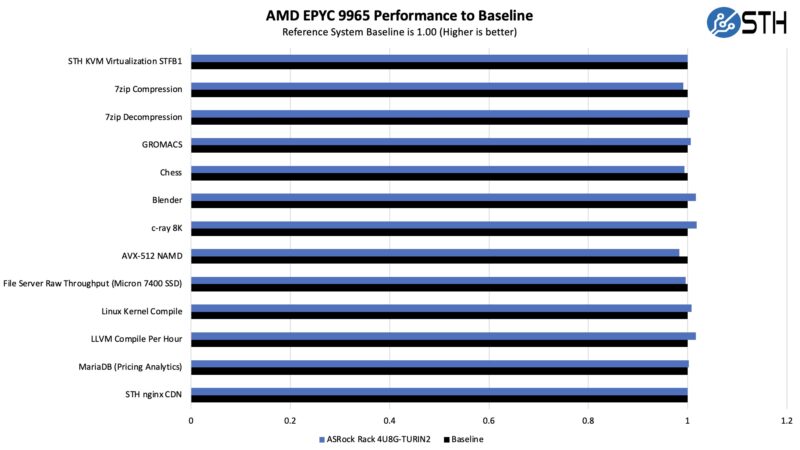

For folks that just want cores, we also tried the AMD EPYC 9965.

Overall, the CPUs performed as they would in a high-end 2U server. That is what we would want to see.

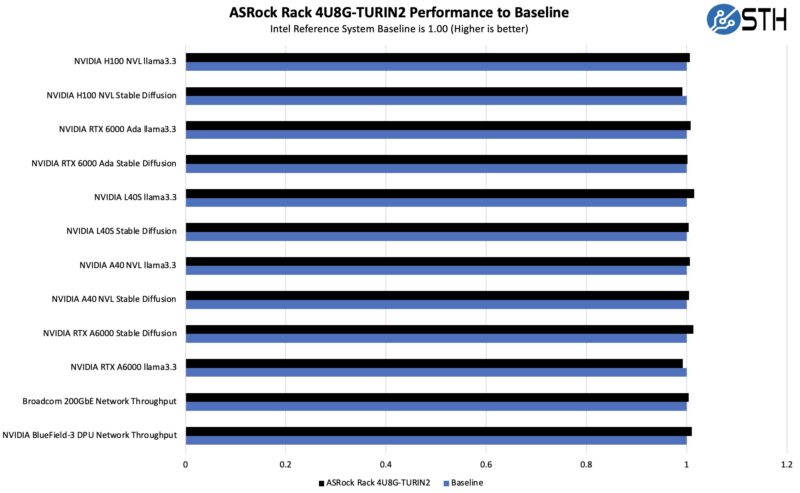

On the other hand, the perhaps more impactful version of this is what happens to AI accelerators. Unfortunately, we do not have enormous lab budgets that would allow us to fill the systems with eight of the same cards. What we did instead was mix and match cards to see the performance, both on the GPU and the networking side.

Again, we saw results within what we would consider a margin of error. The one item we should note is that if you front mount the NICs, they get a ton of airflow. On the other hand, if you rear mount them into the GPU slots, then you will need to blank out slots to ensure you are getting proper airflow over hot NICs like the NVIDIA BlueField-3 DPU’s single-slot heatsinks.

Maybe this also shows the big advantage of this design. You can mix and match parts and use the NVLink bridge parts.

Next, let us get to the power consumption.

> two M.2 slots under the 2.5″ storage and PCIe expansion slots on the motherboard. These are lower speed PCIe Gen3 x2 slots

According to the block diagram, these are Gen3 x4 slots, although the first one has a rather interesting lane arrangement where one lane is reused for a SATA controller if SATA M.2 SSDs are used.