ASRock Rack 4U8G-TURIN2 Internal Hardware Overview

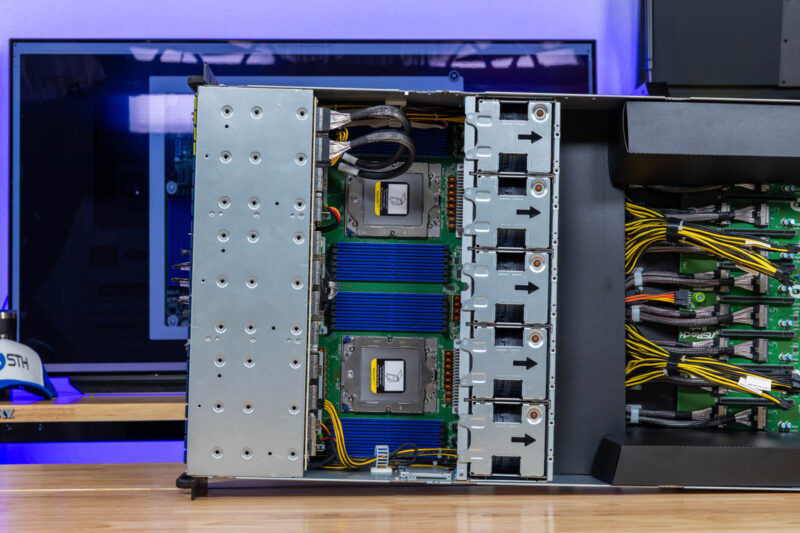

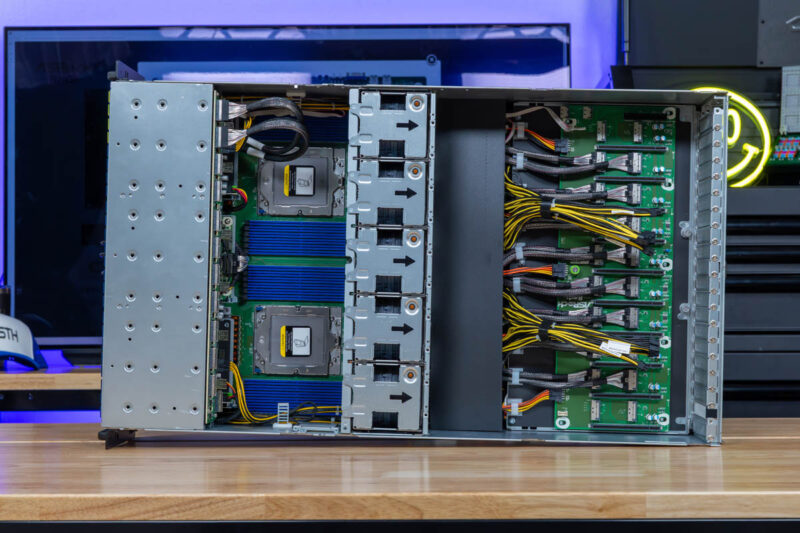

Inside the system we have a classic 8-GPU server layout.

In the front, we have our storage and front I/O along with the dual AMD EPYC 9004/9005 CPU sockets.

Here we can get another look at the backplane, which has only two MCIO cables, for a total of 16 lanes serving the four NVMe SSD bays.

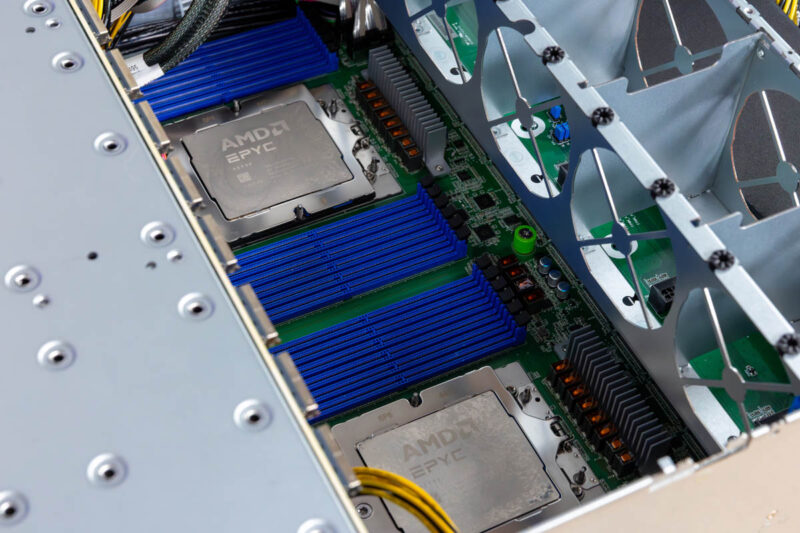

The processors are dual AMD EPYC 9004 or 9005 series.

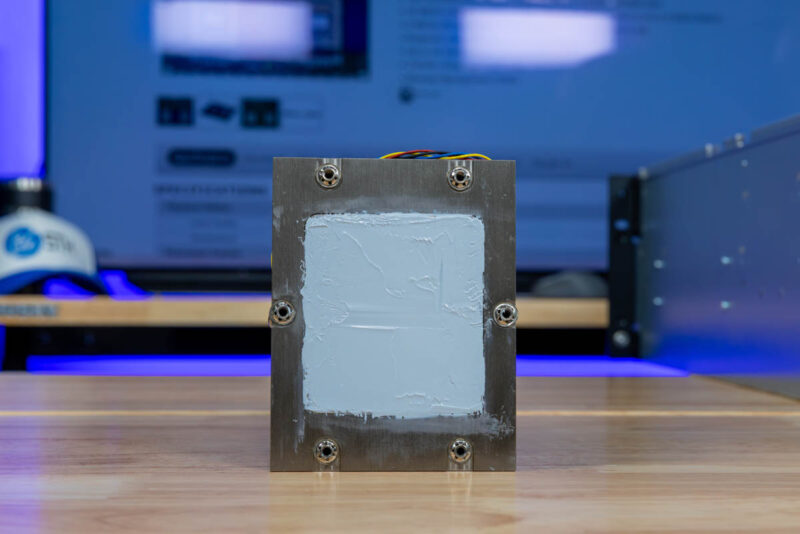

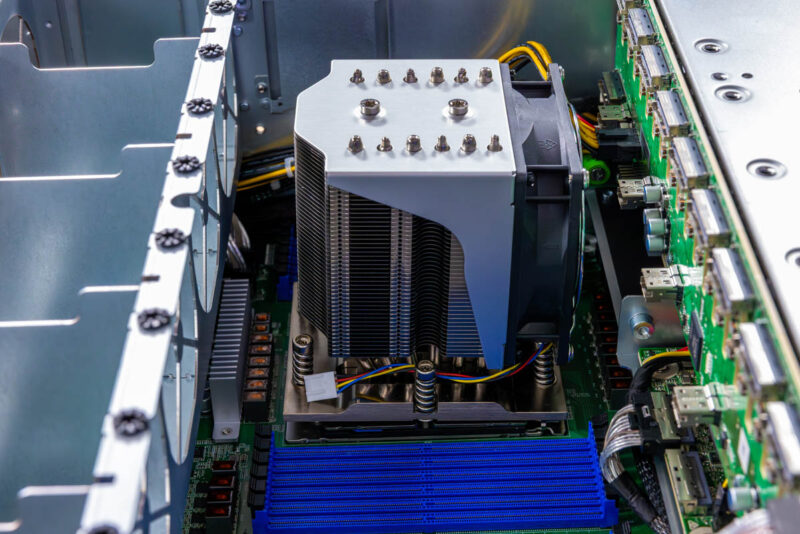

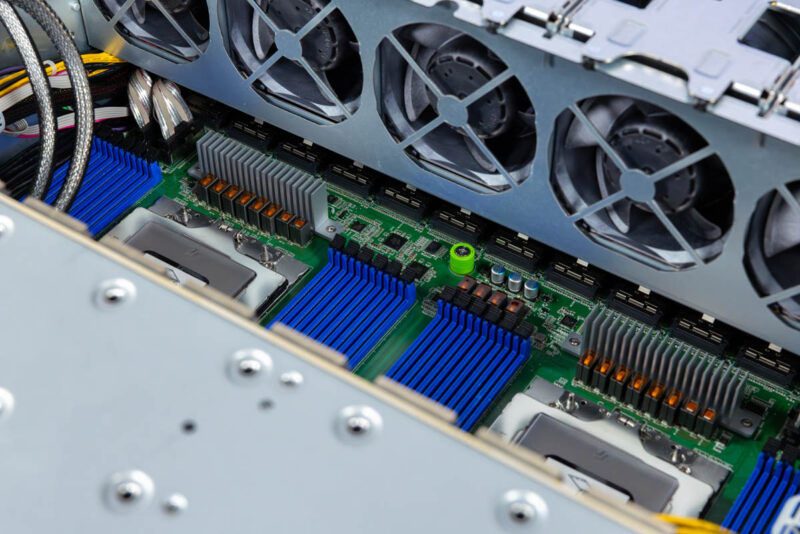

Something common in these systems over the past decade, but different from other classes of servers, is that these heatsinks also have fans.

Again, this is an early production system so this particular unit had been through a few re-pastings.

Still, there is plenty of room to work on these in the system.

Each CPU socket gets 12x DDR5 RDIMM slots. Having a 1DPC solution keeps the chassis length relatively compact. It also ensures that the memory runs at its top speed, since you can lose memory speed on AMD EPYC 9004/9005 servers that have 24 DDR5 DIMM slots per socket, even if only twelve are filled.
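To put the 1DPC layout in perspective, here is a minimal sketch of the theoretical peak memory bandwidth. It assumes one 64-bit (8-byte) DDR5 channel per DIMM slot and uses DDR5-4800 and DDR5-6000 as example data rates. Those speeds are assumptions for illustration, not figures measured on this system, and the function name is hypothetical.

```python
def peak_memory_bandwidth_gbs(channels: int, mt_per_s: int, bus_bytes: int = 8) -> float:
    """Theoretical peak in GB/s: channels * transfers per second * bytes per transfer."""
    return channels * mt_per_s * 1e6 * bus_bytes / 1e9


# From the article: 12 DIMM slots per socket at 1DPC, so 12 channels per socket.
CHANNELS_PER_SOCKET = 12

# Assumed example data rates (MT/s); the real speed depends on the CPU and DIMMs installed.
for speed in (4800, 6000):
    per_socket = peak_memory_bandwidth_gbs(CHANNELS_PER_SOCKET, speed)
    print(f"DDR5-{speed}: {per_socket:.1f} GB/s per socket, "
          f"{2 * per_socket:.1f} GB/s across both sockets")
```

These are ceiling numbers only. Real-world throughput will be lower, but the sketch shows why a drop in memory speed on a 2DPC board costs bandwidth on every channel at once.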

You can look up the ASRock Rack TURIN2D24G-2L+/500W motherboard if you want, but there are actually two M.2 slots under the 2.5″ storage and PCIe expansion slots on the motherboard. These are lower-speed PCIe Gen3 slots, so not fast, but they can be used for boot. It is a good thing that SSDs are so reliable, and that there is a pair here, as they are not easy to get to.

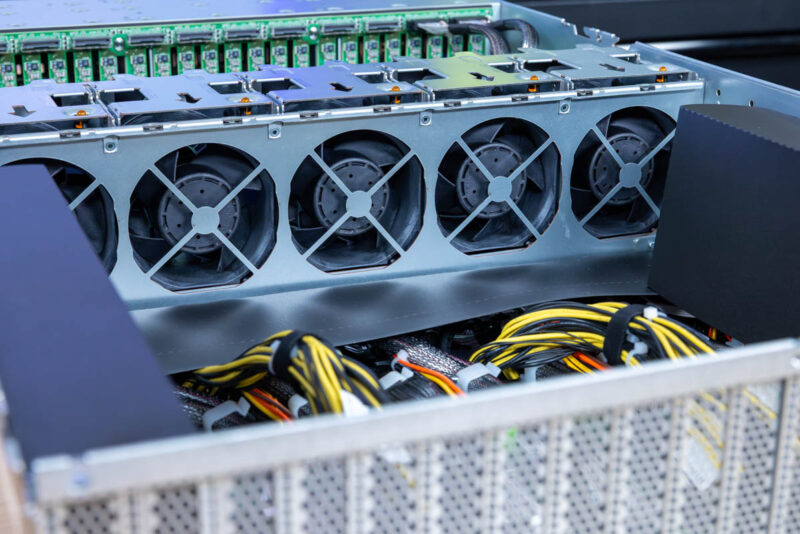

Behind the CPU sockets are two big features. First, we have a big fan array. Second, we have a huge number of MCIO connectors. These MCIO x8 connectors each carry eight lanes of PCIe Gen5.

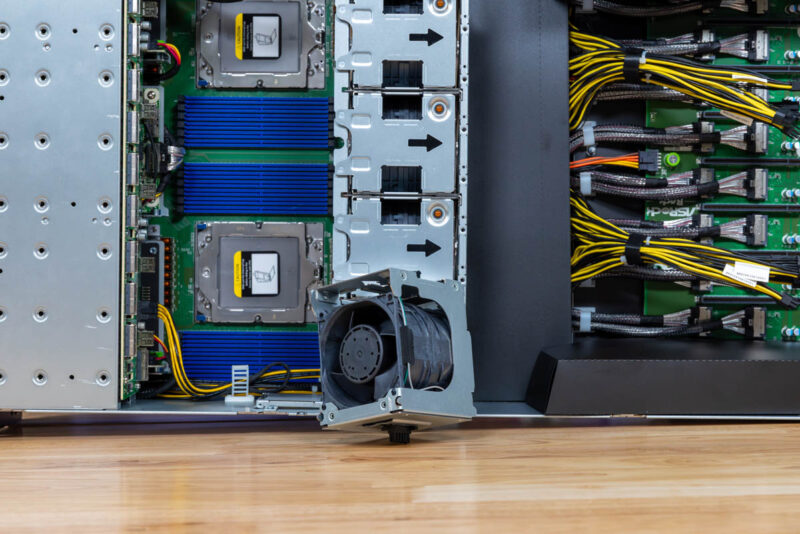

The fans are on hot-swappable modules with LED status indicators and perhaps the easiest-to-see direction indicators. The arrows are cut out of the sheet metal.

Here is one of the fan modules out and on the bench.

The fan modules exhaust to the PCIe expansion area.

The PCIe expansion area houses eight PCIe Gen5 x16 double-width slots and also airflow guides to ensure that airflow continues through the cards, and not around to the sides.

That is the reason we have so many expansion slots at the rear of the chassis.

You might notice a nest of cables. There are two primary cable types. First are the MCIO x8 cables that bring PCIe data connectivity. The others are power cables.

Each slot gets up to two MCIO x8 cables for PCIe Gen5 x16 connectivity. Of course, if you only needed eight lanes, then you could just re-home the second cable elsewhere in the system.
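As a rough illustration of what one versus two MCIO x8 cables means for a slot, this sketch computes the theoretical PCIe Gen5 bandwidth per direction from the 32 GT/s per-lane signaling rate and 128b/130b encoding. It ignores protocol overhead, and the function and constant names are hypothetical.

```python
GEN5_GT_PER_S = 32.0         # PCIe Gen5 raw signaling rate per lane
ENCODING = 128 / 130         # 128b/130b line encoding efficiency
LANES_PER_MCIO_CABLE = 8     # from the article: each MCIO x8 cable carries eight lanes


def pcie_gen5_gbs(lanes: int) -> float:
    """Theoretical one-direction bandwidth in GB/s, before protocol overhead."""
    return lanes * GEN5_GT_PER_S * ENCODING / 8  # divide by 8 to convert bits to bytes


for cables in (1, 2):
    lanes = cables * LANES_PER_MCIO_CABLE
    print(f"{cables} cable(s) -> x{lanes}: {pcie_gen5_gbs(lanes):.1f} GB/s per direction")

# The eight x16 slots together use 8 * 2 = 16 cables and 128 lanes.
total_lanes = 8 * 2 * LANES_PER_MCIO_CABLE
print(f"Eight x16 slots: {total_lanes} lanes")
```

A fully cabled x16 slot works out to roughly 63 GB/s in each direction, and dropping to a single cable halves that.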

Of course, we wondered what would happen if we removed the airflow guides on either side.

As you can see, we have two more unconnected slots on the top, although one is only a single-width slot.

There was another double-width slot on the bottom.

If you were mixing cards, or using PCIe Gen5 x8 double-width cards, then you can get 10 cards in here. Something that you will quickly notice is that the PCIe board is simple and small. There are no large PCIe switches here, only the slots. This is a direct slot to AMD EPYC CPU design without PCIe switches.
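Since every slot is wired straight to the CPUs with MCIO x8 cables, planning a mixed card configuration is mostly a matter of counting cables. Here is a minimal sketch, assuming each card needs one cable per eight lanes (rounded up). The helper name is hypothetical, and it does not check how many MCIO connectors the motherboard actually provides.

```python
import math

LANES_PER_MCIO_CABLE = 8  # from the article: MCIO x8 connectors carry eight lanes each


def cables_needed(card_lane_widths: list[int]) -> int:
    """Count the MCIO x8 cables required to feed cards with the given link widths."""
    return sum(math.ceil(width / LANES_PER_MCIO_CABLE) for width in card_lane_widths)


# Eight x16 accelerators, as in the standard 8-GPU layout.
print("8 x16 cards:", cables_needed([16] * 8), "cables")

# Ten x8 cards, the maximum card count mentioned above.
print("10 x8 cards:", cables_needed([8] * 10), "cables")

# A hypothetical mix: six x16 accelerators plus four x8 cards.
print("6 x16 + 4 x8:", cables_needed([16] * 6 + [8] * 4), "cables")
```

The ten-card x8 layout needs fewer cables than the eight-card x16 layout, which is why re-homing the second cable from a slot makes the extra slots usable.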

ASRock Rack also has a number of different power connectors. GPUs and accelerators can use different types of power connectors. The additional power cables meant we could use different types of accelerators in here.

Next, let us get to the block diagram.

> two M.2 slots under the 2.5″ storage and PCIe expansion slots on the motherboard. These are lower speed PCIe Gen3 x2 slots

According to the block diagram, these are Gen3 x4 slots, although the first one has a rather interesting lane arrangement where one lane is reused for a SATA controller if SATA M.2 SSDs are used.