As we discuss the server industry with different organizations, one clear trend has been a migration toward more accelerated computing servers. Sometimes these are standard rack servers with a GPU like an NVIDIA T4 for inference. Other times, these are large acceleration and training-focused servers. Leaning into this trend, we have the ASRock Rack 2U4G-ROME/2T server that utilizes a single AMD EPYC CPU and can handle up to four double-width GPUs in 2U. In our review, we are going to see what this new server offers.

ASRock Rack 2U4G-ROME/2T Hardware Overview

Given the complexity of modern servers, we have changed to a format where we are splitting our coverage into internal and external views of the server. We start with the external look and then work our way inside.

ASRock Rack 2U4G-ROME/2T External Hardware Overview

The 2U4G-ROME/2T is a 2U server, but immediately from the front one can see that something is different. While most 2U 2.5″ storage servers have 24x 2.5″ drive bays on the front, here we get five.

We have a very interesting configuration here. There are three SATA bays and one NVMe-only bay. Since there are only five bays, the remainder of the front of the chassis is focused on providing airflow.

As one can see, one of the bays can be SATA or NVMe, which is enabled by running both PCIe and SATA connections to that drive slot. If you did not read the specs or this review and just saw the server in the data center, it is unlikely you would know this slot had dual capability.

We also wanted to quickly note that the drive trays are tool-less in this chassis. That is a major design trend we are seeing in this generation of servers.

Moving to the rear of the system, we see a somewhat standard layout with PSUs on one side, IO in the middle, then expansion on the other side.

This system has redundant 80Plus Platinum PSUs. Each is capable of delivering 2kW of power, which is enough when using PCIe GPUs and a single CPU. Something we will note here is that these are rated for 200-240V input and there is no lower 110-120V option. For most data centers running GPUs, this will be fine. For some lower-end data centers on lower voltage, we just wanted to point this out.
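As a rough sanity check on the 2kW figure, here is a minimal sketch of the power budget arithmetic. Only the PSU rating and the GPU count come from this review. The per-GPU, CPU, and "rest of system" wattages are illustrative assumptions, not measurements or vendor specifications, so swap in the numbers for your own configuration.

```python
# Rough power budget sketch for a single-CPU, four-GPU server.
# Only PSU_RATED_W and GPU_COUNT come from the review text.
# Every ASSUMED_* value is a placeholder assumption, not a measured figure.

PSU_RATED_W = 2000      # from the review: each PSU can deliver 2kW
GPU_COUNT = 4           # from the review: up to four double-width GPUs

ASSUMED_GPU_W = 300     # assumption: board power per double-width PCIe GPU
ASSUMED_CPU_W = 280     # assumption: CPU power for the single socket
ASSUMED_REST_W = 200    # assumption: fans, drives, NICs, memory, BMC


def estimated_load_w(gpu_count=GPU_COUNT, gpu_w=ASSUMED_GPU_W,
                     cpu_w=ASSUMED_CPU_W, rest_w=ASSUMED_REST_W):
    """Sum the assumed component power draws in watts."""
    return gpu_count * gpu_w + cpu_w + rest_w


def headroom_w(psu_w=PSU_RATED_W, load_w=None):
    """Watts left on one PSU after the estimated load (negative means over budget)."""
    if load_w is None:
        load_w = estimated_load_w()
    return psu_w - load_w


if __name__ == "__main__":
    load = estimated_load_w()
    print(f"Estimated load: {load} W")
    print(f"Headroom on one {PSU_RATED_W} W PSU: {headroom_w(load_w=load)} W")
```

With these assumed values, the estimated load stays under a single PSU's rating. Higher-power GPUs or a heavier storage and networking configuration would shrink that margin, so the comparison is worth rerunning per build.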

In the middle of the chassis we have the standard I/O. Here we have a serial port and a VGA port along with two USB 3.0 ports. Networking is dual 10GBase-T provided by the onboard Intel X550 NIC. We also get an out-of-band management port.

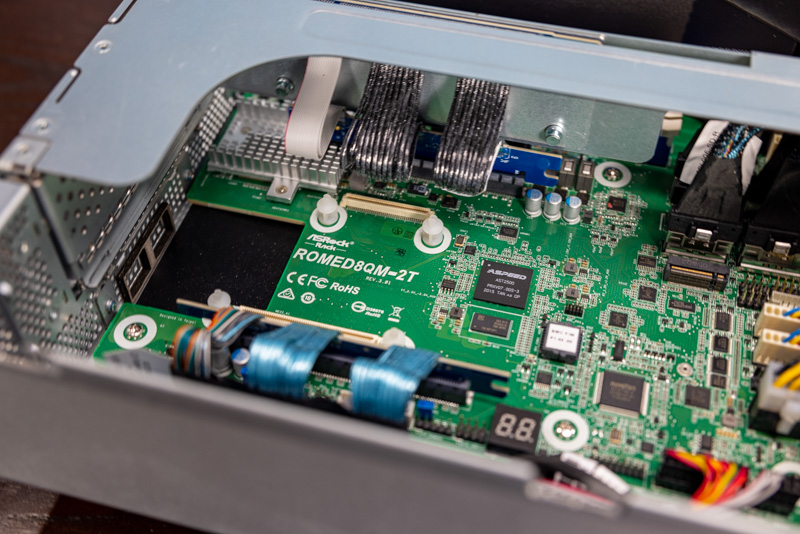

The out-of-band management port is powered by the ASPEED AST2500 BMC.

The expansion slot portion will look a bit strange to some. First, one can see the OCP NIC 2.0 slot on the bottom of the system. Most of the industry is moving to OCP NIC 3.0 form factors. Still, this is a low-cost way to add 10GbE/25GbE networking to a server as OCP NIC 2.0 options are plentiful and in higher-volume production.

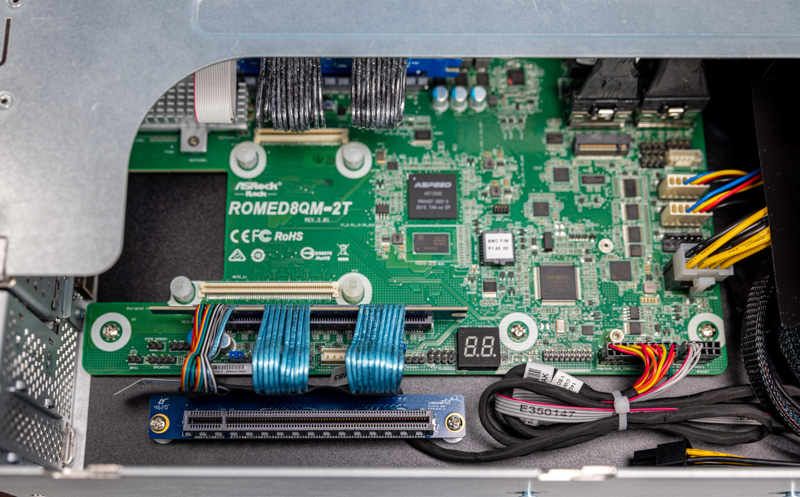

The large removable section is for an accelerator, which we will get to in our internal overview. For now, we are going to look at the far right, which is a low-profile PCIe Gen4 x16 slot. What is interesting here is that this slot does not plug directly into the motherboard. Instead, ASRock Rack has power and PCIe cables that connect to a riser that is mounted to the chassis for this expansion slot.

Since we showed a few internal shots to explain the external features, one can tell there are a lot of cables. We are going to get to our internal hardware overview next so one can see what those are for.

We plan to use this chassis for our new AI stations.

Would the GPU front risers provide enough space to also fit a 2.5-slot-wide GPU card with the fans on top, like an RTX 3090?

I just like the fact STH has honest feedback in its reviews.

Thanks, Patrick!

Markus,

I was wondering the same thing as you!

The HH riser rear cutout also looks like it could take two single-width cards.

In fact, I will be surprised if this chassis doesn’t attract a lot of attention from intrepid modders changing the riser slots for interesting configurations.

jetlagged,

I think I have to order one and test it myself.

At the moment we are designing the servers for our new AI GPU cluster system. Since it looks like NVIDIA has successfully removed all the 2-slot-wide turbo versions of the RTX 3090 with the radial blower design from the supply chains, it has become very difficult to build affordable, space-saving servers with lots of “inexpensive” consumer GPU cards.

Neither the ASRock website nor this review specifies the type of NVMe front drive bays supplied or the PCIe generation supported. This is important information to know in order to make a sourcing decision. AFAIK, there are only M.2, M.3, U.2, and U.3 (backwards compatible with U.2). Most other manufacturers colour code their NVMe bays so that you can quickly identify what generation and interface are supported. What does ASRock support with their green NVMe bays?