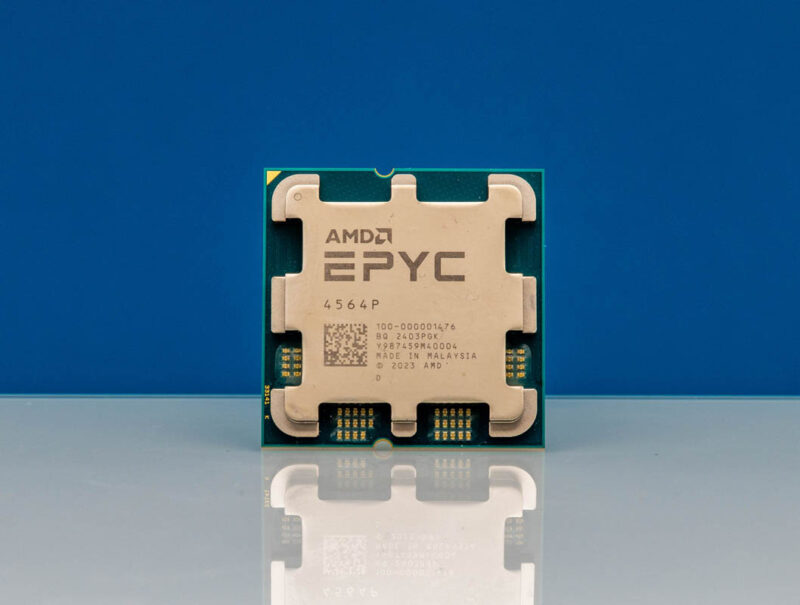

We published our ASRock Rack 2U1G-B650 2U AMD Ryzen GPU Server Review a few months ago. At that point, we knew the AMD EPYC 4004 series was coming, and one of its big features was the higher-end 16-core, 170W TDP CPUs. ASRock Rack sent us the cooler and airflow guide to convert our system to the ASRock Rack 2U1G-B650/EVAC model, and we used that configuration as part of our EPYC 4004 piece. We thought we would show off the huge cooler.

ASRock Rack 2U1G-B650/EVAC Cooler for Higher-End CPUs

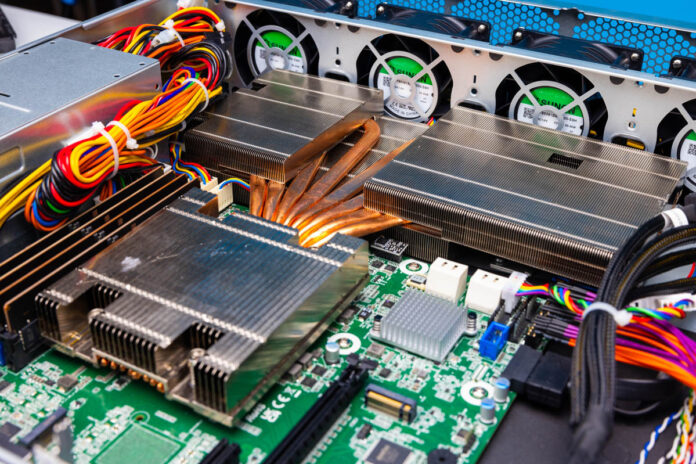

EVAC stands for “Enhanced Volume Air Cool” and is essentially a much larger cooler than the stock one. It is so large that the chassis itself has to change to accommodate it.

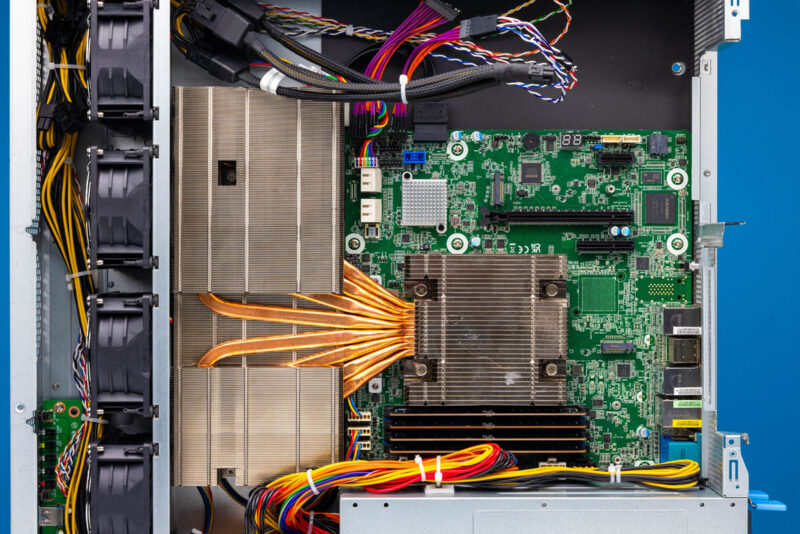

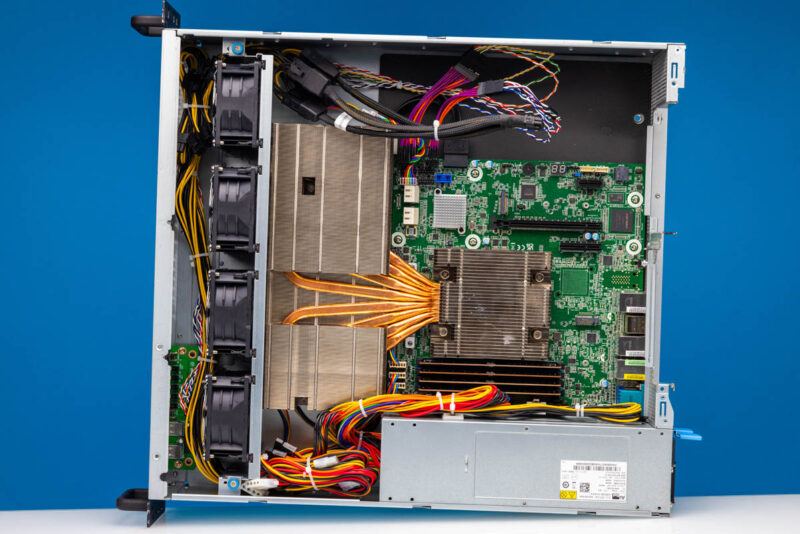

For context, here is the original cooler we had in the chassis. In the background, you can see extra mounting points in the chassis; those are used to secure the larger cooler.

Here is a similar view of the larger cooler, with its eight copper heatpipes leading to the larger front wings.

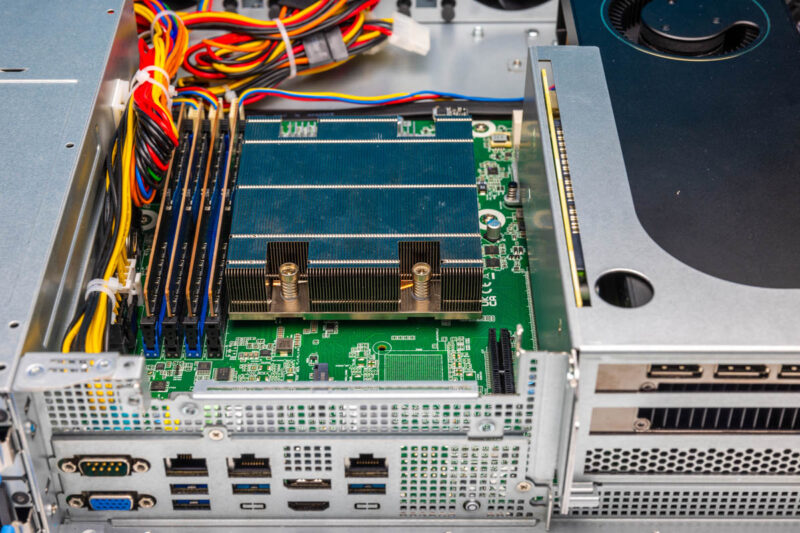

One of the wings goes under the GPU riser level and has cutouts for the SATA cables.

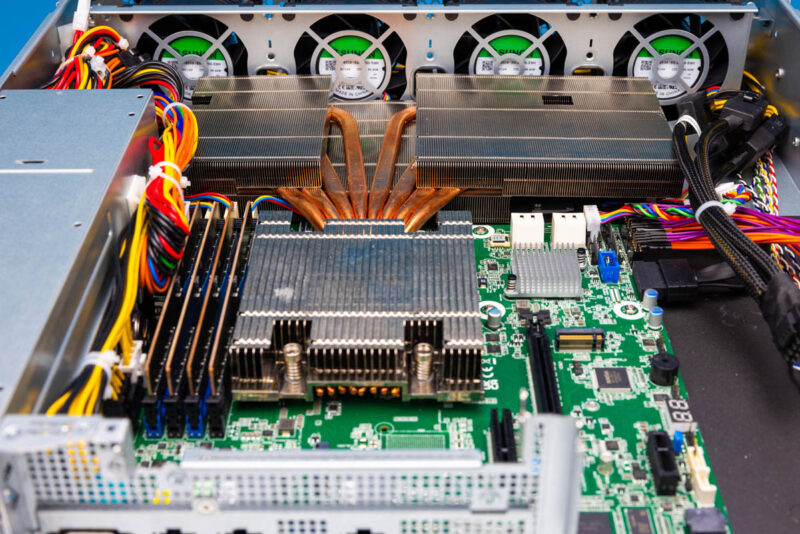

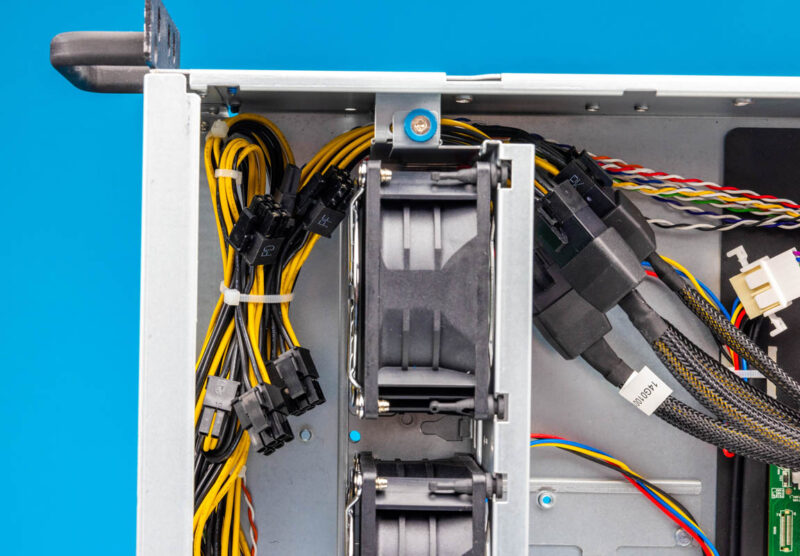

There is another change in the server. While it might look like only the heatsink got bigger, the entire fan partition actually had to move forward to make room for the huge heatsink.

For a good visualization, check out where the fan partition is located on the standard server.

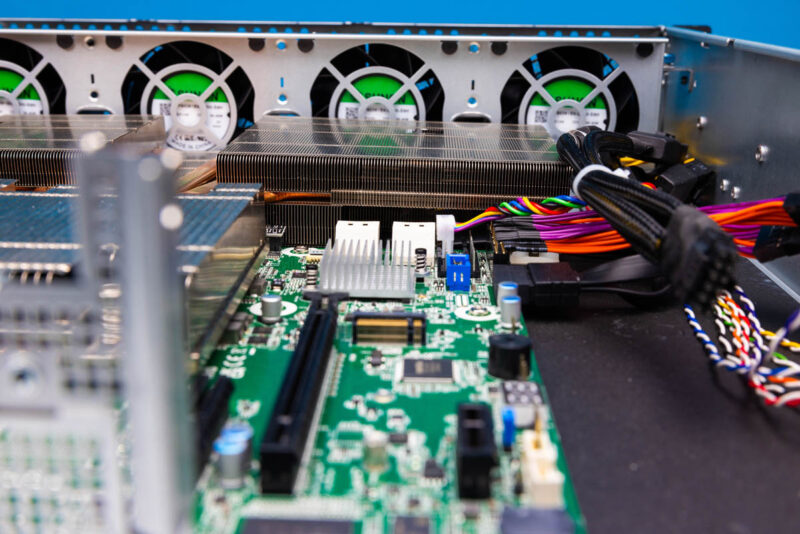

Here is the EVAC version:

In case you were wondering, we did not realize at first that the partition needed to move, but it was the only way to fit the heatsink.

Final Words

The net impact is substantial. In our hotter configurations, this change lowered server temperatures by about 9-13°C, which can directly help keep turbo clocks higher on 170W chips like the EPYC 4564P.
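To put the 9-13°C figure in perspective, one rough way to read it is through a lumped thermal-resistance model, R = ΔT / P, measured in °C per watt. The sketch below assumes, purely for illustration, that the full 170W TDP flows through the heatsink and that the reported drop applies at the CPU; neither assumption is confirmed in our testing, and real package power under turbo can differ from TDP. The function name `thermal_resistance_delta` is hypothetical.

```python
# Hypothetical sketch: lumped thermal-resistance model, R = delta_T / P (degC per W).
# Assumption (not from the review): all CPU power (its 170 W TDP) passes through
# the heatsink, and the measured temperature drop is at the CPU.

def thermal_resistance_delta(temp_drop_c: float, power_w: float) -> float:
    """Reduction in thermal resistance (degC/W) implied by a temperature drop at fixed power."""
    if power_w <= 0:
        raise ValueError("power_w must be positive")
    return temp_drop_c / power_w


if __name__ == "__main__":
    TDP_W = 170.0  # EPYC 4564P TDP
    for drop_c in (9.0, 13.0):  # reported range of temperature reduction
        delta = thermal_resistance_delta(drop_c, TDP_W)
        print(f"{drop_c:.0f} C drop -> about {delta:.3f} C/W lower thermal resistance")
```

Under those assumptions, the larger cooler would be roughly 0.05-0.08 °C/W better than the stock one, which is a meaningful margin on a 170W part.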

Overall, this is something we wish more server vendors would offer. These bigger heatsinks allow for using hotter-running parts in a given environment, or for operating at higher ambient temperatures.

If you want to check out our AMD EPYC 4004 launch video that used this ASRock Rack EVAC server, here is the link:

The fins on the heatsink wings look very densely packed. That could give them high airflow resistance, so most of the air might flow around them rather than through them. Does the server come with ducting that was not shown in the pictures?

Also, with a little dust this cooler will be blocked, and overheating is inevitable.

@Tim, the ducting is shown at ~950 in the yt video. @Konstantin, it’s a pretty standard server heatsink. You should probably clean it if you are worried… this one runs 9-13C cooler than the og.

@Brian, thanks for pointing that out! The ducting does not fill me with confidence lol, but the 9-13 degC speak for themselves.

With how much heat all these new CPUs put out, liquid cooling will become more viable unless these units live in a decent data center. I would probably live with a Dynatron solution for this AM5 platform. Been enjoying my L35 a lot.

A more general question, inspired by the report on this impressive heatsink: approximately how much power does the cooling of one of these server drawers consume? I get that it’ll vary, but I am looking for a ballpark range, especially for this (entry level) class of server. With blade server setups, it could (can?) easily be > 80 W per blade, but that was years ago.

Figure 10-20% of system power for cooling.

Is there any way to buy these heatsinks separately?