ASRock Rack 2U1G-B650 Internal Hardware Overview

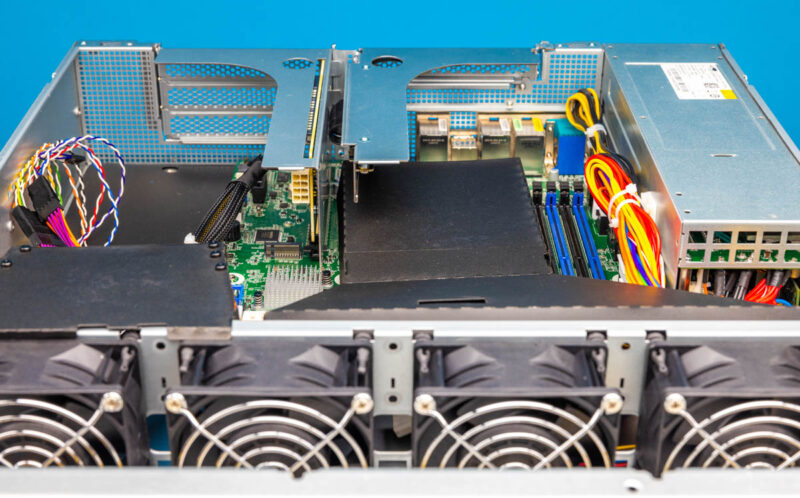

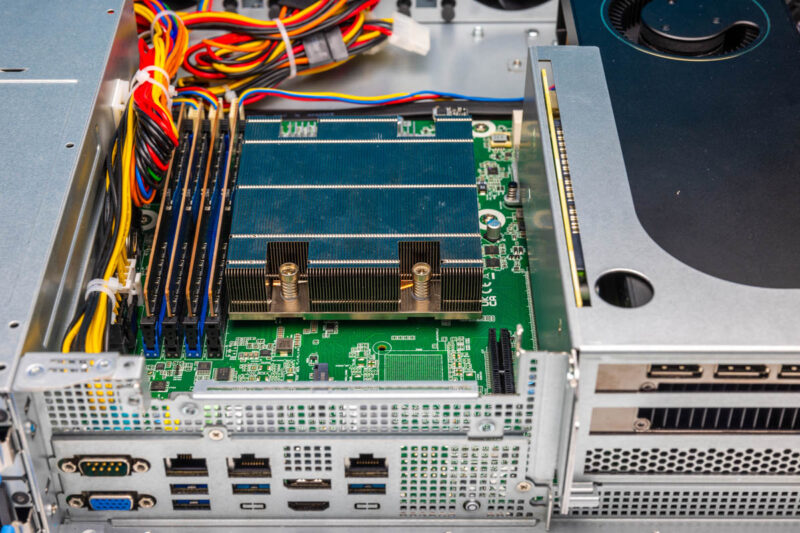

Inside the server, we have flexible air baffles. Generally, we prefer hard plastic models since they are (usually) a lot easier to fit. It took some finesse to get this one into the correct position. There are other versions of this server: the ASRock Rack 2U1G-B650/AQUA uses a liquid cooler, and the ASRock Rack 2U1G-B650/EVAC uses an enormous heatsink. This chassis was designed to handle all three cooling methods. We also have the EVAC version and will cover it in a follow-up.

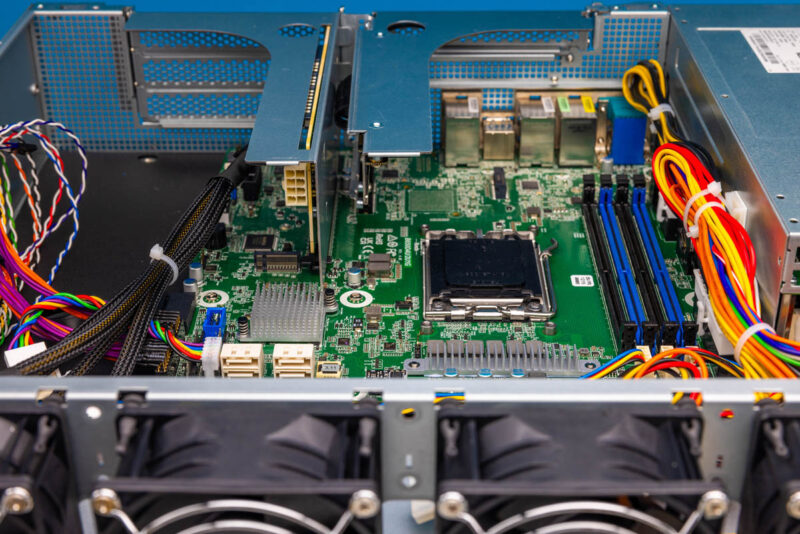

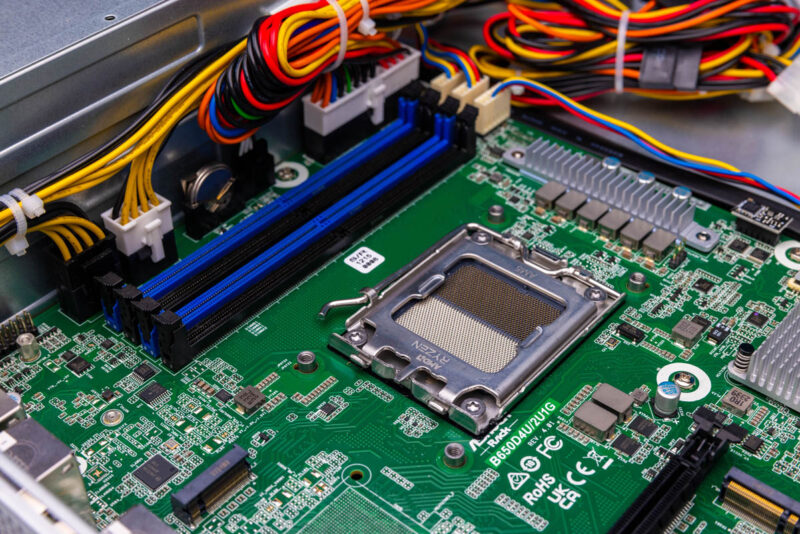

With the baffles removed, we can see the AMD Ryzen AM5 motherboard with DDR5 memory slots aligned for front-to-back airflow.

Here is the internal overview. It is a short-depth system, so it is relatively easy to navigate.

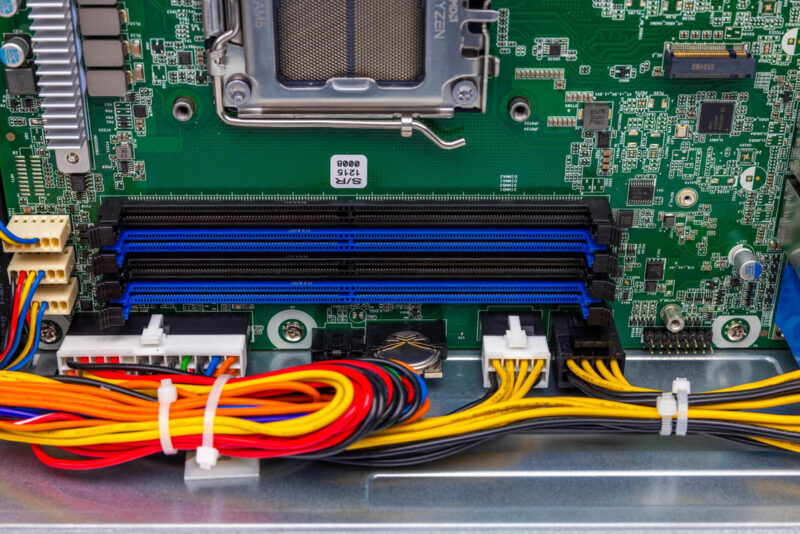

With the risers removed, we can see the layout, including a PCIe x1 slot that effectively goes unused in this system.

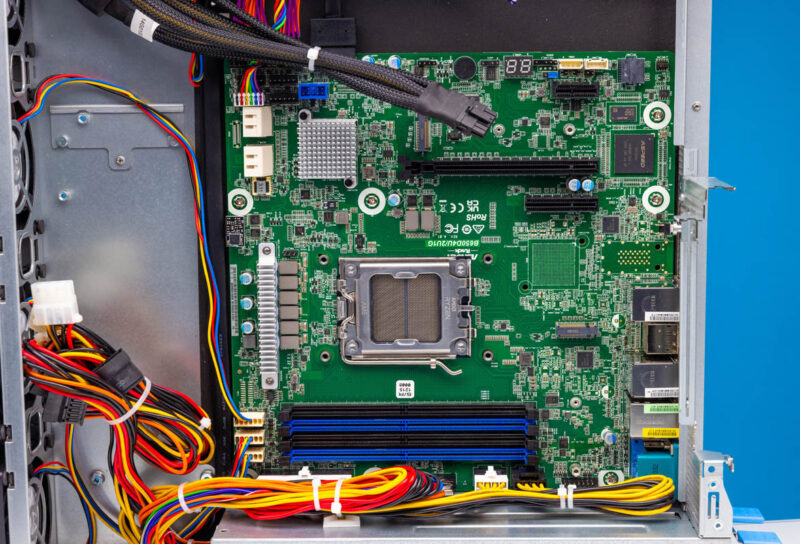

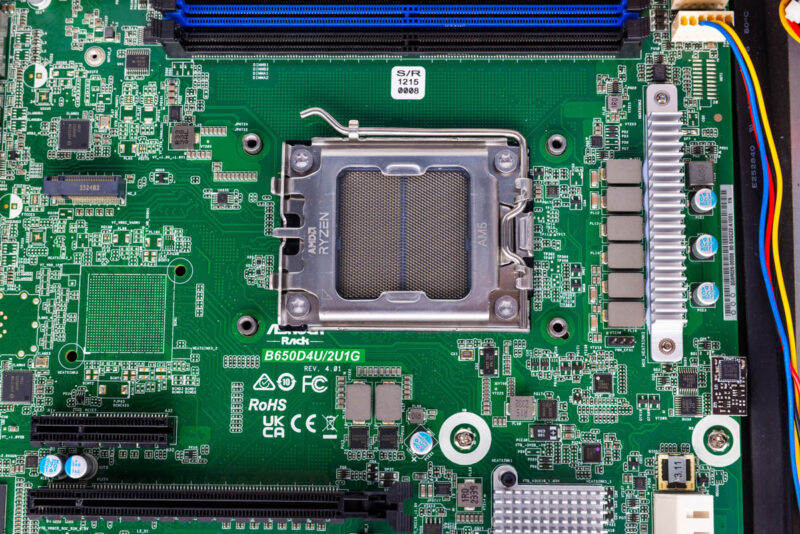

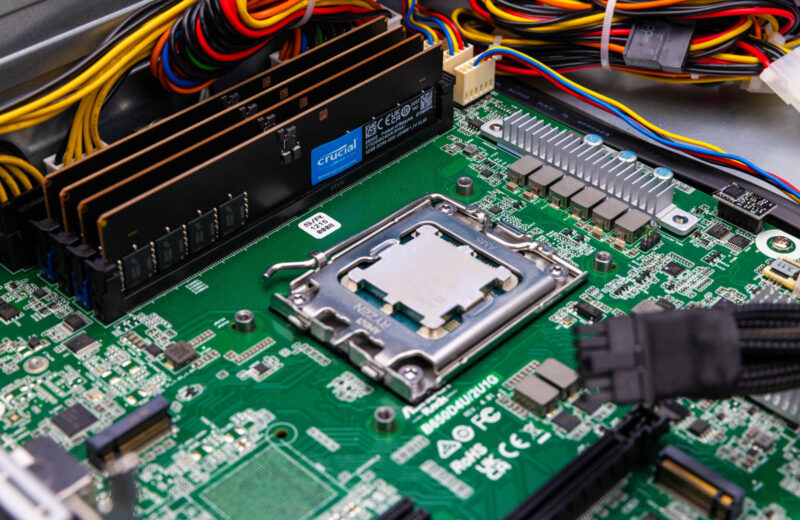

Socket AM5 is getting interesting. Not only can one use high-power parts like the AMD Ryzen 9 7950X, but there are also X3D high-cache parts like the AMD Ryzen 7 7800X3D, low-power parts like the AMD Ryzen 9 7900, and even newer AMD Ryzen 7 8700G parts with an NPU and a bigger integrated GPU.

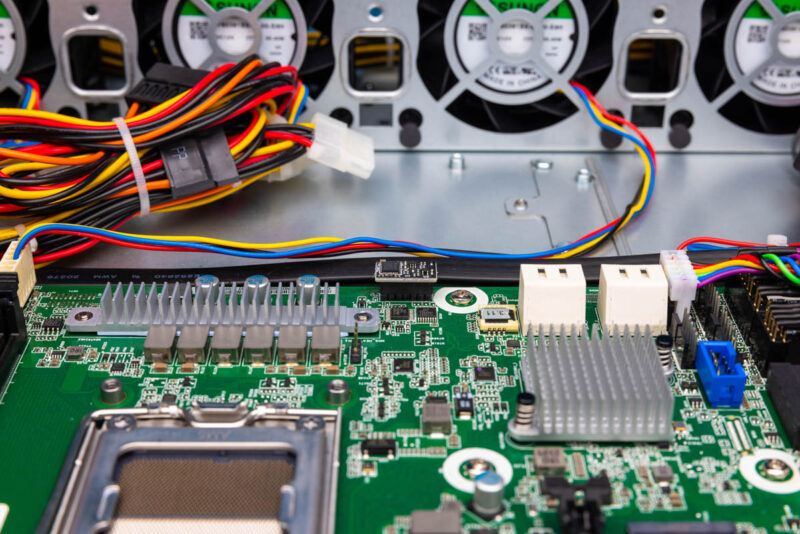

The socket itself is flanked by four DDR5 ECC or non-ECC Unbuffered DIMM slots. Note that DDR5 RDIMMs do not work on these platforms.

We tried up to 4x 32GB of ECC and non-ECC memory in the board, along with a variety of CPU options. The AMD Ryzen 9 7900 is still one of our favorite Ryzen server CPUs since it has 12 cores and a low 65W TDP.

Here is the configuration with the standard cooler, 128GB of memory, and a 48GB GPU installed. The EVAC cooler is much larger.

Supported DDR5 memory speed is largely dictated by the CPU used. ASRock Rack even has 48GB DDR5 ECC modules from SMART on its memory QVL.

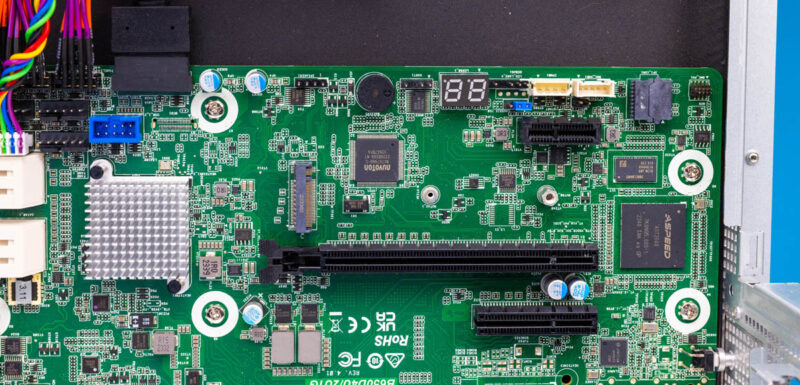

Looking at what would be the bottom of the motherboard, we see a POST code display, the PCIe slots, and perhaps most importantly, one of the two M.2 slots. If you want storage in this chassis, you will likely use these M.2 slots and perhaps a PCIe add-in card.

On the front edge of the motherboard, we have the SATA ports, which go unused in this chassis. This is also where the AMD B650 chipset sits.

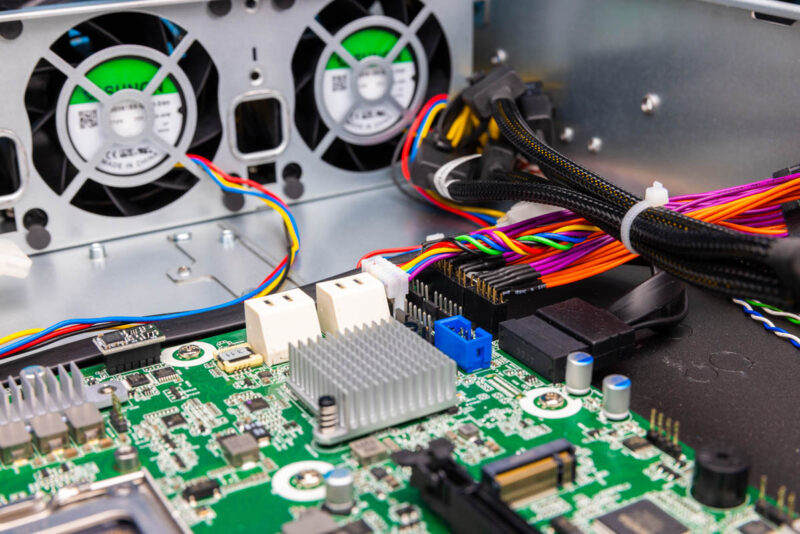

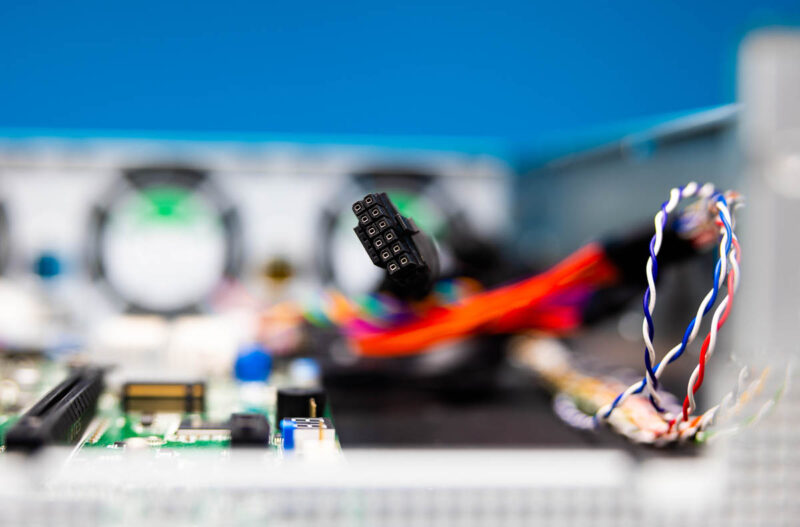

One fun part of this motherboard is the front I/O situation. ASRock Rack uses a wiring harness and 90-degree connectors along with its front USB header. It was fun to see, but it is also practical: this allows the cables to be routed without interfering with GPU cooling.

On the power side, ASRock Rack uses 1.2kW power supplies, which are plenty to power a GPU as well. Given the riser location, there is also enough room in this chassis for taller-than-standard GPUs. As a result, the GPU can be taller and can have its power input on the top side while still fitting in this chassis.
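To put the 1.2kW figure in context, here is a rough power-budget sketch. It is not based on our measurements. The CPU values are AMD's package power (PPT) limits for the parts mentioned above, while the 300W GPU and the 100W platform allowance for memory, M.2 drives, fans, NIC, and BMC are hypothetical placeholders. It also assumes the whole load must fit on a single 1.2kW supply.

```python
"""Rough power-budget sketch for a single-GPU 2U1G-B650 build.

All component figures are assumptions for illustration, not measurements:
CPU values are AMD PPT limits (about 1.35x rated TDP), GPU_WATTS is a
hypothetical 48GB workstation card, and PLATFORM_WATTS is a guessed allowance.
"""

PSU_WATTS = 1200  # one 1.2kW power supply

# AMD package power tracking (PPT) limits for the CPUs discussed in the article.
CPU_PPT_WATTS = {
    "Ryzen 9 7950X": 230,    # 170W TDP part
    "Ryzen 7 7800X3D": 162,  # 120W TDP part
    "Ryzen 9 7900": 88,      # 65W TDP part
    "Ryzen 7 8700G": 88,     # 65W TDP part
}

GPU_WATTS = 300       # hypothetical: board power of an assumed 48GB GPU
PLATFORM_WATTS = 100  # hypothetical: DIMMs, M.2 drives, fans, NIC, and BMC


def headroom(cpu: str) -> int:
    """Return PSU watts left over at the assumed sustained full load."""
    load = CPU_PPT_WATTS[cpu] + GPU_WATTS + PLATFORM_WATTS
    return PSU_WATTS - load


if __name__ == "__main__":
    for cpu in CPU_PPT_WATTS:
        print(f"{cpu}: {headroom(cpu)} W headroom of {PSU_WATTS} W")
```

Even with the 230W Ryzen 9 7950X, this assumed build leaves roughly 570W of headroom, which is why a single GPU should not strain the supply. Short GPU power transients can exceed rated board power, so the margin is worth having.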

Next, let us see how this is connected via the block diagram.

Page 2, “The socket itself is flanked by four DDR5 SODIMM slots.” Those are DIMM slots.

This is for all of the Linus Tech Tips wannabes who want a rack mounted gaming machine in their basement.

Too bad ASRock Rack has terrible software support for those motherboards. Their AM4 boards are stuck on an ancient AGESA because no BIOS newer than 2022 is available, despite several known vulnerabilities. The consumer ASRock boards have almost all been patched. This is unacceptable for “server quality” hardware.

AM5 boards are already two generations of AGESA behind, still vulnerable to LogoFAIL.

Their BIOS updates force a reset of all settings, which is very annoying on the AM4 versions with a dGPU present, since the dGPU becomes the main display by default, which leaves the BMC’s VNC feature non-functional.

This forces the user to be physically at the machine to change this setting back to the BMC VGA. While the BMC can change some BIOS settings via the web interface, it is not able to control the AMD-specific parts. Of course, the main display setting is in the AMD part, so it has to be changed in person.

I hope that AM5 versions have improved on those issues, but I’m skeptical and would do more research before committing to them.

Patrick, in your opinion would this thing work well with fanless GPUs like the L40? It looks to me like the number of holes in the back panel would limit internal static pressure and thus airflow through such a GPU.

This one looks perfect for local development, if only I could buy one.

Can you test the liquid-cooled AQUA version?

I may have missed it, but was any noise level testing done on this? I suspect it would be reasonably quiet when mostly idle?

For a server that is unique in supporting a GPU, there is no testing of how it handles a GPU. Temperatures, noise, power? Anything?

This article seems misnamed, as it is far from being a “GPU server review.”

“Some organizations are finding that using physical servers for remote desktops instead of virtualization can be less costly.”

I’d definitely like more information on this. Is this simply due to licensing costs?

There is nothing interesting about ASRock Rack hanging an M.2 slot off the chipset. Actually, this is one of their less crazy block diagrams. The devices behind the chipset can potentially demand only about 3.5x the bandwidth of the actual chipset connection. What is surprising is that, for once, none of the system networking is directly connected to the BMC as its potential backup and potential vulnerability.

The truly annoying thing is that there is nothing between a Ryzen and Threadripper/Epyc in terms of PCIe lanes.

From everything I have seen, the X470D42U was the dog that caught the car, and neither ASRock nor AMD knew what to do, and they likely still don’t. Maybe questions could be posed, things reported, measurements taken. The reviews have been little more than rewrites of the manual.

Is there any sort of release date for when this will be available to retail buyers?

I just want the server chassis and a Dynatron triple 80mm 2U AIO.

As we can see in the image with the GPU, if we close the server case, the GPU radiator will be covered. Is this correct? There is only about 2 mm of space between the GPU radiator and the server case lid. Strange.