ASRock Rack 1U4LW-ICX/2T Power Consumption

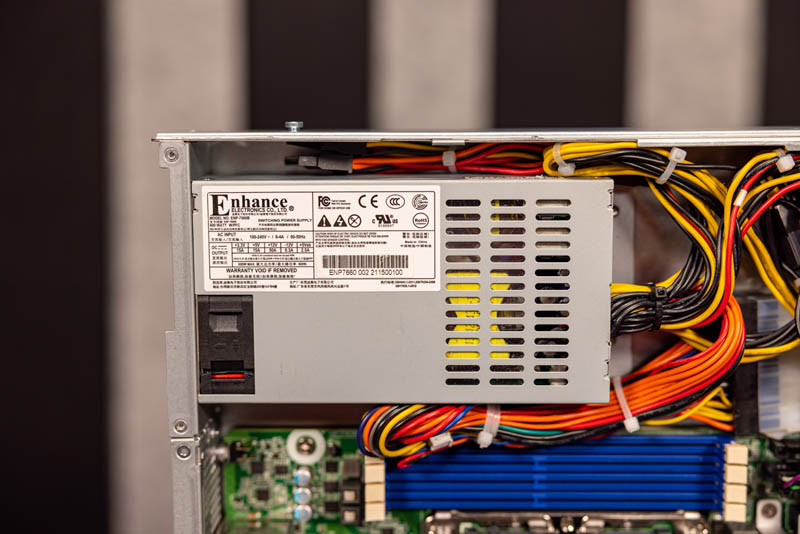

The single power supply in the server is a 600W unit.

Here is what we saw in terms of power consumption:

- Idle: 0.09kW

- 60% Load (non-AVX): 0.28kW

- 100% Load: 0.32kW

- AVX-512 GROMACS Load: 0.36kW

Note that these results were measured using a 208V Schneider Electric / APC PDU at 17.0°C and 67% RH. During the testing window shown here, temperature varied by +/- 0.3°C and humidity by +/- 2% RH.
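As a quick sanity check on how hard those loads push the single 600W power supply, the sketch below divides each measured figure by the PSU rating. It is an illustration under an assumption: the PDU readings are treated as wall (AC input) power, while a PSU's wattage rating is typically its DC output capacity, so these percentages somewhat overstate the real PSU loading. The helper names are hypothetical.

```python
"""Rough PSU loading for the measured power figures (hypothetical helper names)."""

# Measured power draw from the list above, in kW.
MEASUREMENTS_KW = {
    "Idle": 0.09,
    "60% Load (non-AVX)": 0.28,
    "100% Load": 0.32,
    "AVX-512 GROMACS Load": 0.36,
}

PSU_RATING_W = 600  # single 600W power supply


def psu_utilization_pct(power_kw: float, rating_w: float = PSU_RATING_W) -> float:
    """Return measured power as a percentage of the PSU rating.

    Assumes the PDU figure is AC input power; since PSU ratings usually refer
    to DC output, this slightly overstates the true PSU loading.
    """
    return power_kw * 1000.0 / rating_w * 100.0


def headroom_w(power_kw: float, rating_w: float = PSU_RATING_W) -> float:
    """Return the watts remaining between the measured draw and the PSU rating."""
    return rating_w - power_kw * 1000.0


if __name__ == "__main__":
    for label, kw in MEASUREMENTS_KW.items():
        print(f"{label:<22} {kw * 1000:>5.0f} W  "
              f"{psu_utilization_pct(kw):5.1f}% of rating  "
              f"{headroom_w(kw):5.0f} W headroom")
```

At the AVX-512 GROMACS peak, this works out to 60% of the PSU's rating, leaving roughly 240W of headroom even before accounting for conversion losses.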

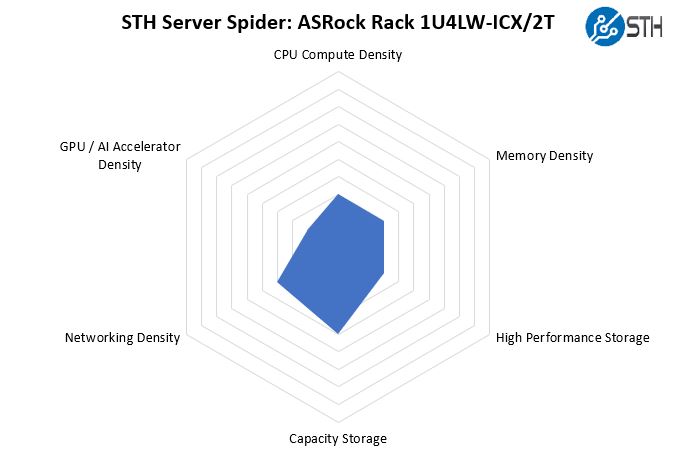

STH Server Spider: ASRock Rack 1U4LW-ICX/2T

In the second half of 2018, we introduced the STH Server Spider as a quick reference to where a server system's strengths lie. Our goal is to give a quick visual depiction of the parameters a server is targeted at.

This is a fairly good all-around server, but it is clearly not designed for density. In an age where we can get 1U servers with 128 cores/256 threads, 6TB+ of memory, and many NVMe SSDs or accelerators, this entry-level server is optimized around cost instead of density. Here we get solid 3.5″ storage with some extra 2.5″ mounting, 10GbE onboard, and the cost benefits of a single-socket CPU.

Final Words

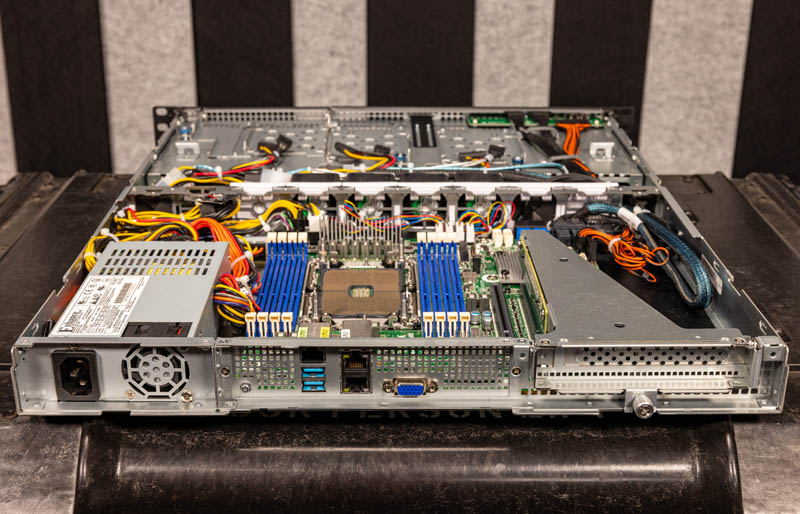

There is so much to like about the 1U4LW-ICX/2T. If you are looking for a high-end dual-socket enterprise server or a GPU compute platform, this is clearly not the server for you. Instead, it is heavily cost-optimized to provide a low-cost way to get a 3rd Generation Intel Xeon Scalable CPU into a hosting cluster.

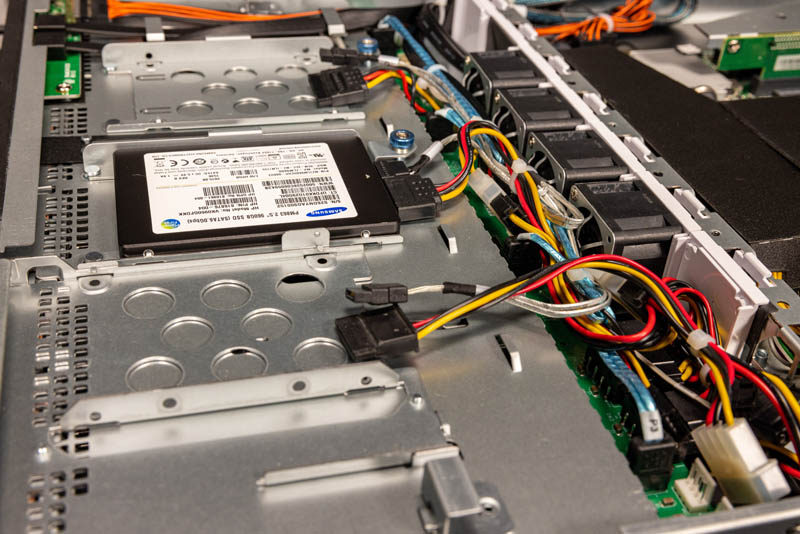

Even with that focus on cost, a few items really stand out. For example, this array of 2.5″ mounting points requires half the screws and uses a thumbscrew design to make the drives easier to service. That is not strictly necessary in a low-cost design, but it is really nice to have.

We do wish that ASRock Rack offered something like a 1U M.2 (or dual M.2) solution for the empty, still-accessible PCIe x16 slot, just to add a bit more versatility. Still, with the new generation of processors, there are a lot of upgrades to the overall platform. When one factors in the pricing of the U-series single-socket Xeons, a system like this consolidating 3-4 Xeon E servers is very possible. In this market, that may let hosting providers offer customers higher-end boxes at a cost-per-core similar to Xeon E servers.

Overall, this was a very fun system to review. Even though this is a lower-cost system, we saw real improvements in quality and serviceability over previous generations. Those physical improvements come on top of the performance benefits of the newer chips and the new features they bring to the table. Ice Lake is a big upgrade for those deploying Xeon servers, and ASRock Rack has done a great job of balancing the 1U4LW-ICX/2T to provide a nice entry-level Ice Lake Xeon server.

With all those bits hanging off the PCH, it'd be great to see some tests of internal bandwidth to identify whether there are bottlenecks.

Say you had an A10 GPU in the slot slurping data from elsewhere via 10Gb Ethernet, some other process beating the heck out of an NVMe SSD in the M.2 slot, and a third process having a strong exchange of views with a collection of SATA SSDs configured as an mdraid RAID 0.

In situations like that, can the CPU-to-PCH link keep up, or is the system actually not capable of heavy multitasking work?

Maybe I need to know more about the tests, eh? Compiling the kernel, that's pretty straightforward.

What is the nature of the MariaDB test? How many queries are in flight, how many spindles, how many rows scanned? How complex are the queries? MariaDB seems like the only one that might be capable of a somewhat whole-system test, though probably not so much for the Ethernet I/O.

C-Ray, SSL, and 7-Zip are almost pure CPU tests. I'd be pretty surprised if the platform made any difference.

How’s the depth on the rail kit? We now have a small fleet of the 1U2LW-X570/2L2T units, and the fact the rail kit is a few inches too short for full-depth racks continues to irritate the hell out of me.