ASRock Rack 1U4LW-ICX/2T Internal Hardware Overview

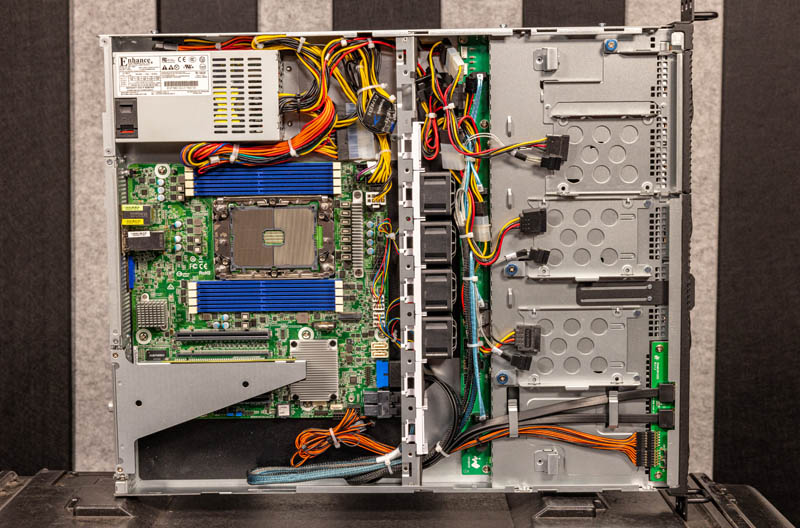

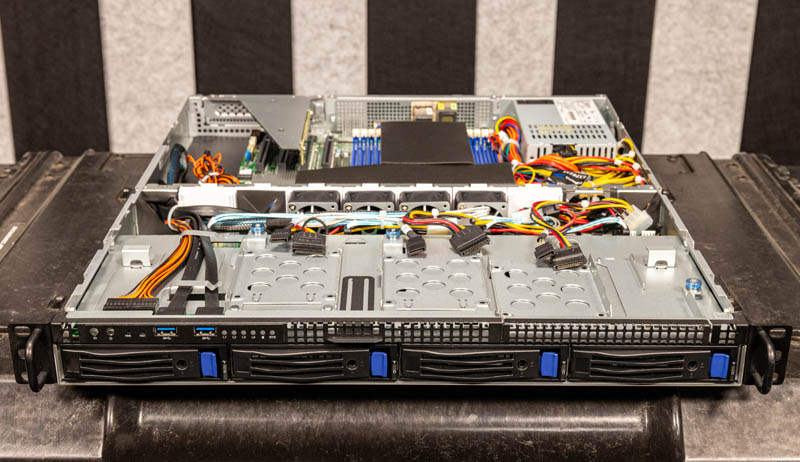

Taking a look at the inside of the system, we are going to start with the front and work our way back. Here is the system overview for reference.

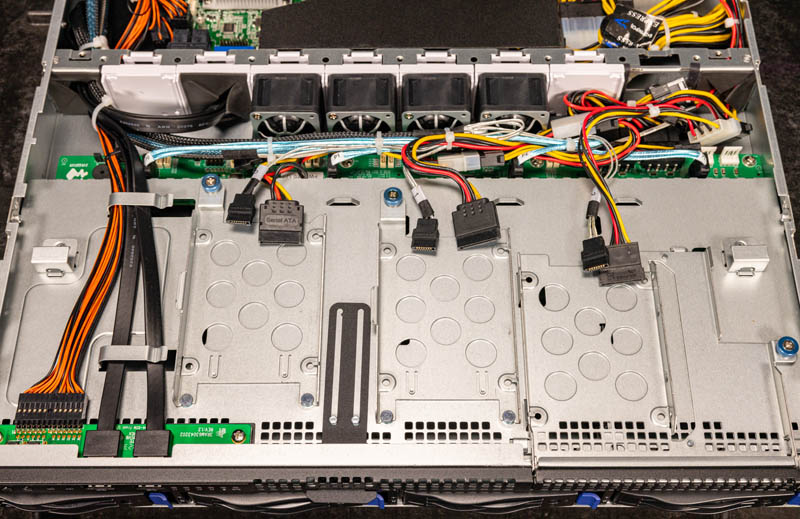

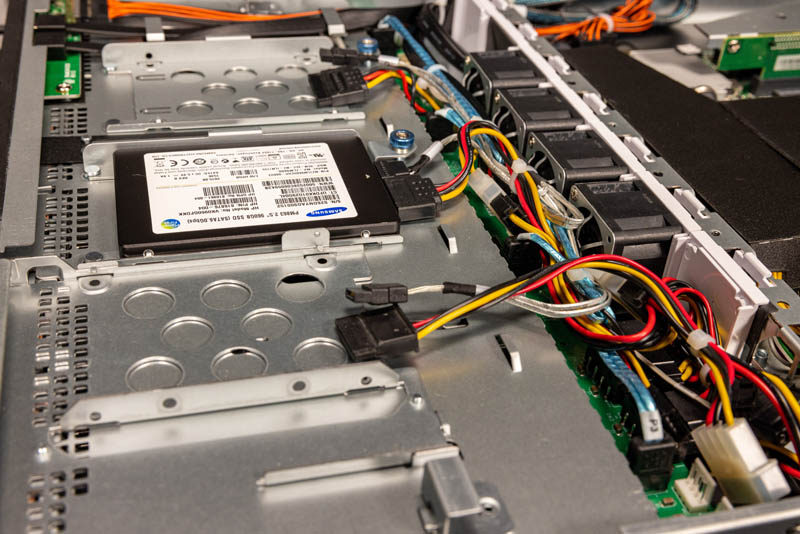

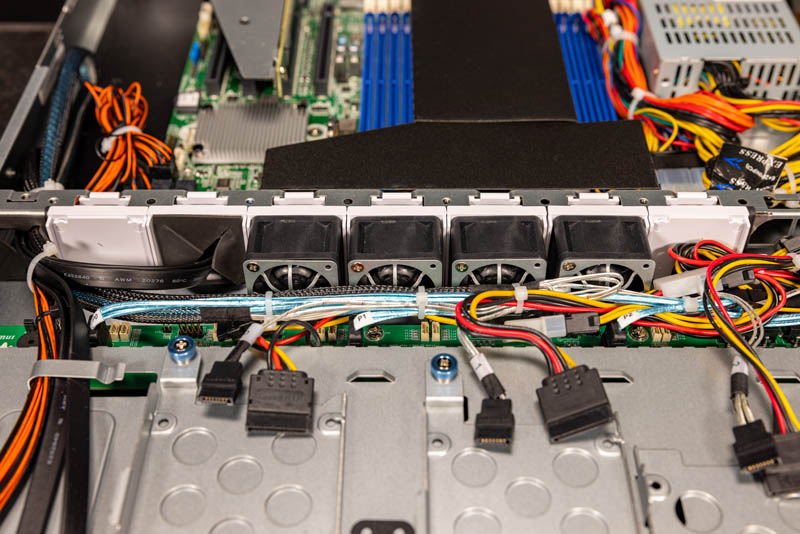

We mentioned the 3.5″ bays, but just behind them are the 2.5″ mounting points.

The chassis has three mounting points for 2.5″ drives. One of them is on the assembly that can be swapped out for an optical drive. These are not front-accessible and are cabled rather than connected through a backplane, but they also have some nice features.

One of these features is that each of the three mounts uses a thumbscrew, so one can quickly remove it from the chassis for easier service. In addition, the sleds have mounting prongs instead of screws on one side of the drive, so one can use one or two screws to install an SSD into these spaces. Of course, one may want hot-swap drive bays, but this is a nice compromise between expense and functionality, especially for SSDs with their generally lower failure rates.

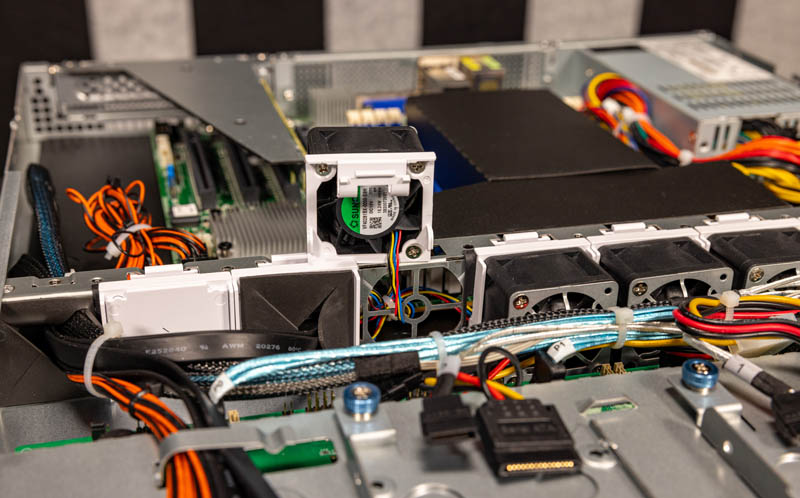

Behind the front storage is the fan partition.

There are four fans, but perhaps the interesting point here is that they have a clip-in retention mechanism to hold them in place. Building hot-swap fans into a 1U chassis is very expensive. As a result, most 1U single-socket servers do not use hot-swap fans. Here we still have a cabled 4-pin connection, but it is very easy to swap and move the fans because of this retention mechanism.

If one has a higher-power PCIe expansion card, there is a blank that can be replaced with a fan to deliver airflow to the PCIe riser.

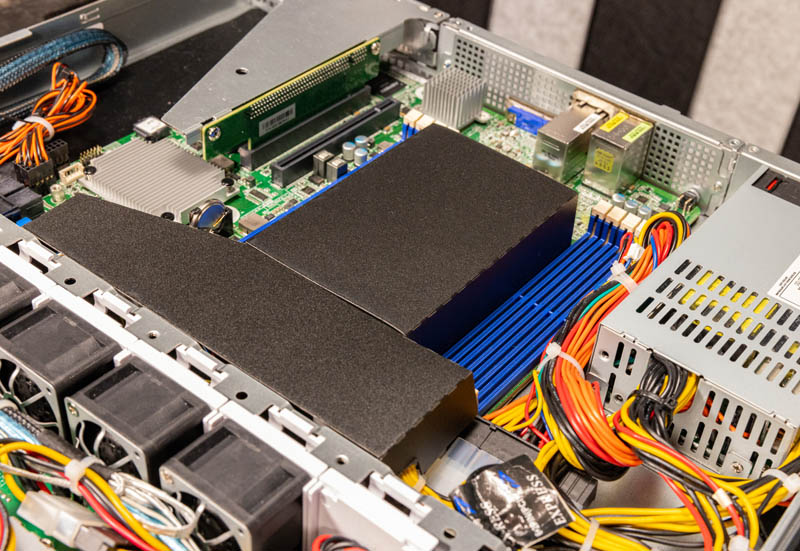

Across the CPU we have a flexible airflow guide. Most STH readers will know I generally do not prefer this type of flexible guide. It does take a little bit of time to get seated properly, but on the spectrum of such guides, this is actually one of the easier installations.

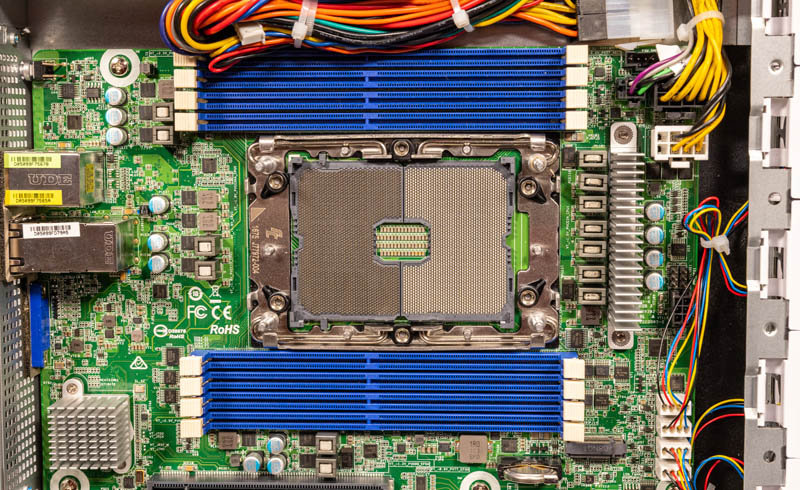

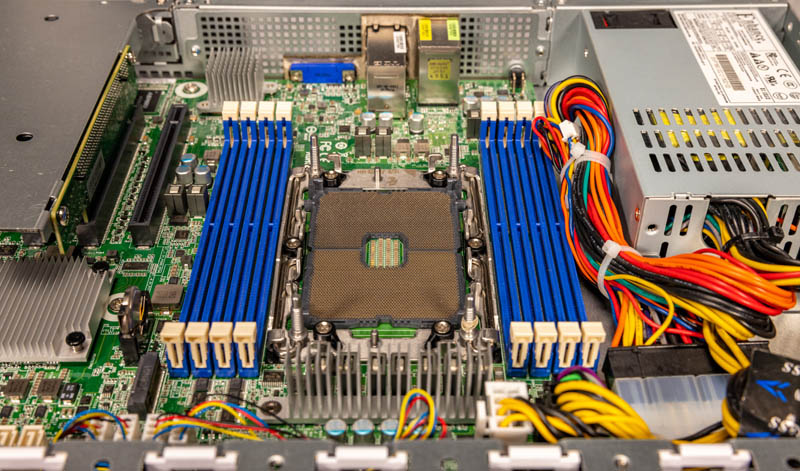

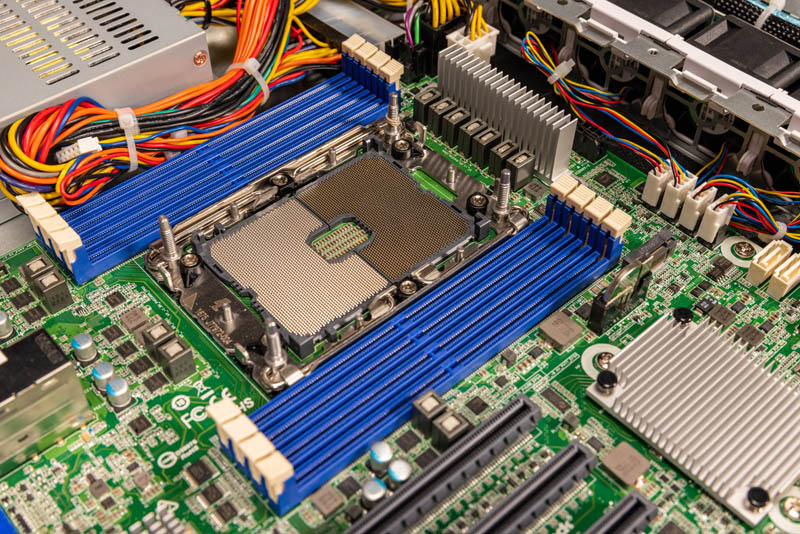

Underneath the airflow guide, we have a Socket P+ (LGA4189) socket. This is for the Ice Lake CPUs in the 3rd Generation Intel Xeon Scalable family, not Cooper Lake.

A few of the big features that we get with the new generation include 8-channel DDR4-3200 support, up from 6 channels in the previous generation and 2 in the Xeon E segment. We also get PCIe Gen4 in this generation. On CPU, we have accelerators such as QAT crypto as well as VNNI for AI inference. We recently looked at the impact of these accelerators in AWS EC2 m6 Instances: Why Acceleration Matters.
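To put the channel counts in perspective, here is a rough sketch of theoretical peak memory bandwidth, computed as channels × transfer rate × 8 bytes per transfer. It assumes DDR4-3200 for every row purely to isolate the effect of channel count; older and lower-end platforms typically run slower memory, so their real figures would be lower still. The function name is just for illustration.

```python
# Theoretical peak DRAM bandwidth = channels * MT/s * bytes per transfer.
# Assumption: every configuration runs DDR4-3200, which isolates the effect
# of channel count and flatters the 6- and 2-channel rows.
BYTES_PER_TRANSFER = 8  # a DDR4 channel has a 64-bit (8-byte) data bus


def peak_bandwidth_gbs(channels: int, mega_transfers_per_s: int) -> float:
    """Return theoretical peak bandwidth in decimal GB/s."""
    return channels * mega_transfers_per_s * BYTES_PER_TRANSFER / 1000


if __name__ == "__main__":
    for label, channels in [("8-channel", 8), ("6-channel", 6), ("2-channel", 2)]:
        print(f"{label}: {peak_bandwidth_gbs(channels, 3200):.1f} GB/s")
```

These are ceilings, not measured results; sustained bandwidth in practice will be lower.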

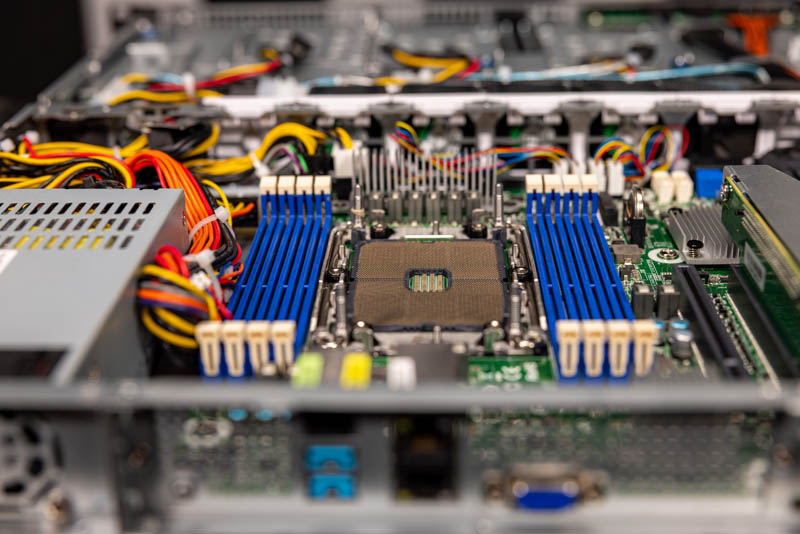

Here is a three-quarter view looking from the PCIe slot side to the fans and PSU.

Here is a view of the airflow looking back towards the fans.

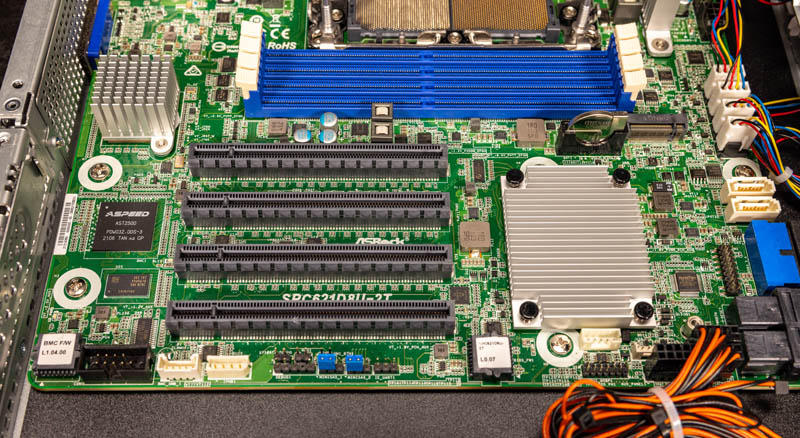

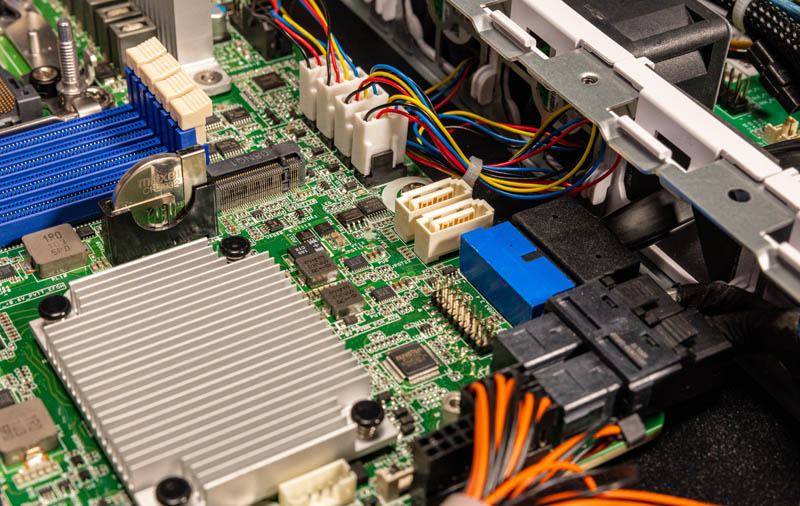

The ASRock Rack SPC621D8U-2T is a mATX motherboard. It actually exposes 64 PCIe lanes from the processor directly to four x16 PCIe slots. One of these is used for the riser, so the others are likely to go unused in this chassis.
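As a quick sanity check on the lane math, the sketch below adds up the four x16 slots and estimates the per-slot PCIe Gen4 bandwidth. It assumes the standard Gen4 figures of 16 GT/s per lane with 128b/130b encoding and ignores packet and protocol overhead, so the numbers are upper bounds rather than anything measured on this board.

```python
# PCIe Gen4 lane budget for four x16 slots (per-direction, raw link rate).
GEN4_GT_PER_S = 16.0             # raw transfer rate per lane
ENCODING_EFFICIENCY = 128 / 130  # 128b/130b line encoding


def slot_bandwidth_gbs(lanes: int) -> float:
    """Approximate one-direction bandwidth in GB/s, before protocol overhead."""
    return lanes * GEN4_GT_PER_S * ENCODING_EFFICIENCY / 8  # bits -> bytes


slot_widths = [16, 16, 16, 16]  # the four x16 slots described above
total_lanes = sum(slot_widths)

if __name__ == "__main__":
    print(f"Total CPU lanes used by slots: {total_lanes}")
    print(f"Per x16 slot: ~{slot_bandwidth_gbs(16):.1f} GB/s each way")
```

With only the riser slot usable in this 1U chassis, three quarters of that lane budget sits idle.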

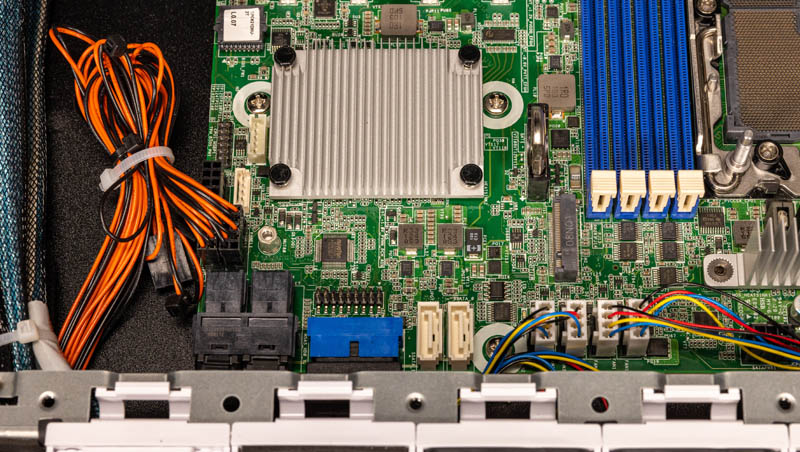

The large heatsink is for the Intel C621A PCH.

Next to that PCH we have an M.2 slot that can be either PCIe or SATA. We then have two SATA ports and then the front I/O connectivity for the drives, USB ports, and such. For those wondering, the orange and white wire bundle is for all of the LEDs and switches on the front of the chassis.

Overall, there is actually quite a bit of functionality fit into this small chassis.

Next, let us get into the block diagram, management, and performance.

With all those bits hanging off the PCH it’d be great to see some tests of internal bandwidth to identify if there are bottlenecks.

Say you had an A10 GPU in the slot and it was slurping data from elsewhere via 10Gb Ethernet, and some other process is beating the heck out of an NVMe SSD in the M.2 slot, and a third process is having a strong exchange of views with a collection of SATA SSDs configured as an mdraid RAID 0.

Situations like that. Can that CPU to PCH link keep up, or is the system actually not capable of heavy multitasking work?
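A back-of-the-envelope way to frame that question is to compare the peak demand of everything behind the PCH with the capacity of the CPU-to-PCH link. The sketch below is hypothetical throughout: it assumes a DMI 3.0 x4 uplink (PCIe Gen3 signaling), assumes the M.2 slot is Gen3 x4, assumes four SATA SSDs, and assumes which devices sit behind the PCH. None of that topology is confirmed here, so check the board's block diagram before trusting the result.

```python
# Rough PCH uplink oversubscription estimate. All topology is assumed.
GEN3_LANE_GBS = 8.0 * (128 / 130) / 8  # PCIe Gen3 lane: 8 GT/s, 128b/130b
SATA3_PORT_GBS = 6.0 * (8 / 10) / 8    # SATA 6 Gb/s with 8b/10b encoding

uplink_gbs = 4 * GEN3_LANE_GBS         # assumption: DMI 3.0 x4 uplink

# (name, peak demand in GB/s, assumed to sit behind the PCH?)
devices = [
    ("M.2 NVMe SSD (assumed Gen3 x4)", 4 * GEN3_LANE_GBS, True),
    ("4x SATA SSD RAID 0 (assumed count)", 4 * SATA3_PORT_GBS, True),
    ("10Gb Ethernet port", 10.0 / 8, True),  # placement is a guess
]

pch_demand_gbs = sum(demand for _, demand, behind_pch in devices if behind_pch)
oversubscription = pch_demand_gbs / uplink_gbs

if __name__ == "__main__":
    print(f"Uplink capacity: {uplink_gbs:.2f} GB/s")
    print(f"Peak PCH-side demand: {pch_demand_gbs:.2f} GB/s")
    print(f"Oversubscription: {oversubscription:.2f}x")
```

Under these assumptions the combined peak demand exceeds the uplink, but these are interface maximums. Only a real concurrent test would show whether the workloads actually contend.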

Maybe I need to know more about the tests, eh? Compiling the kernel, that’s pretty straightforward.

What is the nature of the MariaDB test? How many queries in flight, how many spindles, how many rows scanned? How complex are the queries? MariaDB seems like the only one that might be capable of a somewhat whole system test, though probably not so much the Ethernet IO.

C-Ray, ssl, 7zip are almost pure CPU tests. I’d be pretty surprised if the platform made any difference.

How’s the depth on the rail kit? We now have a small fleet of the 1U2LW-X570/2L2T units, and the fact the rail kit is a few inches too short for full-depth racks continues to irritate the hell out of me.