ASRock Rack 1U4G-ROME Management

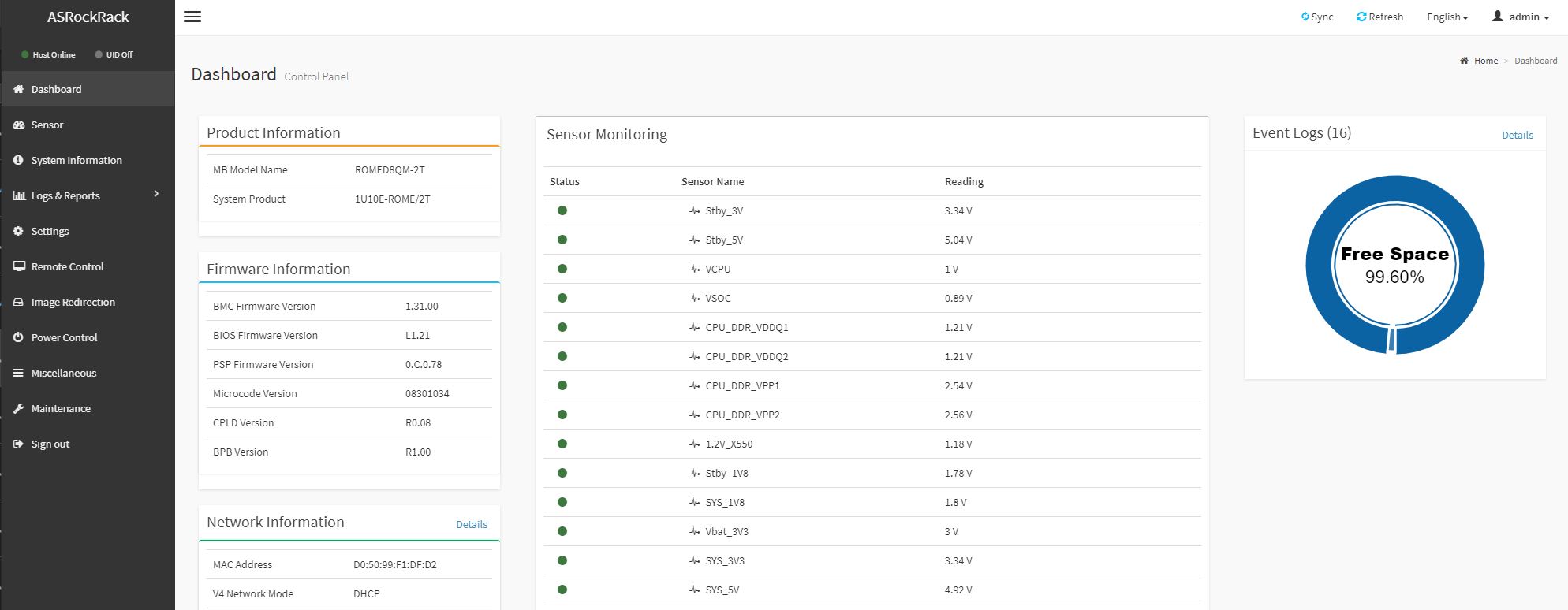

In our hardware overview, we showed the out-of-band management port. This allows OOB management features such as IPMI but also allows one to get to a management page. ASRock Rack seems to be using a lightly skinned MegaRAC SP-X interface. Since we have covered this a number of times, and it is standard on ASRock Rack servers, here is the quick overview.

This interface is a more modern HTML5 UI that performs more like today’s web pages and less like pages from a decade ago. We like this change. Here is the dashboard.

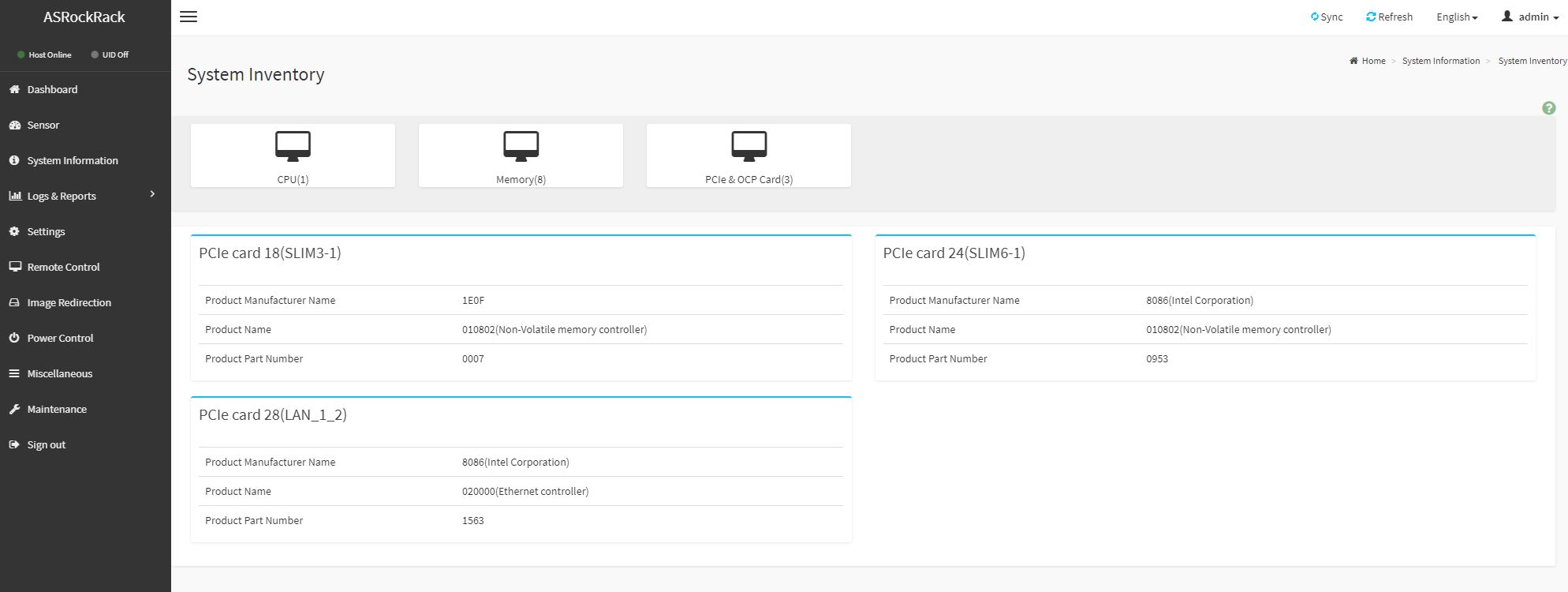

Going through the options, the ASRock Rack solution seems as though it is following the SP-X package very closely. As a result, we see more of the standard set of features and options.

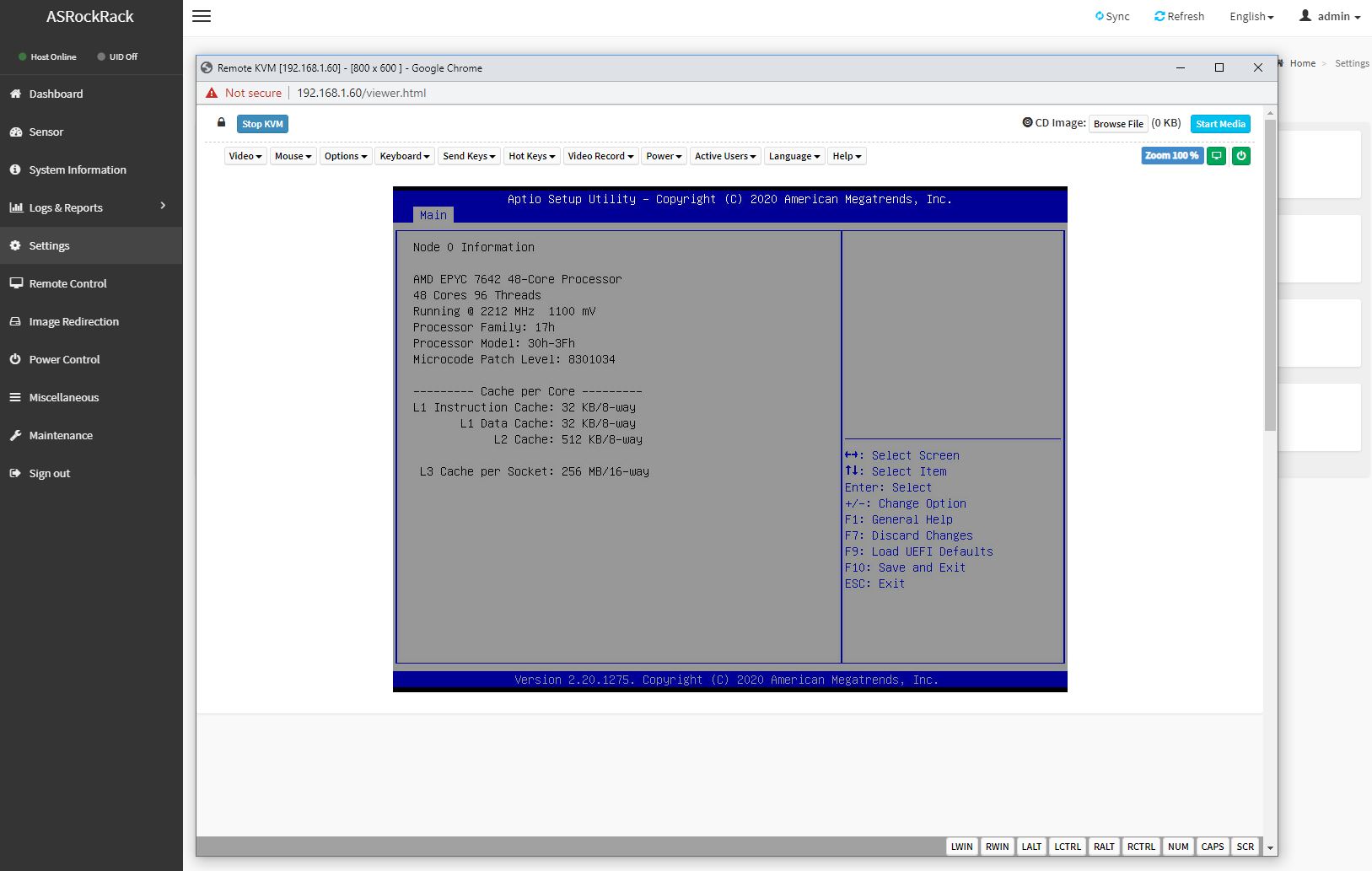

One nice feature is that we get a modern HTML5 iKVM solution. Some other vendors have implemented iKVM HTML5 clients but did not implement virtual media support in them at the outset. ASRock Rack has this functionality as well as power on/off directly from the window.

Many large system vendors such as HPE, Dell EMC, and Lenovo charge for iKVM functionality. This feature is an essential tool for remote system administration these days. ASRock Rack’s inclusion of the functionality as a standard feature is great for customers who have one less license to worry about.

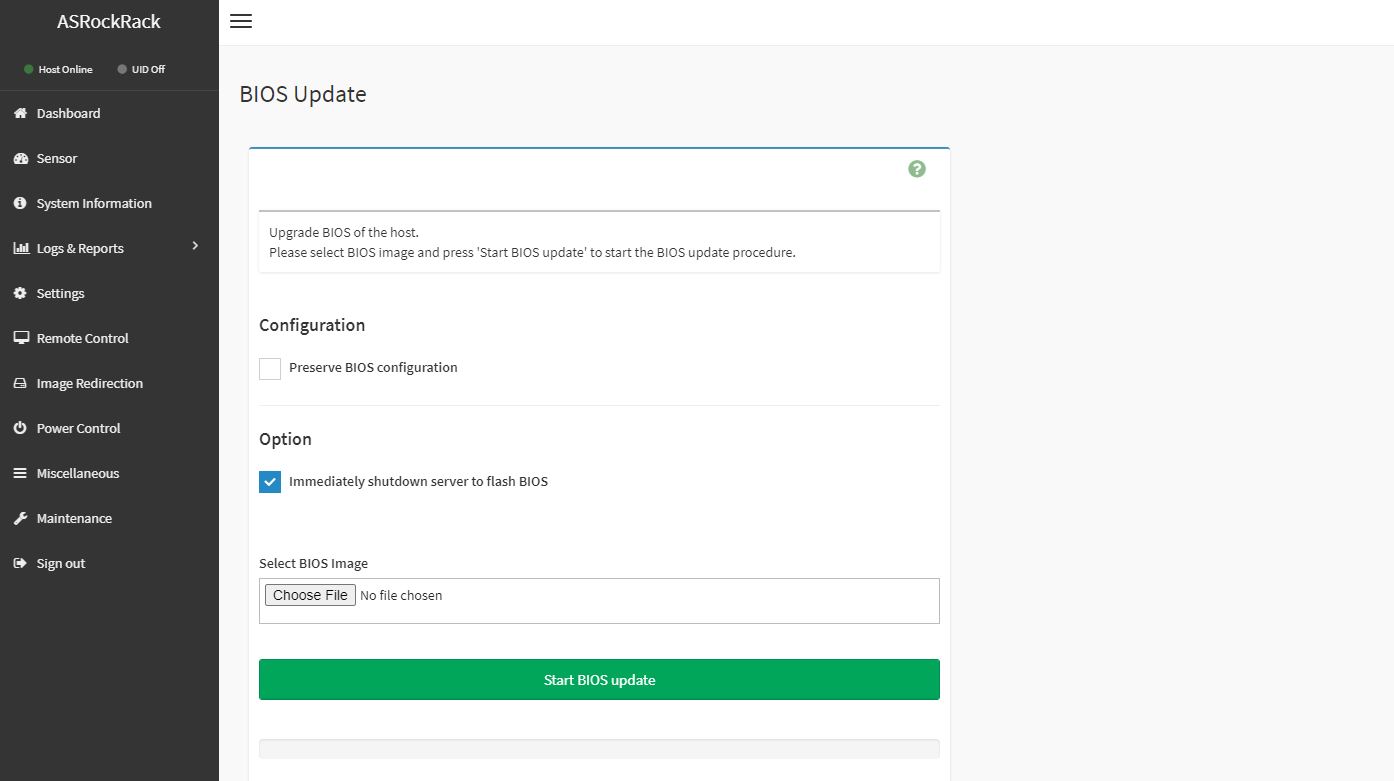

Beyond the iKVM functionality, there are also remote firmware updates enabled on the platform. You can update the BIOS and BMC firmware directly from the web interface. This is something that Supermicro charges extra for.

Something that we did want to mention here is that ASRock Rack is not vendor locking CPUs as we find in servers from vendors such as Lenovo and Dell EMC. We covered how AMD PSB Vendor Locks EPYC CPUs for Enhanced Security at a Cost. ASRock Rack has a more eco-friendly design that does not vendor-lock AMD EPYC CPUs to ASRock Rack-only servers. You can learn more about what is happening, and why it is important to know whether your server vendor is doing this, either in that article or in the video below.

This is important for the industry so we wanted to point it out to our readers.

Next, we are going to move on to the performance of the server.

ASRock Rack 1U4G-ROME Performance

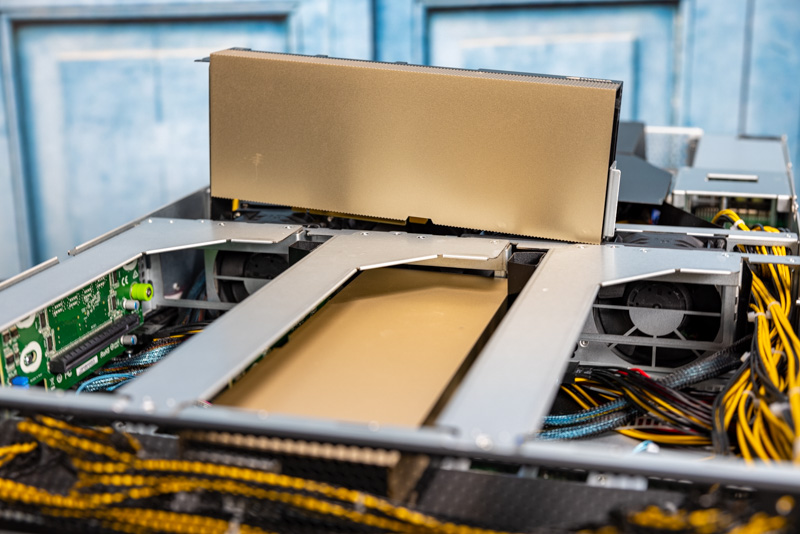

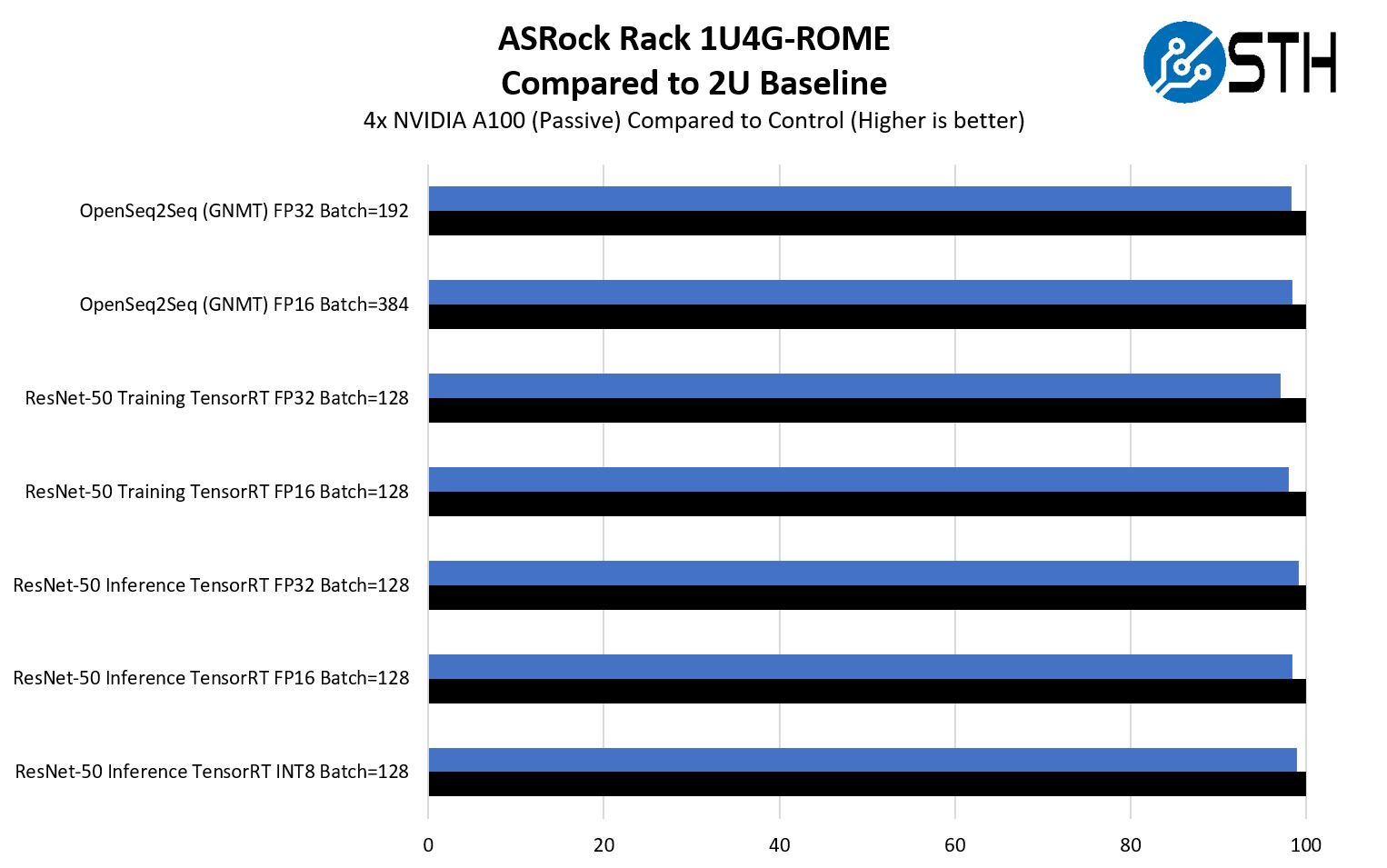

Something that we had a fairly unique opportunity to do here was to test the 1U versus 2U 4x GPU solution. Just recently we tested the ASRock Rack 2U4G-ROME/2T and we were able to test the system using the same components, including the same NVIDIA A100 GPUs. The density implications of moving from 2U to 1U with the same four GPUs was easy

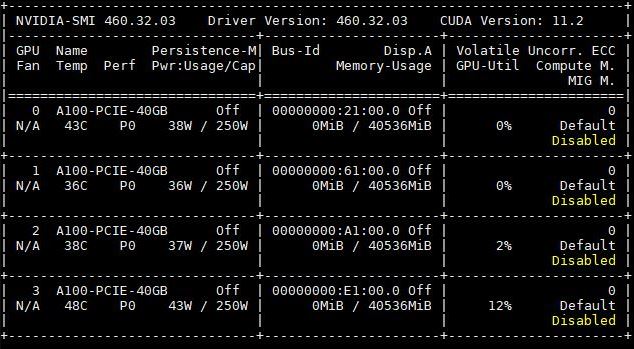

We wanted to validate that the cooling was again able to cool all of the accelerators since that is a major feature for the server. Here we are using four passively cooled NVIDIA A100 40GB PCIe cards that we put into one of our 8x GPU boxes. We also used them in our ASUS RS720A-E11-RS24U dual-socket system review and in the upcoming Dell EMC PowerEdge R750xa review, so these cards have seen some mileage.

In terms of cooling, we saw that this system was able to keep the GPUs cool in our testing but there was a bit of a delta that was noticeable.

Overall, we see that we had performance we would characterize as slightly lower than the 2U 4x GPU ASRock Rack server. This is likely a cooling delta and instinctively makes sense. It was also a bit more pronounced in our training workloads and we did see the FP32 ResNet-50 training test go a bit outside what we may forgive as just a test variation. Of course, the other side of this is that losing 1-2.5% of GPU performance to double the density in a data center is a trade-off that will make sense for many looking at these 1U servers.
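To make that trade-off concrete, here is a minimal Python sketch of the arithmetic. It assumes the only two inputs are the 1-2.5% GPU performance loss and the 2x density gain from moving from 2U to 1U mentioned above. The function name and parameters are our own illustration, not part of any test suite, and the result ignores power, cooling, and rack-level limits.

```python
def relative_throughput_per_rack_unit(perf_loss_fraction, density_multiplier=2.0):
    """Return GPU throughput per rack unit relative to the 2U baseline.

    perf_loss_fraction: fraction of per-GPU performance lost in the denser
        chassis (0.01 means 1%). Illustrative parameter, not a measured value.
    density_multiplier: how many more GPUs fit per rack unit (2.0 for 2U -> 1U
        with the same four GPUs).
    """
    if not 0.0 <= perf_loss_fraction < 1.0:
        raise ValueError("perf_loss_fraction must be in [0, 1)")
    return (1.0 - perf_loss_fraction) * density_multiplier


# The two ends of the 1-2.5% range quoted in the text.
for loss in (0.01, 0.025):
    gain = relative_throughput_per_rack_unit(loss)
    print(f"{loss:.1%} loss -> {gain:.2f}x GPU throughput per rack unit")
```

Under those assumptions, even the high end of the loss range still leaves roughly 1.95x the GPU throughput per rack unit.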

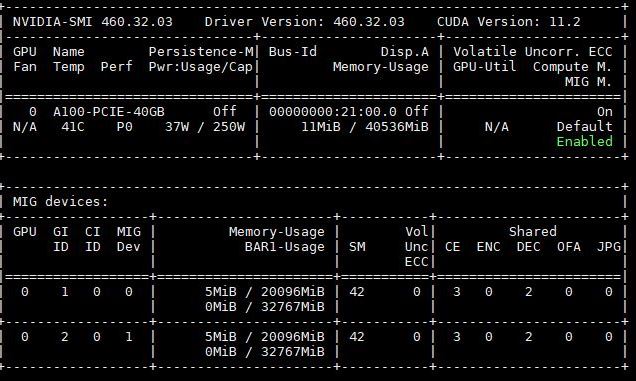

One other feature we wanted to mention is that the NVIDIA A100s we have here have MIG, or multi-instance GPU, support. This allows one to partition a GPU into multiple smaller slices. Here is an example partitioning a single 40GB A100 into two 20GB slices. This is actually a big deal for inferencing workloads. Those inferencing workloads also tended to be much closer in terms of performance than the training workloads.

This is important since it practically means that one can get up to seven GPU instances per card. With four A100s, that means this server could have up to 28 A100 slices. For inferencing workloads, a single A100 is often too much. Using MIG functionality means one can often get the benefit of having many smaller GPUs, like NVIDIA T4s, without having to install so many physical cards.
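The slice math is simple enough to sketch in a few lines of Python. This is only an illustration of the counting described above, assuming the seven-instance-per-card maximum and the four-GPU configuration from the text. The helper name is hypothetical and it does not talk to any NVIDIA tooling.

```python
MAX_MIG_INSTANCES_PER_A100 = 7  # upper bound per card, as stated in the text


def total_mig_slices(num_gpus, instances_per_gpu=MAX_MIG_INSTANCES_PER_A100):
    """Count the GPU instances a server exposes if every card is partitioned
    into the same number of MIG instances. Hypothetical helper for illustration."""
    if num_gpus < 0:
        raise ValueError("num_gpus must be non-negative")
    if not 1 <= instances_per_gpu <= MAX_MIG_INSTANCES_PER_A100:
        raise ValueError("an A100 supports between 1 and 7 MIG instances")
    return num_gpus * instances_per_gpu


# Four A100s at the maximum of seven instances each.
print(total_mig_slices(4))      # 28

# Four A100s using the two-slice (2 x 20GB) example layout instead.
print(total_mig_slices(4, 2))   # 8
```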

One item we could not test was NVLink in this system. The four separate PCIe GPU risers meant that we could not utilize NVLink bridges on the A100s as we did in this photo to get NVLink support in this system. The same would hold true for the AMD Radeon Instinct MI100 bridges. With the GPUs spread throughout the system, the short top-of-GPU PCB point-to-point links do not fit.

Overall though, we saw good CPU and GPU performance in this platform.

Next, we are going to move to our power consumption, server spider, and final words.

The EDSFF storage and connectors are a thing of real beauty. It would be wonderful if this tech supplants M.2 in the desktop world also.

@Patrick, can you tell us the part number and manufacturer of the fans used to force air through this system?