ASRock Rack 1U4G-ROME Internal Overview

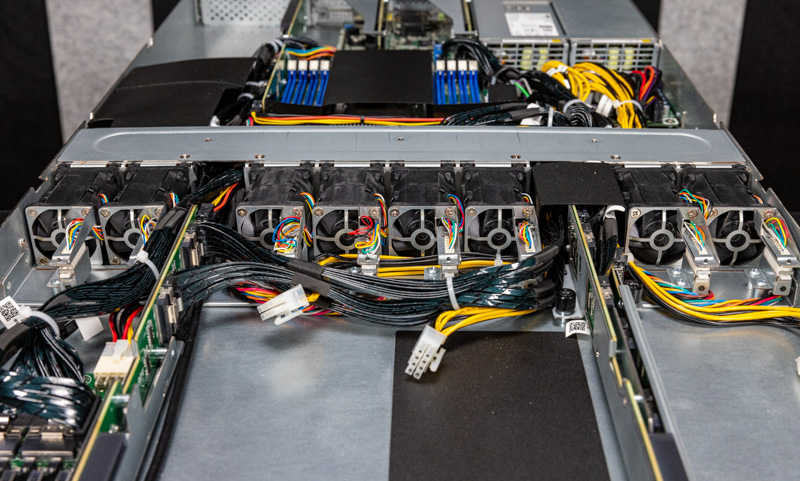

The front of the server has three of the four GPUs. Some vendors put all four GPUs up front, but this is a fairly common configuration for a 1U GPU server.

We already looked at the EDSFF section in detail, but the rest of the front of the server is dedicated to three GPUs. We will discuss them in the order they appear when looking at the front of the chassis.

The left GPU has a PCIe Gen4 x16 connector that is close to the SSD cage.

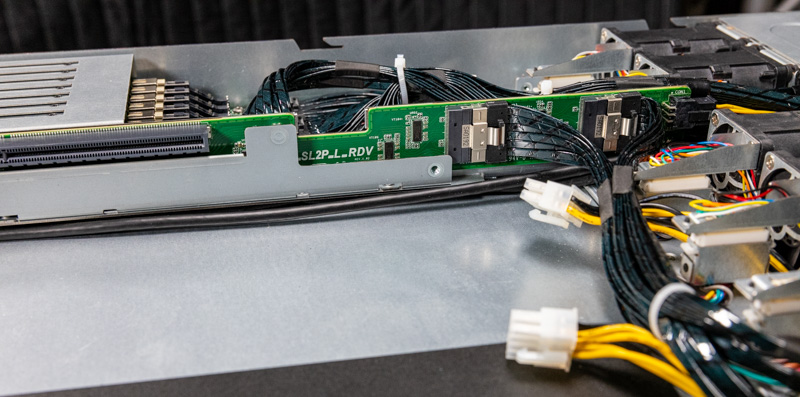

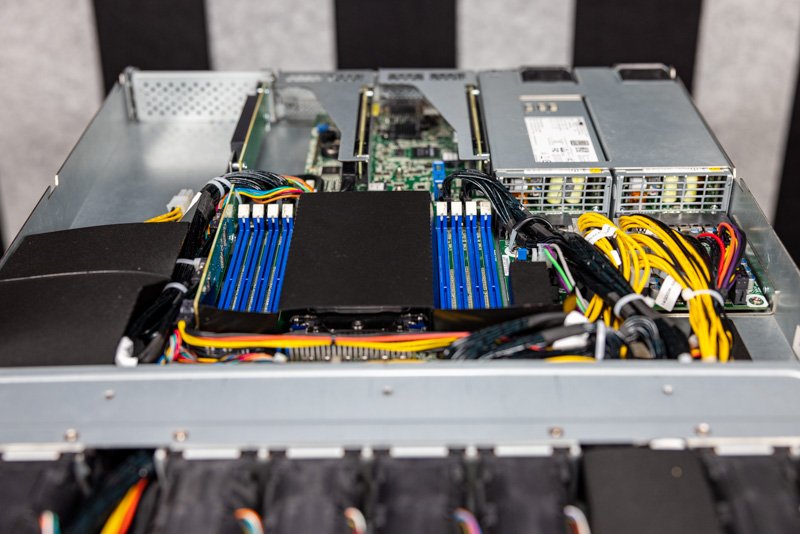

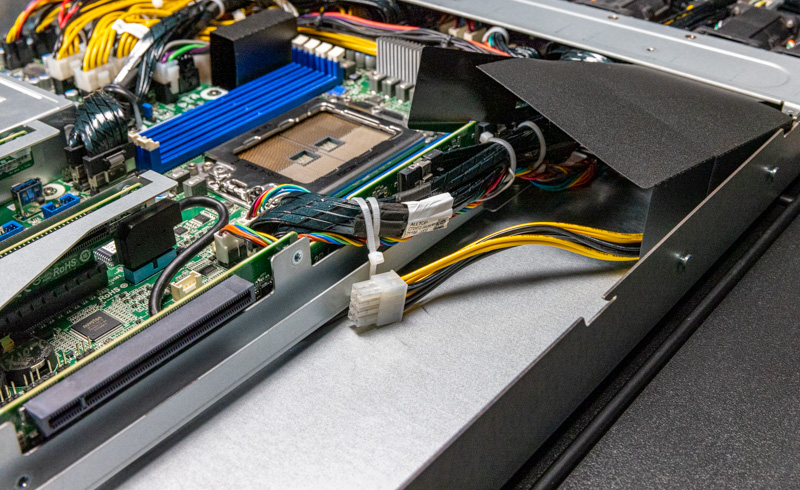

The PCIe slots in this server sit on risers. The risers each have two PCIe x8 cables along with a power connector. There are additional data center GPU power connectors near the fan partition for each GPU.
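The riser arrangement lends itself to a quick PCIe lane budget. The sketch below is a back-of-the-envelope calculation, assuming each riser's two x8 cables combine into a single x16 link and that every GPU link terminates on the CPU. Neither detail is confirmed in this review. The 128-lane figure is the single-socket PCIe Gen4 lane count for EPYC 7002/7003, and the function and constant names are hypothetical.

```python
# Hypothetical lane budget for the four GPU risers.
# Assumptions (not confirmed by the review): each riser's two x8 cables
# form one x16 link, and all four GPU links terminate on the CPU.

GPU_COUNT = 4
CABLES_PER_RISER = 2
LANES_PER_CABLE = 8
EPYC_SP3_LANES = 128  # single-socket EPYC 7002/7003 PCIe Gen4 lane count

# PCIe Gen4 signals at 16 GT/s per lane with 128b/130b encoding.
GEN4_TRANSFERS_PER_SEC = 16e9
ENCODING_EFFICIENCY = 128 / 130


def lanes_per_gpu() -> int:
    """Two x8 cables per riser yield an x16 link per GPU."""
    return CABLES_PER_RISER * LANES_PER_CABLE


def gpu_lanes_total() -> int:
    """Total lanes consumed by all GPU risers."""
    return GPU_COUNT * lanes_per_gpu()


def link_bandwidth_gbytes(lanes: int) -> float:
    """Per-direction bandwidth in GB/s after encoding, ignoring packet overhead."""
    return lanes * GEN4_TRANSFERS_PER_SEC * ENCODING_EFFICIENCY / 8 / 1e9


if __name__ == "__main__":
    per_gpu = lanes_per_gpu()
    total = gpu_lanes_total()
    print(f"Lanes per GPU: x{per_gpu}")
    print(f"GPU lanes in use: {total} of {EPYC_SP3_LANES}")
    print(f"Lanes remaining for other devices: {EPYC_SP3_LANES - total}")
    print(f"Per-GPU bandwidth: ~{link_bandwidth_gbytes(per_gpu):.1f} GB/s each direction")
```

Under those assumptions, the four GPUs would use half of the CPU's 128 lanes, with each x16 link providing roughly 31.5 GB/s in each direction.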

The right riser holds a single GPU in this area. Rather than a hard partition, the layout is driven by the need for each riser to occupy its own vertical column. Placing a single GPU on this side allows more efficient airflow through the center of the chassis, where that air then cools the CPU and expansion cards.

The middle GPU riser is a bit different. Here, the PCIe connector sits at the bottom of the chassis, so the middle GPU is installed in the opposite orientation. That is also why the black material is below this section: the back of the PCIe card faces the bottom of the chassis.

Here is a shot of the right and middle risers from the right side of the chassis. One can see that they are built so the right riser sits above the assembly for the middle riser. This design also made the right riser a bit more difficult for us to install.

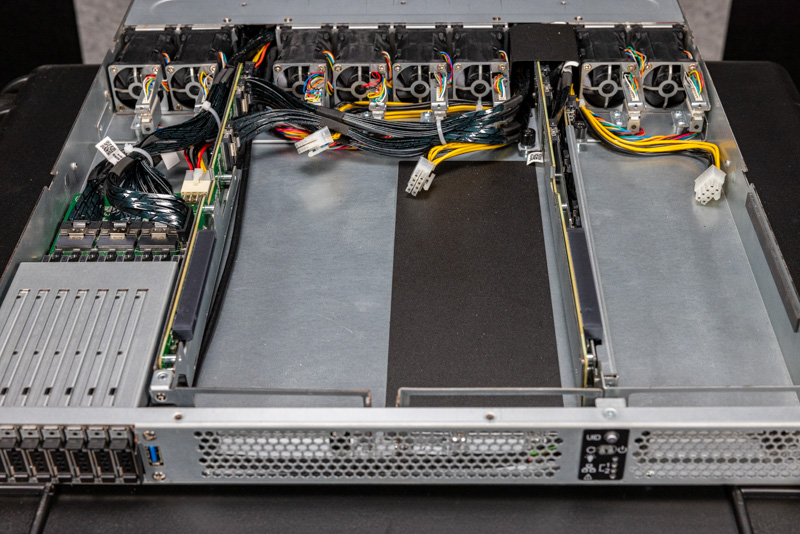

The middle of the chassis has an array of eight counter-rotating fan modules. There are two on either side and four in the middle. One can also see a lot of cables for the GPUs and SSDs. This is a very tight chassis.

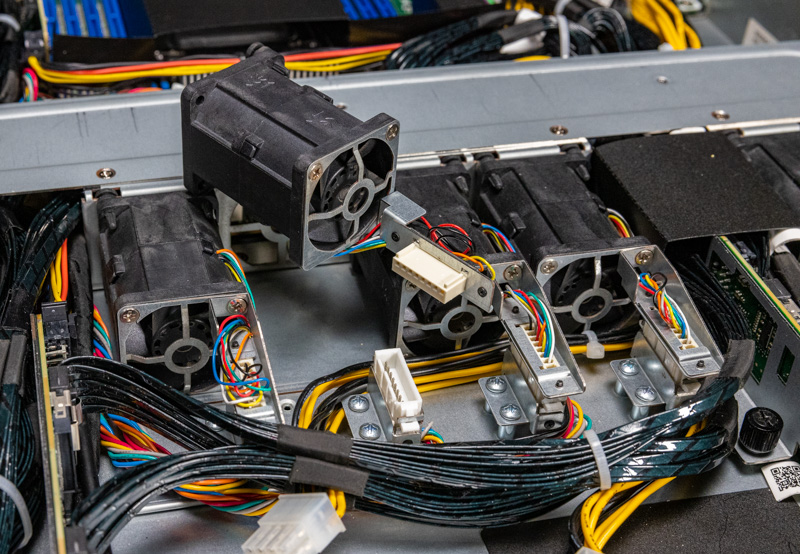

One interesting choice ASRock Rack made was to mount the fans on small hot-swap carriers. One will still likely power off the server to service a fan; however, these carriers act as easy disconnects and are much better than bare 4-pin fan connectors whose cables have to be traced through the chassis. This was an unexpected feature, but a welcome one.

In the middle, there is also a structural brace running behind the fans. This brace both adds structural rigidity and helps duct airflow from the fans to the rear of the system.

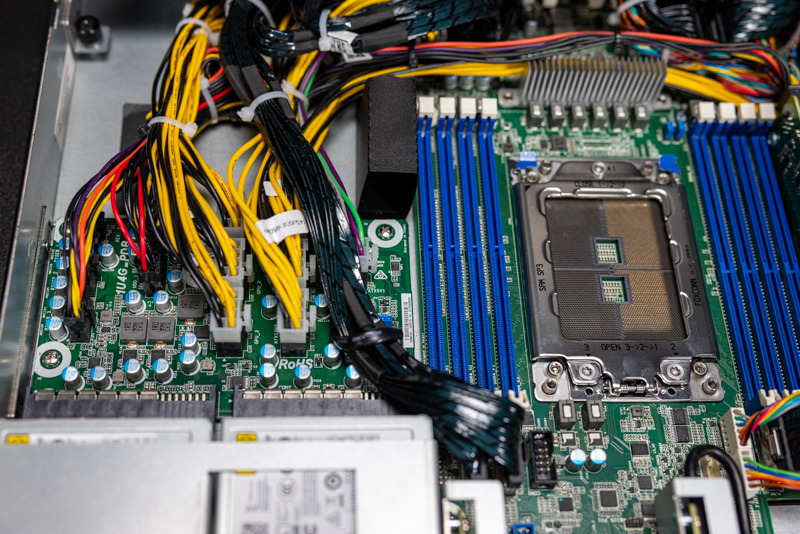

We already showed the power distribution board, but here is a look at it next to the main motherboard.

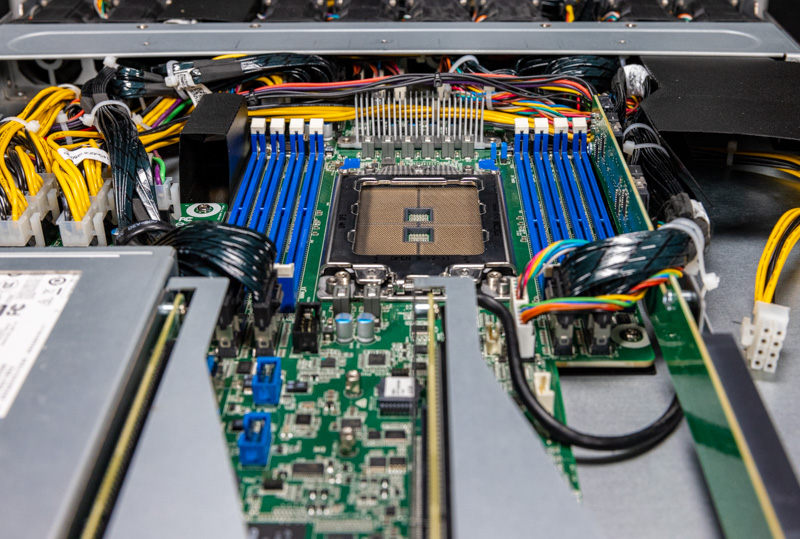

Here is a view showing how the fan partition has to push air through a lot of cabling to reach the motherboard area.

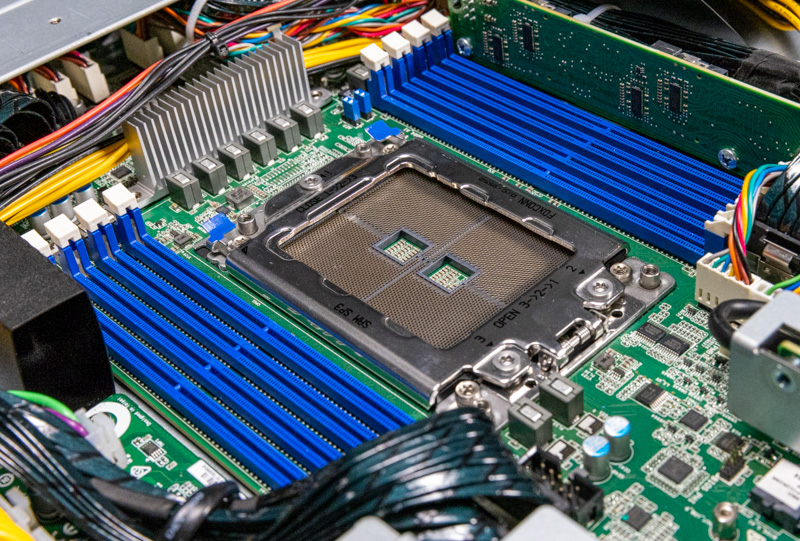

The CPU socket is an SP3 socket. Although the system is named 1U4G-ROME, it supports both AMD EPYC 7002 “Rome” and AMD EPYC 7003 “Milan” CPUs.

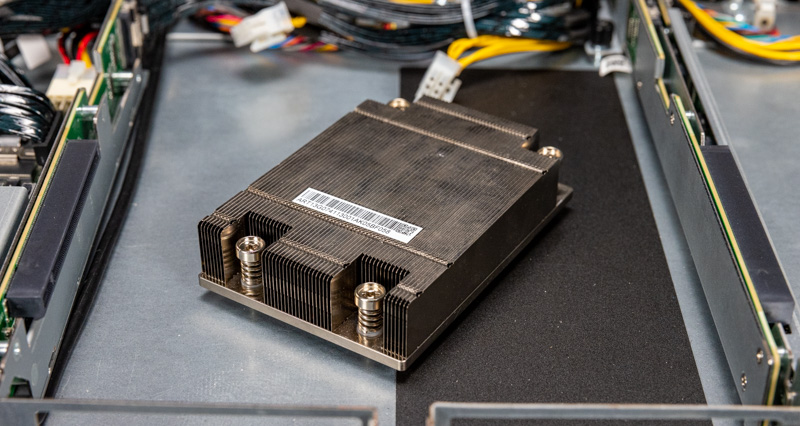

One quick note is that ASRock Rack is limited to cooling the single CPU with air that has already cooled the left and middle GPUs. In a 1U chassis, there is limited space for a heatsink. As a result, ASRock Rack has ambient data center temperature guidelines for 200-280W TDP parts. Eventually, this type of system will need to be liquid-cooled.

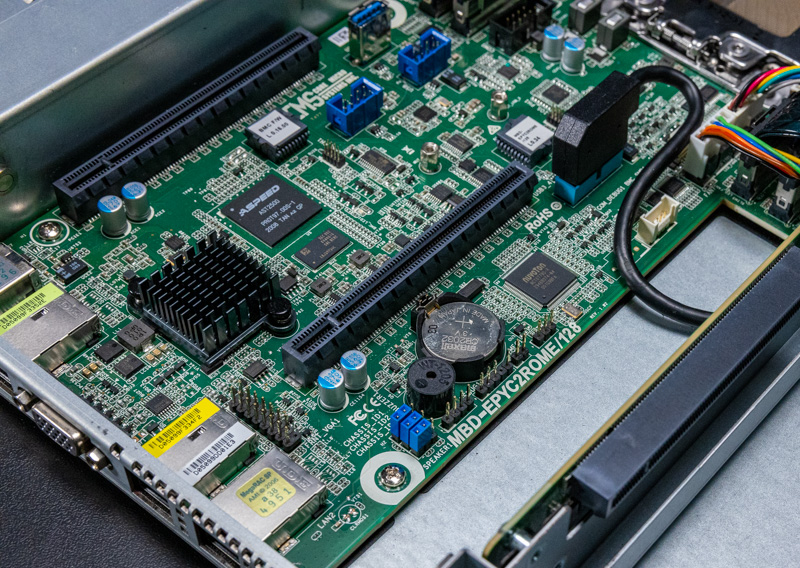

The motherboard is the MBD-EPYC2ROME/128. It is a relatively lean board, although we still get the Intel i350-AM2 NIC (under the heatsink), an ASPEED AST2500 BMC, and an internal Type-A USB 3 port.

There are also two slots. These are for the PCIe risers that we showed in our external overview.

The other major rear internal feature is the fourth GPU slot. Again, the riser configuration is similar, but this time it is oriented toward the rear.

This GPU has a cabled PCIe riser as well as its own power cable.

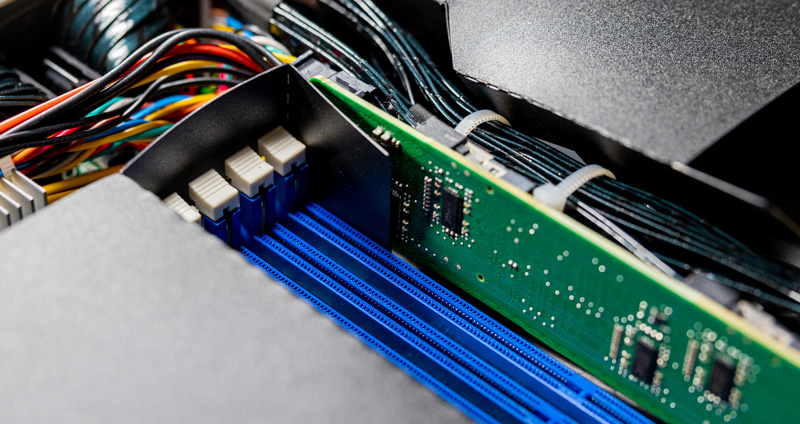

Perhaps my least favorite part of this server, as one can tell from the photos, is the airflow guides. These were noticeably difficult to seat. The guides use thinner material to allow for a larger airway, but that also makes them a challenge to seat properly.

It was not just the GPU airflow guide, but also the one near the CPU, especially where it ran between the rear GPU riser and the DIMM slots. Once in place, these guides work well, but they were far from the easiest installation step.

Next, we will move on to management before getting to our testing and final words.
