ASRock Rack 1U24E1S-GENOA/2L2T Internal Hardware Overview

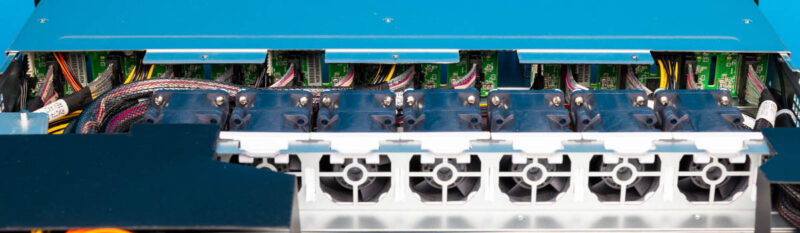

Starting again at the front of the server, we are going to work from the storage backplane’s rear to the rear of the chassis. Here is a quick shot from the front to help with orientation.

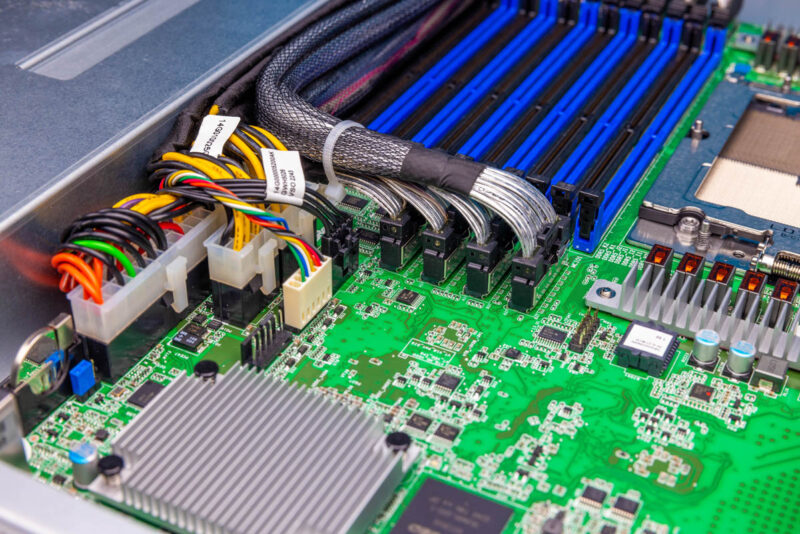

The backplane uses MCIO connectors and has power. We can also see a big gap in the backplane where the front air vent is on the server.

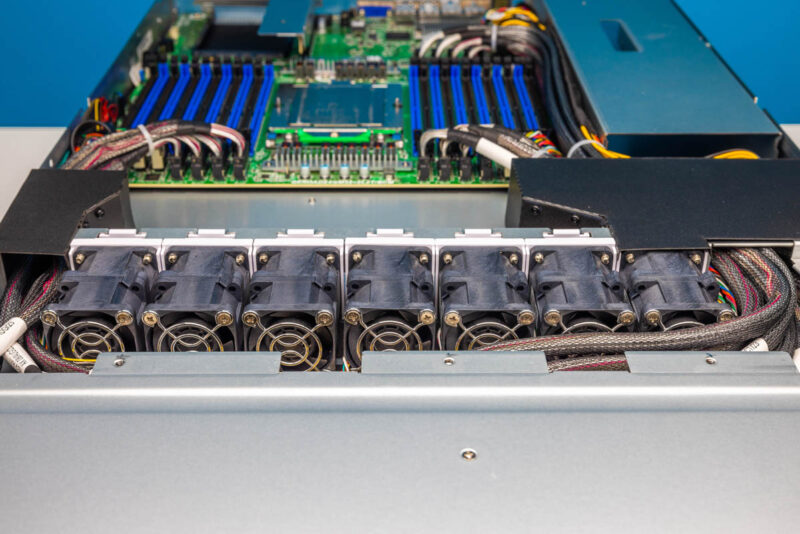

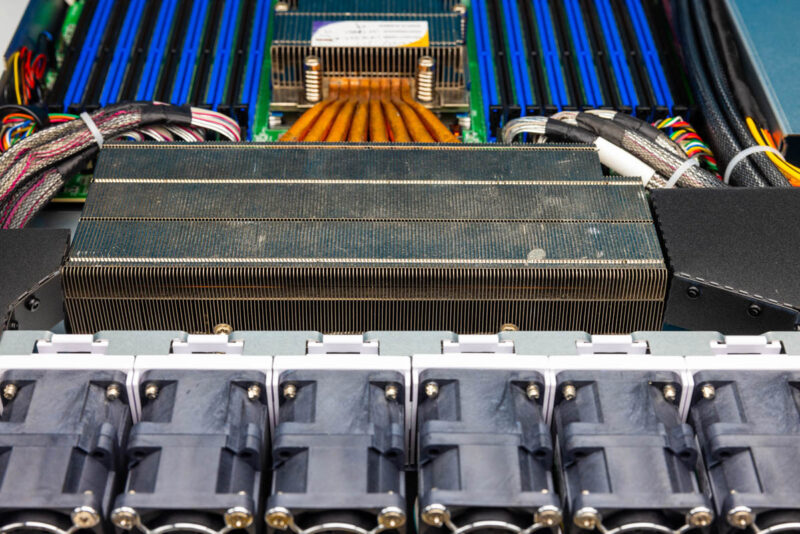

Behind that backplane, we get seven dual fan modules.

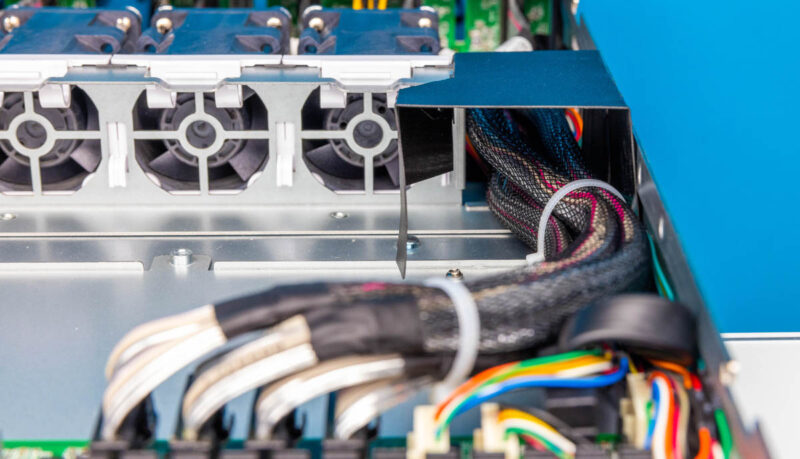

ASRock Rack has the fans in carriers, but they are not hot-swappable. Hot-swap fans are common on 2U servers, but much less so on 1U given vertical space constraints. Behind the fan partition, there is a giant void with airflow guides channeling cables around on either side.

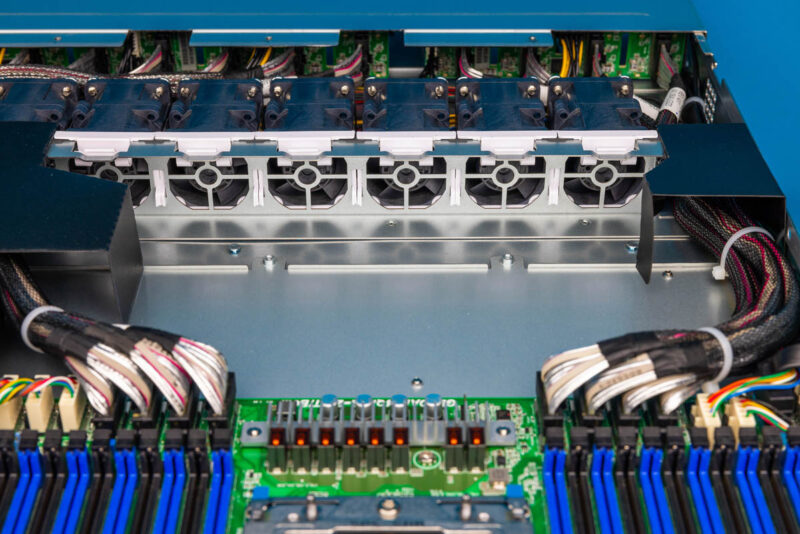

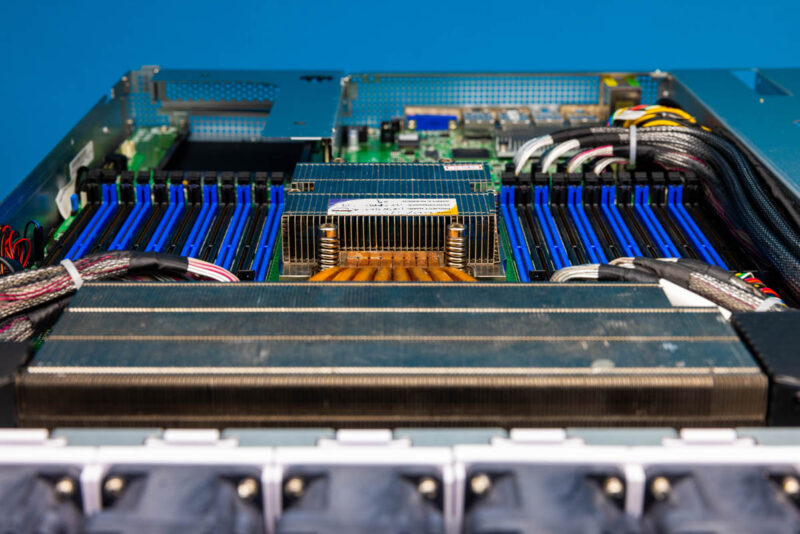

The channels may look a bit strange, but they look like this because ASRock Rack is doing something really neat with them.

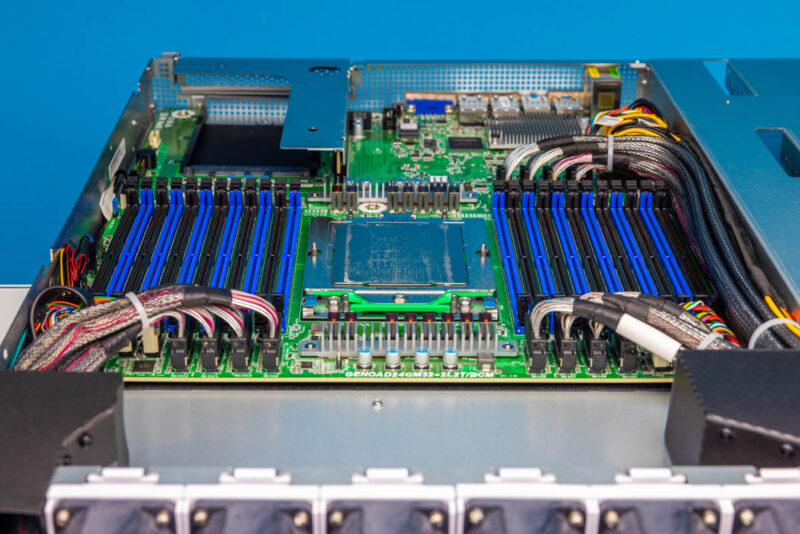

Modern CPUs require so much cooling that it is common to see big heatsinks that extend well beyond the socket in 1U servers. In this case, that void between the fans and the motherboard is designed to fit the main fin area of the CPU cooler.

What is unique about this solution is that instead of placing an airflow guide over the heatsink, ASRock Rack is using the cable channel airflow guides to direct air over the heatsink.

The main CPU socket part of the cooler still has a heatsink, but the fins are much less densely packed, so it seems to be there primarily as a place to house the heat pipes. This is a very different design.

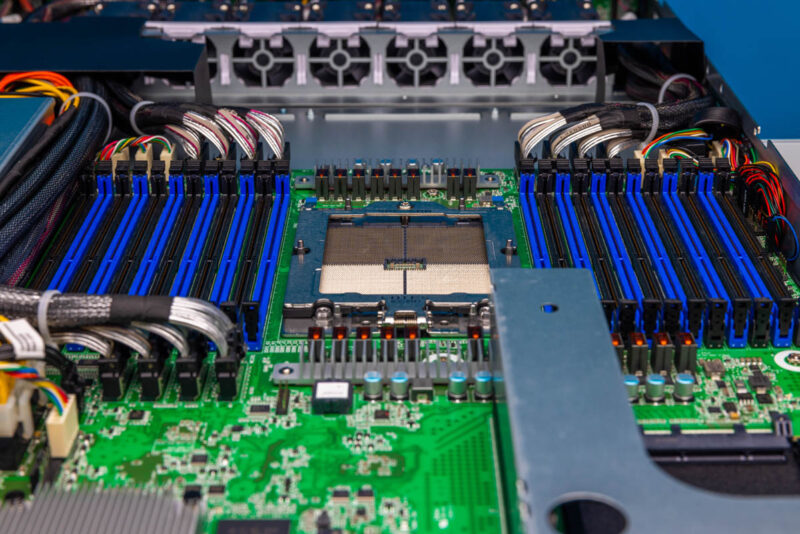

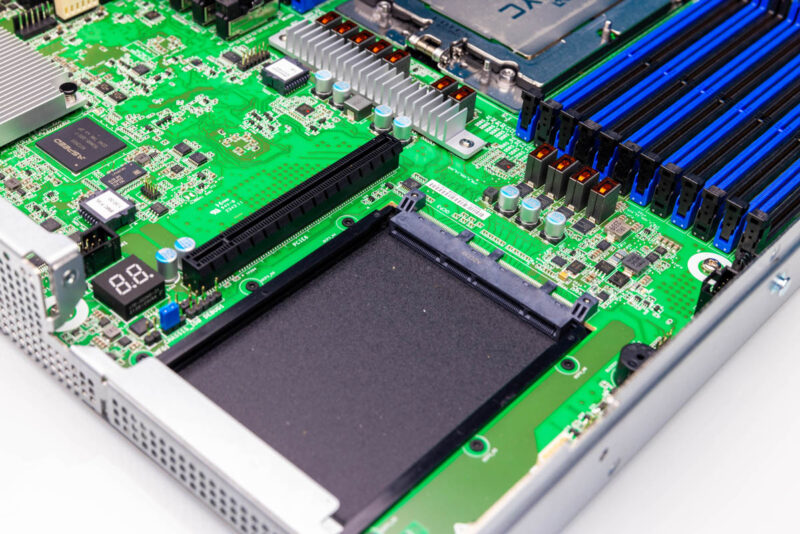

The cables that go around the cooler go to MCIO x8 connectors that are on the front of the motherboard and just behind the DIMM slots. Keeping the MCIO connectors close to the CPU means that this system is able to span the distance to the front E1.S connectors without needing power-hungry PCIe retimers.

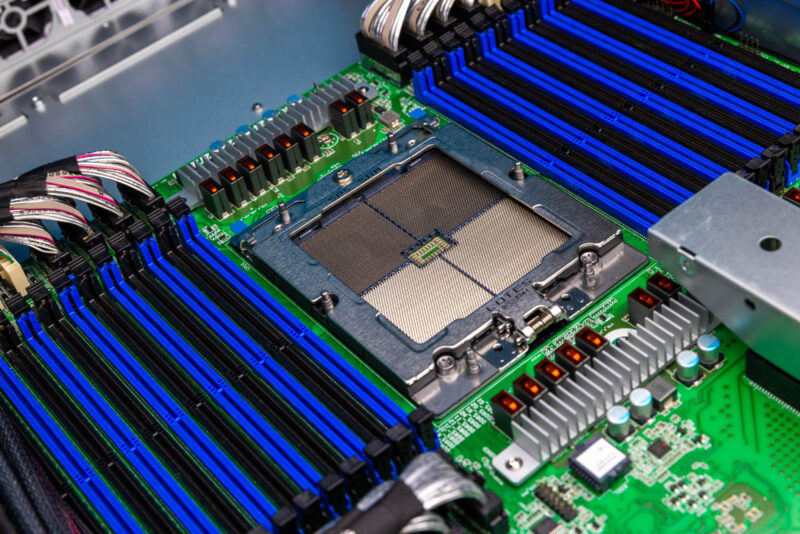

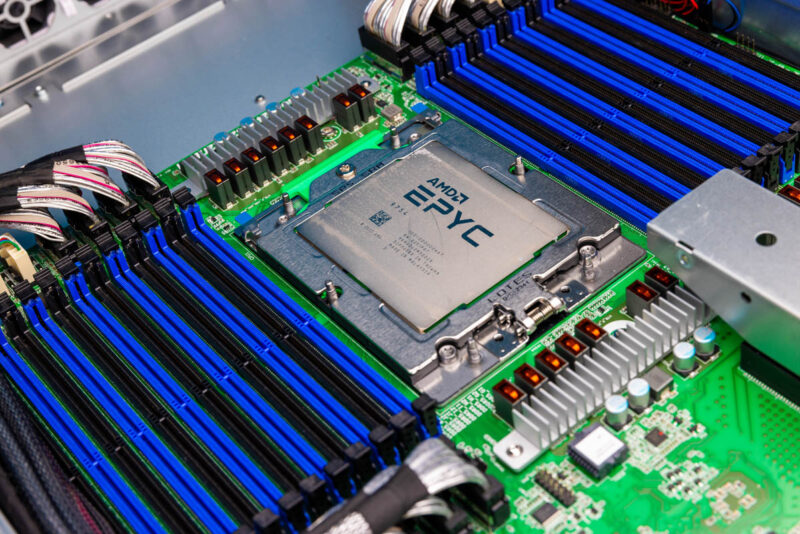

The CPU socket is for the AMD EPYC 9004 Genoa, Bergamo, and Genoa-X.

We have been using the 128-core/256-thread AMD EPYC 9754 in this server.

Something worth noting is that this is a 2 DIMMs Per Channel (2DPC) design. The CPU has 12 memory channels, so there are 24 slots on this motherboard. Often, we see AMD EPYC socket SP5 platforms with only 12 DDR5 RDIMM slots because that is already a lot of memory to fill, and going to 24 slots takes up a lot more space. In this platform, you can use lower-cost 64GB DDR5 RDIMMs and hit 1.5TB of memory capacity without having to step up to more costly 128GB DIMMs, which carry a premium on $/GB.
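For those who want to check the capacity math, here is a minimal Python sketch. It uses only the figures above (12 memory channels, 2DPC, 64GB and 128GB RDIMMs). The function name `total_capacity_gb` is our own illustrative helper, and we are not modeling pricing or memory speeds.

```python
# Figures taken from the text: 12 memory channels, 2 DIMMs per channel (2DPC).
MEMORY_CHANNELS = 12
DIMMS_PER_CHANNEL = 2


def total_capacity_gb(dimm_size_gb: int,
                      channels: int = MEMORY_CHANNELS,
                      dpc: int = DIMMS_PER_CHANNEL) -> int:
    """Total memory in GB when every slot holds a DIMM of the given size."""
    return channels * dpc * dimm_size_gb


# 24 slots filled with 64GB RDIMMs on this 2DPC board.
two_dpc_64gb = total_capacity_gb(64)

# Hypothetical 12-slot (1DPC) board filled with 128GB RDIMMs for comparison.
one_dpc_128gb = total_capacity_gb(128, dpc=1)

print(f"2DPC, 64GB DIMMs:  {two_dpc_64gb} GB ({two_dpc_64gb / 1024} TB)")
print(f"1DPC, 128GB DIMMs: {one_dpc_128gb} GB ({one_dpc_128gb / 1024} TB)")
```

Both configurations land at the same 1.5TB, which is why the 24-slot layout lets you reach that capacity with the smaller DIMMs.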

Power to the motherboard is standard ATX power. It is nice that the motherboard uses very standard connections since that helps serviceability down the line.

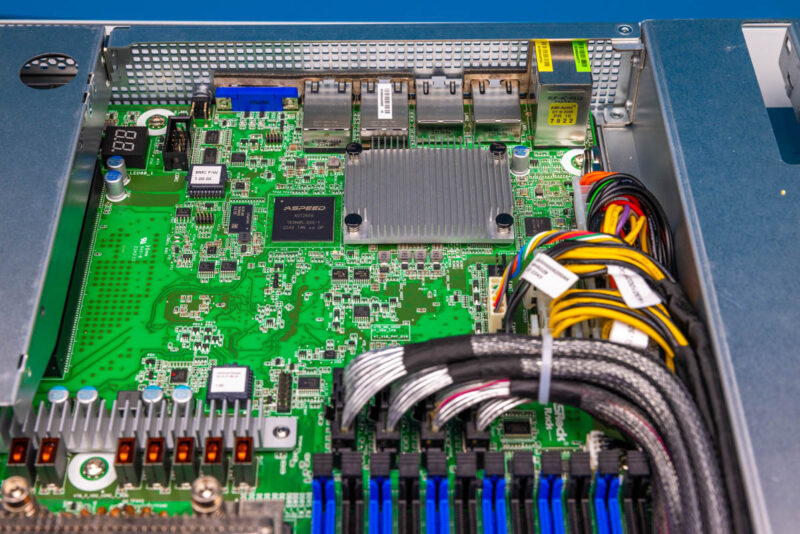

The ASPEED AST2600 BMC is a fairly standard BMC in this generation. Next to it, we can see a larger heatsink. If you are coming from pre-Intel Xeon 6 servers, you might think this is a PCH. Instead, it is a Broadcom BCM57416 10GBase-T controller.

Aside from the 10GbE and 1GbE onboard, the PCIe Gen5 x16 riser and OCP NIC 3.0 slot provide avenues for fast networking.

The server also has a POST code display to help with troubleshooting.

Next, let us see how this is all wired up in the block diagram.